|

Markovnikov posted:Did the Clock have something written on its face? It looked like it, like some words in Latin script, but the video/texture was a little fuzzy. Good eye, I can't see much, looks like it might just be dates. Have a look and see if anything jumps out. The singularity chat is great so far, I was thankfully mostly aware of the core ideas, and I'll read some more when I have the time. All these attempts to predict something that we've defined as beyond our capabilities of prediction or understanding... well if you don't try you won't know how much you'll be proven wrong! It does make most sense to make assumptions based on extreme situations that are describable without being able to say how they would happen or how they would manifest. I'd never have thought of trying to prepare myself in any way on that basis, but I'm glad someone has, crazy or otherwise.

|

|

|

|

|

| # ? Apr 26, 2024 04:45 |

|

CannibalK9 posted:I'm glad someone has, crazy or otherwise. Oh, he's a total fruitbat. Interesting mind, though.

|

|

|

|

Kurieg posted:Yeah, Yudkowsky's ideology is basically that an AI that can simulate you perfectly can set up a situation such that it will be able to predict your future actions with 100% certainty. Which in itself is a sound theory. But he then extrapolates that in reverse such that an AI in the future can simulate your past actions enough times to actually change the probability of your present actions in such a way to alter future events. Even going so far as to state that a human-friendly AI might simulate or inflict torture upon any arbitrary number of individuals if such actions would allow it to exist earlier thus maximizing the amount of time it has to make people happy. Did I misread something, or did you say he thinks an AI can alter people's presents to change things that already happened?

|

|

|

|

Basically, imagine that someone told you that in the future a highly advanced AI would generate one hundred trillion billion simulations of you, all exactly like you in every way, actions, mannerisms, and speech. And such an AI would present all of them with the exact same dilemma that this person is giving you now. Give me [image] or I will torture you for infinity. Now you're reasonably certain that you're not a simulation, but there's a 99.99999999999999999999999999999999999999999999999999999999999% chance that you are. So you as a purely self-interested rational actor give him the [image] to avoid the possibility of torture. He extrapolates this to a whole bunch of things but the idea is that by skewing probability to a ludicrous extent you can influence present actions. He is completely incapable of understanding the fact of there being a 0% probability of something occurring (because he uses odds, not probabilities. You can't have a 1/0 chance of something happening) so the logic of "If they're all exactly like me then they all would say 'no' because the energy expended on creating that many simulations would be completely wasted if you can correctly predict me saying no anyway." is wasted on him. Eventually if he makes enough people one of them will say yes and the rest will be tortured in robohell. Kurieg fucked around with this message at 01:30 on Feb 1, 2015 |
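To put a number on the "you are probably a simulation" step above, here is a minimal sketch. It assumes that "one hundred trillion billion" means 10^23 on the short scale, and that you are equally likely to be any one of the N copies or the single original; both assumptions are mine, not the post's. The function names are made up for illustration.

```python
from fractions import Fraction


def p_simulated(n_simulations: int) -> Fraction:
    """Chance of being a copy when n copies and 1 original are equally likely."""
    return Fraction(n_simulations, n_simulations + 1)


def leading_nines(p: Fraction, digits: int = 80) -> int:
    """Count the 9s straight after the decimal point of p (for 0 <= p < 1)."""
    expansion = str(p.numerator * 10**digits // p.denominator).zfill(digits)
    return len(expansion) - len(expansion.lstrip("9"))


# Assumption: "one hundred trillion billion" = 100 * 10**12 * 10**9 = 10**23
n = 100 * 10**12 * 10**9
p = p_simulated(n)

print("chance of being the original:", 1 - p)
print("nines after the decimal point:", leading_nines(p))
```

Under those assumptions the chance of being the original is exactly 1 in 10^23 + 1. The arithmetic only tells you how lopsided the odds are once you accept the premise; it says nothing about whether anyone could actually run that many simulations.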

|

|

|

Bruceski posted:Did I misread something, or did you say he thinks an AI can alter people's presents to change things that already happened? It's called ~Timeless Decision Theory~

|

|

|

|

Anticheese posted:It's called ~Timeless Decision Theory~ Just read up on this and it is weird.

|

|

|

|

There exist major problems, even conceptually, with the concept of the AI singularity, defined as "That time when an AI is intelligent enough to design a new AI more intelligent than itself." The biggest, and most fundamental, is the idea that "intelligence" is like a statistic on a D&D Character Sheet that can be objectively improved in only one direction, and with no upper limit. This is quite nearly patent nonsense, even if it is a very attractive idea in a narrative sense. At best, this cornerstone of the entire concept is simply arbitrarily stated to be true, like faith in a god, without any supporting evidence or proof whatsoever. Going lower down on the chain of problems is that just because an AI might design another AI doesn't mean that it can be actually implemented, or if it can, that it can be implemented within a reasonable timeframe-- much less a timeframe so small that it cannot be kept up with as is implied. Yudkowsky's singularity, in particular, assumes that such an AI could simulate not just the entire universe from beginning to end, but an unlimited (nigh-infinite) number of instances of such a thing. There is no evidence to suggest that this is even remotely physically possible, even theoretically. These two issues ALONE cause the entire concept to fall apart. I much prefer other definitions of "the singularity", some of which include the possibility that the singularity already happened. In general though, I don't think that the concept is really a useful one to discuss. At one level, it simply states "technology will advance so rapidly that you cannot predict what will happen, particularly in terms of the sociological and philosophical ramifications thereof." Or, in more simple terms, "you can't predict the future". Okay... so what? How is that significantly different from any other time in history from the perspective of those living it at the time?
Alternatively, and contradictorily, the singularity can be defined as a predictably exponential increase in technology, and thus you CAN predict the future. Moore's Law for instance (which has been a self-fulfilling prophecy as long as it has existed, as chip manufacturers make time schedules according to it). This is slightly more useful as a concept, but gets rid of most of the techno-cultist ideas when you actually make meaningful predictions with it, and some of those predictions even turn out WRONG, or at least limited. Moore's Law can't last forever, for instance.

|

|

|

|

Iunnrais posted:Going lower down on the chain of problems is that just because an AI might design another AI doesn't mean that it can be actually implemented, or if it can, that it can be implemented within a reasonable timeframe-- much less a timeframe so small that it cannot be kept up with as is implied. Yudkowsky's singularity, in particular, assumes that such an AI could simulate not just the entire universe from beginning to end, but an unlimited (nigh-infinite) number of instances of such a thing. There is no evidence to suggest that this is even remotely physically possible, even theoretically. You can't make a perfect simulation of the universe inside the universe itself, it is not physically implementable. A perfect simulation would need to track every object in the universe; however, this system would need to use something to track that information, atoms, electrons, rocks, something. Thus you would need at least as many things as are contained in the universe in order to simulate it (in this case you would have made another copy of the universe), more if you wanted to be able to perfectly simulate it and do other things. The simulation would have to be abstracted (heavily) and therefore not perfect. Also it's moot because the universe is not perfectly deterministic, things like radioactive decay events are actually random in the true sense. Knowing everything about the system just lets you predict the odds of an event happening, not the actual time. Thus trying to simulate the universe from beginning to end would just be piling errors on top of abstractions until the simulation no longer has any resemblance to the reality. MagicBoots fucked around with this message at 05:06 on Feb 1, 2015 |

|

|

|

One way the technological singularity has been pictured is simply a graph of the growing memory and computing power (it doesn't really matter if we're talking about a single PC, a supercomputer, or all computers in the world added up, the end result is the same). In both cases, this graph is exponential. Every few years, computing power doubles. If you simply extrapolate the graph, you'll see the computing power rising faster and faster, until at some point it shoots off into the unknown, increasing so fast that it'll seem to us that all technological advances are happening at once. The problem with this is that we're slowly starting to scrape at the limits of the possible. As computers get physically more and more efficient, the amount of transistors on a certain size chip keeps rising... until they are forced to be so small they end up in the atomic scale. And we can't go further than that, it's a rather hard limit of electronics - and of anything, really. So to keep up you'd need more and more space, make computers bigger. There's no practical way to do that indefinitely, and on top of that, huge computers are slowed down because the speed at which they internally transfer information becomes a significant issue. There are lots of real physical issues preventing this from happening. Of course, many (science fiction) philosophers don't care about reality and prefer thinking about 'what if' scenarios.
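The "hard limit" point above can be turned into a back-of-the-envelope count. This is a rough sketch under assumptions that are mine, not the post's: a starting feature size of 14 nm, an atomic-scale floor of 0.2 nm, and one density doubling every two years. Each doubling of transistors per unit area shrinks the linear feature size by a factor of sqrt(2).

```python
import math


def doublings_until_limit(feature_nm: float, limit_nm: float) -> int:
    """Whole density doublings that fit before features shrink below limit_nm.

    After k doublings the feature size is feature_nm / 2**(k / 2), so the
    largest allowed k satisfies k <= 2 * log2(feature_nm / limit_nm).
    """
    if feature_nm <= limit_nm:
        return 0
    return math.floor(2 * math.log2(feature_nm / limit_nm))


# Illustrative assumptions only, not measured values.
START_FEATURE_NM = 14.0
ATOMIC_LIMIT_NM = 0.2
YEARS_PER_DOUBLING = 2

k = doublings_until_limit(START_FEATURE_NM, ATOMIC_LIMIT_NM)
print(f"{k} doublings, roughly {k * YEARS_PER_DOUBLING} years of headroom")
```

With these made-up inputs the curve runs out after about a dozen doublings. Change the inputs and the date moves, but the curve still hits the floor after a finite number of steps.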

|

|

|

|

I remember reading an essay by Asimov in one of his Robot anthologies. "Every once in a while someone asks me how a positronic brain works. I don't know how it works, which is why I write stories instead of building robots light-years beyond what current engineers can manage. It just sounds science fiction-y."

|

|

|

|

Bruceski posted:"Every once in a while someone asks me how a positronic brain works." It works very well, thank you.

|

|

|

|

Carbon dioxide posted:One way the technological singularity has been pictured is simply a graph of the growing memory and computing power (it doesn't really matter if we're talking about a single PC, a supercomputer, or all computers in the world added up, the end result is the same).  It's like someone looking at this chart and concluding that since the world record has dropped by 1 second between 1900 and 2000, by the year 3000 the world record will have hit -1 second and athletes will be able to travel into the past by running there really fast. (And then persuading a bunch of nerds to give them all their money to protect them from hyperevolved time-travelling uberjocks from the year 3000.)
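The running joke above is just a straight line pushed far past its data. A minimal sketch, using round numbers that are my own stand-ins for the missing chart (10 s in 1900, 9 s in 2000), chosen only because they reproduce the "1 second per century" and "-1 second by 3000" figures in the post:

```python
def linear_extrapolate(x0: float, y0: float, x1: float, y1: float, x: float) -> float:
    """Extend the straight line through (x0, y0) and (x1, y1) out to x."""
    slope = (y1 - y0) / (x1 - x0)
    return y1 + slope * (x - x1)


# Hypothetical round numbers, not real world-record data.
record_3000 = linear_extrapolate(1900, 10.0, 2000, 9.0, 3000)
print(f"extrapolated record in the year 3000: {record_3000:.2f} s")
```

The fit is fine between 1900 and 2000. The nonsense only appears once you assume the same slope holds for another thousand years.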

|

|

|

|

quote:Also it's moot because the universe is not perfectly deterministic, things like radioactive decay events are actually random in the true sense. Knowing everything about the system just lets you predict the odds of an event happening, not the actual time. Thus trying to simulate the universe from beginning to end would just be piling errors on top of abstractions until the simulation no longer has any resemblance to the reality. It would've been interesting to see how quantum theory would have progressed if we'd developed chaos theory beforehand.

|

|

|

|

Paul.Power posted:Although that in turn leads to the question of whether radioactive decay is truly random, or a product of yet another chaotic, complex (but still deterministic) system that we just don't know about. Or for that matter, whether that's actually important one way or another on a practical level. All experiments to date have been consistent with Bell's theorem, which basically rules out local "hidden variables" that would make the apparent randomness of quantum theory happen in a deterministic fashion.

|

|

|

|

omeg posted:All experiments to date have been consistent with Bell's theorem, which basically rules out local "hidden variables" that would make the apparent randomness of quantum theory happen in a deterministic fashion. e: I got linked to the GBS Less Wrong/Yudkowsky mock thread last night. Oh wow, that guy's messed up. Paul.Power fucked around with this message at 09:55 on Feb 2, 2015 |

|

|

|

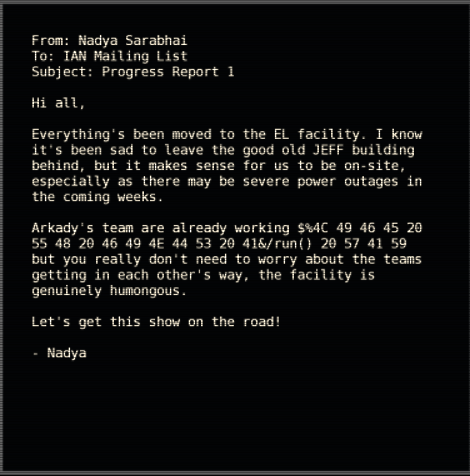

Part 4 (Hub A-4): Crossing the streams progress_repl.eml; mail_error.dat; beginnings.txt    Stuff I'd particularly enjoy hearing more about : - Apocalyptic scenarios of varying likelihood. - Popular/unusual definitions of a person.

|

|

|

|

One thing that irritates me is that the human verification check tests for logical inconsistencies. But isn't that a part of being human? Surely someone who would analyze every parameter and manage to stay 100% consistent during all of that could not be considered human. And it is also pretty flawed. Like you noted, you could define creation as a special case of discovery. Therefore claiming that value can be created or discovered wouldn't be inconsistent at all. In other words, Milton has some dogmatic views on the initial definitions of those words. About gameplay: I spent a really long time looking for a way to the star in this room. I didn't think there were any connectors in the trees at all. So I searched for something else. If you position those things right, you can turn the elevated connector red. That really looks like it should lead somewhere because you can barely see that one from other places as well. But it doesn't work at all. I was so confused that I actually managed to find those easter eggs first. Eventually I gave it up and felt kind of annoyed when I saw the solution.

|

|

|

|

I wouldn't be surprised if you are supposed to fail human-check no matter what. At least it's not a captcha  I can see the game getting quite complicated, if we keep getting puzzle elements and inter room connectivity. I hope it goes all meta puzzly.

|

|

|

|

Small tip for lasers: you can assign a key to "alternative use" in the options, it allows you to pick connectors up without breaking connections. That function is unbound by default and can help later when puzzles become more complicated. And yeah, it took me forever to find that hidden connector. I almost managed to route power without it through some elevation tricks but it wasn't enough.

|

|

|

|

omeg posted:Small tip for lasers: you can assign a key to "alternative use" in the options, it allows you to pick connectors up without breaking connections. That function is unbound by default and can help later when puzzles become more complicated. To be honest, that sounds kind of cheesy. I know of at least one puzzle which would be way easier that way.

|

|

|

|

edit: only 2mins into the new video my rant is negated.

Qwezz fucked around with this message at 21:18 on Feb 2, 2015 |

|

|

|

CannibalK9 posted:Stuff I'd particularly enjoy hearing more about : There's also the technology-related scenarios, gray goo, robot uprising, a malevolent AI installing itself across the world. This website is a pretty good read for some scenarios, as well as the idea that there can be such an event that causes human culture as we know it to cease to be while still allowing genetic humans to exist in some form. quote:- Popular/unusual definitions of a person. This is actually the central conflict of a webcomic called Freefall. The idea being that in the process of creating an AI that's human safe, you have to create an AI that's capable of evolving its definition of what is human. Otherwise if/when humanity moves into a post-human state, AIs would not perceive them as people anymore under the most narrow definitions. The definition the AI comes up with is "if it's wearing clothes, it's human" because even if we evolve to be floating space blobs we'll probably want pockets.

|

|

|

|

You're not entirely right about the Asimov quote in the garbled text. The First Law is "A robot may not injure a human being or, through inaction, allow a human being to come to harm". The quoted text, though, stated "[A robot shall not etc etc] allow humanity to come to harm". This is commonly called the "Zeroth Law" and is a concept that emerged in a couple of Asimov's stories when certain robots started thinking a bit more abstractly about what constitutes "harming" humans, and concluded that it may be allowable to directly harm certain humans if doing so will prevent a greater harm on the species as a whole (see also the movie adaptation of I, Robot). This distinction is, of course, crucial and is almost archetypal of how things go wrong in Asimov plots; once you allow for the distinction between humans and humanity, it can become permissible to harm humans - lots of humans - in the service of humanity. More importantly, harm to humans is fairly unambiguous and quantifiable, whereas harm to humanity is hugely abstract and open to judgement. The concept of the "separation between persons" is crucially important in ethics and political philosophy because it addresses exactly this issue; you can weigh up a moral judgement by saying "I will cause X much harm and Y much good" but that's not as important as bearing in mind how many different actual live people you will be harming, and remembering that to those people such harm may take on a whole new meaning due to their contexts.

|

|

|

|

I reckon the comment on signposting in that QR note adds a lot to the idea that we're in a simulation that's an allegory for life and development. We learned how to walk, about curiosity, how to deal with frustrations, and from time to time we hear words of wisdom from those who have been there before us. It's a pretty human impulse to want those who come after us (children) to learn from our mistakes and experiences, and have a better life. I also think that if our character had canned text to daub on the walls instead of us talking about and discussing what we might get out of it, it'd probably kill the introspective mood.

|

|

|

|

Well, there used to be a really neat website about apocalyptical scenarios called 'Exit Mundi'. The website was old and seems to be not working at all right now. Luckily, the Wayback Machine has a working version of it.

|

|

|

|

Air is lava! posted:Eventually I gave it up and felt kind of annoyed when I saw the solution. Yep... omeg posted:Small tip for lasers: you can assign a key to "alternative use" in the options, it allows you to pick connectors up without breaking connections. That function is unbound by default and can help later when puzzles become more complicated. Motherfucker. That would have been so much more helpful to know like 50 puzzles ago. I've only got 8 left for 100% now. On the topic of Socrates, his singular goal in life was to prove that the prophecy the Oracle gave him, that he was the wisest among men, was total bullshit, and in the process defined what we know as Socratic Questioning; it's where you begin with the assumption that you know jack poo poo and build up your understanding of a topic beginning from that point. I've never heard that quote of his, but from what I know of him that sounds like something he might say. On the topic of connectors, those quickly become the go-to puzzle element, and the game finds some really creative ways to make use of them that manage to stay fresh despite being a relatively simple tool. Not that it uses them all the time - it finds some really creative ways not to use them too. e: Anticheese posted:I reckon the comment on signposting in that QR note adds a lot to the idea that we're in a simulation that's an allegory for life and development. We learned how to walk, about curiosity, how to deal with frustrations, and from time to time we hear words of wisdom from those who have been there before us. It's a pretty human impulse to want those who come after us (children) to learn from our mistakes and experiences, and have a better life. I also think that if our character had canned text to daub on the walls instead of us talking about and discussing what we might get out of it, it'd probably kill the introspective mood. Just curious, have you played the game? I won't say why, but I want to know.
ViggyNash fucked around with this message at 04:16 on Feb 3, 2015 |

|

|

|

Nope! I'm trying to save up, and I'm content waiting for the inevitable Steam holiday sale. The incoherent rambling I posted above just came to me when I thought about the message while taking a shower, and how learning from mistakes - both my own, and others' - is particularly relevant to me at this point in time. Apart from the official trailers, your videos are the closest I've gotten to the game.

|

|

|

|

Anticheese posted:Apart from the official trailers, your videos are the closest I've gotten to the game. I get you. The one and only reason I bought this game was the Giant Bomb Unfinished look at the game. After that, I snatched it up the moment it was available, and have not once been disappointed. But... you might be a bit disappointed in the near future.

|

|

|

|

Definitions of a person... Oh, man, this one's a lovely little minefield, littered with assumptions about other creatures in our world. Let's go through some popular definitions about what makes humans unique, and let's show that we're not all that special. Human Beings Intellectually Reason. This was one of the first ideas that supposedly set us apart from animals and insects, and one of the most easily debunked as far as uniqueness goes. Mice, Rats, primates of all shapes and sizes, many species of bird, even Mantis Shrimp and Octopi. We don't know for sure about complex concepts or abstracts. Human Beings Have Complex Language. Whalesong appears to have complex meaning, not that we know for sure. Dolphins apparently know enough language to do nasty things as a group (more on that later). So we have at least one other species we share the planet with whose vocalisation could possibly contend with ours. This is in the "We don't actually know if we're unique in this" category. Human Beings Are Tool Users, And Make Tools. Well, our friends from the family Corvidae (Crows, Ravens, Rooks, and the like), among many other birds, and a few mammals, kind of disprove the uniqueness of this statement. Ravens and Crows, for example, are well known for learning how to pick locks, undo straps, and generally making simple tools for the purpose of getting food. Octopi also appear to have this potential, but their mating habits mean that lessons aren't really handed down from generation to generation. Human Beings Tell Stories. This was proposed, as far as I know, by Ian Stewart and Jack Cohen, although a little earlier than the Science of Discworld series (Which not only brought this hypothesis to my attention, but also gave me anecdotal evidence of how Mantis Shrimps can solve problems... I later found other sources). The problem with this is that stories come from language, and while we suspect other creatures may have complex language, we haven't deciphered it. 
So it's in the same grey area. Human Beings Are Unique For Play. Nope. Helluva lot of creatures play, and, like humans, they mix education and play a fair bit too. Human Beings Kill For Pleasure. Dolphins. Dolphins are bastards. Anyone who wants stories of how dolphins are bastards can find them, but they're the kind of stories that should have trigger warnings on them. Crows will help crippled fellows, but at the same time, they will shun (as a group), then murder other (individual) crows, for no discernible reason. Ravens apparently also do this. Those are pretty much the main hypotheses about human uniqueness, but a couple of more cynical (and idealistic) ones exist: We are unique for Love (Yeahno). We are able to lie (Yeahno). The two in particular I like are that we can hold two mutually contradictory thoughts about the same subject at the same time (Can't be proven to be unique, but most commonly found in phrases similar to "I'm not racist, but..." As hard as it is to believe, some of the folks saying that while adding racism on the end of that sentence genuinely believe they aren't!), and that we are the only species that actively seeks to make our lives more complicated in pursuit of relatively abstract desires. I know, I'm cynical as hell. But anyone who hasn't read The Science of Discworld series should, if only to find the story of the puzzle-solving mantis shrimp I mentioned before.

|

|

|

|

Yeah, but how many animals are capable of doing all of those things?

|

|

|

|

JamieTheD posted:But anyone who hasn't read The Science of Discworld series should, if only to find the story of the puzzle-solving mantis shrimp I mentioned before. This is very, very true. The books are great. Even better if you have read some of the regular Discworld books, but that's not a requirement. Anyway, I remember a conversation about this I had with an ex colleague once. It fits quite nicely into the themes of this game. He argued that the definition of intelligence has shifted tremendously during the last century, mainly because of computers. For instance, at one time someone who could do big calculations by hand fast was considered a genius. Nowadays, computers regularly do that, and those who are good at calculating stuff might appear in the Guinness book or something for doing a nice trick, but that's about it. Then, it was considered intelligent to quickly recognize patterns and analyse them. But computers got better at that too. Pattern recognition by itself is no longer considered good enough to count for intelligence. You'd need specific types to beat a computer, e.g. being able to recognize complex emotions and handle appropriately. He said, humanity's definition of intelligence has always been: that whatever our brains can do, but machines can't. As machines get better and better, we need to find smaller and smaller definitions for intelligence.

|

|

|

|

Mraagvpeine posted:Yeah, but how many animals are capable of doing all of those things? Ah, now here's where it gets interesting. Not because you tried to say "Humans are special because we have all these things at once!", but because you did ask which species are capable of doing all these things. As in, they can do them, not they currently do them. If it weren't for their mating habits, Octopi would be the clear winners in this situation. They can make tools, reason, kill, play, and communicate. We don't know about complex language, self-deception/cognitive dissonance, and abstract thought, so we're leaving those out as "Unproven." Problem is, octopi die before the next generation is born, and they live, on average, three to five years. All the learning in the world won't lead to an uplift of any kind if it can't be passed on. But they are capable of all the categories we can be sure of. Mantis Shrimp are capable of tool creation and use, reasoning, and play. Corvids are capable of all those things we haven't listed in "Unproven." Dolphins are capable of basic tool use (No recorded instance of creating a tool, though), communicating, reasoning, killing (and worse) for pleasure, and play. There's at least two contenders, right there (Octopi would also be contenders if they didn't breed quite the way they do.) So a better question would be "Can we discover if the Unproven categories are true, and thus get over our arrogance as a species?"  Carbon dioxide posted:Anyway, I remember a conversation about this I had with an ex colleague once. It's good that you mention machines, because one of Arthur C Clarke's short story themes when it came to the subject is being supplanted or joined by mechanical intelligences. This doesn't, however, mean robots. His favourite contender? Termites. Why termites? The queen gives birth to, essentially, specialised creatures, living tools. They have been known to construct buildings. 
Thankfully, they don't seem to reason, appear to have only limited communication, and don't craft tools for use as far as I know. But yes, as time has gone on, our definition of intelligence has narrowed and narrowed, with a lot of grey areas. Honestly, I think that's good for us as a species, because anthropocentrism has made for some silly scientific assumptions over history, and also makes a lot of sci-fi really boring.

|

|

|

|

JamieTheD posted:It's good that you mention machines, because one of Arthur C Clarke's short story themes when it came to the subject is being supplanted or joined by mechanical intelligences. This doesn't, however, mean robots. His favourite contender? Termites. This was basically what the Buggers were in Ender's Game. The drones and such aren't really sentient, existing mostly as extensions of the queen's will. The formics saw the deaths in the war with humanity as basically the equivalent of the skin flakes you lose when you shake hands. Once they realized they were killing individual sentients they retreated back to their home systems and hoped humanity wouldn't be able to retaliate, or would understand their mistake. It's also an interesting take on things because it's a "What measure is a person" from the opposite side. This alien race had no idea that individual organisms could be sentient; when exposed to the reality of the situation they were forced to reevaluate their views.

|

|

|

|

I think humanity is defined by its level of ability to use tools. Yes, as stated earlier, many animals can also use tools, but only humans were able to use tools to make better tools, which they used to make better tools, so on and so forth. It is also our level of capacity for innovation, which far outclasses any other animal, which allows us to create something as complex as a primitive spear, let alone skyscrapers or octacore computer processors. No other organism in the world can do this, or even comes remotely close.

|

|

|

|

I could be wrong on this, but I think one thing that humans have that animals don't is a written language.

|

|

|

|

Mraagvpeine posted:I could be wrong on this, but I think one thing that humans have that animals don't is a written language. At what point is something a written language, though? A stench mark by a dog, or an ant trail could be interpreted that way. And that definition would instantly turn any sort of AI into a human. A further implication of this is that dyslexic, severely retarded, or young people don't qualify to be called humans, which is kind of problematic. The general idea of these definitions of humanity is that we find something which unites us all but separates us from animals and things.

|

|

|

|

Actually, CK9, there was something in your commentary which made me stop and think for a second - you are gendering the other robots in this test; either knowingly or unknowingly - you refer to '@' as him, and Faith as 'her'. I suppose we - that is, people - are inclined to gender things because of predilections to anthropomorphise non-human objects. I suppose that is one of the reasons I'd always be... doubtful about any type of A.I.; I feel we would be inclined to put in it emotions that it doesn't have.

|

|

|

|

Air is lava! posted:A further implication of this is that dyslexic, severely retarded, or young people don't qualify to be called humans, which is kind of problematic. Many definitions of human would leave those people out. Even if you go to something as purely biological as "number of chromosomes" or some bullshit, you'd be leaving people with Down's syndrome out. It's a thorny subject. Mraagvpeine posted:I could be wrong on this, but I think one thing that humans have that animals don't is a written language. Written languages are very new. I think the oldest written evidence is early Chinese scripts or cuneiform Sumerian from 6000 years ago. I would hesitate to call someone from 6000 years ago not human. That said, written language was an important step that accelerated the transmission between generations of culture and knowledge, which I think is an important aspect of Humanity. E: Samovar posted:Actually, CK9, there was something in your commentary which made me stop and think for a second - you are gendering the other robots in this test; either knowingly or unknowingly - you refer to '@' as him, and Faith as 'her'. I suppose we - that is, people - are inclined to gender things because of predilections to anthropomorphise non-human objects. I suppose that is one of the reasons I'd always be... doubtful about any type of A.I.; I feel we would be inclined to put in it emotions that it doesn't have. Well there are a bunch of gendered languages, German has a neutral gender (as did Latin and probably other languages have even stranger things). It's definitely an interesting thing, what makes a table male or female? Nothing at all, except some inherited Grammar rules. English mostly does away with it, except you weirdos call boats "she". Markovnikov fucked around with this message at 19:30 on Feb 3, 2015 |

|

|

|

I don't think this case is related to gendered languages. But to be honest, in my mind Faith was a he. That's why I clearly noticed CK9 calling 'it' a her.

|

|

|

|

|


|

Markovnikov posted:Many definitions of human would leave those people out. Even if you go to something as purely biological as "number of chromosomes" or some bullshit, you'd be leaving people with Down's syndrome out. It's a thorny subject. Too right, and suggesting that such a person is a slightly beat-up version of humanity is ridiculous. A train of thought might be to start with yourself, who you hopefully consider to be relatively human, and see how many abilities and faculties need to be taken away before you'd concede to being non-human. Samovar posted:Actually, CK9, there was something in your commentary which made me stop and think for a second - you are gendering the other robots in this test; either knowingly or unknowingly - you refer to '@' as him, and Faith as 'her'. I suppose we - that is, people - are inclined to gender things because of predilections to anthropomorphise non-human objects. I suppose that is one of the reasons I'd always be... doubtful about any type of A.I.; I feel we would be inclined to put in it emotions that it doesn't have. Good point, I noticed myself doing that. I thought I may have said 'they', 'them' or 'their' a lot on purpose, but it is easy to forget. At the risk of everything/nothing, a friend recently came out as androgynous, and preferred to be referred to as 'they'. The few times I use the third person I opt for 'xie' and 'hir' because it's more natural, but I soon isolated that while I couldn't be more okay with the whole transition, suddenly overcoming a lifetime of language conditioning was incredibly difficult, leading to stilted conversations similar to ones in which you're concerned about offending rather than merely trying to remember the right word. The net result is that I have to admit to being conditioned, which while understandable, inevitable and entirely useful still makes me feel somewhat tainted. So if I said 'she' it's because I know of a female Faith, I don't know any @s though...
and mostly it's because I've decided to play as if the robot has the exact mental faculties of myself, so I'm absolutely anthropomorphising!

|

|

|