- Vulture Culture

- Jul 14, 2003

-

I was never enjoying it. I only eat it for the nutrients.

|

Echoing this and expanding it even further: don't touch anything related to XML as a beginner.

While I'm absolutely sure it has some great features, I'm finding it's very much a "big boy" format and not beginner friendly. Maybe not the format necessarily, but the ecosystem of tools in Python for it is really dense.

Hell, just look at the name "lxml" as a package. Gonna throw out a dumb hot take that I literally put no thought into: Acronyms should be banned from package naming.

If you can make guarantees about the documents you're loading like "text will never contain other elements" then it gets a lot easier to work with and enables much more straightforward APIs like Pydantic

|

Apr 4, 2024 17:47

|

|


|

|

- Fender

- Oct 9, 2000

-

Mechanical Bunny Rabbits!

-

Dinosaur Gum

|

Chiming in about how someone gets wormy brains to the point where they use lxml. In short, fintech startup-land.

We had a product that was an API mostly running python scrapers in the backend. I don't know if it was ever explained to us why we used lxml. BeautifulSoup picks lxml as its parser when it's installed and you don't name one, so I think we just cut out the middleman. I always assumed it was just an attempt at resource savings at a large scale.

Two years of that and I'm a super good scraper and I can get a lot done with just a few convenience methods for lxml and some xpath. And I have no idea how to use BeautifulSoup.

|

Apr 4, 2024 18:44

|

|

- Jose Cuervo

- Aug 25, 2004

-

|

That's right, the responses will have the same order as the list of tasks provided to gather() even if the tasks happen to execute out of order. From the documentation, "If all awaitables are completed successfully, the result is an aggregate list of returned values. The order of result values corresponds to the order of awaitables."

Great. I saw that in the documentation and thought that is what it meant but I wanted to be sure.

Another related question - I have never built a scraper before but from the initial results it looks like I will have to make about 12,000 requests (i.e., there are about 12,000 urls with violations). Is the aiohttp stuff 'clever' enough to not make all the requests at the same time, or is that something I have to code in so that it does not overwhelm the website if I call the fetch_urls function with a list of 12,000 urls?

Finally, sometimes the response which is returned is Null (when I save it as a json file). Does this just indicate that the fetch_url function ran out of retries?

|

Apr 4, 2024 21:35

|

|

- Fender

- Oct 9, 2000

-

Mechanical Bunny Rabbits!

-

Dinosaur Gum

|

Great. I saw that in the documentation and thought that is what it meant but I wanted to be sure.

Another related question - I have never built a scraper before but from the initial results it looks like I will have to make about 12,000 requests (i.e., there are about 12,000 urls with violations). Is the aiohttp stuff 'clever' enough to not make all the requests at the same time, or is that something I have to code in so that it does not overwhelm the website if I call the fetch_urls function with a list of 12,000 urls?

Finally, sometimes the response which is returned is Null (when I save it as a json file). Does this just indicate that the fetch_url function ran out of retries?

For your first question, it looks like the default behavior for aiohttp.ClientSession is to do 100 simultaneous connections. If you want to adjust it, something like this will work:

Python code:
connector = aiohttp.TCPConnector(limit_per_host=10)
aiohttp.ClientSession(connector=connector)
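If you would rather cap concurrency in your own code as well, one common approach is an asyncio.Semaphore around each request. This is only a sketch: fake_fetch, fetch_all and the example.com URLs are made-up stand-ins (no network access happens here), and in real code the body of bounded() would be the aiohttp call from fetch_url. It also shows the gather() ordering guarantee mentioned earlier.

```python
import asyncio


async def fake_fetch(url: str) -> str:
    """Stand-in for a real HTTP request (hypothetical; no network access)."""
    await asyncio.sleep(0.01)
    return f"response for {url}"


async def fetch_all(urls: list[str], max_concurrent: int = 10) -> list[str]:
    """Fetch every URL, never running more than max_concurrent at once."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def bounded(url: str) -> str:
        # Waits here while max_concurrent fetches are already in flight.
        async with semaphore:
            return await fake_fetch(url)

    # gather() returns results in the same order as the awaitables passed in.
    return await asyncio.gather(*(bounded(u) for u in urls))


urls = [f"https://example.com/inspection/{i}" for i in range(25)]
results = asyncio.run(fetch_all(urls, max_concurrent=5))
print(results[0])
```

The semaphore limits how many coroutines are inside the request at once, while the connector limit caps open connections, so the two can be combined.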

|

Apr 4, 2024 22:50

|

|

- Jose Cuervo

- Aug 25, 2004

-

|

For your first question, it looks like the default behavior for aiohttp.ClientSession is to do 100 simultaneous connections. If you want to adjust it, something like this will work:

Python code:
connector = aiohttp.TCPConnector(limit_per_host=10)
aiohttp.ClientSession(connector=connector)

Thank you! I am saving the center ID and inspection ID which fail to get a response and plan to try them again.

|

Apr 5, 2024 00:38

|

|

- CarForumPoster

- Jun 26, 2013

-

⚡POWER⚡

|

Chiming in about how someone gets wormy brains to the point where they use lxml. In short, fintech startup-land.

We had a product that was an API mostly running python scrapers in the backend. I don't know if it was ever explained to us why we used lxml. BeautifulSoup picks lxml as its parser when it's installed and you don't name one, so I think we just cut out the middleman. I always assumed it was just an attempt at resource savings at a large scale.

Two years of that and I'm a super good scraper and I can get a lot done with just a few convenience methods for lxml and some xpath. And I have no idea how to use BeautifulSoup.

I use lxml when needing to iterate over huge lists via xPaths from scraped data. Seems to be the fastest and it ain't that hard. Selenium is slow at finding elements via xpath when you start needing to find hundreds of individual elements.

Also if you're using selenium, lxml code can kinda look similar.

I spent multiple years writing and maintaining web scrapers and basically never used BS4.

CarForumPoster fucked around with this message at 10:13 on Apr 5, 2024

|

Apr 5, 2024 10:10

|

|

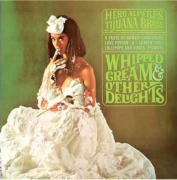

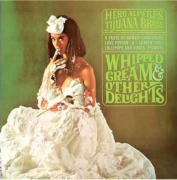

- rich thick and creamy

- May 23, 2005

-

To whip it, Whip it good

-

Pillbug

|

Has anyone played around with Rye yet? I just found it yesterday and am giving it a spin. So far it seems like a pretty nice Poetry alternative.

|

Apr 13, 2024 02:19

|

|

- Cyril Sneer

- Aug 8, 2004

-

Life would be simple in the forest except for Cyril Sneer. And his life would be simple except for The Raccoons.

|

Fun little learning project I want to do but need some direction. I want to extract all the video transcripts from a particular youtube channel and make them both keyword and semantically searchable, returning the relevant video timestamps.

I've got the scraping/extraction part working. Each video transcript is returned as a list of dictionaries, where each dictionary contains the timestamp and a (roughly) sentence-worth of text:

code:
{
    'text': 'replace the whole thing anyways right so',
    'start': 1331.08,
    'duration': 4.28
}

I don't really know how YT breaks up the text, but I don't think it really matters. Anyway, I obviously don't want to re-extract the transcripts every time so I need to store everything in some kind of database -- and in a manner amenable to reasonably speedy keyword searching. If we call this checkpoint 1, I don't have a good sense of what this solution would look like.

Next, I want to make the corpus of text (is that the right term?) semantically searchable. This part is even foggier. Do I train my own LLM from scratch? Do some kind of transfer learning thing (i.e., take existing model and provide my text as additional training data?) Can I just point chatGPT at it (lol)?

I want to eventually wrap it in a web UI, but I can handle that part. Thanks goons! This will be a neat project.

Cyril Sneer fucked around with this message at 03:46 on Apr 17, 2024

|

Apr 17, 2024 03:42

|

|

- PierreTheMime

- Dec 9, 2004

-

Hero of hormagaunts everywhere!

-

Buglord

|

Fun little learning project I want to do but need some direction. I want to extract all the video transcripts from a particular youtube channel and make them both keyword and semantically searchable, returning the relevant video timestamps.

I've got the scraping/extraction part working. Each video transcript is returned as a list of dictionaries, where each dictionary contains the timestamp and a (roughly) sentence-worth of text:

code:
{
    'text': 'replace the whole thing anyways right so',
    'start': 1331.08,
    'duration': 4.28
}

I don't really know how YT breaks up the text, but I don't think it really matters. Anyway, I obviously don't want to re-extract the transcripts every time so I need to store everything in some kind of database -- and in a manner amenable to reasonably speedy keyword searching. If we call this checkpoint 1, I don't have a good sense of what this solution would look like.

Next, I want to make the corpus of text (is that the right term?) semantically searchable. This part is even foggier. Do I train my own LLM from scratch? Do some kind of transfer learning thing (i.e., take existing model and provide my text as additional training data?) Can I just point chatGPT at it (lol)?

I want to eventually wrap it in a web UI, but I can handle that part. Thanks goons! This will be a neat project.

This sounds like a good use-case for a vector database and retrieval-augmented generation (RAG) and/or semantic search. You can use your dialog text as the target material and the rest as metadata you can retrieve on match. There are a number of free options for the database, including local ChromaDB instances (which use SQLite) or free-tier Pinecone.io, which has good library support and a decent web UI.
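For the keyword-search half (checkpoint 1 in the quoted post), a plain SQLite table may be enough to start with. A minimal sketch using only the standard library: the table layout, the keyword_search helper, the video IDs, and the second and third sample segments are all invented for illustration; only the first segment comes from the post.

```python
import sqlite3

# Segments in the shape shown in the post. Video IDs and the last two
# segments are made-up sample data.
segments = [
    ("vid001", {"text": "replace the whole thing anyways right so", "start": 1331.08, "duration": 4.28}),
    ("vid001", {"text": "we pull the old capacitor out first", "start": 1335.36, "duration": 3.10}),
    ("vid002", {"text": "this whole board needs a new capacitor", "start": 12.5, "duration": 5.0}),
]

conn = sqlite3.connect(":memory:")  # pass a file path instead to persist between runs
conn.execute("CREATE TABLE segments (video_id TEXT, start REAL, duration REAL, text TEXT)")
conn.executemany(
    "INSERT INTO segments VALUES (?, ?, ?, ?)",
    [(vid, seg["start"], seg["duration"], seg["text"]) for vid, seg in segments],
)


def keyword_search(conn, keyword):
    """Return (video_id, start, text) rows whose text contains the keyword."""
    cursor = conn.execute(
        "SELECT video_id, start, text FROM segments "
        "WHERE text LIKE ? ORDER BY video_id, start",
        (f"%{keyword}%",),
    )
    return cursor.fetchall()


hits = keyword_search(conn, "capacitor")
for video_id, start, text in hits:
    print(video_id, start, text)
```

LIKE scans every row, which is probably fine for one channel's worth of transcripts. If it gets slow, SQLite's FTS5 full-text extension (where your SQLite build includes it) is the usual next step.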

|

Apr 17, 2024 11:49

|

|

- Hed

- Mar 31, 2004

-

-

Fun Shoe

|

I just wanted to say I appreciated this error message when I forgot to put in the '-r'.

Bash code:
(venv) $ pip install requirements.txt
ERROR: Could not find a version that satisfies the requirement requirements.txt (from versions: none)
HINT: You are attempting to install a package literally named "requirements.txt" (which cannot exist). Consider using the '-r' flag to install the packages listed in requirements.txt

|

Apr 19, 2024 17:49

|

|

- boofhead

- Feb 18, 2021

-

|

I love the "consider using". It feels very much like "you don't have to go home, but you can't stay here"

|

Apr 19, 2024 18:15

|

|

- Cyril Sneer

- Aug 8, 2004

-

Life would be simple in the forest except for Cyril Sneer. And his life would be simple except for The Raccoons.

|

I have a case where I create two instances of an object via a big configuration dictionary. The difference between the two objects is a single, different value for one key. So, this works:

code:
big_config_dict = { .... }
B = dict(big_config_dict)
B['color'] = 'blue'  # this one key is the only difference
thingA = Thing(big_config_dict)  # default values
thingB = Thing(B)  # single modified value
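One thing to keep in mind with dict(big_config_dict): it is a shallow copy, so rebinding a top-level key like 'color' is safe, but nested values are still shared with the original. A small sketch with a made-up config (the base dict and its keys are hypothetical):

```python
import copy

base = {"color": "red", "size": {"width": 10, "height": 20}}  # hypothetical config

shallow = dict(base)            # new top-level dict, nested values are shared
shallow["color"] = "blue"       # safe: only rebinds a key in the copy
shallow["size"]["width"] = 99   # not safe: mutates the dict that base also holds

deep = copy.deepcopy(base)      # fully independent copy, taken after the mutation above
deep["size"]["height"] = 1      # does not touch base

print(base)
```

If the config only has flat values, the shallow copy is all you need.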

|

Apr 24, 2024 20:07

|

|

- boofhead

- Feb 18, 2021

-

|

If I want to take a base dict and change a value in one line, I'll usually use dict unpacking (`**`, Python's version of a spread operator) if the structure and changes are simple

Python code:
config_1 = {'val1': 100, 'val2': 200}
# {'val1': 100, 'val2': 200}
config_2 = {**config_1, 'val2': 0}
# {'val1': 100, 'val2': 0}

Python code:
default_person = {"name": "john doe", "age": 50, "mother": {"name": "jane doe", "age": 80}}
default_hobbies = {"hobbies": ["going to church", "reading the bible"]}
sample_person = {**default_person, **default_hobbies}
# {'name': 'john doe', 'age': 50, 'mother': {'name': 'jane doe', 'age': 80}, 'hobbies': ['going to church', 'reading the bible']}
sample_stoner = {**default_person, **default_hobbies, "mother": {"name": "janet doe"}, "hobbies": ["smoking weed"]}
# {'name': 'john doe', 'age': 50, 'mother': {'name': 'janet doe'}, 'hobbies': ['smoking weed']}
# see how it replaces, it doesn't update partials/nested
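If you do want nested keys updated instead of replaced, you need a recursive merge. A rough sketch, where deep_merge is a hypothetical helper (not a standard library function) and lists are still replaced wholesale:

```python
def deep_merge(base: dict, overrides: dict) -> dict:
    """Return a new dict: base updated with overrides, merging nested dicts."""
    merged = dict(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are dicts: merge them instead of replacing.
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


default_person = {"name": "john doe", "age": 50, "mother": {"name": "jane doe", "age": 80}}
sample = deep_merge(default_person, {"mother": {"name": "janet doe"}})
print(sample)
```

Here the mother's age survives the override, and default_person itself is left untouched.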

boofhead fucked around with this message at 21:00 on Apr 24, 2024

|

Apr 24, 2024 20:53

|

|

- Cyril Sneer

- Aug 8, 2004

-

Life would be simple in the forest except for Cyril Sneer. And his life would be simple except for The Raccoons.

|

[quote="boofhead" post="539140163"]

If I want to take a base dict and change a value in one line, I'll usually use dict unpacking (`**`, Python's version of a spread operator) if the structure and changes are simple

Python code:
config_1 = {'val1': 100, 'val2': 200}
# {'val1': 100, 'val2': 200}
config_2 = {**config_1, 'val2': 0}
# {'val1': 100, 'val2': 0}

|

Apr 24, 2024 21:32

|

|

- QuarkJets

- Sep 8, 2008

-

|

If your things are dataclasses you can neatly dictionary-ize one while creating the other

Python code:
from dataclasses import asdict, dataclass

@dataclass
class Thing:
    a: int
    b: int
    c: int

thing1 = Thing(a=1, b=2, c=3)
thing2 = Thing(**{**asdict(thing1), 'c': 4})

Python code:
@dataclass
class Thing:
    a: int = 1
    b: int = 2
    c: int = 3

thing1 = Thing()
thing2 = Thing(c=4)
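The standard library also has dataclasses.replace() for the "copy with one field changed" case, which avoids the round trip through asdict(). A short sketch reusing the Thing example from above:

```python
from dataclasses import dataclass, replace


@dataclass
class Thing:
    a: int
    b: int
    c: int


thing1 = Thing(a=1, b=2, c=3)
thing2 = replace(thing1, c=4)  # new instance; thing1 is left unchanged
print(thing2)
```

Unlike asdict(), replace() does not recursively convert nested dataclasses to dicts, so nested fields keep their types.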

|

Apr 26, 2024 08:32

|

|

- Falcon2001

- Oct 10, 2004

-

Eat your hamburgers, Apollo.

-

Pillbug

|

Data classes rule. Use them everywhere.

|

Apr 26, 2024 20:29

|

|

- CarForumPoster

- Jun 26, 2013

-

⚡POWER⚡

|

Data classes rule. Use them everywhere.

I've met like three functions that should be a class.

|

Apr 26, 2024 20:35

|

|

- QuarkJets

- Sep 8, 2008

-

|

Wait a minute, I was just using dataclasses for something similar and was going to mention something annoying about them, which is

Python code:
----> 1 thing1 = Thing()
TypeError: __init__() missing 3 required positional arguments: 'a', 'b', and 'c'

You can assign defaults in the dataclass definition, see my example

|

Apr 26, 2024 22:42

|

|

- Seventh Arrow

- Jan 26, 2005

-

|

I'm hoping that pyspark is python-adjacent enough to be appropriate for this thread (since the Data Engineering thread doesn't seem to be responding)

I'm applying for a job and Spark is one of the required skills, but I'm fairly rusty. They sprang an assignment on me where they wanted me to take the MovieLens dataset and calculate:

- The most common tag for a movie title and

- The most common genre rated by a user

After lots of time on Stack Overflow and Youtube, this is the script that I came up with. At first, I had something much simpler that just did the assigned task, but I figured that I would also add commenting, error checking, and unit testing because rumor has it that this is what professionals actually do. I've tested it and know it works but I'm wondering if it's a bit overboard? Feel free to roast.

code:
# Set up the Google Colab environment for pyspark, including integration with Google Drive
from google.colab import drive
drive.mount('/content/drive')
!pip install pyspark

from pyspark.sql import SparkSession
from pyspark.sql.functions import col, count, row_number, split, explode
from pyspark.sql.window import Window
import unittest

# Initialize the Spark Session for Google Colab
def initialize_spark_session():
    """Initialize and return a Spark session configured for Google Colab"""
    return SparkSession.builder\
        .master("local")\
        .appName("Colab")\
        .config('spark.ui.port', '4050')\
        .getOrCreate()

"""
This code will ingest data from the MovieLens 20M dataset. The goal will be to use Spark to find the following:

- The most common tag for each movie title:
Utilizing Spark's DataFrame operations, the script joins the movies dataset with the tags dataset on the movie ID.
After joining, it aggregates tag data by movie title to count occurrences of each tag, then employs a window function
to rank tags for each movie. The most frequent tag for each movie is identified and selected for display.

- The most common genre rated by each user:
Similarly, the script joins the movies dataset, which includes genre information, with the ratings dataset on the movie ID.
It then groups the data by user ID and genre to count how many times each user has rated movies of each genre.
A window function is again used to rank the genres for each user based on these counts. The top genre for each user
is then extracted and presented.
"""

def load_dataset(spark, file_path, has_header=True, infer_schema=True):
    """Load the needed files, with some error checking for good measure"""
    try:
        df = spark.read.csv(file_path, header=has_header, inferSchema=infer_schema)
        if df.head(1):
            return df
        else:
            raise ValueError("DataFrame is empty, check the dataset or path.")
    except Exception as e:
        raise IOError(f"Failed to load data: {e}")

def calculate_most_common_tag(movies_df, tags_df):
    """Calculates the most common tag for each movie title."""
    try:
        movies_tags_df = movies_df.join(tags_df, "movieId")
        tag_counts = movies_tags_df.groupBy("title", "tag").agg(count("tag").alias("tag_count"))
        window_spec = Window.partitionBy("title").orderBy(col("tag_count").desc())
        most_common_tags = tag_counts.withColumn("rank", row_number().over(window_spec)) \
            .filter(col("rank") == 1) \
            .drop("rank") \
            .orderBy(col("tag_count").desc())
        return most_common_tags
    except Exception as e:
        raise RuntimeError(f"Error calculating the most common tag: {e}")

def calculate_most_common_genre(ratings_df, movies_df):
    """Calculates the most common genre rated by each user."""
    try:
        movies_df = movies_df.withColumn("genre", explode(split(col("genres"), "[|]")))
        ratings_genres_df = ratings_df.join(movies_df, "movieId")
        genre_counts = ratings_genres_df.groupBy("userId", "genre").agg(count("genre").alias("genre_count"))
        window_spec = Window.partitionBy("userId").orderBy(col("genre_count").desc())
        most_common_genres = genre_counts.withColumn("rank", row_number().over(window_spec)) \
            .filter(col("rank") == 1) \
            .drop("rank") \
            .orderBy(col("genre_count").desc())
        return most_common_genres
    except Exception as e:
        raise RuntimeError(f"Error calculating the most common genre: {e}")

def main():
    # Calling Spark session info
    spark = initialize_spark_session()

    # Load CSV files from MovieLens
    movies_df = load_dataset(spark, "/content/drive/My Drive/spark_project/movies.csv")
    tags_df = load_dataset(spark, "/content/drive/My Drive/spark_project/tags.csv")
    ratings_df = load_dataset(spark, "/content/drive/My Drive/spark_project/ratings.csv")

    # Perform analysis
    most_common_tag = calculate_most_common_tag(movies_df, tags_df)
    most_common_genre = calculate_most_common_genre(ratings_df, movies_df)

    # Displaying results
    print("Most Common Tag for Each Movie Title:")
    most_common_tag.show()
    print("Most Common Genre Rated by User:")
    most_common_genre.show()

class TestMovieLensAnalysis(unittest.TestCase):
    '''This will perform some unittests on a truncated set of files in a dedicated testing directory'''

    def setUp(self):
        self.spark = initialize_spark_session()
        self.movies_path = "/content/drive/My Drive/spark_testing/movies.csv"
        self.tags_path = "/content/drive/My Drive/spark_testing/tags.csv"
        self.ratings_path = "/content/drive/My Drive/spark_testing/ratings.csv"

    def test_load_dataset(self):
        # Assuming fake paths to simulate failure
        with self.assertRaises(IOError):
            load_dataset(self.spark, "fakepath.csv")

    def test_calculate_most_common_tag(self):
        movies_df = load_dataset(self.spark, self.movies_path)
        tags_df = load_dataset(self.spark, self.tags_path)
        result_df = calculate_most_common_tag(movies_df, tags_df)
        self.assertIsNotNone(result_df.head(1))

    def test_calculate_most_common_genre(self):
        ratings_df = load_dataset(self.spark, self.ratings_path)
        movies_df = load_dataset(self.spark, self.movies_path)
        result_df = calculate_most_common_genre(ratings_df, movies_df)
        self.assertIsNotNone(result_df.head(1))

if __name__ == "__main__":
    main()
    unittest.main(argv=['first-arg-is-ignored'], exit=False)

|

Apr 26, 2024 23:19

|

|

- nullfunction

- Jan 24, 2005

-

-

Nap Ghost

|

At first, I had something much simpler that just did the assigned task, but I figured that I would also add commenting, error checking, and unit testing because rumor has it that this is what professionals actually do. I've tested it and know it works but I'm wondering if it's a bit overboard? Feel free to roast.

I'll preface this with an acknowledgement that I'm not a pyspark toucher so I'm not going to really focus on that. From the point of view of someone who is reviewing your submission, I'm very happy to see comments, error checking, and unit tests! They give me additional insight into how you communicate information about your code and how you go about validating your designs. However, if the assignment was supposed to take you 4 hours and you turn in something that looks like it's had 40 put into it, that isn't necessarily a plus.

Since you're offering it up for a roast, here are some things to consider:

- Your error handling choices only look good on the surface. Yes, you've made an error message slightly more fancy by adding some text to it, yes, the functions will always raise errors of a consistent type. They also don't react in any meaningful way to handle the errors that might be raised or enrich any of the error messages with context that would be useful to an end user (or logging system). You could argue that they make the software worse because they swallow the stack trace that might contain something meaningful (because they don't raise from the base exception).

- Docstrings for each function are a good practice. Some docstring formats have a description for each argument, raised exception, and a description of the return value, and I tend to like these because it's helpful to tie relevant info directly to an argument. The docstrings you wrote contain the function's name, just reworded, and are not useful. In fact, you could take the tiny bit of extra information from the docstring and put it back into the function name and have a function name that is even better than the one you started with. calculate_most_common_genre_by_user is better than calculate_most_common_genre (but I would probably go with top_genre_by_user personally).

- Normalize your use of single or double quotes. Run it through a formatter like black or ruff. The best case is that the reviewer doesn't notice or thinks it's a bit sloppy, the worst case is that they assume you copied it from two different websites.

- You don't have any type annotations in your arguments or on your return values. Help your IDE help you.

- Your unit tests check that an answer was returned, but stop short of actually seeing if that answer is correct. Constructing a tiny fake dataset with some known answers for your unit tests is a great way to validate that, and it seems like that's what you did from the filenames, but it seems unfinished.

It's clear that you've seen good code before and have some idea of what it should look like when trying to write it for yourself, but you're missing fundamentals and experience that will allow you to actually write it. To be clear this is a fine place to be for a junior. If this is for a junior role, submitted as-is it's a hire from me but there's a lot of headroom for another junior to impress me over this submission.
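To make the points about docstrings, type annotations and known-answer tests concrete, here is a rough sketch. It deliberately swaps pyspark for plain Python so it runs anywhere, so treat it as an illustration of the shape, not of the Spark API. The argument layout and the tiny dataset are invented for the example; only the name top_genre_by_user comes from the suggestion above.

```python
from collections import Counter, defaultdict


def top_genre_by_user(
    ratings: list[tuple[int, int]],
    movie_genres: dict[int, list[str]],
) -> dict[int, str]:
    """Find the genre each user has rated most often.

    Args:
        ratings: (user_id, movie_id) pairs, one per rating.
        movie_genres: Maps movie_id to that movie's list of genres.

    Returns:
        A dict mapping user_id to that user's most-rated genre. Ties go to
        the genre encountered first in the input.

    Raises:
        KeyError: If a rating refers to a movie_id missing from movie_genres.
    """
    counts: dict[int, Counter] = defaultdict(Counter)
    for user_id, movie_id in ratings:
        counts[user_id].update(movie_genres[movie_id])
    return {user: c.most_common(1)[0][0] for user, c in counts.items()}


# Tiny fake dataset where the right answer is obvious by inspection.
movie_genres = {1: ["Comedy"], 2: ["Comedy", "Drama"], 3: ["Horror"]}
ratings = [(10, 1), (10, 2), (10, 3), (20, 3), (20, 3), (20, 1)]

result = top_genre_by_user(ratings, movie_genres)
assert result == {10: "Comedy", 20: "Horror"}  # checks the answer, not just that one exists
print(result)
```

The same idea carries over to the Spark version: build a handful of rows in the test, run the function, and compare against answers worked out by hand.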

|

Apr 27, 2024 00:36

|

|

- QuarkJets

- Sep 8, 2008

-

|

I don't like how the function code is all wrapped in large try/except blocks that raise new errors. That's not really error checking, it's error obfuscation; yes, you print the caught exception object, but it's hiding the stack trace for no good reason. If you absolutely felt like you had to add more context to exceptions, like if you wanted to use logging or send a notification to someone, then you could catch the exception, do that, and re-raise the original exception

Python code:
import logging

def test():
    try:
        raise RuntimeError('foo')
    except RuntimeError as e:
        logging.warning(f'gently caress, I saw exception {e}!!!')
        raise  # <-- This simple line raises the original exception as though it wasn't caught at all
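If you really do want a different exception type with a friendlier message, "raise ... from e" keeps the original attached as the cause. A small sketch; load_config and the file path are hypothetical names made up for the example:

```python
def load_config(path: str) -> str:
    """Read a file, adding context to failures without losing the original error."""
    try:
        with open(path) as handle:
            return handle.read()
    except OSError as e:
        # "from e" records the original exception as __cause__, so the
        # traceback shows it as the direct cause of the new error.
        raise RuntimeError(f"Failed to load config from {path!r}") from e


try:
    load_config("/no/such/file.cfg")
except RuntimeError as err:
    print(type(err.__cause__).__name__)
```

Catching only the exceptions you expect (OSError here, rather than a bare Exception) also keeps unrelated bugs from being relabeled.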

Don't run pip install commands in your notebook code. A comment that describes what's needed is fine.

|

Apr 27, 2024 03:15

|

|

- Seventh Arrow

- Jan 26, 2005

-

|

Thanks guys, much appreciated! I will look into your suggestions.

|

Apr 27, 2024 06:03

|

|


|

|

- StumblyWumbly

- Sep 12, 2007

-

Batmanticore!

|

Any recommendations for places to start with interfacing dlls and python?

I'm interested in playing around with this and trying to make some file parsing stuff faster by reading binary data in c, then passing it to python for the higher level stuff.

I've used ctypes before, but I'm under the impression dll stuff has changed in the last 4 versions or so, and I'm worried searching will suggest bad habits.

|

Apr 27, 2024 15:33

|

|