I'm confused as to what you are asking, but say you combine the rotation and translation matrices of the first bone into M1: M1 = T1*R1. Then the end of your tentacle is at V1 = M1 * V0. You can rotate the next bone around its center via M2 = M1 * R2, or you can rotate the transformed point via M2 = R2 * M1. Which do you want? Or you can just build the matrix yourself. Think of the first 3 columns as the axes of a coordinate space (the first is the x-axis, the second the y-axis, and so on); that lets you define which direction the bone is facing. The last column is the position.
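A minimal C++ sketch of the two ideas in the post above, assuming column vectors (p' = M * p) and a row-major m[row][col] array. `Vec3`, `Mat4`, `makeFrame`, `mul` and `transformPoint` are hypothetical names, not from any library. `makeFrame` fills the first three columns with the bone's axes and the last column with its position, and the two products show why M1 * R2 spins a point about the bone's own origin while R2 * M1 spins the already-moved point about the parent's origin.

```cpp
#include <array>
#include <cassert>

struct Vec3 { float x, y, z; };

// 4x4 matrix stored as m[row][col], used with column vectors: p' = M * p.
using Mat4 = std::array<std::array<float, 4>, 4>;

// Build a bone matrix from its frame: columns 0-2 hold the bone's x, y and z
// axes expressed in the parent's space, column 3 holds the bone's position.
Mat4 makeFrame(Vec3 xAxis, Vec3 yAxis, Vec3 zAxis, Vec3 position) {
    const Vec3 cols[4] = {xAxis, yAxis, zAxis, position};
    Mat4 m{};
    for (int c = 0; c < 4; ++c) {
        m[0][c] = cols[c].x;
        m[1][c] = cols[c].y;
        m[2][c] = cols[c].z;
        m[3][c] = (c == 3) ? 1.0f : 0.0f;  // bottom row is (0, 0, 0, 1)
    }
    return m;
}

// Ordinary matrix product; with column vectors the right-hand matrix b is
// applied to the point first.
Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Transform a point (implicit w = 1); only meant for affine matrices.
Vec3 transformPoint(const Mat4& m, Vec3 p) {
    assert(m[3][0] == 0.0f && m[3][1] == 0.0f && m[3][2] == 0.0f && m[3][3] == 1.0f);
    return {
        m[0][0] * p.x + m[0][1] * p.y + m[0][2] * p.z + m[0][3],
        m[1][0] * p.x + m[1][1] * p.y + m[1][2] * p.z + m[1][3],
        m[2][0] * p.x + m[2][1] * p.y + m[2][2] * p.z + m[2][3],
    };
}
```

With M1 a translation of 2 along x and R2 a 90-degree turn about z, the point (1, 0, 0) lands at (2, 1, 0) under mul(M1, R2) but at (0, 3, 0) under mul(R2, M1).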

| # ? Apr 19, 2024 03:17 |

A little while ago I asked about the performance of branching in shaders (thanks for the answers, by the way), but I'd like to know where to find that sort of info myself. I'm guessing this is GPU-dependent and outside the scope of specific APIs, which would explain why I don't recall anything about it in the D3D10 documentation. A quick Google search doesn't return anything useful either. I've found some info on NVIDIA's website, but it's mostly about how some GPUs support dynamic branching and says very little about performance. Ideally I'd like something that goes into some detail about modern GPU architectures, so I can really know why and when it's not a good idea to use branching in shaders, preferably with actual performance data shown. I guess the real answer here would be to write some code and test the drat thing myself, but I'm wary of generalizing whatever results I'd get without a better understanding of the underlying hardware.

Spite posted:
I'm confused as to what you are asking, but say you combine the rotation and translation matrices of the first bone into M1.

Now, the left diagram is the 'bind pose' of the tentacle; note that in the red 'mesh', point P1 is already beyond the tip of bone 3. For simplicity's sake, that point will be skinned 100% onto bone 3. What I need is the single matrix for bone 3 that would transform P1 into its new position in the second diagram, when the bones have rotations added. Neither of the things you propose does that. A simple proof: when the bones aren't rotated (i.e. we're still in bind pose), M1, M2 and M3 should all be identity matrices, since no mesh points need to be moved, but both of your versions of M2 (even before adding in the third bone) would be a translation up the length of bone 1. Those are the transformations I already have, which I think are the same ones you'd get from the XNA function Model.CopyAbsoluteBoneTransformsTo. I'm now going to try the equivalent of the totally unintuitive XNA code I mentioned last night.

Maybe I'm missing something, but why not translate so that the tip of bone 3 is the origin (in the 'bind pose' - so that would be (L3*L2*L1)^-1 ), then perform the standard transform L3*R3*L2*R2*L1*R1?

Hobnob posted:
Maybe I'm missing something, but why not translate so that the tip of bone 3 is the origin (in the 'bind pose' - so that would be (L3*L2*L1)^-1 ), then perform the standard transform L3*R3*L2*R2*L1*R1?

I'm happy to do crazy differentiation of quartic equations for collision detection, but this stuff just remains really alien and opaque to me. It doesn't help that the DirectX docs do a really poor job of explaining exactly what happens with the bone matrices. If I have it figured right now, if you have 2 weights in your vertex, 0.3 of worldmatrix4 and 0.3 of worldmatrix5, then the end result would be 0.4*world + 0.3*worldmatrix4 + 0.3*worldmatrix5. Is this right?
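Nobody in the thread confirms the weighting rule, so the sketch below only encodes the poster's reading as an assumption: the explicitly stored weights apply to the listed matrices, and whatever is left over (1 minus their sum) goes to one remaining matrix, here the base world matrix placed last. This is plain linear blend skinning in C++; `blendVertex` and the other names are hypothetical, and which matrix actually receives the leftover weight in D3D's vertex blending should be checked against the DirectX documentation.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// 3x4 affine matrix, row-major, used with column vectors: p' = M * (p, 1).
using Mat3x4 = std::array<std::array<float, 4>, 3>;

Vec3 apply(const Mat3x4& m, Vec3 p) {
    return {
        m[0][0] * p.x + m[0][1] * p.y + m[0][2] * p.z + m[0][3],
        m[1][0] * p.x + m[1][1] * p.y + m[1][2] * p.z + m[1][3],
        m[2][0] * p.x + m[2][1] * p.y + m[2][2] * p.z + m[2][3],
    };
}

// Linear blend of one vertex. weights[i] applies to matrices[i]; the final
// matrix gets the implied weight 1 - sum(weights) (an assumption, see above).
Vec3 blendVertex(const std::vector<Mat3x4>& matrices,
                 const std::vector<float>& weights, Vec3 p) {
    assert(matrices.size() == weights.size() + 1);
    Vec3 out{0.0f, 0.0f, 0.0f};
    float remaining = 1.0f;
    for (std::size_t i = 0; i < matrices.size(); ++i) {
        const bool implied = (i == weights.size());
        const float w = implied ? remaining : weights[i];
        const Vec3 q = apply(matrices[i], p);  // vertex moved fully by this matrix
        out.x += w * q.x;
        out.y += w * q.y;
        out.z += w * q.z;
        if (!implied) remaining -= w;
    }
    return out;
}
```

With the post's numbers (0.3 and 0.3, leaving 0.4), a vertex at the origin that one matrix moves 10 units along x and the other moves 10 units along y ends up at roughly (3, 3, 0), while the unmoving world matrix contributes nothing.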

YeOldeButchere posted:
A little while ago I asked about the performance of branching in shaders ... preferably with actual performance data shown.

That's all really proprietary stuff, so I doubt you'd get the actual numbers. On modern cards, I think it's on the order of 2000-4000 pixels for branching and discard. So if you can reasonably assume a block of that size will go down the same path, you'll get the branch prediction benefit. Prediction misses are really expensive, so if you have a very discontinuous scene (in terms of code path), your perf will tank. Of course, testing is the best way to determine this, as you say.

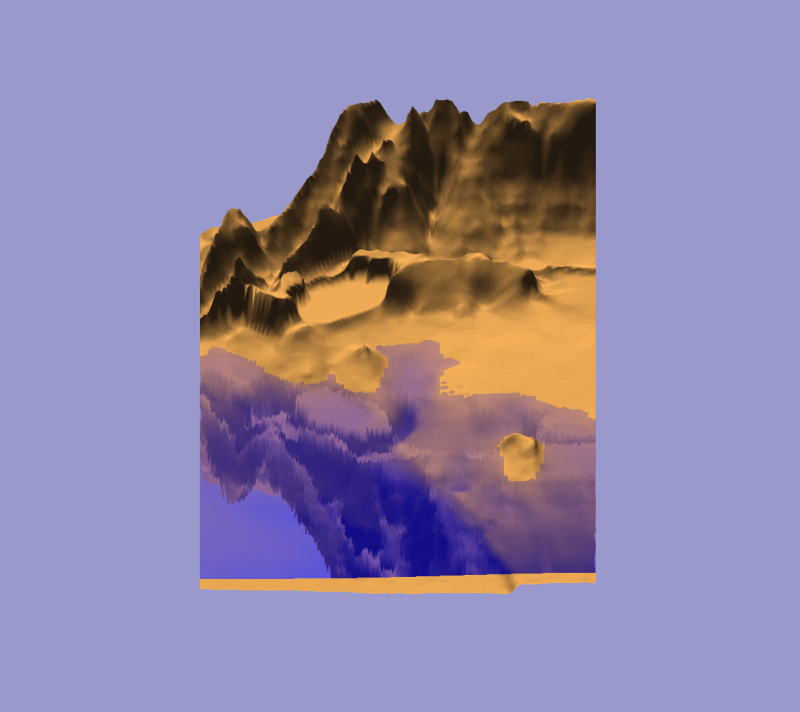

edit: Never mind, now I'm wondering why the hell noise() is always returning 0.0. Apparently it's designed that way. What the christ good is that? edit2: Victory! Sorta. The reflection isn't quite right.

YeOldeButchere posted:
A little while ago I asked about the performance of branching in shaders ... preferably with actual performance data shown.

It's going to really vary, not just from vendor to vendor but from chip to chip. If you want to test it yourself, you probably want to test (a) static branches, (b) branches off of constant buffer values, and (c) dynamic branches (either on interpolants or on texture lookups) at varying frequencies. It's really hard for you to test, though, because it's not obvious how the compiler will turn the branch into assembly (as a conditional move versus an actual branch).

Another strange problem. I'm wondering if someone knows a solution off the top of their head or has had similar experiences; if not, I can dig around more. Anyway, I initialize my GLUT window thing like this:
[code]
Anyway, if I try to put the game into full screen mode by replacing the above code with this:
[code]
edit: Even if I don't enter game mode, but just create a window and then call glutFullScreen, the CPU still goes wild, hmm. If I don't go full screen but make a window that's as big as my screen, the CPU usage stays low.

^^^^ I noticed a similar thing with DirectX, though my framerate went wild too. Are you measuring your framerate by counting passes through your code, or are you asking OpenGL for a framerate? For me, the difference is that the 'present' call returns instantly in full screen (even when directed to wait for a vsync, it's just waiting before displaying, not waiting before returning), but in a window it waits for a vsync before returning. So while it's 60FPS in actual frames, it's 300 passes through the code (or whatever).

My loving problem with the skeleton is still not resolved. Here's a sample moment with actual numbers. The 'left forearm' bone has its bind-time inverse matrix thus:
[code]
[code]
Now, the final translation for the bone I set as:
[code]
The end result is that it's rotating around the wrong point, and on the wrong axis. If I make a rotation around the bone's own axis (Y) on the torso, it twists like it should, but a Y-axis rotation on the arm makes it rotate around a different axis, presumably because of the shoulder rotation below. The 'origin' it's rotating around is somewhere weird too, definitely not 0,0,0, nor the base of the bone. What am I doing wrong? (Note: everything is where it should be when the bones are back in bind position.)

Edit: gently caress, is the absolute bone matrix supposed to be comprised of just the rotations-in-place and no translations? If so, how do I calculate one of those, given that what I have to work with is lengths and rotations?

YeOldeButchere posted:
A little while ago I asked about the performance of branching in shaders ... preferably with actual performance data shown.

Given the incredibly long pipelines that GPUs have, it should make sense that branching will be painful. The original fixed-function pipelines could be hundreds of stages long. Obviously, if you have to flush or stall on that, you are going to lose significant throughput.

Is there a way to transform a heightmap with non-planar quads into one with planar quads?

UraniumAnchor posted:
edit: Never mind, now I'm wondering why the hell noise() is always returning 0.0. Apparently it's designed that way. What the christ good is that?

As a note, no GPU implements noise() in hardware. So don't ever use it.

At loving last! I finally got sick of trial-and-erroring my matrices and did a bit of simple pen-and-paper math to figure out exactly the order of transformations that would get the right results, got it going in that order, and thus found that my transformations were still askew even with the matrices done right. Why? Because the mesh was attached to the third bone of the skeleton, so I needed to offset the mesh 'base' by the position of those two bones before doing any of the matrix math. Which is to say, I'd been totally barking up the wrong tree for the last three days. Just in case anyone else needs the same matrix knowledge, the answer to what the bone matrix is for bone 3, in a row of 3 bones, is:
[code]
Also probably worth mentioning that M3 = R3 * T2 * R2 * T1 * R1 is the same as M3 = R3 * T2 * M2, which is handy when you're iterating or recursing through the tree.
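A C++ sketch of that recursion, assuming D3D-style row vectors (p' = p * M, so the leftmost matrix in a product is applied first), bones stacked along +y, and rotations only about z to keep it short. Every name here (`chainMatrices`, `skinPoint` and so on) is hypothetical. `chainMatrices` builds M_k = R_k * T_(k-1) * M_(k-1), and `skinPoint` applies the offset the post describes: the bind-pose point is first moved so that the base of its bone is the origin.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Row-vector convention (D3D style): p' = p * M, translation in the bottom
// row, and in a product A * B the left matrix A is applied first.
using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 translation(float x, float y, float z) {
    Mat4 m = identity();
    m[3][0] = x; m[3][1] = y; m[3][2] = z;
    return m;
}

Mat4 rotationZ(float radians) {
    Mat4 m = identity();
    const float c = std::cos(radians), s = std::sin(radians);
    m[0][0] = c;  m[0][1] = s;
    m[1][0] = -s; m[1][1] = c;
    return m;
}

Vec3 transformPoint(Vec3 p, const Mat4& m) {
    return {
        p.x * m[0][0] + p.y * m[1][0] + p.z * m[2][0] + m[3][0],
        p.x * m[0][1] + p.y * m[1][1] + p.z * m[2][1] + m[3][1],
        p.x * m[0][2] + p.y * m[1][2] + p.z * m[2][2] + m[3][2],
    };
}

// Bones stacked along +y: angles[k] is the z rotation at the base of bone k+1
// and lengths[k] is that bone's length. Returns M_1..M_n, where
// M_1 = R_1 and M_k = R_k * T_(k-1) * M_(k-1).
std::vector<Mat4> chainMatrices(const std::vector<float>& angles,
                                const std::vector<float>& lengths) {
    assert(lengths.size() + 1 >= angles.size());
    std::vector<Mat4> out;
    Mat4 parent = identity();
    for (std::size_t k = 0; k < angles.size(); ++k) {
        Mat4 local = rotationZ(angles[k]);
        if (k > 0) local = mul(local, translation(0.0f, lengths[k - 1], 0.0f));
        parent = mul(local, parent);
        out.push_back(parent);
    }
    return out;
}

// Skin a bind-pose point onto one bone: shift it so the bone's base joint is
// the origin (jointBindY is that joint's height in the bind pose), then apply M_k.
Vec3 skinPoint(Vec3 bindPoint, float jointBindY, const Mat4& boneMatrix) {
    const Vec3 local{bindPoint.x, bindPoint.y - jointBindY, bindPoint.z};
    return transformPoint(local, boneMatrix);
}
```

In the bind pose (all angles zero) the point comes back unchanged, which is the identity check from earlier in the thread; rotating only the root by 90 degrees swings a point at (0, 3.5, 0), skinned to bone 3 of three unit-length bones, to about (-3.5, 0, 0).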

Spite posted:
As a note, no GPU implements noise() in hardware. So don't ever use it.

I figured this out and grabbed an actual implementation of it. I hope one of the later specs says that returning 0.0 all the time is NOT a valid noise generator.
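The implementation the poster grabbed isn't named, so here is a hypothetical stand-in: 2D value noise, which hashes the four lattice corners around a point and blends them with a smoothstep curve. It's written in C++ for readability; the same arithmetic could be ported to a shader that supports integer operations. The hash constants are an arbitrary integer mix chosen for this sketch, not a standard.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical integer hash: an arbitrary multiply/xor-shift mix of the
// lattice coordinates, mapped to [0, 1]. Any reasonable hash would do.
float latticeHash(std::int32_t x, std::int32_t y) {
    std::uint32_t h = static_cast<std::uint32_t>(x) * 374761393u +
                      static_cast<std::uint32_t>(y) * 668265263u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return static_cast<float>(h & 0xFFFFFFu) / 16777215.0f;
}

// Smoothstep fade curve so the noise has no visible creases at cell edges.
float fade(float t) { return t * t * (3.0f - 2.0f * t); }

// 2D value noise: hash the four corners of the lattice cell containing
// (x, y) and blend them with the fade curve. The result stays within
// [0, 1] (up to float rounding).
float valueNoise(float x, float y) {
    const float fx = std::floor(x), fy = std::floor(y);
    const std::int32_t ix = static_cast<std::int32_t>(fx);
    const std::int32_t iy = static_cast<std::int32_t>(fy);
    const float tx = fade(x - fx), ty = fade(y - fy);
    const float a = latticeHash(ix, iy), b = latticeHash(ix + 1, iy);
    const float c = latticeHash(ix, iy + 1), d = latticeHash(ix + 1, iy + 1);
    const float lowerRow = a + (b - a) * tx;  // along x at y = iy
    const float upperRow = c + (d - c) * tx;  // along x at y = iy + 1
    return lowerRow + (upperRow - lowerRow) * ty;
}
```

At integer coordinates the noise equals the corner hash (with this particular hash, valueNoise(0, 0) is 0 and valueNoise(1, 0) is about 0.08), and points in between are smooth blends of their corners, unlike a noise() that returns 0.0 everywhere.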

roomforthetuna posted:
skeletal animation stuff

You probably want to use 3x4 matrices. They're easy to invert because they're always rotate-translate, so you can just translate in the inverted rotation's space. If you need to steal some code to do this, you're welcome to steal terMat3x4 from my project.

Anyway, any vertex or bone is always going to be expressed in either WORLD SPACE or the BONE SPACE of whatever it belongs to. Parentless bones belong to the world, so they are always in world space. You can convert between the two, and what you really want is to convert vertices out of world space (in their base pose) into bone space, then back into world space using the CURRENT bone poses instead of the base pose.

To convert anything from bone space into world space, concatenate it with the WORLD-SPACE transform matrix of what it belongs to. To do the reverse, concatenate it with the inverse of that matrix.

The most common approach to skeletal animation is matrix palette, which works as follows:
- Compute the world-space transform matrices for each bone in the base pose.
- Take the inverse of that, which gives you the world-to-bone-space matrices.

When rendering:
- Compute the world-space transform matrices for each bone.
- Transform each vert out of its base pose into bone space.
- Transform the bone space coordinate into world space based on the CURRENT pose.

For those two transforms, what you want is, essentially:
boneSpaceCoord = baseWorldSpaceCoord * Inverse(baseBonePoseWorldSpace)
currentWorldSpaceCoord = boneSpaceCoord * currentBonePoseWorldSpace

Because matrix multiplies are associative, you can concatenate Inverse(baseBonePoseWorldSpace) * currentBonePoseWorldSpace to produce a single matrix for transforming anything from that bone's base pose to its current position. So:
baseToCurrentMatrix = Inverse(baseBonePoseWorldSpace) * currentBonePoseWorldSpace
currentWorldSpaceCoord = baseWorldSpaceCoord * baseToCurrentMatrix

Maybe I'll write up an article on this poo poo for Code Deposit, since people get confused by this poo poo pretty easily.
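A C++ sketch of the cheap rigid inverse and the base-to-current matrix, following the post's row-vector style (coord * matrix, so `concat(a, b)` means "a, then b"). `Rigid`, `inverseRigid`, `concat` and `baseToCurrent` are hypothetical names, not terMat3x4's API. The inverse transposes the rotation and pushes the negated translation through it, which is what translating "in the inverted rotation's space" amounts to.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Rigid (rotate-then-translate) transform for row vectors: p' = p * r + t.
// This is the 3x4-style storage the post recommends: a 3x3 rotation plus a translation.
struct Rigid {
    float r[3][3];
    Vec3 t;
};

Vec3 apply(const Rigid& m, Vec3 p) {
    return {
        p.x * m.r[0][0] + p.y * m.r[1][0] + p.z * m.r[2][0] + m.t.x,
        p.x * m.r[0][1] + p.y * m.r[1][1] + p.z * m.r[2][1] + m.t.y,
        p.x * m.r[0][2] + p.y * m.r[1][2] + p.z * m.r[2][2] + m.t.z,
    };
}

// "a, then b": apply(concat(a, b), p) == apply(b, apply(a, p)).
Rigid concat(const Rigid& a, const Rigid& b) {
    Rigid out{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                out.r[i][j] += a.r[i][k] * b.r[k][j];
    out.t = apply(b, a.t);  // a's translation, carried through b
    return out;
}

// Cheap inverse for rotate-translate matrices: the inverse of a rotation is its
// transpose, and the translation is negated after being rotated into that space.
Rigid inverseRigid(const Rigid& m) {
    Rigid inv{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            inv.r[i][j] = m.r[j][i];
    const Vec3 rotated = apply(inv, m.t);  // inv.t is still zero here
    inv.t = {-rotated.x, -rotated.y, -rotated.z};
    return inv;
}

// The per-bone "deform" matrix: Inverse(baseBonePoseWorldSpace) * currentBonePoseWorldSpace.
Rigid baseToCurrent(const Rigid& basePose, const Rigid& currentPose) {
    return concat(inverseRigid(basePose), currentPose);
}
```

In the test values the base pose turns the bone 90 degrees about z and puts it at (1, 2, 0), and the current pose is unrotated at (5, 0, 0). A vertex at (1, 3, 0) in the base pose (one unit along the bone's own x axis) ends up at (6, 0, 0), one unit along x from the bone's new position.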

I'm glad you didn't post that sooner, because I would have been utterly confused by wanting to convert into world space and inverse world space. To me, world space means that if you're rendering a 2x2x2 cube centered at 50,50,50, then its corners are at 49,49,49 etc. I've mostly seen "absolute" for what I think you're calling world space, because it's centered on 0,0,0. And even aside from that, it wouldn't have helped me, because I was previously not clear on what transform constitutes "currentBonePoseWorldSpace", which is why I was asking specifically for the full sequence of transforms in terms of individual bone lengths and rotations. Actually, I still don't know what your boneSpaceCoord and currentWorldSpaceCoord equations are about. If you're going to do an article, I'd suggest a better explanation of what your terms and variables are, and also including mention that:
1. Any transform necessary to get the mesh fitted in place on the skeleton goes at the start of the transform - for the position calculation but not for the inverse matrix.
2. Any transform to get the whole skeletal object into its place in your world goes at the end of the skeletal transform, and must be done for every bone matrix.

roomforthetuna posted:
If you're going to do an article I'd suggest a better explanation of what your terms and variables are, and also including mention that:

Think of it this way: if you were to define a rotation and translation that would say, relative to the corner of your room, where your elbow was, you should be able to compute, relative to that, where your wrist is. It's like a foot and a half forward or something. If your elbow ever moves or rotates within the room, you can always compute your wrist's location from that. You don't really need to know where any other part of your body is, because your wrist is at a fixed position relative to your elbow. The reason you do things this way is so you don't have to do matrix mults for every bone in the hierarchy; you only need ONE matrix multiply.

You'd say then that "a foot and a half forward" is the bone-space location of your wrist.

Normally, what you have is a base pose, where all of the bones are in pre-set locations and all of the verts are in pre-set locations. If you see character models in a sort of Vitruvian Man pose, that's the base pose. So I guess you could say you'd be calculating this:
wbaseR2L2 = baseR1L1*baseR2L2
wbaseR3L3 = baseR2L2*baseR3L3
wR2L2 = R1L1*R2L2
wR3L3 = R2L2*R3L3
P1 = baseP1*(Inverse(wbaseR3L3)*wR3L3)

Inverse(wbaseR3L3)*wR3L3 is the "deform" matrix and can be recycled for every vert attached to R3L3.

OneEightHundred posted:
wbaseR2L2 = baseR1L1*baseR2L2

Also, not sure if it makes a difference when you're multiplying by transform-and-inverse, but when something is attached to bone 2 you shouldn't be going up the length of bone 2 - a point right by your wrist is not at your fingertips. Actually, just tried this, and yeah, it does make a difference: everything is all screwed up if you do R2L2 * R1L1. It is R2 * L1R1. This is assuming that R1 and L1 refer to the rotation of the joint at the base of bone 1 and the length of bone 1. Maybe your R1 refers to the rotation at the tip of bone 1, in which case you might be right, but I've never seen anyone doing it that way.

quote:
Inverse(wbaseR3L3)*wR3L3 is the "deform" matrix and can be recycled for every vert attached to R3L3.

(Also, I see your matrix multiply operator must be taking the operands in the same order as mine, since you put the inverse first here.)

quote:
The reason you do things this way is so you don't have to do matrix mults for every bone in the hierarchy, you only need ONE matrix multiply

Thanks for saving me some multiplies there.

roomforthetuna posted:
(Unless your matrix multiply operator takes its operands in a different order than mine, I guess!)

So either way is valid, as long as you're consistent. (I actually do both, because SSE likes them one way and vertex shaders like them the other!)

quote:
Also, not sure if it makes a difference when you're multiplying by transform-and-inverse, but when something is attached to bone 2 you shouldn't be going up the length of bone 2 - a point right by your wrist is not at your fingertips.

Don't think of them as bones, think of them as joints. Your fingertip bones are actually fixed relative to the joint they're attached to, and your fingernails (for example) rotate exclusively around that joint. So it only makes sense to treat your fingernails as relative to the joint, rather than as an arbitrary thing that is attached to that joint.
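A small C++ check of the "either way is valid" point, using hypothetical helper names: the same transform can be stored for row vectors (v * A * B, D3D style) or, transposed, for column vectors, where the product order flips to B^T * A^T * v. Both give the same result, so only consistency matters.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<float, 4>, 4>;
using Vec4 = std::array<float, 4>;

Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 transpose(const Mat4& m) {
    Mat4 t{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            t[i][j] = m[j][i];
    return t;
}

// Row-vector convention (D3D style): v' = v * M.
Vec4 rowTimes(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int j = 0; j < 4; ++j)
        for (int k = 0; k < 4; ++k)
            r[j] += v[k] * m[k][j];
    return r;
}

// Column-vector convention: v' = M * v.
Vec4 timesColumn(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}
```

In the test values A is a translation by (1, 2, 3) in row-vector layout and B a 90-degree turn about z; both conventions take (1, 0, 0, 1) to (-2, 2, 3, 1).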

heeen posted:

You're using Cg? It tries to emulate DirectX by offering "profiles"; unfortunately, they don't expose all the card's features (for example, it requires gpu_program4 instead of gpu_shader4). What are you trying to use texture_gather for? Shadow maps?

I came across this ebook on the Real Time Rendering site - any thoughts on it? On one hand, Wolfgang Engel is one of the authors, but it's so terribly written that I'm wary of trusting any of the technical details... Also, it looks like there's a second edition of Physically Based Rendering coming out! As I recall, one of the authors used to post on these here forums; is he still around here? If so, any thoughts on the new edition?

Dijkstracula posted:
I came across this ebook on the Real Time Rendering site - any thoughts on it? On one hand, Wolfgang Engel is one of the authors, but it's so terribly written that I'm wary to trust any of the technical details...

THE NEW EDITION IS TEN ZILLION HILLION SKILLION TIMES BETTER AND YOU SHOULD ALL BUY THREE COPIES EACH. (Author checking in.)

OpenGL noob here, hoping that someone could give me a bit of help. Alright, so I want to make a really simple 2D graphics engine with OpenGL. Basically, it would allow you to load in a texture (e.g. a .png using libpng, most likely in RGBA format), apply it to a polygonal square or rectangle, and rotate or scale it, of course. I've been trying to read the OpenGL Red Book chapter on texturing, but my mind is seemingly unable to handle the concept of simply putting a texture (or part of one, for animation purposes) on a single quadrilateral polygon. Can anyone give me a little help here? Also, is there anything else I have to do (reformat the image, take byte ordering into account, change OGL settings or use glEnable/glDisable, etc.) to get this to work properly? I can't imagine this could be that hard. I have managed to get a polygon drawn to the screen (I'm using GLFW, by the way), and I have a proper real-time loop set up (GLFW doesn't take over main() like GLUT does). I'm not a complete newbie at OpenGL, but I'm pretty close.

I'm not sure what your skill level is, but all textures need texture coordinates, which are values that determine the texture mapping. A mapping from 0 to 1.0 will give the entire texture in a specific dimension, whereas 0 to 0.5 will give half of it, etc. Usually this means you have two coordinates per vertex of a quad, for the x and y dimensions. They are usually called s and t.

Do you know how VBOs work? You'll need to set up your data in VBOs (please don't use Begin/End). You'll also need to generate a texture object (glGenTextures) and upload the data to the GPU via glTexImage2D. You can use glTexParameteri to set the filtering modes. There are magnification filters (to magnify) and minification filters (to minify). GL_LINEAR is bilinear filtering and GL_LINEAR_MIPMAP_LINEAR is trilinear (which only makes sense for a minification filter).

Then set up the VBOs:
glBindBuffer(GL_ARRAY_BUFFER, vbo);
glVertexPointer(3, GL_FLOAT, 0, NULL);
glBindBuffer(GL_ARRAY_BUFFER, tcVBO);
glTexCoordPointer(2, GL_FLOAT, 0, NULL);

Turn on state:
glEnableClientState(GL_VERTEX_ARRAY);
glEnableClientState(GL_TEXTURE_COORD_ARRAY);

Bind the texture:
glBindTexture(GL_TEXTURE_2D, tex);
glEnable(GL_TEXTURE_2D);

Draw:
glDrawArrays(GL_QUADS, 0, 4);

Try the Superbible/blue book instead of the red book, because the red book is old.
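Since the question also asked about putting part of a texture on a quad for animation, here is a hedged C++ sketch of the texture-coordinate side only. It assumes a sprite sheet laid out as a grid of equally sized frames, numbered left to right starting from the t = 0 row; `frameRect` and `quadTexCoords` are hypothetical helpers, and the resulting array is what you would upload to the texture-coordinate VBO bound before glTexCoordPointer(2, GL_FLOAT, 0, NULL).

```cpp
#include <array>
#include <cassert>

// s runs left to right and t bottom to top, each over [0, 1].
struct TexRect { float s0, t0, s1, t1; };

// Texture rectangle for one frame of a sprite sheet made of cols x rows equal
// cells, with frames numbered left to right starting from the t = 0 row.
TexRect frameRect(int frame, int cols, int rows) {
    assert(cols > 0 && rows > 0 && frame >= 0 && frame < cols * rows);
    const float w = 1.0f / cols, h = 1.0f / rows;
    const int cx = frame % cols, cy = frame / cols;
    return {cx * w, cy * h, (cx + 1) * w, (cy + 1) * h};
}

// (s, t) for the quad's corners in the order bottom-left, bottom-right,
// top-right, top-left; this array is what would go into the texture-coordinate VBO.
std::array<float, 8> quadTexCoords(const TexRect& r) {
    return {r.s0, r.t0, r.s1, r.t0, r.s1, r.t1, r.s0, r.t1};
}
```

For a sheet with two frames side by side, frame 1 covers s from 0.5 to 1.0 and the full t range, matching the "0 to 0.5 gives half" rule above. Note that t = 0 corresponds to the first row of texel data passed to glTexImage2D, so an image stored top row first (as PNG files are) will appear upside down unless you flip the rows or the t values.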

Spite posted:Try the Superbible/blue book instead of the red book, because the red book is old.

Spite posted:
I'm not sure what your skill level is, but all textures need Texture Coordinates, which are values that determine the texture mapping. ... They are usually called s and t.

Blue book has been replaced by a reference website. But anyway, I actually found that Nate Robins' site was very helpful; being able to play with the values manually helped a lot. Thank you, though, especially for the bit about VBOs. I'm sure I can figure out how they work.

I swear if you could find a D3D book not overly reliant on D3DX then that would probably be a better way of learning OpenGL than any OpenGL book at this point.

OneEightHundred posted:I swear if you could find a D3D book not overly reliant on D3DX then that would probably be a better way of learning OpenGL than any OpenGL book at this point.

I'm writing a little program with a top-down camera (like Diablo) and I want to add some shadows. Is shadow volume or shadow mapping a better option for this? I'm concerned shadow mapping will produce aliasing effects since the light sources will be close to the ground but cast long shadows, but I've read in this thread that shadow volumes are more or less unused these days?

Dijkstracula posted:
Honestly, the optimal solution is to build a time machine and flash forward two years, when this promising tutorial is finished

Learning OpenGL ES 2.0 is also a relatively good choice, since as far as I know it doesn't support most of the deprecated features or the fixed-function pipeline.

tractor fanatic posted:
I'm writing a little program with a top-down camera (like Diablo) and I want to add some shadows. ... I've read in this thread that shadow volumes are more or less unused these days?

There are some specific situations where shadow volumes are useful, but for the most part they are not really used. You can filter your shadow maps to reduce aliasing effects, or increase the size of your shadow map buffer. If they are good enough for AAA titles, then they should be good enough for you.
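Percentage-closer filtering (PCF) is one common way to do that filtering. The C++ sketch below shows the idea on the CPU with a hypothetical `ShadowMap` struct: rather than a single lit/shadowed depth comparison, it runs the comparison over a small neighbourhood and averages the results, which softens the stair-stepped edges. In a real renderer this runs in the pixel shader; the kernel radius and bias here are arbitrary values.

```cpp
#include <cassert>
#include <vector>

// Hypothetical CPU-side shadow map: light-space depths, row-major.
struct ShadowMap {
    int width, height;
    std::vector<float> depth;
    float at(int x, int y) const {
        // Clamp to the edge so the filter kernel may hang over the border.
        x = x < 0 ? 0 : (x >= width ? width - 1 : x);
        y = y < 0 ? 0 : (y >= height ? height - 1 : y);
        return depth[y * width + x];
    }
};

// Percentage-closer filtering: do the depth comparison for every texel in a
// (2*radius+1)^2 neighbourhood and return the fraction that passes, i.e.
// how lit the fragment is, from 0 (fully shadowed) to 1 (fully lit).
float pcfLit(const ShadowMap& map, int x, int y, float fragmentDepth,
             int radius = 1, float bias = 0.001f) {
    assert(radius >= 0);
    int lit = 0, total = 0;
    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            // Lit if nothing closer to the light was recorded at this texel;
            // the bias keeps surfaces from shadowing themselves.
            if (fragmentDepth - bias <= map.at(x + dx, y + dy)) ++lit;
            ++total;
        }
    }
    return static_cast<float>(lit) / static_cast<float>(total);
}
```

On a map whose left half is occluded, a texel on the boundary comes out a third lit instead of flipping hard between 0 and 1.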

Dijkstracula posted:
Sadly, the first half of the Superbible still uses immediate mode / BEGIN/END, so it's only marginally better

That's because Benj has decided he's never writing a book again. I'd love to spend a few months writing a really good tutorial, but I think my employer may frown on that.

As for shadows, it depends on what you are targeting and what you are doing. Very old hardware and mobile phones with little VRAM will probably benefit from shadow volumes if you want to save memory. They also tend to have crappy fill rate, though, which puts you in a bind, since that technique burns fill. Shadow mapping is the most common implementation, but keep in mind it's harder to get correct shadows from a light the player can walk all the way around. Think of a brazier or campfire: since you have to draw from the light's POV, you'd have to draw the scene 4-6 times to get all the info you need to do the shadowing. Or you could try something wonky with a 360-degree FOV, but I've never attempted that myself. Or just have certain objects be casters and only draw in that direction.

On Learning 3D: really, though, it's better to learn the fundamentals of 3D graphics and real-time rendering and not tie yourself to an API. Once you get to the API level, the rules of thumb are pretty simple:
- Put everything into vertex buffers.
- Try to keep your scenes smaller than VRAM so you don't have to page.
- Do minimal draw calls (cull out stuff that's not visible).
- Sort everything so you have as few state changes as possible, especially shader and render target state (this is really freaking important).

There's more, of course, but that's a decent start.
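One common way to follow the last rule is to give every draw a sort key built from its expensive state and sort by it before submitting. A C++ sketch with a hypothetical `DrawCall` record, ordering by render target, then shader, then texture (the exact ordering is a judgment call for your own renderer), and counting how often the shader binding changes:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <tuple>
#include <vector>

// Hypothetical draw record: the state a draw needs plus the mesh to draw.
struct DrawCall {
    std::uint32_t renderTarget;
    std::uint32_t shader;
    std::uint32_t texture;
    std::uint32_t mesh;
};

// Order draws so the costliest state changes least often: render target
// first, then shader, then texture.
void sortByState(std::vector<DrawCall>& calls) {
    std::sort(calls.begin(), calls.end(), [](const DrawCall& a, const DrawCall& b) {
        return std::tie(a.renderTarget, a.shader, a.texture) <
               std::tie(b.renderTarget, b.shader, b.texture);
    });
}

// How many shader binds submitting the list in order would need
// (the first bind counts as a change).
int shaderChanges(const std::vector<DrawCall>& calls) {
    int changes = 0;
    for (std::size_t i = 0; i < calls.size(); ++i)
        if (i == 0 || calls[i].shader != calls[i - 1].shader) ++changes;
    return changes;
}
```

With four draws alternating between two shaders, the shader changes four times as submitted and twice after sorting.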

Edit: Figured out the problem when I decided to toss glutSwapBuffers() into the loop that draws the tiles. OpenGL draws from right to left in rows from bottom to top. Now my question is, how do I force OpenGL to draw my way? Oh yeah, by flipping the y-axis. But now my map and my quads are upside down. Problem resolved.

OpenGL and Transparency/Blending problem. I'm making a tile-based 2D map, and I'm drawing quads from left to right in rows from top to bottom. I have one 64x64 object surrounded by 32x32 objects. When blending is turned off, the larger object overlaps the objects that were drawn before it, as expected. However, when blending is turned on, the larger object only overlaps the object to the left of it. Why exactly is this happening and how can I fix it? Here is an image showing what I've described.

Are you sorting your quads by z-level? Do you have the depth buffer enabled? Are you passing a z co-ordinate?

The1ManMoshPit posted:
Are you sorting your quads by z-level? Do you have the depth buffer enabled? Are you passing a z co-ordinate?

Yeah. I figured it out, though. I changed glOrtho(left, right, bottom, top) to glOrtho(left, right, top, bottom), thus flipping the map. After that, I had to flip the large quad and its texture, and modify a few other things.
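Why swapping the arguments works: per axis, glOrtho maps an eye-space coordinate v to normalized device coordinates as 2(v - lo)/(hi - lo) - 1, where (lo, hi) is (bottom, top) for y, and NDC y = +1 is the top of the viewport. A tiny C++ sketch of that formula (the helper name is hypothetical):

```cpp
#include <cassert>

// One axis of the glOrtho mapping from eye space to normalized device
// coordinates: lo/hi are (left, right) for x or (bottom, top) for y.
// NDC -1 is the left/bottom edge of the viewport, +1 the right/top edge.
float orthoToNdc(float v, float lo, float hi) {
    assert(hi != lo);
    return 2.0f * (v - lo) / (hi - lo) - 1.0f;
}
```

With bottom/top passed as (0, 480), y = 0 maps to -1, the bottom edge; with the arguments swapped to (480, 0), y = 0 maps to +1, so row 0 is drawn at the top and larger y goes down the screen, which is presumably also why the quad and its texture then needed flipping.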

Can I get some recommendations for papers or tutorials on rendering fire effects? Any kind of fire.

This shows how to do fire with a particle engine, along with the code if you are familiar with C#/XNA.
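The linked sample isn't preserved here, so as a rough illustration of the particle approach, here is a minimal C++ emitter: particles spawn near the base with a random upward velocity and lifetime, move on each update, fade out linearly, and are removed when they expire. All names and constants are hypothetical; a renderer would typically draw each particle as a textured, additively blended quad whose opacity comes from alpha().

```cpp
#include <algorithm>
#include <cassert>
#include <random>
#include <vector>

struct Particle {
    float x, y;       // position
    float vx, vy;     // velocity
    float age, life;  // seconds alive, seconds it lives
};

class FireEmitter {
public:
    explicit FireEmitter(unsigned seed) : rng_(seed) {}

    // Spawn new particles at the base, move everything, drop expired ones.
    void update(float dt, int spawnCount) {
        assert(dt >= 0.0f && spawnCount >= 0);
        std::uniform_real_distribution<float> spread(-0.2f, 0.2f);
        std::uniform_real_distribution<float> rise(1.0f, 2.0f);
        std::uniform_real_distribution<float> lifetime(0.5f, 1.0f);
        for (int i = 0; i < spawnCount; ++i) {
            // Braced initializers evaluate left to right, so the draws are in order.
            particles_.push_back({spread(rng_), 0.0f, spread(rng_), rise(rng_), 0.0f, lifetime(rng_)});
        }
        for (Particle& p : particles_) {
            p.x += p.vx * dt;
            p.y += p.vy * dt;
            p.age += dt;
        }
        particles_.erase(std::remove_if(particles_.begin(), particles_.end(),
                                        [](const Particle& p) { return p.age >= p.life; }),
                         particles_.end());
    }

    // Opacity fades linearly from 1 at birth to 0 at the end of the particle's life.
    static float alpha(const Particle& p) { return 1.0f - p.age / p.life; }

    const std::vector<Particle>& particles() const { return particles_; }

private:
    std::mt19937 rng_;
    std::vector<Particle> particles_;
};
```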
