Syano posted:I've got a MD3200i being delivered soon. How do you like the thing?

We have a MD3220i (the one with 24 disks), and my opinion of the thing is mixed.

On the positive: Having a total of 8 iSCSI ports is great, the management software is vastly improved, it has 4 times the cache of the MD3000i, and it is generally a pretty nice bit of kit to manage. It also uses DM-Multipath on Linux (although you do still need to load the supplied RDAC drivers).

On the downside: Despite various bits of documentation proclaiming XenServer compatibility, the driver installation makes modifications to /etc/multipath.conf, which under XenServer is a symlink. You need to make sure the changes go into /etc/multipath-enabled.conf for it to work. Performance is a bit ho-hum, and it makes me wonder if Dell have deliberately crippled it so they can sell you the £3k "High performance" licence key.

On the major downside: There have been big QA problems with it. It arrived with 2 LEDs on the front not working. We called out for a replacement backplane; this arrived and was DOA. We got another replacement, which didn't work as the controllers refused to recognise it. Next day, a new backplane and new controller turned up - again, no joy. At this point, we had Dell tech support all over this issue, with engineers in two countries working on it. They finally managed to resolve the issue and have re-created it in a lab. It appears that when the replacement backplanes are tested before being shipped, they are installed in a test unit to verify they work. However, this "locks" the backplane to the test system controllers, so they cannot be recognised by any other system. The engineer had to hook up to our unit via serial connection and issue some weird commands (it looked like he was changing the contents of memory locations, like an old-school "poke"), and then the new backplane was recognised. Only, this replacement also had faulty LEDs on it.

One week and 6 replacement parts later, we finally have a working system. I know it's a new unit, but QA issues like this should really be caught before things leave the factory, and the issue with backplanes getting locked should never have happened. The engineers and Dell support team had no idea about it before we raised it; you would have expected them to catch issues like this before customers run into them.
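The symlink gotcha in the post above is easy to check for before running a driver installer. A minimal sketch in Python, assuming only the /etc/multipath.conf path mentioned in the post; `resolve_config_target` is a hypothetical helper name and is not part of the RDAC or XenServer tooling:

```python
import os

def resolve_config_target(path="/etc/multipath.conf"):
    """Return (real_path, is_symlink) for a config file path.

    real_path is the file that edits should actually land in once
    any symlinks have been followed.
    """
    return os.path.realpath(path), os.path.islink(path)

if __name__ == "__main__":
    target, is_link = resolve_config_target()
    if is_link:
        print(f"/etc/multipath.conf is a symlink; changes belong in {target}")
    else:
        print("/etc/multipath.conf is not a symlink; edit it directly")
```

If the installer replaces the symlink with a regular file instead of writing through it, comparing the result before and after the install would show that as well.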

| # ? Apr 25, 2024 13:52 |

Intraveinous posted:I'm not sure if that's how EQL works it or not, but I hope it wasn't a waste of time.

Not a waste of time at all. I went back through it and still couldn't find an option for it. I submitted a support ticket to Dell yesterday and this is what they said:

EqualLogic Support posted:Dell Storage Support received your request concerning your EQLC PS4000X stating that you have questions concerning multiple raid types on a single array.

Which is fine, it just would be nice to do multiple RAID types with the same member (physical unit). Apparently this requires you to spend more than $30,000 or go with a different vendor.

Syano posted:I've got a MD3200i being delivered soon. How do you like the thing? Well, this was probably stupid on my part -- it's been thrown into a brand new and barely cabled infrastructure. You basically need every port you're going to use connected to your LAN to configure it. I was hoping to just set up the mgmt ports and then iSCSI it later. I'll update when I've actually got it running and handling my VMs this weekend. Mine is a 3220i. Seems like a great machine physically. Will update.

vty posted:I'll update when I've actually got it running and handling my VMs this weekend. I'd be interested in how your experiences compare to mine. What Virtualization platform are you using ?

Echidna posted:I'd be interested in how your experiences compare to mine. What Virtualization platform are you using ? I would be interested in hearing also. We are going to be doing P2V with Hyper-V on about 5 boxes over the next month or so. Looking forward to finally centralizing our storage.

Echidna posted:I'd be interested in how your experiences compare to mine. What Virtualization platform are you using ? Platform is Hyper-V on top of Datacenter R2. I'm doing 2x R710s w/ 32GB RAM, dual quad-core Xeons, and 10 NICs for the iSCSI. Going to be doing the live migration load balancing between the hosts. My 3220i has 14x 15k SAS drives, I believe 320s. RAID 10 iirc. Will update -- my missing equipment didn't come in today, so I wasn't able to get to it. Edit: I should mention I've also got an MD1200i directly attached that has around 8TB of storage for DFS shares etc. Edit2: I also have very little testing time before production, will do what I can. vty fucked around with this message at 03:01 on Aug 12, 2010

I always see how many NICs people use for iSCSI and wonder: are they going overkill, or are we woefully underestimating our needs? We use 3 NICs on each host for iSCSI: 2 for the host iSCSI connections and 1 for the guest connections to our iSCSI network. We have 6 total NICs on each of our filers, set up as two 3-NIC trunks in an active/passive config. We have roughly 100-120 guests (depending on whether you include test or not) and don't come close to the max throughput of our NICs on either side.

Personally I'm of the mindset that it's so inexpensive to use those extra 2+ iscsi ports -- why not? I think an extra bcom5709 was $100-200. Doesn't really answer your throughput question though -- I'm too new to iscsi to give a good answer there, but what I said above was my basic logic in the purchase. Hopefully it's overboard!

Regarding the NICs discussion: is there anything wrong with using multiport NICs (apart from availability issues), or is a single-port NIC the best solution?

ghostinmyshell posted:Also don't be afraid to open a case with NetApp, they don't mind these kind of cases if you think something is weird as long as you act like a reasonable human being. I'd like to emphasize this post. You are probably paying a lot of money to keep your equipment within support. Use it!

adorai posted:I always see how many NICs people use for iSCSI and wonder: are they going overkill, or are we woefully underestimating our needs? We use 3 NICs on each host for iSCSI: 2 for the host iSCSI connections and 1 for the guest connections to our iSCSI network. We have 6 total NICs on each of our filers, set up as two 3-NIC trunks in an active/passive config. We have roughly 100-120 guests (depending on whether you include test or not) and don't come close to the max throughput of our NICs on either side. It's worth your while to install Cacti or Nagios somewhere to monitor the switches your filers are plugged into. Are we talking about NetApp filers here? If so you can also check autosupport for network throughput graphs.

Cultural Imperial posted:It's worth your while to install Cacti or Nagios somewhere to monitor the switches your filers are plugged into. Are we talking about NetApp filers here? If so you can also check autosupport for network throughput graphs.

adorai posted:I always see how many NICs people use for iSCSI and wonder: are they going overkill, or are we woefully underestimating our needs? We use 3 NICs on each host for iSCSI: 2 for the host iSCSI connections and 1 for the guest connections to our iSCSI network. We have 6 total NICs on each of our filers, set up as two 3-NIC trunks in an active/passive config. We have roughly 100-120 guests (depending on whether you include test or not) and don't come close to the max throughput of our NICs on either side. All I can say is monitor your servers' throughput and see if you're bouncing off the 1 gig ceiling. Most of my customers use 1 active GigE NIC for iSCSI with a standby and have no performance issues.
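To put a number on "bouncing off the 1 gig ceiling", a rough sketch in Python: take two readings of an interface's byte counter some seconds apart and express the average rate as a fraction of line rate. The function name is made up for illustration, and where the counters come from is an assumption (SNMP via Cacti, or /sys/class/net/<iface>/statistics/rx_bytes on a Linux host, for example). Counter wrap-around and protocol overhead are ignored.

```python
def link_utilization(bytes_start, bytes_end, seconds, link_bps=1_000_000_000):
    """Average utilization of a link as a fraction of its line rate.

    bytes_start / bytes_end: two samples of a monotonically increasing
    byte counter; seconds: time between the samples; link_bps: line
    rate in bits per second (default assumes 1 Gb/s Ethernet).
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    bits_transferred = (bytes_end - bytes_start) * 8
    return bits_transferred / seconds / link_bps

# 6 GB moved in 60 seconds is 100 MB/s, i.e. 800 Mb/s on a 1 Gb/s link.
utilization = link_utilization(0, 6_000_000_000, 60)
print(f"{utilization:.0%} of line rate")
```

Averages over a long interval hide short bursts, so sampling every few seconds during the busiest period is likely to be more telling than a daily graph.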

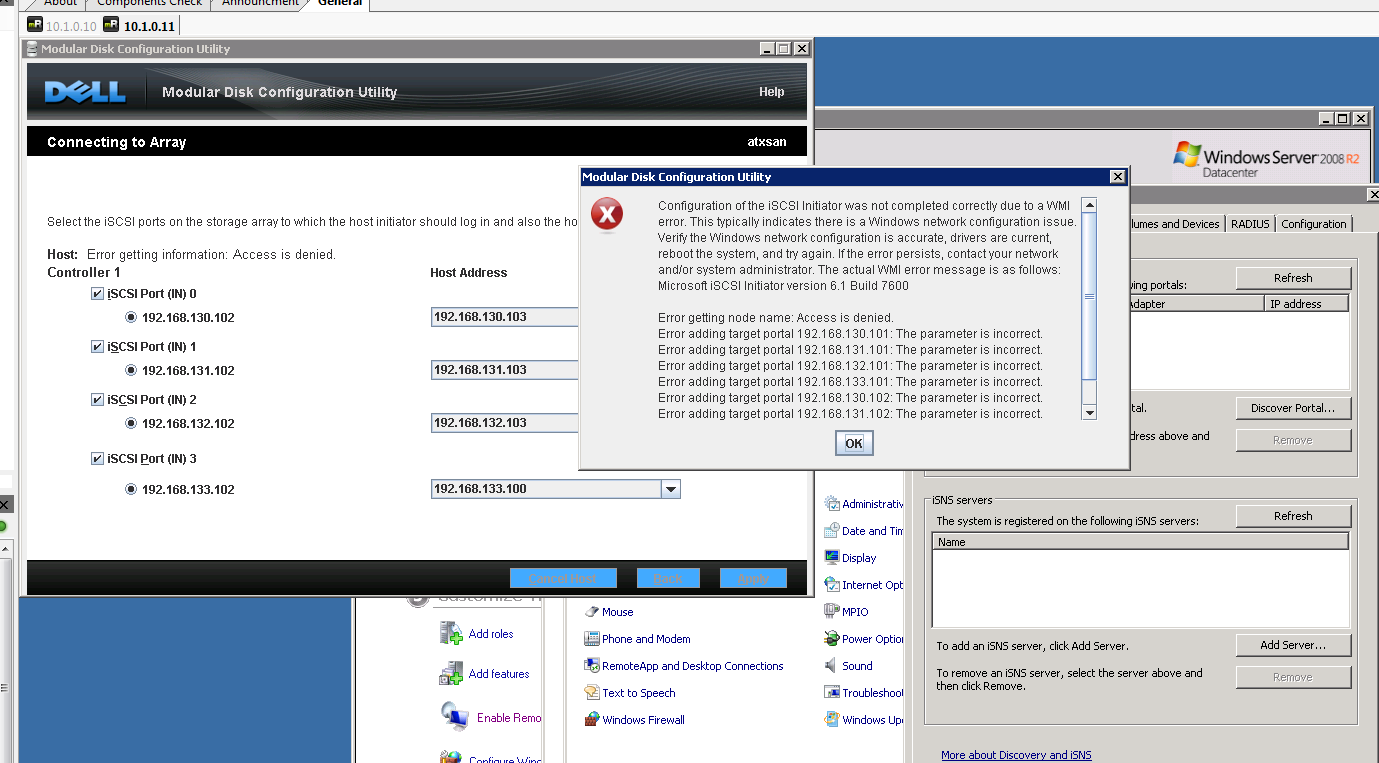

Alright, I'm completely stumped and need some assistance; this has been a major headache. I've got the iSCSI controllers on their own switch completely, with several of my server NICs plugged in. When I attempt to point my host initiator to the iSCSI initiator I'm getting access denied. The most generic access denied ever. Firewall disabled, can ping every single IP. Edit: Wow. I decided to run the Dell config utility as admin.. and voila. No way. I've even reinstalled once, I made so many changes that I lost count. Edit2: Nevermind. It now tells me the host name where the picture above has "access denied" (left), but now I'm getting Root\ISCSIPRT\0000_0 Initiator Errors down the line on every connection. Ugh. vty fucked around with this message at 13:20 on Aug 14, 2010

vty posted:Alright, I'm completely stumped and need some assistance, this has been a major headache. Is your Dell storage device a member of the same Windows domain as your server? As well, do you see anything in your event logs? edit: I'm reading through this manual: http://www.google.co.uk/url?sa=t&so...N4O4y6nS-mr7quA Have you gone through the host access configuration stuff on page 43? namaste friends fucked around with this message at 15:29 on Aug 14, 2010

Well, I just renamed my initiators back to their generic IQN names and they're now connecting. So, if you buy a PowerVault, don't get all specific with your IQN name, as apparently it has to be in the default format, and not something like "serveriscsi1". Edit: That, and the whole run as admin thing. Oh 2008. vty fucked around with this message at 22:41 on Aug 14, 2010
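For anyone hitting the same thing: the IQN naming format from the iSCSI spec (RFC 3720) is "iqn." followed by a year-month, a reversed domain name, and an optional ":" plus a unique string, which is why the Microsoft default looks like iqn.1991-05.com.microsoft:hostname. A rough sanity check in Python is sketched below. The regex is a simplification of the RFC grammar, the function name is invented for illustration, and it says nothing about what the PowerVault itself accepts beyond what the post above reports.

```python
import re

# Simplified IQN shape: iqn.YYYY-MM.reversed.domain[:unique-string]
# Assumption: lower-case names only; other iSCSI name types such as
# "eui." are deliberately not handled here.
_IQN_PATTERN = re.compile(
    r"iqn\.\d{4}-(0[1-9]|1[0-2])"          # type prefix and year-month
    r"\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?"    # reversed domain name
    r"(:.+)?"                              # optional unique suffix
)

def looks_like_iqn(name):
    """Return True if name has the general shape of an iSCSI qualified name."""
    return _IQN_PATTERN.fullmatch(name) is not None

print(looks_like_iqn("iqn.1991-05.com.microsoft:server1"))  # default-style name
print(looks_like_iqn("serveriscsi1"))                       # free-form name
```

The first call prints True and the second prints False, which matches the behaviour described in the post: the free-form name is the one that got rejected.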

Anyone here have hands on experience with a Compellent? We are looking at replacing our EMC CX3-40 and Compellent is on our short list. They offer a lot of interesting features at a much lower cost than say NetApp, but being a newer, smaller company I'm somewhat nervous about them. Any feedback would be much appreciated.

amishpurple posted:Anyone here have hands on experience with a Compellent? We are looking at replacing our EMC CX3-40 and Compellent is on our short list. They offer a lot of interesting features at a much lower cost than say NetApp, but being a newer, smaller company I'm somewhat nervous about them. Yes. Feel free to ping me on AIM parisioa, or msn parisioa@hotmail.com

cosmin posted:Regarding the NICs discussion: is there anything wrong with using multiport NICs (apart from availability issues), or is a single-port NIC the best solution? Theoretically you could have issues with crap cards that have TOE implementations that can't keep up with 4Gb being pushed through in round-robin or something, but I've never run into anything like that.

Building a new fileserver, would like to use ZFS, but I would rather use linux than solaris... is this legit now?: http://github.com/behlendorf/zfs/wiki

Eyecannon posted:Building a new fileserver, would like to use ZFS, but I would rather use linux than solaris... is this legit now?: http://github.com/behlendorf/zfs/wiki And while we're at it, what's the difference between ZFS on linux and btrfs?

Anybody have experience with Promise VessRAID units? I know it's super low-end, but it's just going to be our backup-to-disk target/old files archive. My concern with the unit is that it's been synchronizing the 6TB array for almost 23 hours now, and it's at 79%. If I add another array down the road and it takes a day to synchronize, the existing array better be usable.

Eyecannon posted:Building a new fileserver, would like to use ZFS, but I would rather use linux than solaris... is this legit now?: http://github.com/behlendorf/zfs/wiki

Misogynist posted:OpenSolaris was definitely the best option, but with that project dead in the water, your best bets are probably Nexenta and FreeBSD in that order.

Bluecobra posted:What's wrong with x86 Solaris 10?

Any recommendations on RAID controllers for a small array of SSDs (4-6)? It will be in either RAID 10 or RAID 5/6 if the controller is good enough at it. Read-heavy database (95%+), so the R5/6 write penalty shouldn't be too big an issue. The HP Smart Array P400 results below: code:

Intraveinous posted:Any recommendations on RAID controllers for a small array of SSDs (4-6)? It will be in either RAID 10 or RAID 5/6 if the controller is good enough at it. Read-heavy database (95%+), so the R5/6 write penalty shouldn't be too big an issue. That's impressive! What's your rationale for RAID 10? What's the database?

I know this has to be an elementary question, but I just wanted to throw it out there anyway to confirm with first-hand experience. We have our new MD3200i humming along just nicely and we have the snapshot feature. Before I start playing around though, I assume that snapshotting a LUN that supports transactional workloads like SQL or Exchange is probably a bad idea, right?

Syano posted:I know this has to be an elementary question, but I just wanted to throw it out there anyway to confirm with first-hand experience. We have our new MD3200i humming along just nicely and we have the snapshot feature. Before I start playing around though, I assume that snapshotting a LUN that supports transactional workloads like SQL or Exchange is probably a bad idea, right?

Keep in mind that there may be a substantial performance penalty associated with the snapshot depending on how intensive, and how latency-sensitive, your database workload is. Generally, snapshots are intended for fast testing/rollback or for hot backup and should be deleted quickly; don't rely on the snapshots themselves sticking around as part of your backup strategy. The performance penalty scales with the size of the snapshot delta.

Misogynist posted:Keep in mind that there may be a substantial performance penalty associated with the snapshot depending on how intensive, and how latency-sensitive, your database workload is. Generally, snapshots are intended for fast testing/rollback or for hot backup and should be deleted quickly; don't rely on the snapshots themselves sticking around as part of your backup strategy. The performance penalty scales with the size of the snapshot delta.

adorai posted:are you speaking specifically to the md3200i or snapshots in general? Snapshots in general. Ones done at the storage level generally have the least impact, but there is always an amount of overhead for them. If your backup consists solely of snapshots, you are going to run into trouble. They are a great tool, but just one of the many needed to reliably back up and protect data.

So talk to me a bit more in depth about hardware-based snapshots then. I am guessing, based on what you guys are saying, that my best use of them as a backup tool would be to take a snapshot, mount that snapshot to a backup server, run my backup out of band from the snapshot, then delete the snapshot when that is done so as not to incur a ton of overhead? Does that sound about right?
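The workflow described above (snapshot, back up from the snapshot, delete it) can be sketched in Python so that the snapshot is always removed, even when the backup job fails. Everything here is hypothetical: `FakeArray` stands in for whatever management interface the array really exposes, and none of the method names come from Dell's tooling. Quiescing the database before the snapshot is not shown.

```python
from contextlib import contextmanager

class FakeArray:
    """In-memory stand-in for a storage array's management interface."""
    def __init__(self):
        self.snapshots = set()

    def create_snapshot(self, lun):
        name = f"{lun}-snap"
        self.snapshots.add(name)
        return name

    def delete_snapshot(self, name):
        self.snapshots.discard(name)

@contextmanager
def temporary_snapshot(array, lun):
    """Create a snapshot and guarantee it is deleted afterwards."""
    snap = array.create_snapshot(lun)
    try:
        yield snap
    finally:
        # Runs on success and on failure, so no snapshot is left
        # behind to accumulate changed blocks.
        array.delete_snapshot(snap)

def backup_lun(array, lun, run_backup):
    """Run run_backup(snapshot_name) against a short-lived snapshot."""
    with temporary_snapshot(array, lun) as snap:
        return run_backup(snap)

array = FakeArray()
print(backup_lun(array, "sql-data", lambda snap: f"backed up {snap}"))
print(array.snapshots)  # empty set: the snapshot was cleaned up
```

The point of the context manager is the cleanup guarantee; the mount step on the backup server would live inside `run_backup` in a real script.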

Syano posted:So talk to me a bit more in depth about hardware-based snapshots then. I am guessing, based on what you guys are saying, that my best use of them as a backup tool would be to take a snapshot, mount that snapshot to a backup server, run my backup out of band from the snapshot, then delete the snapshot when that is done so as not to incur a ton of overhead? Does that sound about right?

I think you'd be wise to test first to see what performance degradation you're getting without doing that.

EoRaptor posted:there is always an amount of overhead for them. Now I'll admit, there are some extra pieces we use to put our databases into hot backup mode and we create a vmware snapshot before we snap the volume that hosts the lun, but it's not changing the snapshot itself, just making sure the data is consistent before we snapshot.

adorai posted:This is simply not true. A copy-on-write snapshot, like the kind Data ONTAP (NetApp) and ZFS (Sun/Oracle) use, has zero performance penalty associated with it. Additionally, snapshots coupled with storage system replication make for a great backup plan. We keep our snapshots around for as long as we kept tapes, and we can restore in minutes. If you keep three months of one snap each day, will writing one block result in 90 writes per block? I'm sure that database will be fast for updates. Edit: I guess that depends on how smart the software is. Classical snaps would turn to poo poo. conntrack fucked around with this message at 15:18 on Sep 2, 2010

We are looking into getting new storage. We are a small VFX studio with 15-20 artists (depending on freelancers/projects) and about 15 render nodes. We are hoping to double that within the coming year. We do most of our work in Maya, RenderMan, and Nuke, and over the last year have done a lot of fluid simulations resulting in rather big data sets. We are pretty much all Linux, apart from a few Macs and a couple of Windows laptops.

We have gotten quotes from both Isilon and Blue Arc, as well as looked into building something ourselves with GlusterFS (other suggestions appreciated). I am curious to hear what experience you people have had with these two companies. The configurations we have gotten are:

Blue Arc: Mercury 100 with 4 disk enclosures with 48x1 TB SAS 7200 RPM drives. The total throughput (with enough disks) of the Mercury 100 is 1400 MB/s, although with the number of disks in our offer they are saying something around 500-600 MB/s.

Isilon: 5 x IQ 12000x nodes with a total of 60x1 TB SATA-2 7200 RPM drives, giving us 60 TB of raw storage, about 43 TB usable. They claim that each node is able to do about 200 MB/s, giving us an aggregate throughput of about 1 GB/s.

Both companies have ended up meeting our budget of around $90,000. What annoys me is the support cost, especially for Isilon, who want $22578 a year; Blue Arc are somewhat more sane at $7245 a year. They both give us 24x7x365 support and offer next-day delivery on parts. The systems are extremely scalable in both performance and size, which is what we need. We have also asked to get some kind of price guarantee on future upgrades.

I have more info about the quotes at work, so ask away if there is anything else you are wondering about.

pelle posted:What annoys me is the support cost, especially for Isilon who want $22578 a year, Blue Arc are somewhat more sane with $7245 a year. They both give us 24x7x365 support and offer next day delivery on parts. I don't have experience with either, but make sure you look at the support & maintenance costs beyond just year 1. Have them give you costs through at least year 5 if possible, and then ask what happens when you hit the magical year 5. Will you have to forklift upgrade to the latest and greatest? Can you still get support beyond year 5? These are some of the questions you'll definitely want to ask, and get all the answers in writing.
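To see why the multi-year view matters, here is a back-of-the-envelope sketch in Python using the figures quoted above. It assumes both purchases come in at exactly $90,000 and that the support price stays flat for five years, neither of which the quotes confirm; vendors often raise support costs in later years, which would widen the gap.

```python
def total_cost(purchase, annual_support, years):
    """Purchase price plus flat annual support over a number of years."""
    return purchase + annual_support * years

YEARS = 5
isilon = total_cost(90_000, 22_578, YEARS)   # 90,000 + 5 * 22,578
bluearc = total_cost(90_000, 7_245, YEARS)   # 90,000 + 5 * 7,245

print(f"Isilon over {YEARS} years:   ${isilon:,}")
print(f"Blue Arc over {YEARS} years: ${bluearc:,}")
print(f"Difference:            ${isilon - bluearc:,}")
```

Under these assumptions the five-year totals come to $202,890 and $126,225, so the support contract alone accounts for a difference of $76,665 on systems with the same sticker price.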

conntrack posted:If you keep three months of one snap each day, will writing one block result in 90 writes per block? I'm sure that database will be fast for updates. Something like a NetApp will maintain a hash table of where every logical block is physically located for any given level of the snapshot. True copy-on-write will simply allocate an unwritten block, write the data, and then change the hash table to point to the new data. Any reads will also check the hash table. Very minor performance overhead, brilliant for all sorts of things.
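The block-map idea described above can be shown with a toy model in Python. This is only an illustration of the redirect-on-write principle, not NetApp's or ZFS's actual implementation: the class and method names are invented, and copying the whole map per snapshot is a simplification (real systems share metadata instead of duplicating it).

```python
class RowVolume:
    """Toy redirect-on-write volume with snapshots as frozen block maps."""

    def __init__(self):
        self.physical = []        # append-only list of physical blocks
        self.block_map = {}       # logical block number -> physical index
        self.snapshots = {}       # snapshot name -> frozen copy of the map
        self.physical_writes = 0  # counts blocks actually written

    def write(self, lbn, data):
        # Never overwrite in place: allocate a fresh block, then repoint.
        self.physical.append(data)
        self.block_map[lbn] = len(self.physical) - 1
        self.physical_writes += 1

    def read(self, lbn, snapshot=None):
        mapping = self.block_map if snapshot is None else self.snapshots[snapshot]
        return self.physical[mapping[lbn]]

    def snapshot(self, name):
        # A snapshot only freezes the mapping; no data blocks are copied.
        self.snapshots[name] = dict(self.block_map)

vol = RowVolume()
vol.write(0, "v0")
for day in range(90):            # three months of daily snapshots
    vol.snapshot(f"day-{day}")
vol.write(0, "v1")               # one logical write after 90 snapshots

print(vol.physical_writes)       # 2: one per logical write, not 90
print(vol.read(0), vol.read(0, "day-0"))
```

In this model the number of snapshots never changes the cost of a write, which is the behaviour being claimed; the old block simply stays where it is for the snapshots that still point at it.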
