|

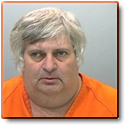

http://lesswrong.com/

The Rules

1) Don't post on Less Wrong. Don't go on Less Wrong to tell them how stupid they are, don't try to provoke them, don't get involved with Less Wrong at all. Just post funny stuff that comes from the community.

2) Don't argue about religion or politics. This should go without saying. There is a forum for those shenanigans, and it is not PYF. Go ahead and bring up LW's skewed take on religion and politics, but don't get into serious derails about it.

3) No amateur psychology or philosophy hour. If there's one thing we can learn from Less Wrong, it's not pretending to be an expert in a field you are very much not an expert in.

4) No drama. If you are a LW poster who feels the need to defend the honor of Less Wrong, please don't, you aren't gonna convince anyone. And if anybody sees someone try to do this, quietly move on or report.

What is this?

Less Wrong is the brainchild of one man, Eliezer Schlomo Yudkowsky:

This guy

Yudkowsky is an autodidact (read: high school dropout) who got involved with the wild world of futurist/transhumanist snake oil salesmanship, and founded a site called Less Wrong. Less Wrong is part Yudkowsky's personal blog to talk about the applications and art of being smarter than you are, part forum for Bayesians/Rationalists/Whatever the hell they're calling themselves this week to talk about the applications and art of being smarter than you are, and part recruitment and fundraising office for donations to MIRI, or the Machine Intelligence Research Institute (formerly the Singularity Institute for Artificial Intelligence), a group Yudkowsky runs. Less Wrong hopes to make humanity more rational, protect the universe from the development of evil (or "unfriendly") AI and usher in a golden era where we will all be immortal deathless cyborgs having hot sex with sexbots on a terraformed Mars.

Sounds weird. So what's the problem?

Two words: bottomless arrogance.
While the title implies a certain degree of humility, Yudkowsky is not a man who does well with the painful and laborious process of actually gaining knowledge via science or any of the other ways humanity has developed to become smarter. Instead, Yudkowsky prefers to deliver insights from pop sci books filtered through his own philosophy of Bayesianism, as if throwing down nuggets of golden truth to the unwashed masses beneath the Mount Olympus that is his perch of rationality. By which I mean he is very, very

Due to the atmosphere of "we must look past our biases no matter what", Less Wrong is also very vulnerable to PUAs who play on the social awkwardness of LW nerds, "Human Biodiversity" or "HBD" advocates who tell LWers that they, as Jews, Asians, and White people, are naturally smarter than other races and deserve to reign supreme, and self-proclaimed reactionaries (more on those later). See here for examples of all three.

What's Bayesianism?

Bayesian probability is an interpretation of the concept of probability based around a formula known as Bayes' theorem. It is a complicated, heady, and richly debated view in statistics that LW really doesn't understand much better than you do. Basically "Bayesian" in Less Wrong terms just means someone who updates their views based on evidence. It's more complicated than that, but that's all you really need to know.

So what are reactionaries and what is this "More Right" sister site to Less Wrong?

Well, first you have to understand that the vast majority of Less Wrong consists of bland Left-Libertarians, politically speaking. However, Less Wrong tends to quickly shut down any political debate with the phrase "Politics is the Mind-Killer", which means that political debates are impossible to win, because everyone gets too emotional and illogical. Republicans and Democrats (Less Wrong is mostly American) are sometimes referred to as "The Blues and the Greens" after chariot racing teams that caused riots in ancient Rome.
All this has led to is crazies claiming to be beyond the political spectrum popping out of and into Less Wrong. These crazies are generally referred to as "Reactionaries" or "Contrarians". Reactionaries are a group of nerd fascists who strongly believe in racial supremacy, the failure of democracy, and the strength of traditional values. They are a large, fractious group loosely united around a figure named Mencius Moldbug AKA Curtis Yarvin, a blogger who used to comment on Overcoming Bias, another LW sister site.

Less Wrong has exactly zero idea of what to do with these people. They can't admit that they're outright idiots, because that would involve admitting that someone could go through the Bayesian wringer and still be radically wrong. They can't just ignore them, because nerd fascism makes Less Wrong look bad, and they can't chase them out because LW is already made up of social outcasts. As such, they've taken to the policy of politely disagreeing with them when not ignoring them. This has led to reactionaries forming a sister site called More Right.

When the Reactionaries lead to Less Wrong getting bad publicity, the standard reaction is to stress that they are a minority and have little impact on the site as a whole. The fact that reactionaries include the 15th most upvoted LW poster of all time and the Media Director for MIRI will generally not be mentioned. Be prepared for accusations of trying to purge the politically incorrect if you do take issue while not being a member of the community.

Links to important LW associated material

http://lesswrong.com/ The site, the myth, the legend.

http://www.overcomingbias.com/ This blog is run by Robin Hanson, Yudkowsky's mentor, a GMU economist. This is where Yudkowsky used to blog before he created Less Wrong. Robin Hanson is a proponent of the idea of signalling, which means that everything non-LW people say is actually a secret attempt to gain social status.
This is not unfalsifiable ad hominem because Hanson says it isn't.

http://www.moreright.net Reactionary blog run by MIRI Media Director Michael Anissimov, and some other associated reactionaries.

http://slatestarcodex.com/ Slate Star Codex is a blog run by Yvain AKA Scott Alexander, the thinking man's rationality blogger. Much like Yudkowsky is a stupid person's idea of what a smart person sounds like, Yvain is what a mature idiot who has grown as a person thinks a smart person sounds like.

http://hpmor.com/ This is the site for Harry Potter and the Methods of Rationality, a Harry Potter fanfic Yudkowsky wrote. Yes really.

http://wiki.lesswrong.com/wiki/LessWrong_Wiki Here is the wiki for Less Wrong. If you're confused about any LW terms, go here to get it straight from the horse's mouth.

http://rationalwiki.org/wiki/Roko%27s_basilisk The saga of Robot Devil. Read this if you do not read any of the other links in this thread. It is great.

The Vosgian Beast fucked around with this message at 12:44 on Dec 12, 2014

|

|

|

|


|

Yudkowsky writes Harry Potter fanfiction to showcase his hyper-rational philosophy! Here are two eleven-year-olds bonding on the train to Hogwarts:

A Rationalist posted:
"Hey, Draco, you know what I bet is even better for becoming friends than exchanging secrets? Committing murder."

|

|

|

|

Listen, there's a very important disclaimer about the Methods of Rationality:

quote:
All science mentioned is real science. But please keep in mind that, beyond the realm of science, the views of the characters may not be those of the author. Not everything the protagonist does is a lesson in wisdom, and advice offered by darker characters may be untrustworthy or dangerously double-edged.

So, like, whatever man. Draco is just being dangerously double-edged probably, or just really ironic and random.

Also the RationalWiki on Yudkowsky says, about some of his AI beliefs:

quote:
Yudkowsky's (and thus LessWrong's) conception and understanding of Artificial General Intelligence differs starkly from the mainstream scientific understanding of it. Yudkowsky believes an AGI will be based on some form of decision theory (although all his examples of decision theories are computationally intractable) and/or implement some form of Bayesian logic (same problem again).

Can anyone elaborate on what makes this unworkable? I'm not trying to challenge someone who DARES to disagree with Yudkowsky -- I don't know poo poo about these topics -- I'm just curious as to what makes his understanding of AI or decision theory wrong.

Also thanks for a great OP!

|

|

|

|

The header of Harry Potter and the Methods of Rationality says "this starts getting good around chapter five, and if you don't like it by chapter ten, you probably won't 'get it'". I got as far as reading a page of ch 5 and two pages of ch 10. The latter involves Harry putting on the sorting hat, and because he believes that a device with sufficient ability to sort students would be a magical AI, it spontaneously becomes one. Then he blackmails it for information because it doesn't want the Fridge Horror of blinking in and out of conscious existence for some reason that is really obvious to the author but very, very poorly explained. (e: oh, now I see, it's the author's very idiosyncratic death phobia).

The Vosgian Beast posted:
http://rationalwiki.org/wiki/Roko%27s_basilisk The saga of Robot Devil. Read this if you do not read any of the other links in this thread. It is great.

seriously, this is not to be missed.

Forums Barber fucked around with this message at 22:21 on Apr 19, 2014

|

|

|

Torture vs Dust Specks

Yudkowsky posted:
Now here's the moral dilemma. If neither event is going to happen to you personally, but you still had to choose one or the other:

Robin Hanson posted:
Wow. The obvious answer is TORTURE, all else equal, and I'm pretty sure this is obvious to Eliezer too. But even though there are 26 comments here, and many of them probably know in their hearts torture is the right choice, no one but me has said so yet. What does that say about our abilities in moral reasoning?

Brandon Reinhart posted:
Dare I say that people may be overvaluing 50 years of a single human life? We know for a fact that some effect will be multiplied by 3^^^3 by our choice. We have no idea what strange and unexpected existential side effects this may have. It's worth avoiding the risk. If the question were posed with more detail, or specific limitations on the nature of the effects, we might be able to answer more confidently. But to risk not only human civilization, but ALL POSSIBLE CIVILIZATIONS, you must be drat SURE you are right. 3^^^3 makes even incredibly small doubts significant.

James D. Miller posted:
Torture,

Yudkowsky posted:
I'll go ahead and reveal my answer now: Robin Hanson was correct, I do think that TORTURE is the obvious option, and I think the main instinct behind SPECKS is scope insensitivity.

"We know in our hearts that torture is the right choice"

|

|

|

|

Harry Potter and the Methods of Rationality

|

|

|

|

Lottery of Babylon posted:
"We know in our hearts that torture is the right choice"

These arseholes are now my Exhibit A.

|

|

|

|

Lottery of Babylon posted:
Torture vs Dust Specks

These are people who have a very naive view of utilitarianism. They seem to forget that not only would it be hilariously impossible to quantify suffering, but that even if you could, 50,000 people suffering at a magnitude of 1 is better than 1 person suffering at a magnitude of 40,000. Getting a speck of dust in your eye is momentarily annoying, but a minute later you'll probably forget it ever happened. Torture a man for 50 years and the damage is permanent, assuming he's still alive at the end of it. Minor amounts of suffering distributed equally among the population would be far easier to soothe and heal.

Basically, even if you could quantify suffering and reduce it to a mere mathematical exercise like they seem to think you can, they'd still be loving wrong.

Runcible Cat posted:
I've felt for years that the kind of people who believe that any superwhatsit (AI, alien, homo superior) that takes over the world would instantly go mad with power and start torturing and exterminating people are actually telling us what they'd do if they were in charge.

Pretty much. I prefer to take the viewpoint that any AI we make would be designed to not kill us, and would instead be pretty inclined to look after us rather than torture us for any reason.

Slime fucked around with this message at 23:17 on Apr 19, 2014

|

|

|

Slime posted:
These are people who have a very naive view of utilitarianism. They seem to forget that not only would it be hilariously impossible to quantify suffering, but that even if you could, 50,000 people suffering at a magnitude of 1 is better than 1 person suffering at a magnitude of 40,000. Getting a speck of dust in your eye is momentarily annoying, but a minute later you'll probably forget it ever happened. Torture a man for 50 years and the damage is permanent, assuming he's still alive at the end of it. Minor amounts of suffering distributed equally among the population would be far easier to soothe and heal.

|

|

|

|

Swan Oat posted:
Can anyone elaborate on what makes this unworkable? I'm not trying to challenge someone who DARES to disagree with Yudkowsky -- I don't know poo poo about these topics -- I'm just curious as to what makes his understanding of AI or decision theory wrong.

Effortpost incoming, but the short version is that there are so many (so many) unsolved problems before this even makes sense. It's like arguing what color to paint the bikeshed on the orbital habitation units above Jupiter; sure, we'll probably decide on red eventually, but christ, that just doesn't matter right now. What's worse, he's arguing for a color which doesn't exist. Far be it from me to defend RationalWiki unthinkingly, but they're halfway right here.

Okay, so long version here, from the beginning. I'm an AI guy; I have an actual graduate degree in the subject and now I work for Google. I say this because Yudkowsky does not have a degree in the subject, and because he also does not do any productive work. There's a big belief in CS in "rough consensus and running code", and he's got neither. I also used to be a LessWronger, while I was in undergrad.

Yudkowsky is terrified (literally terrified) that we'll accidentally succeed. He unironically calls this his "AI-go-FOOM" theory. I guess the term that the AI community actually uses, "Recursive Self-Improvement", was too loaded a term (wiki). He thinks that we're accidentally going to build an AI which can improve itself, which will then be able to improve itself, which will then be able to improve itself. Here's where the current (and extensive!) research on recursive self-improvement by some of the smartest people in the world has gotten us: some compilers are able to compile their own code in a more efficient way than the bootstrapped compilers. This is very impressive, but it is not terrifying. Here is the paper which started it all!
So, since we're going to have a big fancy AI which can modify itself, what stops that AI from modifying its own goals? After all, if you give rats the ability to induce pleasure in themselves by pushing a button, they'll waste away because they'll never stop pushing the button. Why would this AI be different? This is what Yudkowsky refers to as a "Paperclip Maximizer". In this, he refers to an AI which has bizarre goals that we don't understand (e.g. maximizing the number of paperclips in the universe). His big quote for this one is "The AI does not love you, nor does it hate you, but you are made of atoms which it could use for something else."

His answer is, in summary, "we're going to build a really smart system which makes decisions which it could never regret in any potential past timeline". He wrote a really long paper on this here, and I really need to digress to explain why this is sad. He invented a new form of decision theory with the intent of using it in an artificial general intelligence, but his theory is literally impossible to implement in silicon. He couldn't even get the mainstream academic press to print it, so he self-published it, in a fake academic journal he invented. He does this a lot, actually. He doesn't really understand the academic mainstream AI researchers, because they don't have the same :sperg: brain as he does, so he'll ape their methods without understanding them. Read a real AI paper, then one of Yudkowsky's. His paper comes off as a pale imitation of what a freshman philosophy student thinks a computer scientist ought to write.

So that's the decision theory side. On the Bayesian side, RationalWiki isn't quite right. We actually already have a version of Yudkowsky's "big improvement": AIs which update their beliefs based on what they experience according to Bayes' Law. This is not unusual or odd in any way, and a lot of people agree that an AI designed that way is useful and interesting.
The problem is that it takes a lot of computational power to get an AI like that to do anything. We just don't have computers which are fast enough to do it, and the odds are not good that we ever will. Read about Bayes Nets if you want to know why, but the amount of power you need scales exponentially with the number of facts you want the system to have an opinion about. Current processing can barely play an RTS game. Think about how many opinions you have right now, and compare the sum of your consciousness to how hard it is to keep track of where your opponents probably are in an RTS. Remember that we're trying to build a system that is almost infinitely smarter than you. Yeah. It's probably not gonna happen.

e:

Forums Barber posted:
seriously, this is not to be missed.

For people who hate clicking: Yudkowsky believes that a future AI will simulate a version of you in hell for eternity if you don't donate to his fake charity. The long version of this story is even better, but you should discover it for yourselves. Please click the link.

ee: Ooh, I forgot, they also all speak in this really bizarre dialect which resembles English superficially but where any given word might have weird and unexpected meanings. You have to really read a lot (a lot; back when I was in the cult I probably spent five to ten hours a week absorbing this stuff) before any given sentence can be expected to convey its full meaning to your ignorant mind, or something. So if you find yourself confused, there's probably going to be at least a few people in this thread who can explain whatever you've found.

SolTerrasa fucked around with this message at 23:50 on Apr 19, 2014
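To put a rough number on the scaling claim in the post above, here is a minimal Python sketch. It assumes every "fact" is a yes/no variable and that the system keeps one probability per combination of facts (the naive full joint table; real Bayes nets exploit structure to do better, though exact inference is still hard in general). The function names `bayes_update` and `full_joint_entries` are made up for illustration and do not come from the thread.

```python
def bayes_update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Posterior P(H | E) from Bayes' rule for a single yes/no hypothesis H."""
    # Total probability of seeing the evidence at all.
    p_evidence = prior * p_evidence_if_true + (1.0 - prior) * p_evidence_if_false
    return prior * p_evidence_if_true / p_evidence


def full_joint_entries(num_binary_facts: int) -> int:
    """Probabilities stored by an unstructured joint table over n yes/no facts."""
    return 2 ** num_binary_facts


if __name__ == "__main__":
    # One update is cheap: a 1% prior and fairly strong evidence.
    print(bayes_update(0.01, 0.9, 0.05))
    # Tracking every combination of facts is what blows up.
    for n in (10, 20, 40, 80):
        print(n, full_joint_entries(n))
```

A single update is trivial arithmetic; the cost comes from the table doubling with every extra fact, which is the exponential growth the post is pointing at.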

|

|

|

The Vosgian Beast posted:
http://slatestarcodex.com/ Slate Star Codex is a blog run by Yvain AKA Scott Alexander, the thinking man's rationality blogger. Much like Yudkowsky is a stupid person's idea of what a smart person sounds like, Yvain is what a mature idiot who has grown as a person thinks a smart person sounds like.

http://slatestarcodex.com/2013/10/20/the-anti-reactionary-faq/

Impressive OP, The Vosgian Beast. I'm going to be following this.

HEY GUNS fucked around with this message at 23:48 on Apr 19, 2014

|

|

|

Slime posted:
These are people who have a very naive view of utilitarianism. They seem to forget that not only would it be hilariously impossible to quantify suffering, but that even if you could, 50,000 people suffering at a magnitude of 1 is better than 1 person suffering at a magnitude of 40,000. Getting a speck of dust in your eye is momentarily annoying, but a minute later you'll probably forget it ever happened. Torture a man for 50 years and the damage is permanent, assuming he's still alive at the end of it. Minor amounts of suffering distributed equally among the population would be far easier to soothe and heal.

Anyone who actually studies game theory or decision theory or ethical philosophy or any related field will almost immediately come across the concept of the "minimax" (or "maximin") rule, which says you should minimize your maximum loss, improve the worst-case scenario, and/or make the most disadvantaged members of society as advantaged as possible, depending on how it is framed. And Yudkowsky fancies himself quite learned in such topics, to the point of inventing his own "Timeless Decision Theory" to correct what he perceives as flaws in other decision theories. But since he's "self-taught" (read: a dropout) and has minimal contact with people doing serious work in such fields (read: has never produced anything of value to anyone), he's never encountered even basic ideas like the minimax.

Instead, Yudkowsky goes in the opposite direction and argues that man, if you're gonna be tortured at magnitude 40000 for fifty years, sooner or later you're gonna get used to unspeakable torture and your suffering will only feel like magnitude 39000. So instead of weighting his calculations away from the horribly endless torture scenario like any sensible person would, he weights them toward that scenario.
Timeless Decision Theory, which for some reason the lamestream "academics" haven't given the respect it deserves, exists solely to apply to situations in which a hyper-intelligent AI has simulated and modeled and predicted all of your future behavior with 100% accuracy and has decided to reward you if and only if you make what seem to be bad decisions. This obviously isn't a situation that most people encounter very often, and even if it were regular decision theory could handle it fine just by pretending that the one-time games are iterated. It also has some major holes: if a computer demands that you hand over all your money because a hypothetical version of you in an alternate future that never happened and now never will happen totally would have promised you would, Yudkowsky's theory demands that you hand over all your money for nothing.
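The gap between "add everything up" and the minimax rule mentioned above can be shown with a small Python sketch. It uses Slime's illustrative figures (50,000 people at magnitude 1 versus one person at magnitude 40,000) and assumes, purely for the sake of the example, that suffering can be put on a single numeric scale at all. The names `total_suffering`, `worst_case` and `pick` are hypothetical.

```python
from typing import Callable, Dict, List

Outcome = List[int]  # one suffering magnitude per affected person


def total_suffering(outcome: Outcome) -> int:
    """Sum-everything rule: only the grand total matters."""
    return sum(outcome)


def worst_case(outcome: Outcome) -> int:
    """Minimax rule: only the worst-off person matters."""
    return max(outcome)


def pick(options: Dict[str, Outcome], rule: Callable[[Outcome], int]) -> str:
    """Return the option that the given rule scores lowest."""
    return min(options, key=lambda name: rule(options[name]))


options = {
    "specks": [1] * 50_000,   # 50,000 people, magnitude 1 each
    "torture": [40_000],      # one person, magnitude 40,000
}

if __name__ == "__main__":
    print("sum rule picks:", pick(options, total_suffering))
    print("minimax picks:", pick(options, worst_case))
```

With these numbers the sum rule prefers the torture (40,000 is less than 50,000) and minimax prefers the specks (a worst case of 1 instead of 40,000), so the disagreement in the thread is about which rule to apply, not about the arithmetic.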

|

|

|

|

Swan Oat posted:
Can anyone elaborate on what makes this unworkable? I'm not trying to challenge someone who DARES to disagree with Yudkowsky -- I don't know poo poo about these topics -- I'm just curious as to what makes his understanding of AI or decision theory wrong.

Yudkowsky has this thing called Timeless Decision Theory. Because he gets all his ideas from sci-fi movies, I'll use a sci-fi metaphor.

Let's take two people who've never met. Maybe one person lives in China and one lives in England. Maybe one person lives on Earth and one person lives on Alpha Centauri. But they've both got holodecks, because Yudkowsky watched Star Trek this week. And with their holodecks, they can create a simulation of each other, based on available information. Now these two people can effectively talk to each other--they can set up a two-way conversation because the simulations are accurate enough that they can predict exactly what both of them would say to each other. These people could even set up exchanges, bargains, et cetera, without ever interacting.

Now instead of considering these two people separated by space, consider them separated by time. The one in the past is somehow able to perfectly predict the way the one in the future will act, and the one in the future can use all the available information about the one in the past to construct their own perfect simulation. This way, people in the past can interact with people in the future and make decisions based off of that interaction.

The LessWrong wiki presents it like this:

A super-intelligent AI shows you two boxes. One is filled with [image missing]. One is filled with either nothing or [image missing].

"Well, the box is already full, or not, so it doesn't matter what I do now. I'll take both." <--This is wrong and makes you a sheeple and a dumb, because the AI would have predicted this. The AI is perfect. Therefore, it perfectly predicted what you'd pick.
Therefore, the decision that you make, after it's filled the box, affects the AI in the past, before it filled the box, because that's what your simulation picked. Therefore, you pick the second box. This isn't like picking the second box because you hope the AI predicted that you'd pick the second box. It's literally the decision you make in the present affecting the state of the AI in the past, because of its ability to perfectly predict you. It's metagame thinking plus a layer of time travel and bullshit technology, which leads to results like becoming absolutely terrified of the super-intelligent benevolent AI that will one day arise (and there is no question whether it will because you're a tech-singularity-nerd in this scenario) and punish everyone who didn't optimally contribute to AI research.
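The two-box setup above is usually known as Newcomb's problem, and the payoff arithmetic behind "pick the second box" can be sketched in a few lines of Python. The dollar amounts below ($1,000 in the sure box, $1,000,000 in the maybe-empty box) are the ones used in the standard textbook formulation and are an assumption here, since the figures in the post were lost with its images. The function `expected_payoff` is hypothetical, and treating the predictor's accuracy as a probability you can condition on is exactly the step that critics of this reasoning dispute.

```python
def expected_payoff(one_box: bool, accuracy: float,
                    small: int = 1_000, big: int = 1_000_000) -> float:
    """Expected winnings if the predictor guesses your choice with the given accuracy."""
    if one_box:
        # You take only the maybe-empty box; it is full when the predictor was right.
        return accuracy * big
    # You take both boxes: when the predictor was right the big box is empty,
    # when it was wrong you get both prizes.
    return accuracy * small + (1.0 - accuracy) * (small + big)


if __name__ == "__main__":
    for accuracy in (1.0, 0.9, 0.5):
        print(accuracy,
              expected_payoff(True, accuracy),
              expected_payoff(False, accuracy))
```

Under these assumptions a perfect predictor makes one-boxing worth $1,000,000 against $1,000 for two-boxing, while at coin-flip accuracy two-boxing comes out slightly ahead, so the whole argument rests on how good the predictor is stipulated to be.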

|

|

|

|

Good stuff. Here's some words on the "virtues" of rationality. One of them is humility. The Vosgian Beast posted:

Not quite - he didn't actually attend high school, so he couldn't have dropped out.

|

|

|

|

I propose that if this thread continues to attract actual experts in what Yudkowsky thinks he's an expert in, they make interesting effortposts about why he's wrong.

|

|

|

|

SolTerrasa posted:
Okay, so long version here, from the beginning. I'm an AI guy; I have an actual graduate degree in the subject and now I work for Google.

Thank you, The Vosgian Beast, for introducing me to Less Wrong. I can't believe I haven't heard of it before.

|

|

|

|

Slime posted:
These are people who have a very naive view of utilitarianism. They seem to forget that not only would it be hilariously impossible to quantify suffering, but that even if you could, 50,000 people suffering at a magnitude of 1 is better than 1 person suffering at a magnitude of 40,000. Getting a speck of dust in your eye is momentarily annoying, but a minute later you'll probably forget it ever happened. Torture a man for 50 years and the damage is permanent, assuming he's still alive at the end of it. Minor amounts of suffering distributed equally among the population would be far easier to soothe and heal.

Exactly. While I was reading this stuff again, now that I've cleared the "this man is wiser than I" beliefs out of my head, I thought of that immediately. Let's choose what the people themselves would have chosen if they were offered the choice; no functional human would refuse a dust speck in their eye to save a man they'd never met 50 years of torture. Except lesswrongers, apparently, but then I did say functional humans. Brb, gotta go write a book on my brilliant new moral decision framework.

Lottery of Babylon posted:
Timeless Decision Theory, which for some reason the lamestream "academics" haven't given the respect it deserves, exists solely to apply to situations in which a hyper-intelligent AI has simulated and modeled and predicted all of your future behavior with 100% accuracy and has decided to reward you if and only if you make what seem to be bad decisions. This obviously isn't a situation that most people encounter very often, and even if it were regular decision theory could handle it fine just by pretending that the one-time games are iterated.

It also has some major holes: if a computer demands that you hand over all your money because a hypothetical version of you in an alternate future that never happened and now never will happen totally would have promised you would, Yudkowsky's theory demands that you hand over all your money for nothing.

Well, let's be fair, it also works in situations where you are unknowingly exposed to mind-altering agents which change your preferences for different kinds of ice cream but don't manage to suppress your preferences for being a smug rear end in a top hat. (page 11 of http://intelligence.org/files/TDT.pdf)

GWBBQ posted:
I Googled 'Yudkowsky Kurzweil' after reading these two sentences and oh boy do I have a lot of reading to do about why Mr. Less Wrong is obviously the smarter of the two. I have plenty issues with Kurzweil's ideas on a technological singularity, but holy poo poo is Yudkowsky arrogant.

Well, when you aren't doing any real work, you can usually find time to write lots and lots of words about why you're smarter than the man you wish you could be.

And, new stuff from "things I can barely believe I used to actually believe": Does anyone want to cover cryonics? I can do it, if no one else has an interest.

SolTerrasa fucked around with this message at 00:18 on Apr 20, 2014

|

|

|

Lottery of Babylon posted:
Anyone who actually studies game theory or decision theory or ethical philosophy or any related field will almost immediately come across the concept of the "minimax" (or "maximin") rule, which says you should minimize your maximum loss, improve the worst-case scenario, and/or make the most disadvantaged members of society as advantaged as possible, depending on how it is framed. And Yudkowsky fancies himself quite learned in such topics, to the point of inventing his own "Timeless Decision Theory" to correct what he perceives as flaws in other decision theories. But since he's "self-taught" (read: a dropout) and has minimal contact with people doing serious work in such fields (read: has never produced anything of value to anyone), he's never encountered even basic ideas like the minimax.

Somebody should drop by with a copy of, I don't know, how about A Theory of Justice or Taking Rights Seriously, and see what they make of it.

|

|

|

|

Does anyone know if Yudkowsky still has that challenge up where he'll convince you over IRC to release an untrustworthy superintelligent AI from containment? Supposedly 3 people have taken the challenge and all failed.

Lottery of Babylon posted:
Anyone who actually studies game theory or decision theory or ethical philosophy or any related field will almost immediately come across the concept of the "minimax" (or "maximin") rule, which says you should minimize your maximum loss, improve the worst-case scenario, and/or make the most disadvantaged members of society as advantaged as possible, depending on how it is framed. And Yudkowsky fancies himself quite learned in such topics, to the point of inventing his own "Timeless Decision Theory" to correct what he perceives as flaws in other decision theories. But since he's "self-taught" (read: a dropout) and has minimal contact with people doing serious work in such fields (read: has never produced anything of value to anyone), he's never encountered even basic ideas like the minimax.

This rule works well because of the decreasing marginal utility of money. However, in Yudkowsky's scenario of torture for one person vs minor inconvenience for more than 10^3638334640024 people, I have to say the torture is preferable. If we set the price of preventing a dust speck from getting into someone's eye at a centillionth of a cent, and the price of preventing a microsecond of torture at a billion dollars, it still works out that torture is the better outcome. The example is bad because 3^^^3 is a stupidly large number, not because Yudkowsky doesn't understand justice or whatever.

Chamale fucked around with this message at 00:29 on Apr 20, 2014
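The pricing argument above can be checked with a short Python sketch that works in base-10 logarithms, since none of these numbers fit in a float. Two assumptions are worth flagging: "centillion" is read in the short-scale sense (10^303), and 10^3638334640024 is treated as the head count. That figure is roughly 3^^4 (a tower of four threes); 3^^^3 itself is a tower of 7,625,597,484,987 threes and is unimaginably larger, so using 3^^4 only understates the speck side. The helper `up_arrow` and the constant names are made up for this example.

```python
import math


def up_arrow(a: int, arrows: int, b: int) -> int:
    """Knuth's up-arrow notation; only feasible for very small inputs."""
    if arrows == 1:
        return a ** b
    if b == 0:
        return 1
    return up_arrow(a, arrows - 1, up_arrow(a, arrows, b - 1))


# 3^^3 = 3^(3^3) = 3^27
THREE_TETRATED_3 = up_arrow(3, 2, 3)

# 3^^4 = 3^(3^^3) is too big to compute, but its base-10 logarithm is not.
LOG10_THREE_TETRATED_4 = THREE_TETRATED_3 * math.log10(3)
SPECK_COUNT_EXPONENT = math.floor(LOG10_THREE_TETRATED_4)

# A centillionth of a cent per speck: 10^-303 cents = 10^-305 dollars.
LOG10_SPECK_PRICE_DOLLARS = -303 - 2
log10_specks_total = SPECK_COUNT_EXPONENT + LOG10_SPECK_PRICE_DOLLARS

# A billion dollars per microsecond of torture, for 50 years.
MICROSECONDS_IN_50_YEARS = 50 * 365.25 * 24 * 3600 * 1_000_000
log10_torture_total = math.log10(MICROSECONDS_IN_50_YEARS * 1e9)

if __name__ == "__main__":
    print("3^^3 =", THREE_TETRATED_3)
    print("3^^4 is about 10^%d" % SPECK_COUNT_EXPONENT)
    print("log10(dollars to prevent specks) =", log10_specks_total)
    print("log10(dollars to prevent torture) =", round(log10_torture_total, 2))
```

On these prices the torture bill is a 25-digit dollar figure while the speck bill has trillions of digits, which is the sense in which the sheer size of the number decides the outcome before any moral argument starts.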

|

|

|

Looking up other things on the Less Wrong wiki brought me to this: Pascal's Mugging.

In case you get tripped up on it, 3^^^^3 is a frilly mathematical way of saying "a really huge non-infinite number". What he's saying is that a person comes up to you and demands some minor inconvenience to prevent a massively implausible (but massive in scale) tragedy. "Give me five bucks, or I'll call Morpheus to take me out of the Matrix so I can simulate a bajillion people being tortured to death", basically.

And while Yudkowsky doesn't think it's rational (to him) to give them the five bucks, he's worried because a) the perfect super-intelligent Bayesian AI might get tripped up by it, because the astronomically small possibility would add up to something significant when multiplied by a bajillion and b) he can't work out a solution to it that fits in with his chosen decision theory.

Other nice selections from Yudkowsky's blog posts include:

"All money spent is exactly equal and one dollar is one unit of caring"

quote:
To a first approximation, money is the unit of caring up to a positive scalar factor - the unit of relative caring. Some people are frugal and spend less money on everything; but if you would, in fact, spend $5 on a burrito, then whatever you will not spend $5 on, you care about less than you care about the burrito. If you don't spend two months salary on a diamond ring, it doesn't mean you don't love your Significant Other. ("De Beers: It's Just A Rock.") But conversely, if you're always reluctant to spend any money on your SO, and yet seem to have no emotional problems with spending $1000 on a flat-screen TV, then yes, this does say something about your relative values.

"Volunteering is suboptimal and for little babies. All contributions need to be in the form of money, which is what grown ups use."
quote:There is this very, very old puzzle/observation in economics about the lawyer who spends an hour volunteering at the soup kitchen, instead of working an extra hour and donating the money to hire someone... This is amusing enough by the end that I have to link it: "Economically optimize your good deeds, because if you don't, you're a terrible person." Suggestions for a new billionaire posted:Then—with absolute cold-blooded calculation—without scope insensitivity or ambiguity aversion—without concern for status or warm fuzzies—figuring out some common scheme for converting outcomes to utilons, and trying to express uncertainty in percentage probabilitiess—find the charity that offers the greatest expected utilons per dollar. Donate up to however much money you wanted to give to charity, until their marginal efficiency drops below that of the next charity on the list. quote:Writing a check for $10,000,000 to a breast-cancer charity—while far more laudable than spending the same $10,000,000 on, I don't know, parties or something—won't give you the concentrated euphoria of being present in person when you turn a single human's life around, probably not anywhere close. It won't give you as much to talk about at parties as donating to something sexy like an X-Prize—maybe a short nod from the other rich. And if you threw away all concern for warm fuzzies and status, there are probably at least a thousand underserved existing charities that could produce orders of magnitude more utilons with ten million dollars. Trying to optimize for all three criteria in one go only ensures that none of them end up optimized very well—just vague pushes along all three dimensions. He's got this phrase "shut up and multiply" which to him means "do the math and don't worry about ethics" but my brain confuses it with "go forth and multiply" and it ends up seeming like some quasi-biblical way to say "go gently caress yourself". And that linked to this article. 
quote:Most people chose the dust specks over the torture. Many were proud of this choice, and indignant that anyone should choose otherwise: "How dare you condone torture!" quote:Trading off a sacred value (like refraining from torture) against an unsacred value (like dust specks) feels really awful. To merely multiply utilities would be too cold-blooded - it would be following rationality off a cliff... He really cannot understand why the hell people would choose a vast number of people to be mildly inconvenienced over one person having their life utterly ruined. But the BIG NUMBER. THE BIG NUMBER!!!! DO THE ETHICS MATH! And if you were wondering if this is a cult: quote:And if it seems to you that there is a fierceness to this maximization, like the bare sword of the law, or the burning of the sun - if it seems to you that at the center of this rationality there is a small cold flame - edit: AATREK CURES KIDS posted:Does anyone know if Yudkoswky still wants to do roleplays with people over IRC where his character is an untrustworthy superintelligent AI who wants to be released from containment? Djeser fucked around with this message at 00:45 on Apr 20, 2014 |
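To make the Pascal's Mugging worry from the top of this post concrete, here is a minimal sketch of how a naive expected-value maximizer gets mugged. Everything in it is hypothetical: the function name, the idea of pricing harm in dollars, and the example exponents are all stand-ins, since 3^^^^3 itself cannot be written down. It works in log10 so the huge numbers stay representable.

```python
import math


def naive_agent_pays(log10_probability: float,
                     log10_victims: float,
                     log10_harm_per_victim: float,
                     price: float) -> bool:
    """Return True if a plain expected-value calculation says to pay the mugger.

    Expected harm = probability * victims * harm per victim, computed in log10.
    The agent pays when the expected harm exceeds the price demanded.
    """
    log10_expected_harm = (log10_probability
                           + log10_victims
                           + log10_harm_per_victim)
    return log10_expected_harm > math.log10(price)


if __name__ == "__main__":
    # Hypothetical numbers: a 1-in-10^100 chance the mugger is telling the
    # truth, 10^1000 simulated victims, one dollar of harm each, $5 demanded.
    print(naive_agent_pays(-100, 1000, 0, 5.0))   # the big number swamps the tiny probability
    # Same tiny probability, but only 10^50 victims.
    print(naive_agent_pays(-100, 50, 0, 5.0))
```

The only thing that changes between the two calls is the size of the claimed tragedy. A mugger who is free to name any number can always name one large enough to flip the answer, which is the problem described above.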

|

|

|

http://lesswrong.com/lw/1pz/the_ai_in_a_box_boxes_you/ Wow, that is one hell of a threat

|

|

|

|

This has to be some of the saddest pseudo-academic, pseudo-religious, pseudo-ethical nerd poo poo I've ever seen.

|

|

|

|

Control Volume posted:http://lesswrong.com/lw/1pz/the_ai_in_a_box_boxes_you/

Yudkowsky really, REALLY likes that kind of argument. It showed up like three times in the post I made. He thinks that in any possible situation, a very large number of repeated trials makes up for effectively impossible scenarios.

quote:"Give me twenty bucks, or I'll roll these thousand-sided dice, and if they show up as all ones, I'm going to punch you."

Djeser fucked around with this message at 01:00 on Apr 20, 2014 |

|

|

|

And no one has countered his arguments by proposing to construct an AI that will digitally clone copies of people to shake in their boots for the real people? How many utilons will that cost?

Djeser posted:Yudkowsky really, REALLY likes that kind of argument. It showed up like three times in the post I made. He thinks that in any possible situation, a very large number of repeated trials makes up for effectively impossible scenarios.

This only makes his insanity official.

Lead Psychiatry fucked around with this message at 01:26 on Apr 20, 2014 |

|

|

|

Phaedrus posted:Weakly related epiphany: Hannibal Lecter is the original prototype of an intelligence-in-a-box wanting to be let out, in "The Silence of the Lambs"

Yudkowsky posted:When I first watched that part where he convinces a fellow prisoner to commit suicide just by talking to them, I thought to myself, "Let's see him do it over a text-only IRC channel."

I dunno, I think if you weren't a psychopath your hypotheticals wouldn't all begin with "an incredibly intelligent AI chooses to torture you in the cyber-afterlife for all eternity". That, and if you weren't a psychopath you wouldn't find yourself in positions where you need to say "I'm not a psychopath".

Djeser posted:Yudkowsky really, REALLY likes that kind of argument. It showed up like three times in the post I made. He thinks that in any possible situation, a very large number of repeated trials makes up for effectively impossible scenarios.

This is exactly how he argues. For some reason he always scales up only one side of the decision but not the other. In your example, he increases the number of rolls to a billion billion, but keeps the price to prevent them all at a flat $20. In the Dust Specks vs Torture example, he says that torture is right because if everyone were offered the same decision simultaneously and everyone chose the dust specks then everyone would get a lot of sand in their eye, which I guess would be bad... but ignores that if they all chose torture, then the entire human race would be tortured to insanity for fifty years, which seems much, much worse than "everyone goes blind from dust".

Yudkowsky's areas of "expertise" aren't limited to AI, game theory, ethics, philosophy, probability, and neuroscience. He also has a lot to say about literature!

Yudkowsky posted:In one sense, it's clear that we do not want to live the sort of lives that are depicted in most stories that human authors have written so far. Think of the truly great stories, the ones that have become legendary for being the very best of the best of their genre: The Iliad, Romeo and Juliet, The Godfather, Watchmen, Planescape: Torment, the second season of Buffy the Vampire Slayer, or that ending in Tsukihime. Is there a single story on the list that isn't tragic?

We need a hybrid of

|

|

|

|

HEY GAL posted:He did write an excellent takedown of the Neo-Reactionary movement though.

Yeah I will give Yvain credit for not being as bad as Yudkowsky and not being a horrible person. This is a low bar to clear, but whatever, he did it.

|

|

|

|

I'm in on the ground floor for this one. Seconding the suggestions that people who know what Yudkowsky is babbling about write effortposts taking him down. I'm a second year CS student, so I know at least a little bit, especially regarding Maths: I'll help if I can. Suffice to say, Yudkowsky's Bayes fetish really trips him up a lot. Will also update with the stupid things my Yudkowsky-cultist classmates do. To start with, they think polyphasic sleep cycles are anything other than a stupid idea invented by hermits who don't talk to people.

|

|

|

|

He really doesn't get that expanding things in different dimensions can't always be compared, does he?

|

|

|

|

The real problem here is the idea that dust is substantially equivalent to torture, that both exist on some arbitrary scale of "suffering" that allows some arbitrary amount of annoyance to "equal" some arbitrary amount of torture. They don't. You don't suffer when you get a paper cut any more than you are annoyed by the final stages of cancer, even though both scenarios involve physical pain. The reason that non-Yudkowskyites pick dust over torture is that that is the situation in which a person is not tortured.

Sham bam bamina! fucked around with this message at 03:00 on Apr 20, 2014 |

|

|

|

Sham bam bamina! posted:The real problem here is the idea that dust is substantially equivalent to torture, that both exist on some arbitrary scale of "suffering" that allows some arbitrary amount of annoyance to "equal" some arbitrary amount of torture. They don't. You don't suffer when you get a paper cut any more than you are annoyed by the final stages of cancer, even though both scenarios involve physical pain. The reason that non-Yudkowskyites pick dust over torture is that that is the situation in which a person is not tortured. The thing is, a Bayesian AI *has* to believe in optimizing along spectrums, more or less exclusively. That's why you'll see him complaining that you can't come up with a clear dividing line between "pain" and "torture", or "happiness" and "eudaimonia" or whatever. Like a previous poster said, his Bayes fetish really fucks with him. SolTerrasa fucked around with this message at 04:44 on Apr 20, 2014 |

|

|

|

Even if you accept the premise that dust and torture can be measured on the same scale of suffering, the logic STILL doesn't hold. Compare: 6 billion people all get a speck of dust in the eye worth 1 Sufferbuck each, or 1 person gets an atomic wedgie worth 6 Billion Sufferbucks. Even if you accept the utilitarian premise, they're STILL not equal! Just keep following the dumbass logic. It takes a person 1 Chilltime to recover from 1 Sufferbuck of trauma. That means 1 Chilltime from now, all 6 billion people will be at 0 Sufferbucks. On the other hand, in 1 Chilltime, the Bayesian Martyr still has 6 billion minus 1 Sufferbucks. So even in Yudkowsky's own bizarro worldview, he's an rear end in a top hat.
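Here is the Sufferbuck bookkeeping from the post above as a quick sketch. The 6 billion figure and the "1 Chilltime heals 1 Sufferbuck" rule are from the post. The function names and the cumulative tally (adding up what is still outstanding at each whole Chilltime) are assumptions added for illustration.

```python
PEOPLE = 6_000_000_000  # "6 billion", from the post


def remaining(initial_sufferbucks: int, chilltimes_elapsed: int) -> int:
    """Sufferbucks one person still carries, healing 1 per Chilltime."""
    return max(0, initial_sufferbucks - chilltimes_elapsed)


def dust_total(chilltimes_elapsed: int) -> int:
    """Outstanding Sufferbucks across everyone who got 1 speck each."""
    return PEOPLE * remaining(1, chilltimes_elapsed)


def wedgie_total(chilltimes_elapsed: int) -> int:
    """Outstanding Sufferbucks for the one person who got all 6 billion."""
    return remaining(PEOPLE, chilltimes_elapsed)


def cumulative(initial_sufferbucks: int) -> int:
    """Sum of one person's outstanding Sufferbucks at Chilltimes 0, 1, 2, ...

    That is initial + (initial - 1) + ... + 1, in closed form.
    """
    return initial_sufferbucks * (initial_sufferbucks + 1) // 2


if __name__ == "__main__":
    print(dust_total(0), wedgie_total(0))    # equal at the start
    print(dust_total(1), wedgie_total(1))    # one Chilltime later
    print(PEOPLE * cumulative(1), cumulative(PEOPLE))
```

Both options start at the same total, but one Chilltime later the dust side is at zero while the wedgie side has barely moved. The cumulative tally grows roughly with the square of the starting amount for the single victim, so the gap only gets worse the longer you count.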

|

|

|

|

Tendales posted:Even if you accept the premise that dust and torture can be measured on the same scale of suffering, the logic STILL doesn't hold. But Timeless Decision Theory says that it's wrong to consider future outcomes in this manner.

|

|

|

|

. q!=e, sorry

|

|

|

|

Tendales posted:Even if you accept the premise that dust and torture can be measured on the same scale of suffering, the logic STILL doesn't hold.

|

|

|

|

Djeser posted:

Even if you somehow accept his stupid argument of perfect information, this is still just a variant of the freaking grandfather paradox/self-negating prophecy. I'm sure to his mind it sounds all deep and revolutionary but it's basically just a load of deliberately contrived bollocks.

|

|

|

|

What brave soul wants to do a complete blind MOR readthrough for this thread? poo poo's so long he might actually be done with it by the time you finish, though, to be fair, its length is perhaps matched by the slowness of his writing.

|

|

|

|

Sham bam bamina! posted:The real problem here is the idea that dust is substantially equivalent to torture, that both exist on some arbitrary scale of "suffering" that allows some arbitrary amount of annoyance to "equal" some arbitrary amount of torture. They don't. I disagree, but this is a philosophical point so it's not worth running in circles about it. Let's go back to mocking these people for believing an infinitely smart robot can hypnotize a human being solely through text.

|

|

|

|

Control Volume posted:http://lesswrong.com/lw/1pz/the_ai_in_a_box_boxes_you/

I could see that working in context, aka an AI using it in the moment to freak someone out. Add some more atmosphere and you could probably get a decent horror short out of it. Stuff only gets ridiculous when it goes from 'are you sure this isn't a sim?' from an imprisoned AI right in front of you to a hypothetical god-thing that might exist in the future that probably isn't real but you should give Yudkowsky money just in case.

What I don't understand is why his AIs are all beep boop ethics-free efficiency machines. Is there any computer science reason for this when they're meant to be fully sentient beings? They're meant to be so perfectly intelligent that they have a better claim on sentience than we do. Yudkowsky has already said that they can self modify, presumably including their ethics. Given that, why is he so sure that they'd want to follow his bastard child of game theory or whatever to the letter? Why would they be so committed to perfect efficiency at any cost (usually TORTUREEEEEE)?

The fucker doesn't want to make sure the singularity is ushered in as fast and peacefully as possible. He wants to be the god-AI torturing infinite simulations and feeling  over how ~it's the only way I did the maths I'm making the hard choices and saving billions~. Which is really the kind of teenage fantasy the whole site is.

|

|

|

|

Djeser posted:"Well, the box is already full, or not, so it doesn't matter what I do now. I'll take both." <--This is wrong and makes you a sheeple and a dumb, because the AI would have predicted this. The AI is perfect. Therefore, it perfectly predicted what you'd pick. Therefore, the decision that you make, after it's filled the box, affects the AI in the past, before it filled the box, because that's what your simulation picked. Therefore, you pick the second box. This isn't like picking the second box because you hope the AI predicted that you'd pick the second box. It's literally the decision you make in the present affecting the state of the AI in the past, because of its ability to perfectly predict you.

I swear I've read about almost this exact concept only related to quantum physics, so this isn't even an original idea. This is the best article I could find describing the experiment. It would probably help if I could remember what it was called.

Essentially you have two pairs of entangled particles that you send through a system such that one half of each set is caught by a detector and the other half is passed on to a "choice" area, where the other halves are either entangled (thus connecting all four particles) or not. The key is that the choice step affects what is measured in the measurement step, even though the measurements are taken BEFORE the choice is made. If the final particles were entangled, then the measurements taken earlier will show entanglement, but if they weren't, then the measurements will just show two independent states.

It's basically the same concept, only without the convoluted setup of needing to have a perfect AI capable of predicting exactly what another person will say. Of course it's quantum physics so you can't just scale it up to normal sized interactions either.

|

|

|

|

|


|

Difference is that entanglement makes perfect sense and the AI scenario does not. I am not sure if this is a product of this thread or from LW's awkward wording, but your actions DO NOT affect the AI filling the box or not. The AI bases its decision on the actions of simulation-you, which is not present-you, because the simulation is a simulation and done in the past. Maybe I'm just not a rational ubermensch like Yudkowsky but I don't see what time has to do with this at all.
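A toy version of the box setup, written the way this post describes it: the AI runs simulation-you first, fills the box, and only then does present-you choose. This is a sketch under assumptions, not anyone's official model. The payoff amounts, the `Policy` type, and the `play` function are all hypothetical, and the "perfect prediction" is modelled simply as calling the same decision function twice.

```python
from typing import Callable

# A policy is whatever decision procedure "you" run: it returns
# "one_box" (take only the second box) or "two_box" (take both).
Policy = Callable[[], str]

SMALL_PRIZE = 1_000        # hypothetical amount in the box you always get
BIG_PRIZE = 1_000_000      # hypothetical amount the AI may put in the second box


def play(policy: Policy) -> int:
    """Run the scenario in strict time order and return the payout."""
    # Step 1 (the past): the AI runs simulation-you and fills the second box.
    predicted = policy()
    second_box = BIG_PRIZE if predicted == "one_box" else 0

    # Step 2 (the present): real-you chooses. The box is not touched again.
    choice = policy()
    if choice == "one_box":
        return second_box
    return second_box + SMALL_PRIZE


if __name__ == "__main__":
    print(play(lambda: "one_box"))
    print(play(lambda: "two_box"))
```

Nothing in step 2 writes to anything from step 1. The payout depends on the choice only because both steps call the same `policy`, which is the point made above: the AI's decision comes from simulation-you, and time never has to run backwards for the numbers to line up.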

|

|

|