
Hey, let's play an Atari 2600 game!

We all remember the Atari 2600. It came out in the late 1970s and had games that looked kind of like this... and then it went under in the Great Videogame Crash of 1983, and videogames were dead until the NES brought in a new renaissance three years later.

This is a filthy lie. The Atari 2600 wasn't discontinued until 1992 and received some level of commercial development all the way through its lifespan. It was a last-gen also-ran by the mid-1980s, but even so, about half the games I remember most fondly from the console are post-1983. That includes Solaris, which came out in 1986, the same year that Super Mario Brothers and The Legend of Zelda did. It looks rather nicer than the earliest games.

It's also kind of interesting because it's technically beatable, and it's also in that weird anti-sweet spot where it's too simple to get a real remake, and too complicated to work as a casual game.

History

In 1979, an Atari employee named Doug Neubauer wrote a game for the Atari 400 (a full-scale home computer) called Star Raiders. It was a mix of first-person space combat and galactic patrol not unlike the old minicomputer Star Trek games. (For the modern incarnation of those, Netrek has had you covered for a while.)

Some time later, Atari decided they wanted to do a similar game as a tie-in to the movie The Last Starfighter, but that fell through. The project targeting the home computer market was ultimately released as Star Raiders II, and the project targeting the Atari 2600 (a one-man job from the original Star Raiders developer, Doug Neubauer) became Solaris.

Goals of the LP

There will be two strands within this LP thread, interleaved. The first strand is about playing the game and has the following goals:

Format of the LP

I'm hoping to get at least one game update in per week, and one tech post each week as well until I run out of topics. If I have a session where the update is pretty much one "normal" playthrough, I will also post a video at the end. The only thing you'll miss if you stick with the screenshots will be some kickass sound effects and a sense of how everything fits together in realtime. That's actually quite a bit, so you'll want to watch the videos.

Glitches

Solaris has some glitches. I will show off the ones I know of, but when I'm building a route to win the game, I won't rely on them. Every other systematic run I've found exploits them, but as far as I know I didn't trigger any when I beat it myself a while back. I hope to prove it can be done glitchless as part of this run.

All right, let's rock

Buckle up, folks. We're going to break a game in half live.

Gameplay Strand

ManxomeBromide fucked around with this message at 10:41 on Aug 25, 2016


I am not the only one with an interest in this stuff. Other posters in the thread have delivered a bounty of further reading and information of their own.

ManxomeBromide fucked around with this message at 06:18 on Aug 27, 2016


Hell yes. Chokes' thread left me antsy for more retro computer goodness.


Also, for those of you who are generally interested in making old computer systems dance to tunes they were never really intended to dance to, you should definitely also check out Prenton's Commodore Format Covertapes thread and Chokes McGee's LP of COMPUTE!'s Gazette. Those are both for the C64, a generation after this, but Solaris was competing with the stuff from the Gazette directly while the Commodore Format stuff is what you get when the C64 tries to stay relevant in an Amiga and SNES world.


Oo, I remember playing this on my brother's 2600 back in the day. I also remember getting killed a lot while accomplishing pretty much nothing and having a vague sense that there was more to the game than eight-year-old me could figure out. I'll be interested to see where a more systematic approach to the game will lead.


Part 1: Mission of Mercy

Here's the official story for Solaris straight from the manual:

"The Zylons are back—those spaceway sneaks, villains of Venus, Saturnian scoundrels! They're swarming through the galaxy in huge forces, attempting another takeover. They've got to go! And we need YOU to go get 'em."

We'll be defying the spirit of our orders and thus actually have a go at carrying out that mission of mercy. We start out on a nameless Federation planet, and blast off dramatically.

That accomplished, we find ourselves at the map screen, which shows our current quadrant. We can't enter starfield sectors. We're the X next to the big star that means "Federation planet". Various enemy groups are scattered about the quadrant: generic attack groups, Kogalon Star Pirates, Flagship fleets, and Blockaders.

There's also a JUMP counter at the bottom of the screen. That counts down in realtime, continuously. Every time it hits zero, mobile enemy fleets change their position. They generally will move to attack Federation planets, and if they manage to stay a full cycle there, they will destroy it. That's bad, for reasons that will soon become apparent.

But still, we're on a mission of mercy here. It wouldn't do to go murder dudes right away. Let's fly to an empty sector of space and see if we somehow left the lost planet of Solaris under a couch cushion or something.

This cues a HyperWarp sequence! These are actually kind of neat. Your engines start revving up, and while that happens, your ship shifts in and out of focus. You need to adjust your flight so that the ship stays "in focus": the more in focus your ship is at the moment the warp takes effect, the better your fuel economy and the less downtime you'll have once you reach wherever you're going. What's actually happening is that the game draws your ship in two different places on alternating frames.
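To make the focus trick concrete, here's a minimal sketch of the idea. It's my own toy model, not Solaris's actual code: I'm assuming the two copies sit symmetrically around a center point, with a hypothetical `focus_error` standing in for how far out of focus you are. The same model also shows why a video encoder that keeps only every other frame loses one of the two copies.

```python
def ship_positions(center_x: int, focus_error: int, frames: int) -> list[int]:
    """Horizontal position of the ship on each of `frames` consecutive frames.

    Toy model: even frames draw the ship at center_x - focus_error and odd
    frames at center_x + focus_error, so a perfectly focused ship (error 0)
    is drawn in the same place every frame.
    """
    return [center_x - focus_error if f % 2 == 0 else center_x + focus_error
            for f in range(frames)]


def drop_to_30fps(frames: list[int]) -> list[int]:
    """Naive 60 -> 30 FPS conversion: keep every other frame, discard the rest."""
    return frames[::2]


if __name__ == "__main__":
    sixty = ship_positions(center_x=80, focus_error=6, frames=8)
    print(sixty)                 # [74, 86, 74, 86, 74, 86, 74, 86]
    print(drop_to_30fps(sixty))  # [74, 74, 74, 74]: the odd-frame copy is gone
```

On a real TV both copies get drawn 30 times a second each, so your eye sees a double image; the naive 30 FPS video only ever sees one of them.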
This becomes more obvious if I deliberately mess with the focus along the way. I've had to slow this down to 50Hz, because animated GIFs don't seem to play right any faster than that, so the in-game image looks a bit cleaner than this, but this gives the general flavor.

If you're unlucky you can also crash into planets, which makes them explode and costs you a little fuel. I hope that wasn't the Lost Planet of Solaris, or we will never hear the end of it back at Federation HQ. (You can also blast planets, which one-shots them.)

OK, we've done what we need to to keep up appearances. Let's go murder some dudes. We warp off to take on the generic attack group.

Most of the game will be spent fighting enemies in these sorts of sequences. We fly around and get buzzed by various types of enemies, hoping to get the drop on them before they do the same to us. The pink guy is a "Mechanoid", and it's good to fight them first because they get meaner the longer an engagement goes on. The TIE-fighter-looking guys are "Kogalon Star Pirates", and they're basically Mechanoids that start out as assholes. On the plus side, they're just as likely to blast other enemies as they are to blast you. Everyone else is better at staying on target.

Once we clear out the basic enemies, a Flagship appears. Taking him out will generally end the combat. It launches those little red diamond guys ("Distractors") that zip around, interfere with our shots, and can eat our fuel if they ram us. They're annoying but mostly ignorable. Our radar kind of shows where some of the enemies are, and the numbers on the left and right estimate distance and direction. I've never found these particularly reliable in a combat situation.

After cleaning up that generic attack group, we go hunt down the Flagship group too. It's moved a step towards the Federation planet because we've spent enough time that a Jump has happened. This attack group is basically the same deal, but there are more flagships in the group.
I was just on a peaceful reconnaissance mission of mercy, your Honor, and that planet was coming right for me. I had no choice.

While we're at it, checking at the bottom of the screen, it seems we're at half fuel. If we run out of fuel, we explode, and exploding is bad. (The flags over to the left of the fuel are our lives count.) Let's go refuel. To do that, we chart a course back to a Federation planet.

The citizens of the planet wave jauntily at us as we go past. Our radar is showing a docking station ahead, and this is quite a bit more accurate than our radar display was in combat.

We're good to go, and we've eliminated all actual threats in the first sector. (The remaining enemy groups are sentries guarding quadrant bottlenecks or exits and will never move.)

CLICK HERE FOR VIDEO

We've just had a ton of exposition, but the game is actually pretty fast-paced. CLICK THE LINK ABOVE to see all this play out in realtime. ALSO NOTE that you must set the quality to 720p60 OR THE HYPERWARP ANIMATIONS WILL NOT LOOK RIGHT. One disadvantage of shifting the display every other frame is that when video encoders drop to 30FPS, half the objects disappear.

NEXT TIME: We explore the adjacent quadrants, and maybe find some new enemy and mission types!


Holy crap, they managed to squeeze this out of an Atari 2600?


This is the worst recreation of the classic Lem novel/Tarkovsky movie I have ever seen.


Solaris: great Atari game, or the the greatest Atari game? Seriously this is really impressive for a 2600 joint. gschmidl posted:This is the worst recreation of the classic Lem novel/Tarkovsky movie I have ever seen. Yeah they really strayed from the source material.


You can probably sort of duplicate the effect in 30fps by doing frame blending, since that's basically what they were doing. This game looks neat tho.
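For the curious, here's a rough sketch of what that frame blending would do. It assumes each frame is just a list of pixel brightness values for one scanline, which is a big simplification (a real encoder works on whole images), and the function name is made up. Averaging each pair of 60 FPS frames into one 30 FPS frame keeps both copies of the ship, each at half brightness, which is roughly how the flicker reads to the eye on a real TV.

```python
def blend_pairs(frames: list[list[float]]) -> list[list[float]]:
    """Turn 60 FPS frames into 30 FPS frames by averaging each consecutive pair.

    Each frame is a list of pixel brightness values (0.0 = black, 1.0 = full).
    A trailing unpaired frame, if any, is dropped.
    """
    blended = []
    for a, b in zip(frames[0::2], frames[1::2]):
        blended.append([(pa + pb) / 2 for pa, pb in zip(a, b)])
    return blended


if __name__ == "__main__":
    even = [0.0, 1.0, 0.0, 0.0]  # ship drawn at pixel 1 on even frames
    odd = [0.0, 0.0, 0.0, 1.0]   # ...and at pixel 3 on odd frames
    print(blend_pairs([even, odd]))  # [[0.0, 0.5, 0.0, 0.5]]
```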


Oh hell yes. I had an Atari 2600 and this game (as well as a lot of others) when I was about 7, and I never had any idea what I was doing in it, so I can't wait to see the rest. Anyone watching this, I encourage you to go find yourself an emulator and play this game. You won't get it just from watching. The way the ship controls and whether or not you hit the enemies is something you've just got to feel. Cathode Raymond posted:Solaris: great Atari game, or the the greatest Atari game?


Is this the most beefed up variant of Star Raiders (action-y Star Trek) from the 80s? https://www.youtube.com/watch?v=3_VDM8nC9sM


Tech Post 1: Introducing the Atari 2600 VCS

Before we really dig into how Solaris does what it does, let's take a brief tour of just what the Atari 2600 can do in the first place. My primary reference here is the Programmer's Reference Guide from 1979, which is still, as near as I can tell, the primary reference for homebrew developers.

The Atari 2600 was the most popular and successful console in the second generation of game consoles. The first generation were devices like the Magnavox Odyssey. The first-gen consoles were made entirely of logic circuits, and to the extent that you could make them play different games, you did it by tuning the circuits or replacing plugboards that changed how they were wired. You also had devices that were custom circuits that could, say, play Pong.

The great insight that led to the second generation of consoles was that instead of a circuit that played one game or a set of related games, you could put a microprocessor in the console, hook up detachable ROM chips to its address bus with a modular cartridge system, and let one console play any game your developers could come up with. You could even release more after launch and they'd just work! So let's start our tour with the details of this genuinely revolutionary innovation.

CPU and RAM

The Atari 2600 uses a variant of the same 6502 chip that we know and love from the Commodore 64, the Apple II, and the NES. The stock 6502 chip has 16 address pins, so it can address 64 kilobytes (2^16 bytes) of memory, which can be backed by RAM, ROM, or special memory locations that talk to I/O ports. It's also got some pins for receiving external signals: RESET, Interrupt ReQuest (or IRQ), and Non-Maskable Interrupt (or NMI). But it's 1977 here, and pins are really expensive, so Atari went with the cheaper 6507 model.
This was, in silicon, identical to the 6502, but it had fewer pins, so it couldn't do as much: there was no way for the chip to send or receive everything it was actually capable of. In particular, the 6507 keeps only 13 of the 6502's 16 address pins, so it can only address 8 kilobytes (2^13 bytes) of memory. It also removes the ability to receive IRQ or NMI signals, but since those are usually used for precise timing or I/O information, that's no great loss.

The 2600 splits up its 8KB address space to give 4KB to the cartridge ROM (every address with the top address line, A12, set) and the other 4KB to the RAM and the I/O registers. 4KB is a bit cramped, but it's still actually pretty decent. For those of you who followed along with Chokes McGee's COMPUTE!'s Gazette LP, the only games that cracked 8KB were the extremely text-heavy BASIC games, and even the really sophisticated games like Crossroads 2 were more like 6KB.

Solaris is 16KB. It uses a special cartridge that holds four 4KB banks of ROM, and if you try to read from certain locations on the ROM, it will swap which of the four the CPU sees. (The code that does this has to be replicated at the same place in every bank, or the CPU will suddenly find itself reading garbage for code.) This general technique is called bankswitching, and the NES in particular made incredibly heavy use of it. The Atari's use of the technique was far more modest, but it does at least get us out of the 4KB prison and lets us have significantly larger games.

Where things get a bit nuts is the RAM. While it's possible to pack extra RAM into a cartridge, this wasn't generally done and Solaris doesn't do it. Instead, it is restricted to the Atari 2600's native RAM, which is a princely 128 bytes. Not kilobytes. Bytes.

This is even crazier than it sounds. The 6502 series chips (and the 6507 is no exception here) kind of assume that you have access to at least 512 bytes of RAM, because the chip itself hardcodes certain capabilities into that region.
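To make the address juggling concrete, here's a toy model of how the 6507's 13 address lines get carved up. It's a sketch, not an emulator, and the class and method names are made up. The decoding follows the standard 2600 memory map as I understand it: A12 selects the cartridge, and below that, A7 and A9 pick between the TIA, the 128 bytes of RAM, and the rest of the RIOT chip (joystick ports, switches, timer). The bankswitching part assumes Atari's usual 16KB scheme, often called "F6", where touching addresses $1FF6-$1FF9 selects bank 0-3; I believe that's what Solaris uses, but treat it as an assumption.

```python
class Atari2600Bus:
    """Toy model of the 6507's view of memory with a 16KB bankswitched cart.

    Assumption: Atari's standard 16KB ("F6") scheme, where any access to
    $1FF6-$1FF9 selects ROM bank 0-3.
    """

    HOTSPOTS = {0x1FF6: 0, 0x1FF7: 1, 0x1FF8: 2, 0x1FF9: 3}
    BANK_SIZE = 4096

    def __init__(self, rom: bytes):
        if len(rom) != 4 * self.BANK_SIZE:
            raise ValueError("expected four 4KB banks")
        self.rom = rom
        self.bank = 0  # hypothetical starting bank; real hardware may differ
        self.ram = bytearray(128)

    @staticmethod
    def decode(addr: int) -> tuple[str, int]:
        """Map a CPU address to (chip, offset within that chip)."""
        a = addr & 0x1FFF          # only 13 address lines reach the bus
        if a & 0x1000:             # A12 set: cartridge
            return "CART", a & 0x0FFF
        if not a & 0x0080:         # A7 clear: TIA (low 6 bits pick a write register)
            return "TIA", a & 0x003F
        if not a & 0x0200:         # A7 set, A9 clear: the 128 bytes of RAM
            return "RAM", a & 0x007F
        return "RIOT_IO", a        # A7 and A9 set: ports, switches, timer

    def _maybe_switch(self, addr: int) -> None:
        """Select a new ROM bank if addr is one of the bankswitch hotspots."""
        bank = self.HOTSPOTS.get(addr & 0x1FFF)
        if bank is not None:
            self.bank = bank

    def read(self, addr: int) -> int:
        chip, offset = self.decode(addr)
        value = 0  # TIA and RIOT reads are not modeled here
        if chip == "CART":
            value = self.rom[self.bank * self.BANK_SIZE + offset]
        elif chip == "RAM":
            value = self.ram[offset]
        self._maybe_switch(addr)
        return value

    def write(self, addr: int, value: int) -> None:
        chip, offset = self.decode(addr)
        if chip == "RAM":
            self.ram[offset] = value & 0xFF
        self._maybe_switch(addr)


if __name__ == "__main__":
    bus = Atari2600Bus(bytes(range(256)) * 64)  # 16KB of dummy ROM data
    bus.write(0x01FF, 0x42)       # first push onto an empty stack...
    print(hex(bus.read(0x00FF)))  # ...shows up at 255: 0x42
    print(bus.decode(0x0100))     # ('TIA', 0): 256-383 mirrors the TIA, not RAM
    bus.read(0x1FF8)              # touch a hotspot
    print(bus.bank)               # 2
```

The key point the model captures is that the 6502's hardwired stack page (256-511) lands partly on TIA mirrors and partly on the same 128 bytes of RAM the zero page uses.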
The first 256 bytes (the "Zero Page") are faster to access, and the pointer-based addressing modes you'd use for array or struct access need their pointers stored in the zero page. Bytes 256-511 are reserved for the CPU's execution stack. When you call a subroutine in machine code, the return addresses are stored by the CPU in this region, and it counts down from 511. The push/pop instructions also work with this stack.

The 2600's architecture deals with this by putting the I/O registers at locations 0-127 and its 128 bytes of RAM at 128-255, and then mirroring that whole arrangement at 256-511. So the stack region 384-511 is the very same RAM as 128-255, and if you push a value onto an empty stack, it looks to the CPU like it gets written to locations 511 and 255 simultaneously. Atari 2600 programs don't make heavy use of function calls, since every return address takes two bytes out of that same 128-byte budget.

I/O: Joysticks and Config Switches

This, on the other hand, is a refreshingly simple relic of an earlier era. There are a bunch of switches on the console that correspond to the NES's SELECT and START buttons, and a few other switches for difficulty selection and display type and such. These are simply treated as memory locations, and the CPU can look at those memory locations to discover the position of the switches.

The Atari joystick is basically a D-pad with one button, and the plug is the 9-pin trapezoidal port that you can find on really old mice and similar serial devices on the PC. This isn't serial, though. The joystick has switches (up/down/left/right/fire), and each switch gets its own pin. Checking the joystick state is again as simple as just looking at the contents of a "memory" location, and it all fits in a byte with room to spare.

Sound

The Atari was never intended to play music. It's got 2 channels, and you can set either one to 1 of 15 "instruments" and then give it 1 of 32 frequencies across five octaves. Those frequencies come from dividing a base rate by 1 through 32, so they aren't spaced like a musical scale at all. Not exactly fine control, but the "instruments" aren't all in tune with each other, so you can get a few tunes out if you're really clever and lucky.
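A quick way to see why music is so hard: a 5-bit divider gives you the base pitch divided by 1, 2, 3, ... 32, which spans exactly five octaves (32 = 2^5) but only lands on equal-tempered notes by luck. The sketch below measures how far each available pitch sits from the nearest semitone. The base frequency is a placeholder; the TIA's audio clock is roughly 31.4 kHz on NTSC machines and each "instrument" divides it down further, so only the ratios matter here. The function names are my own.

```python
import math


def divider_pitches(base_hz: float) -> list[float]:
    """All 32 pitches a 5-bit frequency divider can produce: base/1 ... base/32."""
    return [base_hz / (n + 1) for n in range(32)]


def cents_off_semitone(freq: float, ref: float) -> float:
    """Distance in cents from freq to the nearest note of an equal-tempered
    scale anchored at ref (100 cents = one semitone).

    Positive means the pitch is flat of that note, negative means sharp.
    """
    cents_below = 1200 * math.log2(ref / freq)
    return cents_below - 100 * round(cents_below / 100)


if __name__ == "__main__":
    pitches = divider_pitches(1000.0)  # placeholder base frequency
    print(math.log2(pitches[0] / pitches[-1]))  # 5.0 octaves of range
    for n, f in enumerate(pitches[:8]):
        print(f"divide by {n + 1:2d}: {f:7.2f} Hz, "
              f"{cents_off_semitone(f, pitches[0]):+6.1f} cents off")
```

Dividing by a power of two always gives a clean octave, but something like divide-by-5 comes out noticeably sharp, and the gaps between neighboring pitches get huge at the top of the range.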
Generally, though, this is for sound effects alone, and it's honestly not bad. It sounds better and integrates better than the Apple II or VIC-20, but it's less flexible. The NES and Commodore 64 blow it out of the water, but those are next-gen, so we'd expect no less.

Graphics

Here's where all sanity ends, and here's where we're going to go party. The Atari 2600 does not exactly have a notion of graphics, at least as we know them. The hardware has only a vague model of sprites or backgrounds. Where you would expect a GPU, the Atari has a device it calls the Television Interface Adapter, or TIA for short.

It is very strongly tied to the broadcast television/composite video standard, and the game software itself is responsible for ensuring that the output is appropriate for display. Third-generation systems like the NES would have their equivalent chip handle the transformation of a display into television data on its own, and use the interrupt mechanisms to report to the CPU that it was time to compute a new frame. On the Atari, the software has to throw the VBLANK and VSYNC signals manually, and count out each of the scanlines in the VBLANK period and the display itself. The TIA has no frame buffer at all; it only knows about one scanline at any given time. Drawing a display on the 2600 is a matter of setting up the graphics registers for the current scanline, waiting for the stuff you want drawn to actually be sent to the TV, and then changing all the values that need changing for the next one. 192 times a frame, 60 times per second.

So it does have a notion of a background color, and of a foreground (the "playfield"). The playfield is 20 bits wide, with each bit covering 4 pixels, so it spans half the screen. It can be either repeated or mirrored on the other side. If you want an asymmetric playfield, you need to alter the playfield registers at exactly the right time mid-scanline so that the left side has been drawn but the right hasn't started being drawn yet.
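Here's a sketch of how one scanline's worth of playfield comes out of the three playfield registers, based on my understanding of the TIA's bit order: PF0 contributes only its top four bits (drawn bit 4 first), PF1 is drawn most-significant bit first, and PF2 is drawn least-significant bit first. That's 4 + 8 + 8 = 20 bits, each 4 pixels wide, for the left 80 pixels; the right half either repeats or mirrors them. The function names are my own.

```python
def playfield_bits(pf0: int, pf1: int, pf2: int) -> list[int]:
    """The 20 playfield bits for the left half of the screen, left to right."""
    bits = [(pf0 >> b) & 1 for b in range(4, 8)]         # PF0: bit 4 .. bit 7
    bits += [(pf1 >> b) & 1 for b in range(7, -1, -1)]   # PF1: bit 7 .. bit 0
    bits += [(pf2 >> b) & 1 for b in range(8)]           # PF2: bit 0 .. bit 7
    return bits


def playfield_scanline(pf0: int, pf1: int, pf2: int, mirror: bool) -> str:
    """Render one 160-pixel scanline: '#' for playfield, '.' for background."""
    left = playfield_bits(pf0, pf1, pf2)
    right = left[::-1] if mirror else left  # mirrored or repeated right half
    return "".join(("#" if bit else ".") * 4 for bit in left + right)


if __name__ == "__main__":
    # Only the leftmost playfield bit (PF0 bit 4) is set.
    mirrored = playfield_scanline(0x10, 0x00, 0x00, mirror=True)
    repeated = playfield_scanline(0x10, 0x00, 0x00, mirror=False)
    print(len(mirrored))                   # 160
    print(mirrored[:4], mirrored[-4:])     # #### ####
    print(repeated[80:84], repeated[-4:])  # #### ....
```

The odd bit orders look arbitrary, but they're why mirroring is cheap in hardware: the right half is just the same 20 bits read back in reverse.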
There are also two sprites as we know them, 8 pixels wide and 1 pixel tall. These are called "player 0" and "player 1", and to get sprites that are bigger than one pixel tall you just change their values in between scanlines. The Atari 2600's horizontal resolution was 160 pixels, which is kind of laughable but turns out to actually be the absolute maximum you can have without getting color distortion on an NTSC standard definition television. (Since we're controlling each scanline individually, the vertical resolution is as good as it gets until the HD era, which basically means it's doing as well vertically as the PS2. Good times!)

Player sprites can also be horizontally magnified so that each pixel in the design is 1, 2, or 4 pixels wide. You can also replicate the player sprite across the screen, and if you're real precise in your timing, shift the graphics mid-scanline so they aren't all clones of each other. (Of course, it's not so bad if they are all clones of each other, either; that's pretty much exactly how Space Invaders worked.)

In addition to the player sprites, each player has a "missile", which is a one-bit sprite that's the same color as the player. Like the player sprite, it can be horizontally expanded (to 1, 2, 4, or 8 pixels wide) or replicated. (To make it taller, you'd just leave the missile enabled for more scanlines.) The playfield also has a missile, but it's called the "ball". You can really tell that this system was designed pretty much exclusively to implement Combat and Pong. But even this is less confining than it sounds: if you look at games like Yars' Revenge, you can pretty readily pick out how these components are used to build a more sophisticated game without any cheating.
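The player graphics themselves are just one byte per scanline. Here's a small sketch with names of my own invention: each set bit becomes a lit pixel, drawn most-significant bit first (the TIA can also flip this), and the size setting stretches each bit to 1, 2, or 4 pixels. As far as I know, 8-pixel-wide stretching is only available for missiles and the ball, so the sketch rejects it. Stacking a different byte on each scanline is how a sprite gets its height.

```python
def player_scanline(grp: int, width: int = 1, reflect: bool = False) -> str:
    """Render one scanline of an 8-bit player graphic as '#' and '.' characters."""
    if width not in (1, 2, 4):
        raise ValueError("player pixels can only be 1, 2, or 4 pixels wide")
    # Normally bit 7 is the leftmost pixel; reflection reverses the order.
    order = range(8) if reflect else range(7, -1, -1)
    return "".join(("#" if (grp >> b) & 1 else ".") * width for b in order)


if __name__ == "__main__":
    # A hypothetical three-scanline sprite: one byte per scanline.
    for row in (0b00011000, 0b00111100, 0b01111110):
        print(player_scanline(row, width=2))
    # ......####......
    # ....########....
    # ..############..
```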

Sprites are fine-positioned by "nudging" them a few pixels left or right at the start of a scanline, and each nudge blacks out the first 8 pixels of that line. Nudge on only some scanlines and you get a ragged "comb" of black marks down the left edge of the screen. This comb effect is ugly, so most developers would do the nudge every single scanline, nudging sprites by 0 if they didn't need to move. This produced a display with a black bar down the left side of the screen, and that's way less obnoxious. Solaris follows best practices and does this.

Each player sprite has its own color, which it shares with its missile, and the playfield has a color that it shares with the ball. These are selected from a palette of 128 colors (16 hues, one of them gray, at 8 brightness levels each) evenly spaced across the color wheel, which is unironically really good and actually better than pretty much anything else until you hit the 16-bit era. Also, since you're changing stuff each scanline, you can just as well change colors then too, which is why you'll see a lot of horizontal striping effects on Atari graphics.

The TIA also handles collision detection for us, and it's pixel-perfect. Any collision that happens is latched until we explicitly clear it, so we don't have to check every scanline for a collision; we can just draw both full sprites and check once we're done.

A Weapon For Sanity

Placing sprites and playfields both requires writes with exact timing to I/O registers, repeatably, in order to get a stable display. Being off by a single CPU cycle will cause the display to lurch over by 3 pixels. Getting the wrong number of cycles on the frame as a whole will cause our television display to roll. How can we do anything here?

We have two things to help us out, and they're both tremendously powerful. First, there's a generic programmable timer we can use for measuring just about anything we want. (It actually lives in the RIOT chip, alongside the RAM and the I/O ports, rather than in the TIA.) Traditionally, we use this to count out the VBLANK period, so that we can spend the entirety of the VBLANK period computing what the next frame should be. Once that's done, we simply wait for the timer to wind down, shut off VBLANK, and start generating the display again.
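Some back-of-the-envelope numbers for that timer, assuming the usual NTSC frame layout (3 lines of VSYNC, 37 of VBLANK, 192 visible, 30 of overscan, for 262 in total) and 76 CPU cycles per scanline. `TIM64T` is the real name of the timer register that counts down in 64-cycle steps; the Python names are mine. The takeaway is that VBLANK gives you about 2,800 cycles of game logic per frame, and the timer has to be set a little short because it only counts in 64-cycle chunks.

```python
CYCLES_PER_SCANLINE = 76  # 228 color clocks per line, 3 color clocks per CPU cycle
NTSC_FRAME = {"vsync": 3, "vblank": 37, "visible": 192, "overscan": 30}


def timer_ticks(scanlines: int, cycles_per_tick: int = 64) -> int:
    """Largest TIM64T-style value that runs out without overrunning `scanlines`."""
    return (scanlines * CYCLES_PER_SCANLINE) // cycles_per_tick


if __name__ == "__main__":
    total_lines = sum(NTSC_FRAME.values())
    print(total_lines)                                  # 262
    print(total_lines * CYCLES_PER_SCANLINE)            # 19912 cycles per frame
    print(NTSC_FRAME["vblank"] * CYCLES_PER_SCANLINE)   # 2812 cycles of VBLANK
    print(timer_ticks(NTSC_FRAME["vblank"]))            # 43
```

Loading 43 gives 2,752 cycles, 60 short of the full 2,812, and that leftover slack is exactly the imprecision the program still has to clean up before it starts drawing.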
But there's still going to be some wobble there, because the tightest possible loop on the 6502 is like five cycles long. Even if we had IRQ capability (which, as you may recall, we don't, because the 6507 removed those pins to save money), an interrupt has to wait for the current instruction to finish and then spends seven more cycles starting up, so there's just as much wobble on those as on any other instruction.

Here's where the Atari system hands us a weapon to even the odds, and this weapon is a thermonuclear bazooka. By writing to memory location 2 (a register called WSYNC), the CPU is shut down until the very end of the current scanline, and then it's started up again right at the start of the horizontal blanking period. This is 100% reliable and cycle exact and perfect. If you want to count out N scanlines, just write to that location N times. If you want to place a sprite 45 pixels from the start of the blanking period, have your write instruction happen fifteen cycles after a write to memory location 2, since each CPU cycle is 3 pixels.

This is ungodly useful. I've messed around with graphical effects a bit on both the 2600 and the Commodore 64, and I'd have killed for an equivalent to this on the C64. (There are ways. But it's much harder.)

A Final Note

Everything I've described is pretty much howling madness from the point of view of any modern software developer. But I do think that being so unconstrained improved the Atari's longevity. It didn't cease production until 1992, and it had commercial software made for it all the way through. Its competitors of the time, like the Intellivision, sank without a trace when the NES and friends came along, but the 2600 was sufficiently oblivious of its environment that its very weaknesses let it stay relevant longer, because every time someone found a new trick, it was like a hardware upgrade.

ManxomeBromide fucked around with this message at 07:54 on Aug 27, 2016


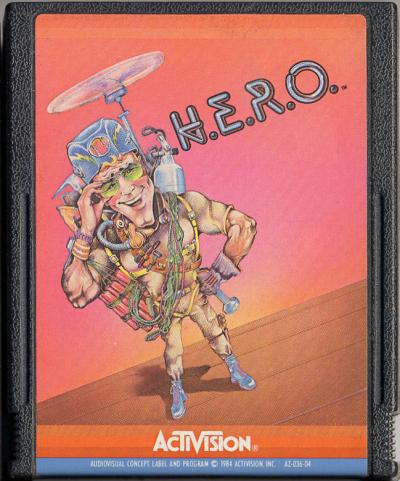

Tiggum posted: Anyone watching this, I encourage you to go find yourself an emulator and play this game. You won't get it just from watching. The way the ship controls and whether or not you hit the enemies is something you've just got to feel.

The Internet Archive has a "Console Collection" with a lot of Atari games playable in the browser.

"Well, it's not HERO or River Raid, so no, not the greatest."

I wouldn't argue with anyone who picked those. (I had those on the C64 growing up; my 2600 shortlist was Solaris, Dragonfire, Encounter at L-5, Yars' Revenge, Thunderground, and a number of decent ports like Joust and Stargate.) And as for HERO, who can argue with this face? (Image credit: AtariAge.)

SelenicMartian posted: Is this the most beefed up variant of Star Raiders (action-y Star Trek) from the 80s?

I was vaguely aware that there was a game called Star Raiders, but I was only familiar with the Atari 2600 version, which was stripped down but needed a special controller anyway. I didn't realize that it was originally for the Atari 8-bit home computers, which makes a lot more sense.

Now that I've looked into this... Solaris is literally a sequel to Star Raiders. It has the same designer (Doug Neubauer) and the same enemy list. It was originally going to be worked into a licensed game for The Last Starfighter, but that apparently fell through. The Atari computers got a different, also-fell-through Last Starfighter game that actually got the name Star Raiders II, but Neubauer wasn't involved with that one. (This does also explain why the cover art for Solaris is recycled artwork from Star Raiders. I'll go update the history bit in the OP.)

All that said, I believe the most beefed-up 80s version of Star Raiders was probably Elite.


ManxomeBromide posted:Tech 1: Introducing the Atari 2600 VCS For some more insight into the pitfalls and insanities of 2600 programming, I found Fixing E.T. for the Atari 2600 a fascinating read, even if you don't read machine code at all.


ManxomeBromide posted:Technical details There is also Racing the Beam which attempts to taxonomize/contextualize types of gameplay available on the system through the lens of its hardware constraints. Looking forward to more!


ManxomeBromide posted:We've just had a ton of exposition, but the game is actually pretty fast-paced. CLICK THE LINK ABOVE to see all this play out in realtime. ALSO NOTE that you must set the quality to 720p60 OR THE HYPERWARP ANIMATIONS WILL NOT LOOK RIGHT. One disadvantage of shifting the display every other frame is that when video encoders drop to 30FPS, half the objects disappear.


Alright, so I'm not going crazy. As soon as I clicked the video in your first update, and saw the graphics and heard the sound effects, I had a ridiculously quick onset of nostalgia harkening back to when I played our 2600 as a kid (before we inherited our uncle's NES... towards the end of the 16-bit era... we were somewhat poor). Here's the thing, though: I've never played Solaris. What I did play, and actually still have the cartridge of, is Radar Lock.  I had a difficult time even remembering the name because I couldn't find it on Wikipedia and instead had to scour archive.org's Atari 2600 page until I saw a screenshot. Here's the Giant Bomb page listing for Radar Lock, though, which gives a pretty good synopsis: it came out three years after Solaris in 1989, and Doug Neubauer used the same engine with some tweaks (hence why I got a huge dose of deja vu after hearing the "blast off" sound effect in the video). The game is less graphically "complicated" (har har) than Solaris, as it doesn't have any fancy warp maps or planets to crash into, but it's technically ambitious in other ways, such as requiring the use of two joysticks in order to fully utilize the control scheme. (The "two player" mode is even analogous to an F-14 pilot/copilot setup!) There seems to be literally only one video on YouTube showing this game, which is kinda funny, but the player doesn't show off any of the missiles: https://www.youtube.com/watch?v=k4RguF-vcg4 Anyway, didn't mean to hijack the thread, but I figure it's pretty relevant and thought I should share in case you haven't heard of it.


Lots of great links from everyone here! The E.T. patch is worth reading just for the discussion of why it had a mixed reception at the time; Zelda shows up again there as an example of success vs. failure. I actually have a copy of Racing The Beam and it's a fantastic book. It sort of has a notion of game-as-literature going on, not in the sense of having a story, but as being an artifact that is a snapshot of a certain era and mindset. The history of Yars' Revenge was probably my favorite part. I hadn't heard of Radar Lock, but wow, it's real clear that a bunch of UI elements are just being reskinned. Check out how the fuel display is remade as guns there. And man. 1989. You know what Atari also released in 1989? S.T.U.N. Runner, that's what. The tech spread in game technology was completely crazy in the late 80s/early 90s.


I have nothing useful to add, but I'll be following this thread with fascination!


ManxomeBromide posted:And man. 1989. You know what Atari also released in 1989? S.T.U.N. Runner, that's what. The tech spread in game technology was completely crazy in the late 80s/early 90s.


pumpinglemma posted:...is that running on a 2600?! No, STUN Runner was arcade. Still, it looks like an early ps1 title but it came out in 1989.


pumpinglemma posted:...is that running on a 2600?! Emphatically not; it's an arcade game. The graphics, sound, pseudo-3D-background, etc. wouldn't be feasible on the 2600.


Reading the history of early consoles and the programming madness needed to make them work is always a bit of an edification.


Yeah, if you want even an idea of the tech spread of the time (One hell of an interesting time, although the modern day is actually no less interesting), check out this port comparison. All of those systems would still have been contemporary with each other. Glad to see another old-system dissection LP (Apologies I let the BBC BASIC one fall down, go boom... If I don't get this job tomorrow, I'll be trying to resurrect it, among other projects) , and looking forward to more tech posts!


Part 2: Distress Call

Last time, we cleared out our first sector and refueled. Sharp-eyed viewers will note that the score and hangar location are different, because I did a restart after last time. While I'm getting a handle on the game and introducing all the mechanics, I'll just be playing normally. We aren't trying to rip the game in half yet.

Anyway, with the threat to the Federation Planet in this sector dealt with, a path is now clear for us to go south. Warping to empty space is a no-op, like we saw last time, but when we warp to a space that opens up on the edge, like this one, once we come out of warp we end up in the adjacent quadrant.

That's a lot of enemies. We've also got two new galactic features: Cobra Fleets, a new kind of enemy, and Zylon worlds, the counterpart to Federation worlds. Notice that since we entered from the north, we can't actually reach the Zylon world. We'll have to fly around through some other sectors if we want to reach it.

In the meantime, let's go check out Blockaders. We saw some of those in the first sector, but didn't engage them. Blockaders, as it turns out, are assholes. There's a huge swathe of fast-moving mines, and they're like planets except they murder you if they hit you instead of costing a trivial amount of fuel. My best strategy for this is to stay relatively still, open fire on anything that looks like it might be flying into my travel path, and hope for the best.

It turns out hope is not a strategy. One interesting mechanic here is that if you take a glancing hit, then instead of dying you lose a bunch of fuel and your targeting computer goes on the fritz. I actually take two glancing hits in the image above, and if you look closely you can see my fuel plummet each time. The targeting computer doesn't get any more broken, though.

After a while I make it through the minefield.
I'm supposed to fight a flagship now, but I have no idea where it is. The arrows and numbers on my targeting computer should in principle still lead me to it, or at least suggest a direction...  ... but they're not particularly helpful. The X means I'm aligned with it, but there's nothing at all visible here.  There he is. And my scanner is telling me he's to my left when he's clearly to my right. What the Hell, targeting computer.  Anyway, once we get a visual on him he's easily dispatched. Time to fly off to the Federation world in this sector and refuel and repair. Docking will restore our targeting computer as it restores our fuel. But wait!  On the way to the Federation planet, an alarm rings and our score display gets SCANNER overlaid on it. That means the planet is under attack! To the rescue! Rescuing worlds works the same as refueling: we just fly to them as usual. But once we're there, instead of waving bystanders, we have waves of Raiders...  and Targeters.  We have to kill them all, or we'll need to warp back here and try again. That's really bad, because the 40-second time limit we're under is actually pretty tight, especially if the alarm came in while we were in mid-combat. If you have a second controller, that stick is for the navigator, and they can abort combats and fly off to the rescue at any point... but this is super-glitchy and I'm not going to actually do that in the standard runs. We'll cover that when I start showing off glitches. Also worth noting in these gifs is that the enemies and I are actually trading shots that cancel each other out. Firing defensively is very important. You can also use up and down to alter your speed on planetary surfaces; I slow down to trade shots with those Targeters and make sure I don't miss any. 
Once the enemies are all dead the citizens come back out and I can dock just like I would normally. While we're weapons-free on Federation planets, be aware that blasting the docking bay will destroy it and render the sector useless for repairs or refuels. So if you make a habit of shooting two shots at the last enemy, sooner or later the docking bay will spawn right behind that last enemy and get taken out by your extra shot.  Things are looking a bit rough for this world. Let's go clear out the immediate threats.  Ow  Dammit      So, that happened. (Full video of this session here, though apart from the planetary defence and the minefield it's more of the same.) That's also why, when we start seriously trying to beat the game, we'll be using savestates. You only have to go the tiniest bit on tilt for things to go terribly, terribly wrong. NEXT TIME: We restart again, survive rather longer, cover the remaining mission types, and start seeing how big this Galaxy really is.

|

|

|

|

Flagships can kill you? I thought they only launched Distractors.

|

|

|

|

Tiggum posted:Flagships can kill you? I thought they only launched Distractors. After a bit more play and studying of the videos, I think if you're fighting a flagship battlegroup the subsidiary flagships are allowed to open fire upon you.

|

|

|

|

Yup, that's some serious ATARI sounds

|

|

|

|

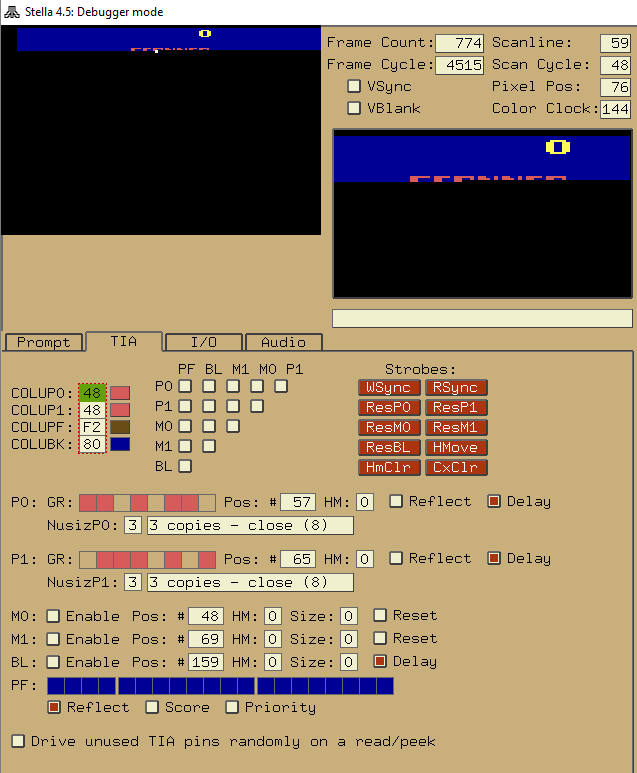

Tech Post 2: The Penultimate Technique For our first trick, let's take a look at the map screen...  ... and very specifically, this.  As we covered last time, the Atari only has two sprites, and they're 8 pixels wide, so two sprites side by side give us 16 pixels. This score and the "SCANNER" header are both much wider than that. We also definitely aren't doing this with the playfield, because the playfield's elements are a minimum of four pixels wide each, which looks like this:  So what's going on? Let's fire up Stella's debugger mode and find out. Stella's debugger mode is awesome:  One of the advantages of having such basic hardware is that the debugger can capture pretty much the entire state of the system in a single screen. That's all of RAM there in the upper right, because it's not really all that tough to lay out 128 bytes cleanly. Then we've got the state of the graphics registers in the lower left, and a disassembly in the lower right. We can flip through tabs for a little extra info, but there's rarely cause to change from this configuration. The part that makes this amazing is the upper left, which shows the screen as we know it. As we advance, we can see a little white dot work its way across the screen. That bright white dot is the simulated CRT beam. The buttons in the upper right next to the RAM let us step forward an instruction at a time, a scanline at a time, or a frame at a time, so we're in good shape for time control. We also see the previous frame in black and white, going color as the next frame is drawn over it, but this isn't consistent and I haven't actually worked out what makes this stick around or not. Let's flip through to where the SCANNER graphic is being generated.  The basics of the trick fall right out of the sprite registers here. You can see that sprites 0 and 1 are both set to "three copies, close together", and that they are also 8 pixels apart from one another (57 vs 65). 
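A quick sanity check of that geometry, as a small Python sketch. It assumes the positions 57 and 65 read off the debugger and a 16-pixel start-to-start spacing for the "close together" copies; the constant and function names are mine, not Stella's or the TIA's.

```python
# Toy check of the sprite layout read off Stella's debugger.
# Assumptions: x positions 57 and 65 are taken as-is from the debugger,
# sprites are 8 pixels wide, and in "three copies, close together" mode
# each copy starts 16 pixels after the previous one.
SPRITE_WIDTH = 8
COPY_SPACING = 16


def copy_starts(x: int) -> list[int]:
    """Left edges of the three copies of a sprite positioned at x."""
    return [x + i * COPY_SPACING for i in range(3)]


def covered_pixels(x: int) -> set[int]:
    """Every screen column drawn by the three copies of one sprite."""
    return {start + dx for start in copy_starts(x) for dx in range(SPRITE_WIDTH)}


p0 = covered_pixels(57)
p1 = covered_pixels(65)

assert not (p0 & p1)  # the two sprites never overlap
columns = sorted(p0 | p1)
print(columns[0], columns[-1], len(columns))  # 57 104 48

# Which sprite draws each 8-pixel slot, left to right:
print("".join("0" if start in p0 else "1" for start in range(57, 105, 8)))  # 010101
```

The gap between copies is exactly one sprite wide, so the other sprite slots neatly into it and the two together cover one unbroken 48-pixel run.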
Having the replicated copies "close together" means that there's a sprite's worth of space between each copy of the sprite. We've got the two sprites interleaved 010101, so that there are 48 pixels worth of graphics all in a row. This does leave the minor issue of how you actually make all 48 of those pixels individually controllable, as opposed to three copies of two sprites. The simple solution would be to reassign each sprite three times a scanline. We have all the time in the world to set up the first 16 pixels, so we'd just have to do four more writes during the draw to make it work. We've got eight pixels worth of time to do each write, too, since the sprites alternate. This is a pretty good plan, and it almost works. There are two issues we have to deal with. The first is that accessing memory or graphics registers is really slow. Reading or writing a value in RAM costs nine pixels worth of time. Reading a value out of the cartridge ROM costs twelve pixels worth of time. All of these are longer than the width of a single 8-pixel sprite copy. That said, nine pixels isn't too bad. If we have four write instructions in a row, we've got plenty of slack as long as we time our first write well. The other problem is much nastier: we want to write 6 individual values, because we're trying to create a full 48-pixel sprite here. If we want to write 4 different values in a row, we'd need to have four registers pre-loaded with values, ready to write. (The fastest instruction to load a register with a value costs six pixels worth of time; that plus the nine pixels for writing costs us 15 pixels, which guarantees at least one missed cue.) The 6502, alas and alack, only has three registers. We would ordinarily be out of luck, but we've got one extra trick up our sleeves. If you check that list of TIA registers in the screencap up there, you'll notice that a mode called "Delay" is on. What "Delay" does is enable two extra registers inside the TIA itself. 
When it's on, the graphics that are displayed for a sprite are not the graphics that you most recently wrote to its graphics register, but the graphics that you wrote the time before that. (More precisely, each sprite's hidden "old" register picks up that sprite's newest value whenever the other sprite's graphics register is written.) Once Delay is enabled, we can preload not merely the first sixteen pixels worth of graphics, but the first twenty-four: two bytes on display and a third waiting in the wings. Then we hold the last three bytes in the 6502's three registers, write them alternately to the player 1 and player 0 graphics registers as the beam crosses each copy, and finish by writing garbage to player 0's register to flip the final byte onto the screen. That's still four writes during the draw, but only three distinct values, which is exactly what the 6502 can hold. We can then use the rest of the scanline to prepare the next line of graphics and preload the bytes needed to repeat the trick on the next line. The timing's a little bit tight, but it's not too tough to embed this technique in a loop that takes exactly 76 machine cycles per iteration. This results in expending exactly one scanline's worth of time per iteration and lets you just keep repeating the process for as many lines as you need. And that's this trick! This trick was old when Solaris was written; it was worked out in 1981 or so and was traditionally used to display six-digit scores. Discussions of it I found online even referred to it as "the venerable six-digit score trick." Everything published during the Atari 2600's lifespan required you to place your 48-pixel graphics at a fixed location on the screen; in 1998 someone put together enough pre-existing techniques to let you actually move a 48-pixel-wide supersprite around the screen. It's so inconvenient to do this that it's really more of a curiosity, though. That just leaves one question, really: why does this Delay mode even exist in the first place? The basic idea here, I think, was that the original designers didn't really expect you to change the graphics every scanline. You kind of have to if you're doing anything fancy, but they expected you to keep sprite graphics constant for pairs of scanlines. 
An individual pixel on the 2600 is nearly twice as wide as it is tall, so doubling up pixel rows gives you something that looks a little more square. I think the original idea here was that you could set the Delay mode and your sprite would move down one scanline without you having to alter the graphics update code to check every scanline. Hence the documented name of the register: VDEL (Vertical DELay). But they decided to implement it as two secret extra graphics registers, and a few years after release one of Atari's developers noticed that he could exploit that within a single scanline. As a result, this control register was used in drat near everything made for the system, but for this 48-pixel sprite trick and not for its intended purpose. By 1986, when Solaris was published, you could be forgiven for thinking this was the intended purpose of the mode all along.
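To make the Delay behaviour concrete, here's a toy Python model of the two sprites' graphics latches. It's a sketch under stated assumptions, not an emulator: it ignores exact beam timing and just records what each copy would show when the beam reaches it, and the class and function names are made up for illustration. The cross-coupling rule (a write to one sprite's graphics register updates the other sprite's hidden "old" register) is how the TIA's VDELP0/VDELP1 mode is usually documented. It also does the cycle arithmetic: one CPU cycle is three pixels of beam travel.

```python
# Toy model of the TIA's vertical-delay (VDEL) sprite latches.
# Assumptions: VDELP0 and VDELP1 are both on; each sprite has a "new"
# register (what you write) and an "old" register (what gets displayed);
# storing to one sprite's graphics register copies the OTHER sprite's
# "new" value into its "old" register. Beam timing is abstracted away.

COLOR_CLOCKS_PER_CPU_CYCLE = 3   # one CPU cycle = three pixels of beam travel
CYCLES_PER_SCANLINE = 76         # 76 * 3 = 228 color clocks per scanline


def pixels(cycles: int) -> int:
    """Convert CPU cycles into pixels (color clocks) of beam travel."""
    return cycles * COLOR_CLOCKS_PER_CPU_CYCLE


# LDA #immediate takes 2 cycles; STA to a TIA register (zero page) takes 3.
LOAD_THEN_STORE = pixels(2) + pixels(3)   # 15 pixels, wider than one 8-pixel copy


class DelayedSprites:
    def __init__(self) -> None:
        self.new = [0, 0]
        self.old = [0, 0]

    def write_grp(self, player: int, value: int) -> None:
        """Store to GRP0 (player 0) or GRP1 (player 1)."""
        self.new[player] = value
        other = 1 - player
        self.old[other] = self.new[other]   # the cross-coupled latch

    def shown(self, player: int) -> int:
        """With VDEL on, the sprite displays its "old" register."""
        return self.old[player]


def draw_48_pixel_row(row: list[int]) -> tuple[list[int], int]:
    """Return the six bytes the beam would display and the mid-draw write count."""
    assert len(row) == 6
    tia = DelayedSprites()
    # Before the beam arrives: preload the first three bytes.
    tia.write_grp(0, row[0])
    tia.write_grp(1, row[1])
    tia.write_grp(0, row[2])
    a, x, y = row[3], row[4], row[5]   # the 6502's three registers

    displayed = [tia.shown(0), tia.shown(1)]   # first copy of each sprite
    writes = 0
    # Alternate GRP1/GRP0 as the beam crosses each copy; the last store is
    # garbage whose only job is to push row[5] into player 1's "old" register.
    for player, value in ((1, a), (0, x), (1, y), (0, a)):
        tia.write_grp(player, value)
        writes += 1
        displayed.append(tia.shown(1 - player))
    return displayed, writes


shown, writes = draw_48_pixel_row([0x11, 0x22, 0x33, 0x44, 0x55, 0x66])
print([hex(b) for b in shown], writes)   # ['0x11', '0x22', '0x33', '0x44', '0x55', '0x66'] 4
print(LOAD_THEN_STORE, pixels(CYCLES_PER_SCANLINE))   # 15 228
```

The point of the model is the register count: the four mid-draw stores only ever use the values held in `a`, `x`, and `y`, so three registers are enough once the TIA is doing the extra buffering.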

|

|

|

|

ManxomeBromide posted:That just leaves one question, really: why does this Delay mode even exist in the first place? Holy crap. It's hard to express just what a beautiful bit of computer engineering this is. Let's start with the chip: in 1977 cost was a big deal for microprocessors. Basically, the smaller your chip, the more chips you can fit on one wafer that comes out of your manufacturing process. Since cost is pretty much set per wafer, that means smaller chips are cheaper more or less in proportion to how much smaller they are. The 6502 is a 40-pin package, and by cutting down to a 28-pin package on the 6507 they could offer it at a lower price. But to do that they dropped 3 address pins, and ended up with an eighth of the addressable memory space (13 address lines reach 8kB, where 16 reach 64kB). If you looked at that now, the savings vs. lost capability would seem insane. The really, truly crazy thing is that it was justifiable in 1977 because ROM chips were so drat expensive that a game on the home market with more than 4kB of memory was laughably overpriced. By 1980 process improvements had made ROM chips a whole lot cheaper. That means that for most of the Atari's commercial career it used a chip based on functionally obsolete design tradeoffs. That the 1982 Atari 5200 (which did use the 6502) failed is a very interesting story, in large part about the 2600. Now, the delay mode is really remarkable from an intended-function vs. actual-use standpoint. Registers take up a lot of space and therefore money. The SNES' processor, two generations hence, only had four usable registers vs. the Atari's three. What the next generation did to address this problem (although it also added dedicated sprite-related hardware) was something called Direct Memory Access, which lets the device responsible for making the picture read RAM directly at a few specific addresses. The CPU sends an input signal to the peripheral, the peripheral reads the RAM, and you're good to go. 
But that requires close integration between the display device and the CPU, and the NES had 2kB of RAM (the 5200 had 16kB) vs. the 2600's 128 bytes. A simpler solution to synchronization problems with peripherals is to implement a buffer. Buffers are just parking spaces that let you load information before the associated device can act on it. So you get something like:

    [CPU]----A---->[ ][ ][ ][Device(not ready)]
    [CPU]----B---->[ ][ ][A][Device(not ready)]
    [CPU]----C---->[ ][B][A][Device(not ready)]
    [CPU]__________[C][B][A][Device(not ready)]
    [CPU]__________[ ][C][B][Device(ready)]
    [CPU]__________[ ][ ][C][Device(ready)]
    [CPU]__________[ ][ ][ ][Device(ready)]

The VDEL trick essentially does this for the space of one sprite. It's not really a buffer, though, because the point of a buffer is to allow asynchronous operation between two devices. What Atari actually did in 1982 with the 5200 is a mix of both strategies (not that weird) that used a separate ASIC that's almost its own microprocessor (kinda odd). I point this out because the trick of updating the display registers at just the right time (called "racing the beam" in late-70s cool speak) kept the price of the system a lot lower than it might have been. That was a big deal in making the 2600 a success. But the 2600 was built at the end of a period of price assumptions and is very, very limited compared to what was being sold just 5 years later. There are a lot of things Atari did to gently caress up the 5200 for themselves, but the fact that 2600 games kept coming that looked good and fresh (if not cutting-edge) really killed the market. So how awesome is the engineering in this game (and others like it)? So awesome that it ate the next generation in an era of some of the most rapid advances in computer hardware, and it's probably one of the main causes of the videogame crash of 1983. Centurium fucked around with this message at 23:06 on Jul 29, 2016 |
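The parking-space picture in that diagram is just a first-in, first-out queue. Here's a minimal Python sketch of it; `BufferedDevice` is a made-up stand-in for the peripheral, and nothing here models real 5200 or NES hardware.

```python
from collections import deque


class BufferedDevice:
    """Toy FIFO between a CPU and a slower device (hypothetical, for illustration)."""

    def __init__(self, capacity: int = 3) -> None:
        self.slots: deque[str] = deque()
        self.capacity = capacity
        self.received: list[str] = []

    def push(self, item: str) -> bool:
        """CPU side: park an item if there's room; False means the CPU must wait."""
        if len(self.slots) >= self.capacity:
            return False
        self.slots.append(item)
        return True

    def tick(self, ready: bool) -> None:
        """Device side: when ready, take the oldest parked item."""
        if ready and self.slots:
            self.received.append(self.slots.popleft())


dev = BufferedDevice()
for item in "ABC":          # CPU fills the buffer while the device is busy
    dev.push(item)
print(dev.push("D"))        # False: all three slots are taken
for _ in range(3):          # device catches up once it's ready
    dev.tick(ready=True)
print(dev.received)         # ['A', 'B', 'C']
```

The CPU never has to wait on the device until the buffer fills, which is the asynchronous decoupling the post describes; the VDEL latch is a one-slot version where the CPU still has to hit its timing exactly.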

|

|

|

Bonus Post: The Sweep of History I'm going to break from my plan on this one because a major topic in the thread discussion so far has been the incredible technical advancements through the 1980s and the disparities within them. So I went and thought of all the really iconic 8-bit technologies and their immediate competitors and looked up when they were all introduced. The result is a timeline that's honestly pretty mind-boggling, even if you lived through it. I didn't live through all of it, but enough that I'm seeing technologies show up way the Hell before they were supposed to. This timeline is a bit America-centric, but a number of foreign systems were important enough that news of them reached our shores. 1975: MOS Technology develops the 6502 chip, itself a descendant of an older Motorola chip, changed just enough to get Motorola's lawyers off their backs. It is a full-featured CPU implemented in under 10,000 transistors. This is an astonishing feat, even then. 1976: The Zilog Z80 is released, a software-compatible enhancement of an older Intel chip. It ends up being the 6502's main competitor for 8-bit stuff, but its presence in the game console world is somewhat lighter than you'd expect. 1977: The Atari 2600 is released. So is the Apple II. Both are 6502-based systems and both remain relevant far beyond the other machines of their generation. The Atari is usually considered a relic of the 1970s, but the Apple II's upgrades keep it the default competitor to machines introduced five years later. 1978: Intel releases the 8086, a 16-bit CPU. 1979: Motorola releases the 68000 chip, a design of 40,000 transistors that is also the first 32-bit CPU to be seriously used in the consumer space. It won't reach consumer machines for a while, but let's just note that we're still in the 1970s and the 32-bit era has already kind of begun. Intel, meanwhile, releases a version of the 8086 with fewer data pins called the 8088. 
1981: IBM decides to enter the personal computer market to compete with Apple, Commodore, and Atari. They have agreements with Intel for chip supply, so they go with the 8088. 1982: Commodore releases the Commodore 64, a 6502-based system. It's kind of a big deal. Atari releases E.T. the Extra-Terrestrial for the 2600, ships far more copies to retailers than it can sell, and refuses to accept returns of unsold product from retailers. Merchants blacklist videogames generally and Atari specifically. This is probably not the only cause of the Great Videogame Crash of 1983, but it's the incident I usually point to as the greatest contributing factor. 1983: Nintendo releases the Famicom in Japan, also a 6502-based system. It's also kind of a big deal. Apple releases an improved version of the Apple II, the Apple IIe. The IIe, the Famicom, and the Commodore 64 together basically define the 8-bit era, at least in the US. A 16-bit extension to the 6502, called the 65816, is developed. 1984: Apple releases the Macintosh, a system based on the Motorola 68k and incorporating notions of graphical user interfaces that were developed at Xerox PARC and which are now the default. 1985: The Famicom makes it to the US as the Nintendo Entertainment System, with its light gun and toy robot proving that it is totally not a videogame system like those Atari swindlers. Intel releases the 386, taking the IBM PC family's CPU line into the 32-bit era. This is the point where machine code written to execute on machines of the time can in principle run unmodified on modern processors. The Commodore Amiga and Atari ST are released, each another Motorola 68000-based 32-bit system with a modern-ish graphical user interface (Amiga Workbench and Digital Research's GEM system, respectively). Hardware manufacturers manage to cram a million transistors onto a single chip. A British company receives a prototype for a new kind of processor based on some research out of UC Berkeley. 1985 may, in all honesty, be the single most eventful year in computing. 
1986: Apple releases the Apple IIgs, a sort of bizarre hybrid between the Apple IIe and the Macintosh. It's a 65816-based system that ultimately wound up running a slow and ugly imitation of the Macintosh desktop, but at this point it looks more like a fancier IIe. 1987: IBM releases the Video Graphics Array technology, or "VGA". It'll take a few years for game developers to start using it by default, but the VGA 320x200x256-color mode is the iconic graphical mode for what we now think of as DOS Gaming. In the UK, the prototype from 1985 bears fruit and the Acorn Archimedes is released. This is the first consumer system powered by a true RISC processor: the Acorn RISC Machine, or ARM for short. It's a big deal, and it stays one. 1988: Sega releases the Mega Drive/Genesis, a Motorola 68000-based system intended to push game consoles into the modern world. 1989: Nintendo releases the Game Boy, a Z80-based handheld system. 1990: Nintendo releases the Super Famicom, a 65816-based system. The 16-bit era has unequivocally begun. The 8-bit world is still going strong, though: this is also the year that Super Mario Bros. 3 is released outside of Japan. The NES is really only in its middle age at this point. 1992: Mega Man 4 and 5 come out for the NES. The Atari 2600 and the Apple IIgs are formally discontinued. In the PC world, Alone in the Dark, Wolfenstein 3D, and Dune II are released, each founding a major genre. Ultima VII, Star Control II: The Ur-Quan Masters and Indiana Jones and the Fate of Atlantis also come out this year and are still considered classics of their own genres. Here's about where I'd call the 8-bit generation almost irrelevant. But not completely, because there's still... 1993: Kirby's Adventure is released for the NES. This is the latest-published 8-bit game that I can imagine still having wide appeal today. The Apple IIe is discontinued. 1994: Wario's Woods is published. It is the last commercial, officially licensed NES game published in North America. 
The Commodore 64 is discontinued. While the NES will survive another year in the US, and the Famicom actually wasn't formally discontinued until 2003, the 8-bit era is now indisputably a relic of the past. Meanwhile, in Japan, Sony releases the PlayStation console, a MIPS-based system. The 32-bit era of consoles has unequivocally begun. MIPS came out of Stanford's RISC research, a sibling of the Berkeley work that inspired the Acorn Archimedes back in '87. Speaking of which, this is also around when the ARM line introduces its ARM7 core. (Don't confuse it with ARMv7, version 7 of the architecture itself, which arrived about a decade later.) ARMv7 is kind of the 80386 of the ARM architecture; any 32-bit ARM code in the modern era is basically ARMv7 with some extra tuning or special features. The ARM family is also at least as important as the 386 for the purposes of this history: ARM7 and its successors power the GBA, the DS, the 3DS, and basically every mobile iOS or Android device out there. Actually, it's probably more relevant in the present day: we're only just now seeing the 64-bit variants coming to sweep the 32-bit systems away, while 32-bit x86 code has been the legacy mode for quite some time in the Intel world. So, there we go. 1975 to 1994, a 20-year period from the development of the 6502 chip through the last NES game published. In the interim, we had the entire era of 16-bit dominance and pretty much every kind of computer that wasn't a Mac or a PC. If you were trying to stay cutting-edge through the 80s, there was at least one shattering revolution or vastly influential product released every single year. The last revolution I can think of that we've had of this magnitude was the switch to fully programmable GPUs, which would show up on this kind of history as "generic pixel shaders become actually practical". Fabricating chips has continued to be a rush of desperate innovation, and we still haven't even begun to properly explore the power the past decade has put at our fingertips. The future is bright, but let us look back and realize that the past was a nova. 
ManxomeBromide fucked around with this message at 06:33 on Aug 27, 2016 |

|

|

|

The last three posts have been phenomenal, and I can't wait to read more.

|

|

|

|

At this point, someone could tell me they've worked out a way to port Doom to the 2600 and I wouldn't be that surprised. Everything I've ever read about programming it is wizards.

|

|

|

|

Some British additions, to make sure the diversity of the era gets across: 1980: Sinclair begins its official computer life with the ZX80. 1981: The BBC Micro is released as part of the BBC Computer Literacy Project. It gets some hilarious advertising, and is introduced to many schools in the UK. The ZX81 is launched. Americans might know it as the TS 1000. 1982: The Sinclair ZX Spectrum is released. 1983: The Acorn Electron is released, a cheaper alternative to the BBC line. Now pretty much all the 8-bit systems (including Commodore) and Apple are competing in the UK market. It makes for funtimes. 1984: The Sinclair QL is released. It doesn't do all that well, for various reasons. The ZX Spectrum+ follows later that year, and it's not much of a plus at all. AMSTRAD (Alan Michael Sugar TRADing) comes onto the scene with the CPC 464. 1986: The Sinclair ZX Spectrum 128 (for its amazing 128K!) finally arrives in the UK. It had actually been on sale in Spain since the previous year, but all the unsold ZX Spectrum+ stock delays the UK launch. This is also the year Sinclair gets bought out by Amstrad. 1987: The Acorn Archimedes, affectionately known among Brits as "The Archie", is released. Funnily enough, this is where ARM (Acorn RISC Machine) chips have their start. You know, those chips that are still used in many mobile phones. 1988: Amstrad goes into the portable PC market with the PPC512 and PPC640. 1990: The Amstrad GX4000 is released, one of the times Amstrad gets its nose bloodied. There are lots of reasons, but the main one is that it was crap, especially compared to the SNES and Genesis. The CPC line gets discontinued. 1992: The ZX Spectrum line is discontinued; Amstrad has better things to do in the then-rising PC market. The Archimedes line releases its last model, the Acorn A4000. 1994: The BBC Micro line gets discontinued, marking the end of the 8-bit era for the UK. The Archie is discontinued at some point between this and 1996. 
EDIT: It's amusing that the "port" of Doom to the Atari 2600 is mentioned. It was, in fact, a famous hoax. The Doom Wikia article is slightly out of date, as ports have been attempted; it's just that the only thing to come out of them, to my knowledge, is this. JamieTheD fucked around with this message at 12:04 on Jul 31, 2016 |

|

|

|

One minor quibble. The Famicom/NES did not use a 6502, but rather a Ricoh 2A03. The 2A03 was 6502-based, but had the Binary Coded Decimal hardware sliced off and an audio component grafted on.

|

|

|

|

|

Gnoman posted:One minor quibble. The Famicom/NES did not use a 6502, but rather a Ricoh 2A03. The 2A03 was 6502-based, but had the Binary Coded Decimal hardware sliced off and an audio component grafted on. I was intentionally fuzzing over that; I think the only machine named in that whole timeline that actually uses a real, actual, Part Number 6502 is the original Apple II, and maybe not even that. (The 2600 was the 6507, the C64 the 6510, the enhanced IIe the 65C02, which used a different kind of transistor and had some instruction set extensions.) The BBC Micro was also a 6502-series chip, but the Spectrums were all Z80s. The Archimedes was treated at the time as being basically Amiga-class, but the specs suggest it should have been able to outperform it. I think I'm going to need to collect everyone else's links and discussions for this too as a third strand.

|

|

|

|

So basically the 2600 -> 5200 transition was marred because developers were able to pull off more sophisticated code on the old system than on the new, stranger, more powerful one? It's interesting seeing just how viable the previous generation remained.

|

|

|

|

^^^^^ A big part of that problem was that Atari failed to launch the 5200 with what we know and groan about today as tech demo launch titles. Combine that with a lack of internal discipline to move Atari's own designers to 5200 games, and suddenly Atari had a console on shelves that they hadn't provided a compelling reason to develop for. Ouch. And then the market crashed. ManxomeBromide posted:Tech Post 3: The Sweep of History

So what's the deal with these instruction sets? Why should you care if they're reduced or not? Well, let's talk about a complex instruction set like x86. It uses commands that let you do, in one instruction, the things you regularly want to happen, like add register A to register B and store the result in memory. When we say instruction, this is the mnemonic you see in the debug window that shows lines of code. That's (more or less) human readable and lets you know what's going on (like ADD $1 $2 $3: add register one to register two and store the result in register three; this is not a real language). But to the machine, each instruction corresponds one-for-one to a pattern of logic highs and lows on its inputs that selects which portions of the CPU to use and what to do with the result. Real x86 instructions can be quite complex, but man, why would you want to write out every single stage of every single operation? Well, some smart folks started thinking about this. See, the things a microprocessor does don't all happen in one cycle. Without getting into too much detail, the computer has discrete stages where input signals pass through transistors and become results. Some smart folks in a bunch of places figured out that you could squeeze in speed by loading the first part of the next instruction before the last part of the previous instruction finished. But there's a limit to how much extra you can get out of that, because the hardware is built to minimize the time complicated instructions take, and so you have 'lock out' periods where you absolutely, positively can't load anything else before the current instruction passes a certain stage. Then those Californians and Subjects of Her Majesty got to thinking: what if you just removed all the instructions that fouled up your cramming? That cramming is called pipelining, and thus we have the Microprocessor without Interlocked Pipeline Stages instruction set. Anything slow, like division, gets kicked out of the main pipeline (early ARM chips left it out entirely; just do it in software instead). Each instruction does one thing. 
Add A to B, or add A to 125, in one cycle. Want to store that in RAM? Write an instruction for it. What you get is a processor that can get pretty close to taking in and putting out one instruction every clock cycle. So even if you're a bit more verbose in your assembly, your processor is just burning through those lines of assembly code. Interestingly, both are still competing designs (well, ARM and Intel. MIPS is a real thing, but I don't think there's much of note using it any more), but for completely different reasons than in this period. Reduced Instruction Set Computers made a lot of sense if you were taking the Atari approach and trying to cram critical operations into a tiny amount of time, like when you have to race the cathode ray beam. Complex Instruction Set Computers can be better (especially in this period) at a really broad range of tasks, especially if you need to do a bunch of high-complexity (to the processor) tasks regularly. The PlayStation hit a sweet spot where RISC was really good at video games. Now we have so drat many transistors cycling so quickly that no one really gives a drat (comparatively). Instead, RISC processors are attractive because the same hardware choices that let them run quickly also make it easier to cut their power draw. That's why ARM is so dominant in phones and light-duty computers (whatever your marketing department wants to call the thing you use to browse the internet and that's it). CISC computers are likewise cutting power consumption quickly, but they continue to favor doing more per instruction than a RISC architecture. How does this relate to our plucky 2600? Think about what ManxomeBromide was showing us about having to wait X clock cycles to read or write RAM, or to write to the TV controller's I/O registers. All the while, that cathode ray beam moves at its predestined rate, deaf to your excuses as your write is tardy. Gosh, wouldn't it be better if you could read/write memory faster? 
That's the fundamental pain that led people to work towards RISC architectures. And now you know. And knowing is half the battle. Centurium fucked around with this message at 05:28 on Aug 1, 2016 |
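The payoff of pipelining is easy to see with some back-of-the-envelope arithmetic. This is an idealized Python sketch, assuming a hypothetical five-stage pipeline where every stage takes one cycle; real chips, 1980s or modern, are messier, and the "stalls" parameter is just a stand-in for the lock-out periods described above.

```python
def cycles_unpipelined(n_instructions: int, n_stages: int) -> int:
    """Each instruction runs through every stage before the next one starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions: int, n_stages: int, stalls: int = 0) -> int:
    """Idealized pipeline: the first instruction takes n_stages cycles to get
    through, then one instruction finishes every cycle, plus any stall cycles
    (the 'lock out' periods) the hardware has to insert."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1) + stalls


n, stages = 100, 5
print(cycles_unpipelined(n, stages))              # 500
print(cycles_pipelined(n, stages))                # 104
print(cycles_pipelined(n, stages, stalls=50))     # 154
print(round(n / cycles_pipelined(n, stages), 2))  # 0.96 instructions per cycle
```

With no stalls you approach one instruction per cycle; every lock-out cycle eats directly into that, which is why designers wanted instruction sets that don't force them.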

|

|

|

|


|

This is all real interesting stuff, thanks to everyone who's contributing. I mean, all I can say about MIPS is that the annoying rabbit in Super Mario 64 is named after it.

|

|

|