
Paul MaudDib posted: Using it as a cache drive can thrash it pretty hard, depending on how much turnover it's getting. It's really hard to 'guess' what type of user a SHSC goon might be. Half of us are uber nerds that set up enterprise scenarios in our homes for fun. The other half are just normal people that want to play games and use Facebook.


| # ? Apr 19, 2024 21:27 |


redeyes posted: Yeah, I checked a friend's computer and it was in fact writing way, way too much. Maybe the new Metro app fixes it?


Combat Pretzel posted: The "new" Metro app is actually the old Chromium-based desktop client run through Project Centennial. Which is kind of odd, what with WinRT being able to run apps written in HTML+JS. The porting effort to the Edge runtime can't be that ball-breakingly hard. But no, ship a fat as gently caress Chromium runtime with it instead. One step forward, 10 steps back. Figures.


Is there a consensus on which is the best budget mATX AM4 mobo?


Palladium posted: Is there a consensus on which is the best budget mATX AM4 mobo?

I don't know if you will ever have a consensus, but it's probably one of the Asus, MSI, or ASRock offerings. I personally ended up with the ASRock Pro4 and it has been pretty good. The first set of RAM I purchased for it wouldn't run above 2400, but it wasn't on the QVL and I knew it might not work when I bought it. I ended up with some Vengeance LPX (also not on the QVL, but confirmed online that it would likely work). Other than that, it's functioned as one would hope.

If I remember correctly, MSI had very good VRM cooling and power delivery. The Asus had great power delivery but only above-average VRM cooling. Finally, the ASRock had the best VRM cooling, but the power delivery was only above average at best. The only reason I think that's relevant is that the socket is supposed to be supported for several more years, so if they don't move to AM4+, that extra overhead might be able to handle a bigger, more powerful chip down the line.


Palladium posted: Is there a consensus on which is the best budget mATX AM4 mobo?

No consensus, but I'd check out the ASRock AB350M Pro4.


Combat Pretzel posted: The "new" Metro app is actually the old Chromium-based desktop client run through Project Centennial. Which is kind of odd, what with WinRT being able to run apps written in HTML+JS. The porting effort to the Edge runtime can't be that ball-breakingly hard. But no, ship a fat as gently caress Chromium runtime with it instead.

That's still a fork they'd have to maintain separately.


Munkeymon posted: That's still a fork they'd have to maintain separately.

Also, there's generally this thing about common code.


The Chromium (really Blink) embedding APIs are much richer than those for Edge, which are basically enough for a help viewer and not an application. There's a reason nobody has done the work to rehost Electron on top of Edge, and it's not because nobody has whined about using 0.2% of the disk. I expect that the Westminster-hosted app will be totally new code, and much less capable. (Like all WinRT ports of popular applications.)


The WinRT apps being less capable is partly the fault of the developers, though. Sure, some of the sandboxing restrictions are a pain in the rear end, but that's on the storage and IPC side mostly. There's no reason not to be able to create a rich, functional app. WPF XAML apps had no issues emulating the Win32 UI controls and their complexity.


WinRT apps based on HTML/JS have much reduced capability compared to non-RT apps based on Blink. Just as with web-tech apps trying to build on top of Edge.


AMD/Newegg are bundling Quake Champions with R5/R7 CPUs now/soon. https://www.newegg.ca/Product/Product.aspx?Item=N82E16819113428

amdrewards.com posted: Campaign Period: Campaign Period begins August 22, 2017 and ends on February 15, 2018 or when supply of

Eyochigan fucked around with this message at 02:10 on Aug 23, 2017


Anyone have a good overview of socket/mobo compatibility for the past years of AMD CPUs? Preferably in one-ish page.


ufarn posted: Anyone have a good overview of socket/mobo compatibility for the past years of AMD CPUs? Preferably in one-ish page.

https://en.m.wikipedia.org/wiki/Comparison_of_AMD_processors


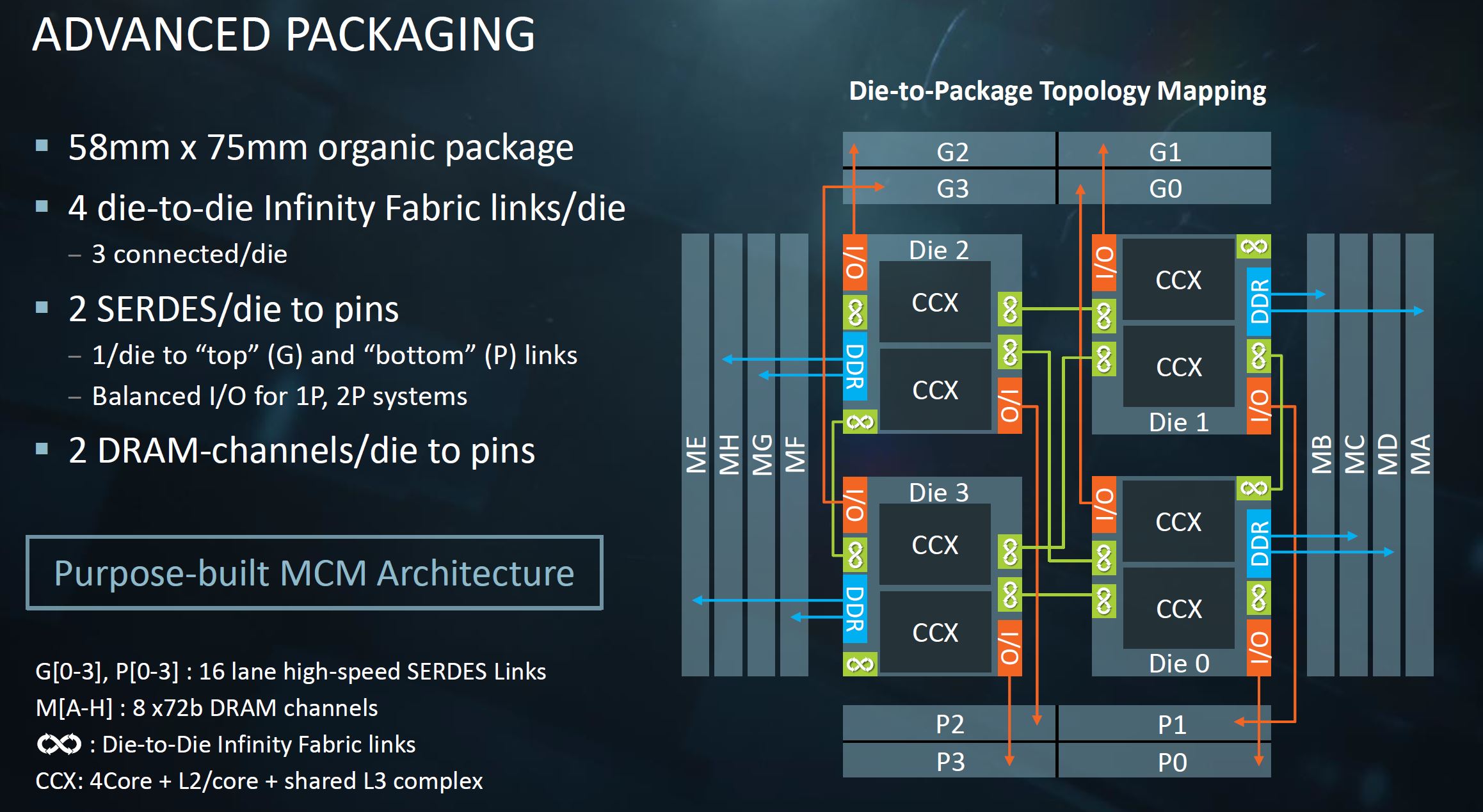

From AMD's Hot Chips presentation: Looks like EPYC could, in theory, scale beyond four dies. https://www.servethehome.com/amd-epyc-infinity-fabric-update-mcm-cost-savings/

Penalty for reaching across to another die's cache or memory controller is purely due to latency, not bandwidth, right?

quote: AMD claims that it costs 59% as much as it would to manufacture a MCM 32 core package versus a monolithic die. This includes the approximately 10% area overhead for MCM related components. And this is why we anticipate MCM GPUs.

SwissArmyDruid fucked around with this message at 04:15 on Aug 23, 2017


I'd like to say it's as much the density afforded by 14nm and smaller going forward that allows for all this fancy MCM stuff, especially for GPUs, because at 28nm, even if scaling is efficient, fuuuuckk that package size.


Speaking of package size, GamersNexus did an A/B test of coldplate sizes on Threadripper: https://www.youtube.com/watch?v=9v5B79vdiXU Unsurprisingly, full coverage matters a lot.


Seamonster posted: I'd like to say it's as much the density afforded by 14nm and smaller going forward that allows for all this fancy MCM stuff, especially for GPUs because at 28nm, even if scaling efficient, fuuuuckk that package size.

It's also so you can make designs that would be completely infeasible without MCM. An Epyc with a 16-core Ryzen refresh as the individual die would probably end up pushing the boundaries of the reticle size on modern commercial equipment, same as how the Fury cards were bumping right against the total possible die size restriction on the old 28nm process.

I picture a graphics card that consists of 2 or 4 cores that talk to a single stack of HBM2 apiece, so you can spread the thermal load out across a larger area and use substantially cheaper masks, with a much higher yield, because you're making 4 parts that are 160mm^2 vs. one part that's 550mm^2.
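To put rough numbers on the "4 parts that are 160mm^2 vs. one part that's 550mm^2" point, here is a minimal sketch using the textbook Poisson yield model (yield = exp(-area x defect density)). The defect density `D0` is an assumed, purely illustrative value, not a figure from AMD or any foundry, and the function names are made up for this example. It also assumes each small die is tested before packaging, and it ignores wafer edge loss, packaging, and test cost.

```python
import math

def poisson_yield(area_mm2, defects_per_cm2):
    """Fraction of dies with zero defects under a simple Poisson model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)

def silicon_per_good_product(die_area_mm2, dies_per_product, defects_per_cm2):
    """Wafer area (mm^2) consumed per working product.

    Assumes dies are tested individually, so a defective die is
    discarded on its own instead of killing the whole package.
    """
    y = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_product * die_area_mm2 / y

# Assumed defect density in defects per cm^2 (illustrative only).
D0 = 0.2

mono = silicon_per_good_product(550, 1, D0)  # one 550 mm^2 monolithic die
mcm = silicon_per_good_product(160, 4, D0)   # four 160 mm^2 dies per package

print(f"monolithic: {mono:.0f} mm^2 of wafer per good part")
print(f"4-die MCM:  {mcm:.0f} mm^2 of wafer per good part")
print(f"MCM / monolithic: {mcm / mono:.2f}")
```

Note that the MCM version actually uses more raw silicon (640mm^2 vs. 550mm^2), but under this assumed defect density it still needs only a bit over half the wafer area per working part, because small dies yield so much better. Plug in a different `D0` and the gap widens or narrows accordingly.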


Do you folks think it's fair to say AMD is the primary innovator in chip design, and Intel's the innovator in fab process design? Looking at 21st century CPU improvements, it seems like (correct me if I'm wrong) AMD was first to market for most of the major innovations: x86-64, multi-core, on-die GPU (Intel HD graphics came with Westmere, but AMD bought ATI in '06 and talked at the time of doing integrated graphics), integrating the northbridge, and now MCM to squeeze more performance while chips butt up against Moore's Law.


Mofabio posted: Do you folks think it's fair to say AMD is the primary innovator in chip design, and Intel's the innovator in fab process design? Looking at 21st century CPU improvements, it seems like (correct me if I'm wrong) AMD was first to market for most of the major innovations: x86-64, multi-core, on-die GPU (Intel HD graphics came with Westmere, but AMD bought ATI in '06 and talked at the time of doing integrated graphics), integrating the northbridge, and now MCM to squeeze more performance while chips butt up against Moore's Law.

AMD is forced to go the cheapass route since they have no money, which occasionally makes them invest in new tech to save bux. And then they piss it away on canards like HBM.


Mofabio posted: Do you folks think it's fair to say AMD is the primary innovator in chip design, and Intel's the innovator in fab process design? Looking at 21st century CPU improvements, it seems like (correct me if I'm wrong) AMD was first to market for most of the major innovations: x86-64, multi-core, on-die GPU (Intel HD graphics came with Westmere, but AMD bought ATI in '06 and talked at the time of doing integrated graphics), integrating the northbridge, and now MCM to squeeze more performance while chips butt up against Moore's Law.

Thing is, Intel has had their fair share of firsts, but they are very tight-fisted about introducing them to the consumer market. For some reason they let themselves get blindsided when AMD puts out products that are clearly ahead of Intel's own in some way. AMD tends to be less tight with that because they are playing a perennial game of catch-up, either in tech or market share. At least that's my take on things.


Intel is innovative in discovering ways to prevent competition.


They're also screwing up their rollouts in new and innovative ways as well.


JD.com and Tencent are onboard with EPYC, and AMD has Sugon and Lenovo now for additional OEMs. That's loving huge.

http://ir.amd.com/phoenix.zhtml?c=74093&p=irol-newsArticle&ID=2295144

These guys are absolutely massive, and even if the ratio of AMD/Intel they buy is 1:9, that's huge for AMD.


FaustianQ posted: JD.com and Tencent are onboard with EPYC, and AMD has Sugon and Lenovo now for additional OEMs. That's loving huge http://ir.amd.com/phoenix.zhtml?c=74093&p=irol-newsArticle&ID=2295144

Pretty much, 10% of Intel's server and compute gross revenues is a fuckhuge quantity of money for AMD.


Mofabio posted: Do you folks think it's fair to say AMD is the primary innovator in chip design, and Intel's the innovator in fab process design? Looking at 21st century CPU improvements, it seems like (correct me if I'm wrong) AMD was first to market for most of the major innovations: x86-64, multi-core, on-die GPU (Intel HD graphics came with Westmere, but AMD bought ATI in '06 and talked at the time of doing integrated graphics), integrating the northbridge, and now MCM to squeeze more performance while chips butt up against Moore's Law.

pretty much none of that was done by AMD first, although AMD did introduce some of those things to the consumer space. and a lot of AMD's history is straight up reverse engineering stuff, license producing stuff, and copying stuff. it's also worth noting that most of the gains in computing can be traced back to process improvements.

i would warn against building up AMD as some sort of hero in this dynamic. intel may be loving awful with its business practices and make most of the money, but these are both thieving, sheisty, backhanded shitassed tech corporations that would grind your family to powder and snort it if they thought it would give them a .01% stock price bump. no heroes here, just capitalism.


I realllllly hope EPYC, Threadripper, and Ryzen allow AMD to be somewhat profitable and reinvest that money into people and their GPU side, because MCM GPUs are needed.


Cygni posted: pretty much none of that was done by AMD first, although AMD did introduce some of those things to the consumer space. and a lot of AMD's history is straight up reverse engineering stuff, license producing stuff, and copying stuff. it's also worth noting that most of the gains in computing can be traced back to process improvements.

We absolutely have AMD to thank for x86-64, what are you talking about. You'd be living in an Itanium World otherwise. But yeah, AMD shouldn't be built into a paragon of virtue. Although Intel is definitely the far greater of "evils".


Krailor posted: You could save a little money by getting the EVO instead of the pro. You'll never notice the difference between the two unless you run SSD benchmarks for a living.

You could also buy the SM961 series. 512GB MLC is 225€ in Germany: https://www.mindfactory.de/product_info.php/512GB-Samsung-SM961-M-2-2280-PCIe-3-0-x4-32Gb-s-3D-NAND-MLC-Toggle--MZV_1114534.html

While the 512GB MLC 960 Pro is 295€: https://www.mindfactory.de/product_info.php/512GB-Samsung-960-Pro-M-2-2280-NVMe-PCIe-3-0-x4-32Gb-s-3D-NAND-MLC-Togg_1124051.html

Saves you 70€. I think other sizes have similar price differences. Not AMD CPU related, but I can't figure out why you'd buy the Evo or Pro series when SM961s are so cheap.


Well, I am posting this from my new rig. It seems to run ok. Still installing everything. And it has the 960 Pro. Finicky stuff, such a small little SSD. Any benchmarks you want me to run?


SourKraut posted: We absolutely have AMD to thank for x86-64, what are you talking about. You'd be living in an Itanium World otherwise. But yeah, AMD shouldn't be built into a paragon of virtue. Although Intel is definitely the far greater of "evils".

Itanium had some cool features. Maybe if it'd taken over we'd have the sufficiently smart compiler it needed to realize its potential. More likely ARM would have buried it, heh.


At the time (early 2000s) Itanium looked like the platform that would free us from the clutches of x86, with all the hacks and band-aids upon hacks and band-aids that it received over its long history. Alas, it wasn't meant to be.


Volguus posted: At the time (early 2000s) Itanium looked like the platform that would free us from the clutches of x86, with all the hacks and band-aids upon hacks and band-aids that it received over its long history. Alas, it wasn't meant to be.

Turns out people should have switched over to ARM a long time ago, but hindsight.


Munkeymon posted: Itanium had some cool features. Maybe if it'd taken over we'd have the sufficiently smart compiler it needed to realize its potential. More likely ARM would have buried it, heh.

The reality is the magic compilers that were needed to really get the performance Intel was predicting out of EPIC for general-purpose workloads are still a pipe dream, and all talk by Intel of them being "on the way" or "just a few years out" was straight-up lies. And Intel's response of "performance doesn't really matter anymore" to that issue, after several years of saying it'd all work out ~somehow~, was incredibly jaw-dropping, both from a practical standpoint of "we need more performance guys WTF" and from a marketing one given Intel's own comments about how badass EPIC was going to be.

AMD isn't some virtuous company at all (see their recent handling of Vega's launch/pricing) but Intel's been such incredible, and thorough, assholes for so many years on so many different things that AMD comes off looking like saints in comparison.

SourKraut posted: We absolutely have AMD to thank for x86-64, what are you talking about. You'd be living in an Itanium World otherwise. But yeah, AMD shouldn't be built into a paragon of virtue. Although Intel is definitely the far greater of "evils".

PC LOAD LETTER fucked around with this message at 05:21 on Aug 25, 2017


Well, I took the plunge and bought an all-new Ryzen system. I think I goofed getting the 1600X instead of the bare 1600, but I'm hoping to step up to whatever the top tier of the 2nd gen is as soon as they come out. Are most of the hiccups ironed out by now? My use case is Adobe stuff, lots of unnecessary Handbrake, and eventually compiling and 3D rendering.


Daily use for a few months. Update your BIOS, and I think everything except some virtualization stuff is sorted. Even that is on the vendor's end, not AMD IIRC.


Yeah, daily use has been fine since at least the 1st month. Installation was boringly smooth on the one I did for my brother.


Volguus posted: At the time (early 2000s) Itanium looked like the platform that would free us from the clutches of x86, with all the hacks and band-aids upon hacks and band-aids that it received over its long history. Alas, it wasn't meant to be.

It really didn't. I remember talking to a friend back then and betting ARM would become the next big architecture, even on the desktop, but I never saw the demise of x86; it's just too deeply embedded. It's... kind of come true. At least ARM is far more successful than Itanium.


PC LOAD LETTER posted: A lack of Itanium isn't something that has been keeping compiler development back at all though.

Yeah, and I was saying the opposite of that and also that ARM would probably have taken over the market before the compilers got good enough to make Itanium competitive with ARM or x86.

Munkeymon fucked around with this message at 16:14 on Aug 25, 2017



It's somewhat of an afterthought today but I think POWER would have been a more likely candidate to take over on the desktop and server if x86-64 had never happened/caught on, mainly because it supported 64-bit which ARM didn't until 2011.
