
Furism posted:I'm still baffled by the level of technology we reached. "Yeah, let's throw helium inside because gently caress friction."

Yeah, they're very impressive technology. The whole forged drive enclosure is laser-welded shut because helium would escape through normal seals. The drives even have a SMART value indicating helium pressure level. I own two of those drives and they run noticeably cooler and quieter than similar non-He models, presumably because the platters suffer less friction and thus require less energy from the motor to keep them spun up.
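For anyone who wants to watch that SMART value, here is a minimal Python sketch that pulls the helium attribute out of `smartctl -A` style text. The sample output is illustrative only (made-up values), and the attribute name `Helium_Level` with ID 22 is an assumption based on how some helium drives report it; other vendors may use a different name or ID.

```python
# Illustrative sample of `smartctl -A` style output; the values are made up.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  9 Power_On_Hours          0x0012   100   100   000    Old_age   Always       -       1234
 22 Helium_Level            0x0023   100   100   025    Pre-fail  Always       -       100
"""


def helium_level(smart_text):
    """Return (normalized value, threshold) for the helium attribute, or None."""
    for line in smart_text.splitlines():
        fields = line.split()
        # Attribute rows have the name in column 2, VALUE in column 4, THRESH in column 6.
        if len(fields) >= 6 and "helium" in fields[1].lower():
            return int(fields[3]), int(fields[5])
    return None


print(helium_level(SAMPLE))
```

On a real system you would feed it the text of `smartctl -A` for your drive instead of `SAMPLE`; a normalized value dropping toward the threshold would be the thing to watch for.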


| # ? Apr 19, 2024 16:13 |


eames posted:I own two of those drives and they run noticeably cooler and quieter than similar non-He models, presumably because the platters suffer less friction and thus require less energy from the motor to keep them spun up.


Are there any recommended documentaries about how large scale datacenters work? I don't want to just see "Wow! This has 8 exabytes of storage!". I'd like something with a bit of info about how the arrays are organised, what software/config they're using and what they do to expand and replace drives. Just hit YouTube?


Steakandchips posted:You don't need ESD bags. Those are perfectly fine.

Those are not fine. They are actually the opposite of fine. The whole idea of an ESD bag is to make it slightly conductive so that electrostatic charges can't build up on the surface and subsequently discharge a few kilovolts into an IC that can't take it. Food bags are ordinary plastic without extra conductive material added so they are generally decent insulators -- which is to say materials that will collect a static charge by rubbing against random things. That is not a thing you want touching electronic components.

Thermopyle posted:1 out of 1000 times you might have a problem without ESD bags.

Sure, but in a thread that's often about being excessively careful about data integrity I feel it's not out of place to point out best practices. I neglected to mention before that if you need a cheap field expedient commonly found in the kitchen, wrap a circuit board in a single layer of aluminum foil before putting it in another container.

btw, a fun fact about ESD damage: it's often a lot more insidious than "works / doesn't work". It's easy to fool yourself into believing you did no harm, but ESD-damaged ICs are sometimes just destined to fail a lot earlier in their life than they otherwise would have. Worse yet, they can have "soft" failure modes where data integrity is compromised rather than the chip outright failing to work.


In this case it's just to have enough bits to put back together for an RMA claim if the drive fails. I do see your point though.


Thanks Ants posted:In this case it's just to have enough bits to put back together for an RMA claim if the drive fails. I do see your point though.

This. I know the NAS thread is all about overkill, but those are fine.


In addition to inappropriate ESD bags chat is people (engineers, even!!) setting up bench top workstations and sticking an esd bag underneath circuit boards with through hole conductors and powering that poo poo on. While it's perhaps not as conductive as just slapping metal against all the conductors it is still drat well not insulating! I have engineers in my group who carry $3-4k test cards out of the lab and into the carpeted cube area and they just keep doing it no matter how many times I lose my poo poo. Gahhhh. Anyway like Bob Howard it is a huge pet peeve of mine.


priznat posted:I have engineers in my group who carry $3-4k test cards out of the lab and into the carpeted cube area and they just keep doing it no matter how many times I lose my poo poo. Gahhhh.

How many of these have ever gotten damaged by ESD? I mean, I'm not gonna say don't be prudent, especially with something so easy, but it's always seemed to me that the danger of ESD during normal person operation is pretty far down the list of things I'm worried about breaking my gear.


Pretty sure I've killed a motherboard USB port when trying to plug in a fan header near the CPU.


DrDork posted:How many of these have ever gotten damaged by ESD?

The worst thing is when they are intermittent failures that are impossible to definitively nail down, which ~may~ have happened since we get flaky cards from time to time. I don't think I've encountered anything that I could say for sure was fried by a static discharge from unsafe handling. If a board is just dead, that's actually better, because you can junk it and move on.

Normal person operation isn't too big a thing. Just touch a grounded surface to discharge after moving around, should be fine.


priznat posted:In addition to inappropriate ESD bags chat is people (engineers, even!!) setting up bench top workstations and sticking an esd bag underneath circuit boards with through hole conductors and powering that poo poo on.

I have to confess that (a) I'm an engineer and (b) it's me, I've been the guy who powered up a circuit board after laying it down on top of an esd bag on a lab bench. I know it's not actually a good idea but I've sometimes done it.

but yeah this diversion has all been pet peeve chat


They have these cheap mats connected to a small cable that you attach to a grounded metal bit. You just lay it on a desk, connect it to the ground, and you're (mostly) good (make sure your shoes are insulated in that case I guess). These go for like $10 I think (I just get them for free at work, not sure) and I always felt it was a cheap, easy and safer way to tinker with electronics.


Welp, I'm rolling back to the Corral tech preview, FreeNAS 11 just isn't impressing me.


Is there a way to get a sense of how close the next release is? Their forum isn't too helpful. My Corral's docker host is now making GBS threads the bed every other day, and I don't wanna invest time fixing something I'm gonna throw out anyway once 11.1 comes out.


11.1 is slated for Oct 2, but their roadmap makes me think they're going to miss that date by a country mile. Maybe by the end of the year. And I think I saw that their docker support got bumped to 11.2


Recommendation for FreeNAS 9 vs 11 for a new build? I was planning to go with 11 since it's STABLE (and the UI looks a lot nicer from the screenshots) but maybe I'm missing something. Most of my pre-build tinkering has been on 9, I find the UI... serviceable.


You might as well go 11. It still has the same UI as well as the new responsive one (which doesn't render properly for me, fonts are huge).


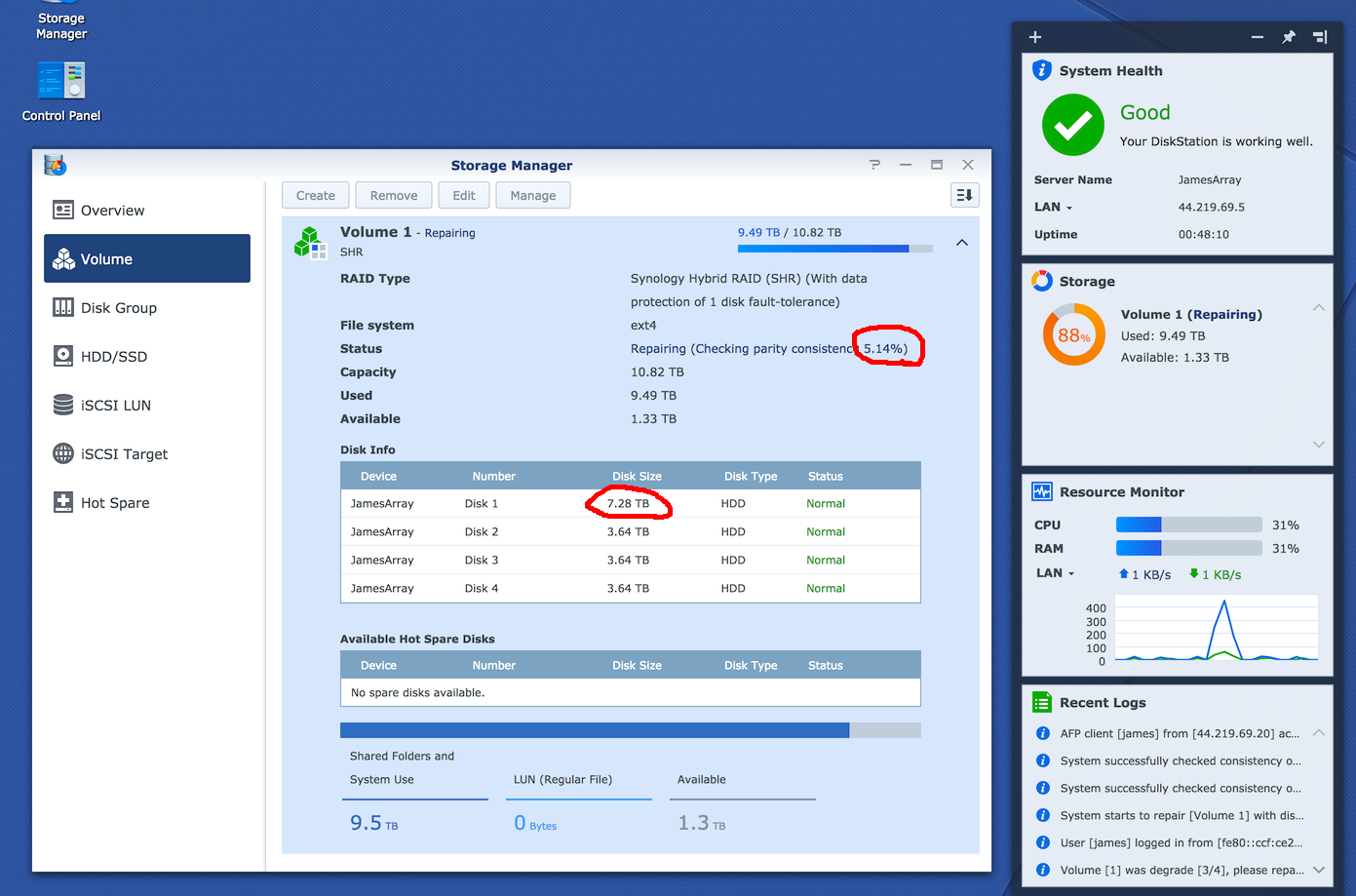

Upgrading my nearly full Synology DS416j from 4TB drives to 8TB drives.

This is taking aaaaages - one disk at a time to rebuild the RAID - looking like 14 hours per disk or so, going by the initial progress on the 1st drive swap?
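As a sanity check on that 14-hour figure, here is a rough Python sketch of the sustained rate it implies. It assumes the rebuild touches the full 8TB of the new disk; if the NAS only rebuilds the old 4TB region at this stage, pass 4 instead and the rate halves. The function name is made up for illustration.

```python
def implied_rebuild_rate_mb_s(rebuilt_tb, hours):
    """Average MB/s needed to rewrite `rebuilt_tb` decimal terabytes in `hours`."""
    total_bytes = rebuilt_tb * 1e12
    return total_bytes / (hours * 3600) / 1e6


rate = implied_rebuild_rate_mb_s(8, 14)
print(f"{rate:.0f} MB/s sustained")          # roughly 159 MB/s
print(f"{4 * 14} hours for all four bays")   # one disk at a time
```

Either way the number lands in the range a single spinning disk can sustain sequentially, so the rebuild looks disk-bound rather than broken.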


For what it's worth, it took roughly that amount of time per disk for me doing precisely the same thing on FreeNAS.


Yeah, it's going to take a while.


G-Prime posted:For what it's worth, it took roughly that amount of time per disk for me doing precisely the same thing on FreeNAS.

Proteus Jones posted:Yeah, it's going to take a while.

Cool, glad to know it's not just me / only cuz of this slow-rear end enclosure. (Which more than suits my needs; read speeds are near gigabit ethernet speeds, so that's all I need for my home.)


G-Prime posted:11.1 is slated for Oct 2, but their roadmap makes me think they're going to miss that date by a country mile. Maybe by the end of the year. And I think I saw that their docker support got bumped to 11.2

I figured as much. Maybe if I have a free afternoon I'll just do what I was avoiding and upgrade then redo the VM by hand.


admiraldennis posted:Recommendation for FreeNAS 9 vs 11 for a new build?

There's really no reason not to go with 11. 11 is basically 9 with some updates. All the real cool changes were in 10, which they're now in the process of porting over to 11. Slowly.

Also, the "nicer" UI is still considered Beta and has a lot of small issues for many people. Chances are you'll still spend a good bit of time in the 9.x-style UI.


In other Synology news, apparently the "cool" fan setting (3rd of 4; "full" is the highest) is complete bullshit - the drives were at 43°C under full use, I switched to "full" and now they're at 35°C. And the fans aren't that loud either; I'd have been running it on full this whole time if I knew it'd make a near 10° difference.

Sniep fucked around with this message at 06:03 on Sep 10, 2017


Sniep posted:Upgrading my nearly full Synology DS416j from 4TB drives to 8TB drives

Just out of curiosity, would ZFS be faster to rebuild/extend volumes, or is this just part of data redundancy no matter what technology you look at?


ZFS should be faster because it just resilvers active data, since it knows what parts of the array are used and what not. Not sure what the case for mdraid is, whether it communicates with upstream by now or not. Back when I last messed with it, it didn't. As such it had to rebuild free space, too.


That's a valid thing I should point out in agreement. The times for me were on a 8x4TB RAID-Z2 array that had less than 1TB free, going to 8x8TB.
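To put rough numbers on the "only resilvers active data" point, here is a small Python sketch comparing an allocation-aware resilver with a classic whole-member rebuild. It is a deliberately crude model: it assumes rebuild work scales linearly with the used fraction, ignores metadata, padding and fragmentation, and treats the "less than 1TB free" figure as free space out of usable capacity. The function name is hypothetical.

```python
def per_disk_rebuild_tb(disk_tb, used_fraction, allocation_aware):
    """Rough amount of data written to one replacement disk, in TB."""
    if allocation_aware:
        # ZFS-style resilver: only allocated blocks are reconstructed.
        return disk_tb * used_fraction
    # Classic block-level rebuild: the whole member is rewritten, free space included.
    return disk_tb


# 8x4TB RAID-Z2 keeps 6 disks' worth of data, so about 24 TB usable (overhead ignored).
usable_tb = (8 - 2) * 4
used_fraction = (usable_tb - 1) / usable_tb  # about 1 TB free

nearly_full = per_disk_rebuild_tb(4, used_fraction, allocation_aware=True)
half_full = per_disk_rebuild_tb(4, 0.5, allocation_aware=True)
block_level = per_disk_rebuild_tb(4, 0.5, allocation_aware=False)

print(f"nearly full pool, resilver: {nearly_full:.2f} TB per disk")
print(f"half full pool, resilver:   {half_full:.2f} TB per disk")
print(f"half full, block rebuild:   {block_level:.2f} TB per disk")
```

With a pool that full, the resilver has to touch almost the whole disk anyway, which fits with the per-disk times coming out about the same as the Synology rebuild.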


Also afaik raid z2 resilvering is going to be slower than mirrored drives in zfs.


OK cool - I will plan on going with FreeNAS 11. Thanks everyone.

Final decision time for this NAS! I ended up picking up a few more drives. Trying to decide between:

RAID-Z3 of 11x 8TB drives
or
2x RAID-Z2 of 6x 8TB drives

Both net the same usable pool of storage. This is mostly for single-user archival storage / backup / media files.

Here's the pros/cons as I see them:

Pros of RAID-Z3 config:
- Better reliability, seemingly by an order of magnitude according to all the calculators (even though the Z2 reliability is still quite high)
- One less disk needed (can save some $)

Pros of RAID-Z2 config:
- Better paths to upgrading: can upgrade only 6 drives at once for more space in the future, or add a 3rd vdev
- More IOPS (moot for single-user?)
- Faster resilvers

My plan is to have a cold spare handy for either config, and to stay under 80% space utilization (part of my reasoning for buying a few more drives) at all times.

I'm leaning towards the Z3 because the order-of-magnitude reliability increase is enticing. My main worry is resilvering - will it be a nightmare? Anyone with vaguely similar setups want to chime in on their resilver times?

admiraldennis fucked around with this message at 22:00 on Sep 10, 2017
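The "same usable pool" claim is easy to check with a few lines of Python. This is a back-of-the-envelope sketch only: it counts data disks times disk size and ignores ZFS metadata, padding and the TB vs TiB difference, so real numbers will come out somewhat lower. The function name is made up for illustration.

```python
def raidz_usable_tb(disks, parity, disk_tb):
    """Nominal usable capacity of one RAID-Z vdev: data disks times disk size."""
    return (disks - parity) * disk_tb


z3 = raidz_usable_tb(11, 3, 8)           # single RAID-Z3 vdev, 11 disks
z2_pair = 2 * raidz_usable_tb(6, 2, 8)   # two RAID-Z2 vdevs, 12 disks total

print(f"RAID-Z3, 11 disks: {z3} TB usable")
print(f"2x RAID-Z2, 12 disks: {z2_pair} TB usable")
print(f"80% fill target: {z3 * 0.8:.1f} TB")
```

Both layouts have 8 data disks, so they land on the same 64 TB nominal figure, and the 80% rule leaves about 51 TB to actually fill.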


admiraldennis posted:- More IOPS (moot for single-user?)


Virtualization is cool. I smushed my HTPC/Gaming machine into unraid , passed the devices through, and it works great. Might slap an i7 in there eventually for MORE CORES.


I game via virtualization as well and love it, but I don't do it ON my NAS. I tried setting up iSCSI from the NAS to the VM, but it was agonizingly slow over gigabit. Seriously considering 10G cards now, because I'm broken inside and my NAS sits all of like 6 feet from my desktop.


G-Prime posted:I game via virtualization as well and love it, but I don't do it ON my NAS. I tried setting up iSCSI from the NAS to the VM, but it was agonizingly slow over gigabit. Seriously considering 10G cards now, because I'm broken inside and my NAS sits all of like 6 feet from my desktop.

I moved my 1060, my SSD, USB devices, and a NIC to my unRAID box, passed them all through, works great. Might not even need the i7. I only do 1080p and Dark Souls 3/Rocket League run just the same as they did on my HTPC.

A+, would smush together again.


G-Prime posted:I game via virtualization as well and love it, but I don't do it ON my NAS. I tried setting up iSCSI from the NAS to the VM, but it was agonizingly slow over gigabit. Seriously considering 10G cards now, because I'm broken inside and my NAS sits all of like 6 feet from my desktop.

I'm planning to try to set up 10G using eBay'd parts to run a fast line from my NAS to my gaming PC and (much more annoyingly) MacBook Pro. Likely going the SFP+ route, it seems cheaper for used gear.

1Gbps just feels crazy slow for wired networking in 2017 despite its ubiquity. Wireless (ac) is basically just as fast; heck, affordable home internet connections can come in 1Gbps these days. Where's the 10G proliferation!?

(I want 10G everywhere for work reasons too; I work in game dev and pulling things down over 1Gbps sucks there too, but nobody's going to pay for 10G to every workstation) </rant>

Combat Pretzel posted:Depends on your workload. If you're just archiving videos and documents on the NAS, I guess yea. If you start doing stuff like installing apps and games to it, then you need IOPS.

I've never done the latter before - but maybe with a 10G line I'd want to. What do people use to set this kind of thing up? iSCSI?

admiraldennis fucked around with this message at 01:44 on Sep 11, 2017


admiraldennis posted:Wireless (ac) is basically just as fast; heck, affordable home internet connections can come in 1Gbps these days. Where's the 10G proliferation!?

802.11ac is only "just as fast" if you believe everything written on the box. In real life, you rarely can find setups that can do 500Mbps reliably.

Affordable 1Gbps internet connections are awesome (and I have one), but the "average" user in the US is well below 100Mbps, and only a few countries in the world have average speeds above 100Mbps.

We haven't seen an explosion in 10G consumer networking because, frankly, there isn't a huge demand for it. Most people are a-ok with 100Mbps, let alone 1Gbps. I mean, I'd love affordable 10G, too, but I'm not gonna pretend that wanting to shove a NAS box down in the basement but still have near-spindle performance when I access it three floors up is a common use case. poo poo, just having a NAS box puts all of us solidly in the niche market.

Our time will come, though...you can already get server motherboards with dual-10G ports for $600 or so. Now if only switch prices would come down...
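For a feel of what those link speeds mean in practice, here is a quick Python sketch converting line rate to MB/s and to transfer time. These are theoretical ceilings using decimal units and no protocol overhead; the 50 GB payload is just an example size, and the 0.5 Gbps entry stands in for the "500Mbps on a good day" Wi-Fi figure above.

```python
def line_rate_mb_s(gbps):
    """Raw line rate in megabytes per second (decimal units, no protocol overhead)."""
    return gbps * 1000 / 8


def transfer_minutes(size_gb, gbps):
    """Best-case minutes to move `size_gb` gigabytes over a `gbps` link."""
    return size_gb * 1000 / line_rate_mb_s(gbps) / 60


for label, gbps in [("802.11ac, good day", 0.5), ("gigabit", 1), ("10G", 10)]:
    print(f"{label:>18}: {line_rate_mb_s(gbps):7.1f} MB/s, "
          f"50 GB in {transfer_minutes(50, gbps):5.1f} min")
```

Gigabit tops out at 125 MB/s, which is below what a single modern hard drive can stream sequentially, so that's where the itch for 10G comes from.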


If your rigs are physically close together, InfiniBand QDR is definitely the way to go for the moment. You can pick up a pair of dual-port IB cards and some cables for less than a single 10GbE adapter card, and if you end up having to lose your $100 investment then oh well, you had 40 Gbps networking for 2 years or w/e at $50 per year. Switches are cheap too, a 24- or 36-port switch should run you around $125.

Having a lot of 10 GbE switching capacity gets real expensive, it's kinda hard to justify in a homelab setting past a trunk connection to a NAS, and IB still does better IOPS there. I would go so far as to say that if you need longer than a 7m run it might be worth looking into a ridiculous setup like a 10 GbE card bridged to its own InfiniBand port to handle the longer runs between your switches or something.

Right now, for computers that are physically close, the economics of InfiniBand on a per-adapter basis are just fantastic.

Paul MaudDib fucked around with this message at 05:13 on Sep 11, 2017


admiraldennis posted:I've never done the latter before - but maybe with a 10G line I'd want to. What do people use to set this kind of thing up? iSCSI?

But I've ZFS set up with 32GB of RAM and 192GB of L2ARC, and the link is 40GbE, so there's plenty of haul-rear end. If I'm running from RAM, I'm getting like 66% of NVMe, if I'm running from L2ARC, I'm getting SATA SSD performance. Anything else comes from a RAID-10, so it's merely OK once it actually needs to hit the disks.


How did I miss that? Microsoft is submitting code for SMBDirect support! Hell yea, RDMA!

https://lwn.net/Articles/731508/

--edit: Ah gently caress, it's for the CIFS client. Hope Samba ports it over quickly.

--edit2: Seems the Samba guys want to leverage that stuff, whenever it hits the kernel. Hurry up goddamnit. I want 4-5GB/s over my link.

Combat Pretzel fucked around with this message at 12:22 on Sep 11, 2017


Someone should point out to Microsoft that FreeBSD also has a CIFS client, and that it's desperately out-of-date. It's not like they're strangers to committing to FreeBSD as it is.


Combat Pretzel posted:How did I miss that? Microsoft is submitting code for SMBDirect support! Hell yea, RDMA!

Just when they dropped SMB Direct from the regular Windows 10 client.......
