|

Thanks for the feedback, guys! That means a lot to me.

Karia posted:I adore this stuff. I played around with 3d modelling a bunch years ago, but never really got into the technical side. Definitely fun to learn what I was actually doing when I clicked that render button.

You're right, game rendering is mostly ways to cheat ray tracing! But some stuff still applies - Phong interpolation was used in offline renderers long before it became a real-time thing because it was originally too expensive, and it's still behind a bunch of code so you don't have to subdivide meshes infinitely. The basis for most post-processing effects is the same, and since color correction has long been a part of the rendering pipeline, it was used for movies/archvis a long while before these algorithms were ported into game engines. Also, the idea of having a single composite image based off several renders was available in traditional renderers like MentalRay a while before deferred rendering and g-buffers were popular in games. As for shaders, remember that shaders were originally invented by Pixar to enhance Ray Tracing Renderers, we only started using them in games long after. When you ray trace you still need an algorithm to define what color will be returned, and there are just as many optimizations in Indigo as there are in games, only at a different scale or serving a different purpose. They're never 100% accurate. For example, very few renderers fully model the physical properties of surface porosity and instead split it into different consequences of porosity at different degrees, such as SSS, multilayered materials or micro-facets.

Boksi posted:This stuff is fun to read, even though it makes my brain hurt sometimes. And my eyes too, with that last image.

Here's the abominable color curve used:

|

|

|

|

|

| # ? Apr 25, 2024 19:06 |

|

Elentor posted:

is there some bio/technical reason that the color is so harsh and gross?

|

|

|

|

Elentor posted:Thanks for the feedback, guys! That means a lot to me.

Oh, yeah, I've never been under the impression that any renderer is literally modelling the interaction of photons with electrons to simulate materials, they're using approximated material models to simulate the interaction of the light-path with the surface model. It's just about the level of the approximations, as you said. AO, shadows, reflections and refraction, etc, arise naturally from ray tracing, but even Indigo's much-vaunted sun-sky model is cheating in some ways: they pre-baked all the data rather than modelling the entire planet every time you want to do a render (fricking cheaters.) Hell, even path tracing is a weird approximation where they simulate photons bouncing backwards since it's less computationally wasteful. So... exactly what you just said.

And thinking about it one way: even in photography (which uses the most physically-accurate rendering engine imaginable) they still use compositing, color correction, and tons of other cheats to get a better looking image, even if it's not the most representative of reality.

Karia fucked around with this message at 16:08 on Jul 5, 2018 |

|

|

|

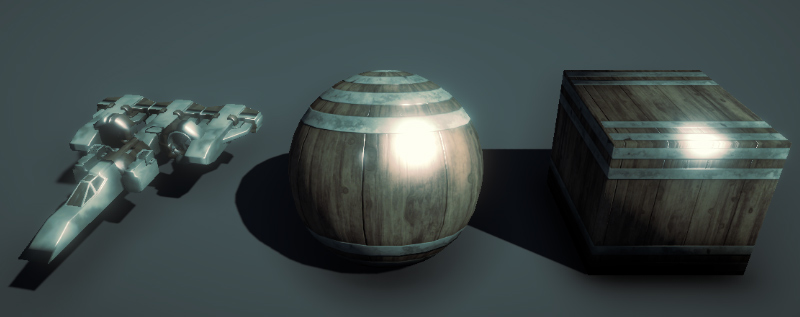

Captain Foo posted:is there some bio/technical reason that the color is so harsh and gross? The curve starts black, diverges towards teal (blue + green) and then harshly transitions towards having a red predominance, creating a band of colors that is effectively a harsh gradient rather than a traditional curve between low/medium/high intensity bands. If done with the standard trackballs, the result would be a smoother interpolation. Here's an equally exaggerated teal & orange color scheme to compare:   Here's a fun tidbit about bloom/glare: Because blooms have a predefined threshold of luminosity below which the bloom will not happen, there's a specific point in the lighting curve which corresponds to the gradient a bloom will necessarily cover.  So to create the most aberrant effect, I made the transition between teal to red set in such a way that it would cover the bloom radius, giving it an unnatural appearance that looks like the color is being peeled off around the light reflection. Elentor fucked around with this message at 16:14 on Jul 5, 2018 |
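For anyone who wants to poke at the idea, here is a minimal sketch of a curve like the one described above. It is not the actual curve or tool used for the image: the knee position, transition width, channel weights and bloom threshold are all made-up values, chosen only to show how a narrow teal-to-red transition placed right at the bloom threshold ends up sitting inside the glow.

```python
# Hypothetical numbers throughout: this only illustrates the shape of the idea.
BLOOM_THRESHOLD = 0.6  # assumed luminance above which bloom kicks in


def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def grade(lum, knee=BLOOM_THRESHOLD, width=0.1):
    """Map a luminance in [0, 1] to an (r, g, b) tuple.

    Below the knee the result is teal (green + blue, no red).
    Above it the result switches to a red-dominant color.
    A small width gives the harsh band; a large one gives a smoother blend.
    """
    lum = min(max(lum, 0.0), 1.0)
    teal = (0.0, lum, lum)
    red = (lum, 0.2 * lum, 0.1 * lum)
    t = min(max((lum - knee) / width, 0.0), 1.0)
    return tuple(lerp(a, b, t) for a, b in zip(teal, red))


def blooms(lum):
    """Hard bloom threshold: only pixels brighter than this contribute glow."""
    return lum > BLOOM_THRESHOLD


if __name__ == "__main__":
    for lum in (0.0, 0.3, 0.6, 0.65, 0.7, 0.9):
        print(lum, blooms(lum), grade(lum))
```

Because the knee defaults to the bloom threshold, every luminance that blooms is also somewhere on the teal-to-red switch, which is the "peeling" effect around highlights. Calling `grade(lum, width=0.4)` spreads the same transition over a wider band and reads much closer to an ordinary teal and orange grade.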

|

|

|

Karia posted:Oh, yeah, I've never been under the impression that any renderer is literally modelling the interaction of photons with electrons to simulate materials, they're using approximated material models to simulate the interaction of the light-path with the surface model. It's just about the level of the approximations, as you said. AO, shadows, reflections and refraction, etc, arise naturally from ray tracing, but even Indigo's much-vaunted sun-sky model is cheating in some ways: they pre-baked all the data rather than modelling the entire planet every time you want to do a render (fricking cheaters.) Hell, even path tracing is a weird approximation where they simulate photon bouncing backwards since it's less computationally wasteful. So... exactly what you just said.

The ultimate irony is that every photographer spends a shitload of money to get a clean image, companies spend millions trying to advance lenses to make images as nice as possible, and we're tossing around chromatic aberration and lens dirt effects on purpose.

Elentor fucked around with this message at 16:17 on Jul 5, 2018 |

|

|

|

Elentor posted:The curve starts black, diverges towards teal (blue + green) and then harshly transitions towards having a red predominance, creating a band of colors that is effectively a harsh gradient rather than a traditional curve between low/medium/high intensity bands. thanks!

|

|

|

|

Elentor posted:The ultimate irony is that every photographer spends a shitload of money to get a clean image, companies spend millions trying to advance lens to make images as nice as possible, and we're tossing around chromatic aberration and lens dirt effects on purpose. I'm curious how you'd react if I were to say lens flair. You know, hypothetically.

|

|

|

|

Karia posted:I'm curious how you'd react if I were to say lens flair. You know, hypothetically. I think that would unironically be a good name to generalize lens effects.

|

|

|

|

These posts fuckin' rule, Elentor!

|

|

|

|

Bonus Chapter - The Elephant in the Room, Part I There's a corridor, hidden away from the masses. It's a corridor of peril and despair. Some see it as a manifestation of human ambition and imagination. There are many paths that lead to that corridor. Some paths are... inconspicuous, unsuspecting. Others, not so. Months ago you started to follow a thread that ultimately led you to it - the corridor. The first door has no label, no title. There are scratches on the door. Upon closer inspection, the scratches form a crude drawing of a human. You keep walking. Some doors are closed, some doors are unlocked, some doors are half-open. One of the doors is covered with a gelatinous substance. You scratch some of it away from the label, only to read a most puzzling choice of words. "Subsurface Scattering". The door is unlocked. It's your choice to pry. You slowly open the door, and ahead of you there seems to be a gallery most bizarre. Hundreds - no, thousands of sculpted torsos are on display, lined up as far as your eyes can see. Most hold the same caucasian male's face, but every single one of them differs slightly from another. Some look like wax. Some look like rubber. You keep looking at them until you finally meet one that looks like it's made of human flesh. You touch it, but you don't feel anything. "There's nothing to be felt", you hear a voice in your head. "There won't be for a while. Eventually, yes, but not yet." It's not real. You know that to be. Some of the things you see here come from the real world, some will leak to it. Some have consequences, but they're not real, at least not in the physical sense. The other side of the room is filled with vases, assorted food, a lot of candles, and spilled liquid all over. There's a Chinese Dragon made of glass. You follow the leak further and further, not knowing where it leads since there's a thick fog clouding your vision. 
Eventually the darkness gives way to a hazy, blurry light, and as you leave the fog you see yourself amidst the ocean. You stand amid the roar of a subsurface-tormented shore. And you hold within your hand particle emitters of golden sand. How few! Yet how they creep, and they keep creeping, and creeping, and creeping... in an infinite loop, through your fingers to the deep. The ocean covers the horizon. The water doesn't look... real. But the light shining from behind the waves, that greenish glow, it's definitely there. Looking up you see a myriad of clouds in the pale blue sky. Some clouds look mute, others look way too bright. You get it. They're attempts at simulating how the sunlight scatters inside a cloud. Some move so freely but they look so flat. Others look so beautiful, but move so slow. You feel uncomfortable watching them. You walk back. As you go back to the stands showcasing candles, you notice some of the candles have human hands in front of them. "Can you see how the flames behind the hand light up the blood inside the fingers?" "It's all about blood. There must be blood. Did you know an artist once asked the color of Yoda's blood to George Lucas so he could set it up properly? Blood shows through your ear flesh and Yoda's ear is long. It must show a lot of blood. Subsurface Scattering demands blood. Do you have blood? Light reaches it from behind and it gets lit. Light comes from all sides and lights it. Light enters your body, scatters inside your body, and leaves it. It scatters in your blood." "Blood, blood, blood, blood." You start hearing a dozen voices. "Is this blood dark enough?", "What is the refraction of human skin?". The voices get louder. You run away. You close the door. You can't lock it, but you can make the voices stop. You tell yourself never to set foot upon that terrible place again. You move on again and for a while you walk, until a very peculiar stench taints the air. The stench renders you sick. 
It's getting hard to move forward. You look at a door to your left. "Animation". There are vines, blurry appendages covering that door, and they seem to come from the next door.  You hear a voice in your head again, this time a different voice - "Be careful. Those who enter that room lose something, for there is no blur without loss of information. That door... has a life of its own. It once belonged to a far away place, the Convolution Matrix, and before that it was part of an entirely different corridor altogether, a place I miss dearly. But that door, as I said, it has a life of its own. And it's spreading. Oh, woe the day! More to blur did never meddle with my thoughts." You ask the voice its name. "Carl Friedrich Gauss". He continues - "This is not my corridor, I'm not meant to be here. I'm merely here to warn you. Go back, lest be too late!" The next door is also being slightly tainted by the Blur's influence. There are two labels in it, both extremely bloodied, and there are many scratches upon this most terrible wall. With much terror in your heart you read the two labels, and ponder upon yourself what horrors must have been wrought for such a conflict to exist. "60 FPS" "Cinematic Experience" You hear whispers from behind that door. "The human eye can't see more than 24 frames per second. The human eye can't see more than 24 frames per second." Upon closer inspection, you see many faint marks written with dried blood. BOYCOTT. BOYCOTT. BOYCOTT. There's a very powerful steam coming from underneath the door. You step back, startled. Such is not a place for a sane mind to reach. And then you reach the source of the stench. The elephant in the room, or corridor if you will. A huge elephant, with a VR Kit on its head, bashing its head against a door, while vomiting uncontrollably. The vomit is all around. Some of it is blurry. The door is covered in blood. Some of it is blurry also, but only around the edges. 
You look upon the door the Elephant is desperately trying to enter, but it's no use. Even if you were to hold the key, its lock is so blurry that it can no longer be used. The door seems very old, and almost entirely taken by the blurriness. Through considerable effort, you manage to squint your eyes enough to read the door's label. You dread what lies behind it. "Anti-Aliasing" Proceed. Elentor fucked around with this message at 03:44 on Mar 11, 2020 |

|

|

|

|

|

|

|

Oh gods. I can taste the pain behind the text, but I am laughing uncontrollably.

|

|

|

|

The face is the same, but the hands are many.

|

|

|

|

So is the elephant in the room the limited resolution of most consumer displays, leading to jaggies if you don't antialias (and therefore throw away detail)?

|

|

|

|

Karia posted:Hell, even path tracing is a weird approximation where they simulate photon bouncing backwards since it's less computationally wasteful.

* Caveat: the results are identical if the virtual materials you use behave like actual, physical materials in certain key ways. This is one of a few reasons the physically-based trend has been very strong in offline land: if you cheat too much you get unpredictable results between different rendering techniques, and we'd like everything to be predictable. Games are going more physical, but I don't believe any game uses models that are 100% energy conserving, perfectly reciprocal, etc, since those are comparatively costly and games don't have to care that much.

You can gently caress up post-processing in various different ways (like approximating a real bloom filter by processing an LDR image with a hard cutoff threshold and blur) which I think gives it an overly bad rap in video games.

The truth is we have to do lens flare, bloom, aberration, etc because, ultimately, your monitor is not as bright as the sun. This is probably a good thing as an 8000K monitor half a meter from your face would likely be uncomfortable. However, since we still want to render images of the sun, and reflections of the sun, and things lit by the sun, and still have people read those things as "very loving bright" even though they aren't, we absolutely, positively have to cheat. There is no other option. We can inform our cheats by how the eye behaves when it looks at bright things and emulate that adaptation effect, and by how cameras and imperfect lenses behave when there's something very bright in view, and I think as an industry we do a reasonable job most of the time. Sometimes you still get a JJ Abrams who wants to dial the lens flare knob to 11 but most people use their powers responsibly.
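To make the "LDR image with a hard cutoff threshold and blur" complaint concrete, here is a rough one-dimensional sketch. The pixel values, threshold and blur radius are invented for illustration, and a box blur stands in for whatever filter a real engine would use. The point is only that once the image is clamped to LDR, the sun and a desk lamp are both "1.0", so they bloom identically.

```python
def bright_pass(pixels, threshold):
    """Hard cutoff: keep pixels above the threshold, zero everything else."""
    return [p if p > threshold else 0.0 for p in pixels]


def box_blur(pixels, radius):
    """Simple box blur; samples outside the row count as black."""
    n = len(pixels)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(pixels[lo:hi]) / (2 * radius + 1))
    return out


def bloom(pixels, threshold, radius):
    """Add a blurred copy of the bright pixels back onto the image."""
    glow = box_blur(bright_pass(pixels, threshold), radius)
    return [p + g for p, g in zip(pixels, glow)]


# A dim row with a "sun" (index 4) and a "lamp" (index 9), in made-up HDR units.
hdr_row = [0.1] * 4 + [1000.0] + [0.1] * 4 + [2.0] + [0.1] * 4
ldr_row = [min(p, 1.0) for p in hdr_row]  # what survives clamping to LDR

hdr_bloom = bloom(hdr_row, threshold=1.0, radius=2)
ldr_bloom = bloom(ldr_row, threshold=0.9, radius=2)

if __name__ == "__main__":
    print("next to sun / lamp, HDR:", hdr_bloom[3], hdr_bloom[8])
    print("next to sun / lamp, LDR:", ldr_bloom[3], ldr_bloom[8])
```

In the HDR version the pixel next to the sun gets hundreds of times more glow than the pixel next to the lamp. In the LDR version both neighbours get exactly the same glow, which is one way bloom ends up looking like a uniform smear around anything white.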

|

|

|

|

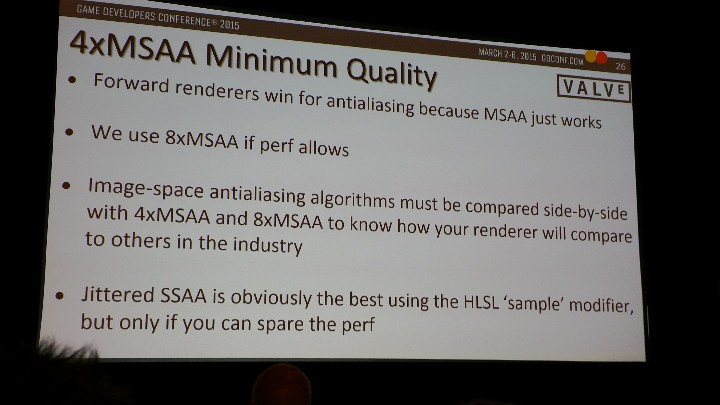

TooMuchAbstraction posted:So is the elephant in the room the limited resolution of most consumer displays, leading to jaggies if you don't antialias (and therefore throw away detail)? The elephant is MSAA, desperately trying to become relevant again, while being a necessity in VR due to the lack of temporal stability in other AAs. Xerophyte posted:

If you have any fun anecdotes/trivia re: path tracing I'm all ears. I'm really interested in reading more about this stuff. Elentor fucked around with this message at 14:35 on Jul 6, 2018 |

|

|

|

I am thoroughly interested and entertained hearing about the

|

|

|

|

Elentor posted:If you have any fun anecdotes/trivia re: path tracing I'm all ears. I'm really interested in reading more about this stuff. Well, uh, jeez. I'm not entirely sure where to start with that one. If you or anyone else has something they're curious about then rest assured that I am both very fond of the sound of my own typing and full of questionable facts & opinions. Someone mentioned subsurface scattering, so let me just apropos of that post this classic animation showcasing what you can do with subsurface scattering in your path tracer and a soft body simulation: https://www.youtube.com/watch?v=jgLzrTPkdgI Xerophyte fucked around with this message at 07:19 on Jul 7, 2018 |

|

|

|

No further questions.

|

|

|

|

Xerophyte posted:Well, uh, jeez. I'm not entirely sure where to start with that one. If you or anyone else has something they're curious about then rest assured that I am both very fond of the sound of my own typing and full of questionable facts & opinions. ahahahahhahaha

|

|

|

|

Xerophyte posted:Someone mentioned subsurface scattering, so let me just apropos of that post this classic animation showcasing what you can do with subsurface scattering in your path tracer and a soft body simulation:

|

|

|

|

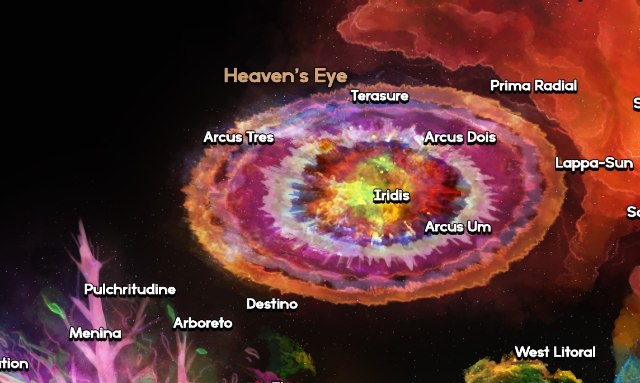

The upcoming 4 to 5 chapters (including bonus) will be the last chapters before the Alpha. I have them planned in advance. I'm taking a two week break because working has been a bit exhausting. During this break I'll probably try to relax and get some energy back doing something else. Probably coding something procedural.  I'll take this break time to write and post the chapters I have planned since I'll have a lot more energy to do so. Afterwards I'll release the Alpha, make a few chapters about it, and do some closed tests before releasing a public test. In the meanwhile I leave you with a concept art for a cute nebula I painted.

|

|

|

|

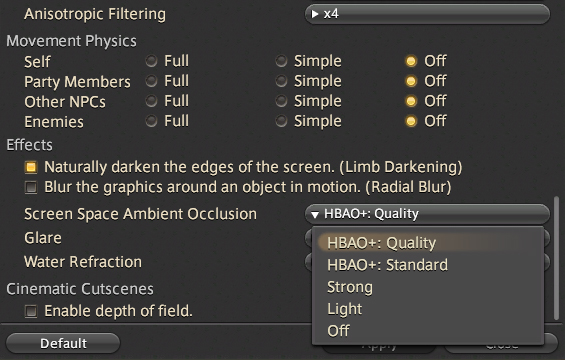

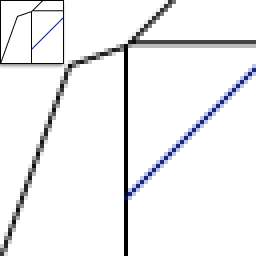

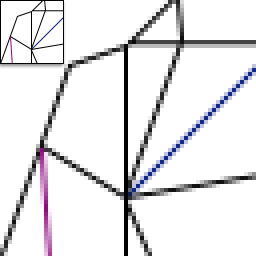

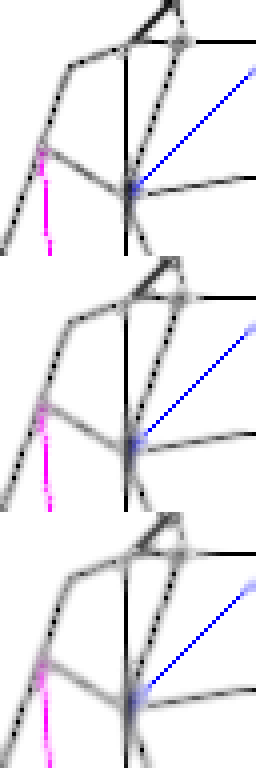

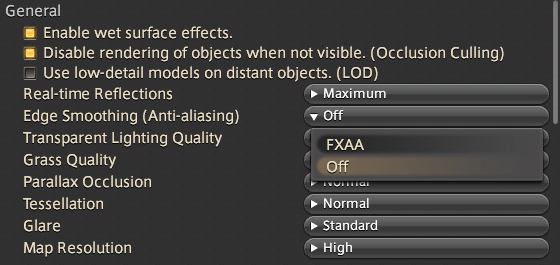

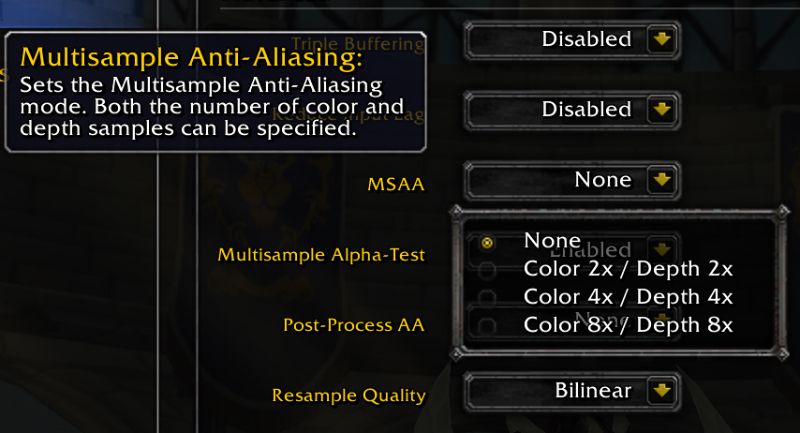

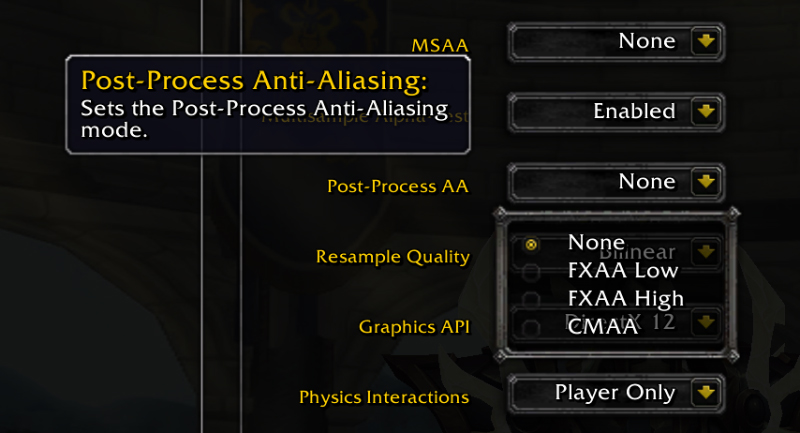

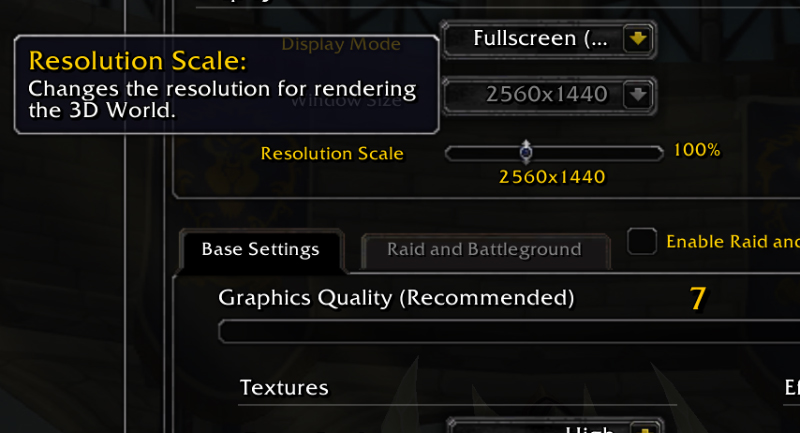

Bonus Chapter - The Elephant in the Room, Part II We need to talk about anti-aliasing. As a developer, you dream of getting the best quality for the best performance. Seldom is the case where a new technology comes up that offers both. That's not to say they don't exist - some cutting edge neural convolution post-processing effects that are just out of the oven are both the best looking and the fastest in their categories. But for the most part, the tradeoff is pretty obvious, and you know what you're getting into. Let's get back to FFXIV, and take a look at the Ambient Occlusion options:  You've probably seen hundreds of options ranging from "Low" to "Ultra" in games, but I like this FFXIV example. They're ordered from heaviest to lightest and it should be somewhat intuitive. I like this example because it gives you the name of the technology being used for the high quality options. And then there are pieces of information that have been distorted because reasons. There are options that, whenever you find them mentioned, are bound to have at least one person start a comment with "Um actually, the human eye..." with no source, logic or reasoning. One of my particular favorites is the very widespread information on how the human eye can only see one million colors, which is completely meaningless - how many of those million colors are dedicated to distinguishing hue and how many are for distinguishing luminosity? Are we talking one million total including every level of pupil dilation, or one million at any given state of eye adaptation, or one million across a very specific band of candelas per square meter? Are we taking in consideration the mach bands illusion when defining "discernible colors"? Are we ignoring the importance of transitional colors not to trigger edge contrast enhancement? Are we inadvertently triggering a cornsweet illusion and maybe producing even more colors in the test than required? 
Unfortunately, anti-aliasing falls into the category of stuff about which it's really hard to find good information.

Wait, what is anti-aliasing again?

Just so we're all on the same page, anti-aliasing refers to techniques designed to smooth out jagged edges, or "jaggies" if you're friends with them. Because your screen is made of dots (although saying this in itself is enough to make some people very angry, there has been no shortage of articles over the past few decades explaining that pixels are not little squares!), it's somewhat easy for humans to perceive the individual dots if their transition is not smooth. This depends on the average pixel density, how far you're sitting away from the screen, and how good your eyesight is. So for people working in cubicles right next to a 24-inch monitor, the perceived pixel density might be considerably lower than that of a 34-inch monitor viewed from further away, even if they have the same resolution. As resolution and perceived pixel density increase, these edges disappear.

For example, take a look at this image:

These are just a bunch of lines with no anti-aliasing. See how the blue diagonal line, in contrast with the vertical lines, is not smooth at all? This is often called a "staircase effect", because, well, it looks like a staircase.

And here's how the same lines look, anti-aliased. At a distance, the jagged effects should be mostly gone because of the softer transition.

Here are a few more lines. Notice how the magenta line is almost vertical, so the transition needs to be veeery sloow. The gradient needs to be super smooth. And here's the nightmare of everyone who ever had to model ropes for a boat:

Notice how the magenta lines now no longer connect. If an object is thin enough and far away from the camera, there will be raycasts missing it. The same happens to one of the lines at the top of the screen.

So what's the deal?

There are so many different types of anti-aliasing. So many. So, so many.
But there's no point in covering all of them. So here's what you need to know. There are two main forms of anti-aliasing:

A: Anti-aliasing that samples more than once per pixel in the screen - For a crude example, imagine that your screen is 1920x1080, or 1080p. Now imagine rendering the image at four times that pixel count, or 3840x2160, the famous 4k resolution. If you render the image at 4k and then downscale it to 1080p you're effectively assigning 4 samples to every pixel. These are generally speaking very costly performance-wise. Examples are the very appropriately named Multi-Sampling Anti-Aliasing (MSAA), Super-Sampling Anti-Aliasing (SSAA), Nvidia's Dynamic Super Resolution (DSR).

B: Anti-aliasing that runs an algorithm on the final image - These are post-processing forms of anti-aliasing. The image is already there, so an algorithm has to decide what to do with it. These tend to be lightweight. Examples are: Fast-Approximate Anti-Aliasing (FXAA), Conservative Morphological Anti-Aliasing (CMAA), Temporal Anti-Aliasing (TAA), Subpixel Morphological Anti-Aliasing (SMAA).

It's important to understand a key difference between both types, namely that with the exception of TAA, they can effectively be split as: Anti-Aliasing that adds information to the scene and Anti-Aliasing that subtracts information from the scene. This is because what most post-processing AA does is apply a smart blur to the edges, and every blur is a destructive operation (as I explained a few chapters ago, the limit of a more general blur, or its convergence, is the image being a single big flat color), while the limit of an infinitely super-sampled image is each pixel representing the perfect average of the objects contained within that pixel.

FXAA has had a few improvements over the years (we're at FXAA3 right now), but let's see how FXAA stacks with itself over a few iterations:

Baseline:

FXAA:

Notice how the gaps between the aliased lines still remain.
This holds true for most post-processing anti-aliases.  So yeah, the image ultimately converges to a blur. In the real world this isn't gonna happen (although there are crazy, crazy people who stack post-processing AAs and achieve a similar effect through injection) but it should give you an example of the destructive force of FXAA, while also being mostly useless in the cases it's most needed. Now bashing FXAA is fun and all but it's not like it's entirely useless, and funnily enough FXAA tends to scale better with resolution when all you need is a little push to eliminate some flaws. But there are alternatives that work better, and for reasons are not standard. SMAA for example is considerably better than other Post-Processing AA and popular amongst injectors, and yet...  Curiously, the most impressive engine when it comes down to giving you options on how you want your game to look is, I kid you not, World of Warcraft's:  MSAA in a Deferred Engine. WoW started as Forward-Rendering, then moved to Deferred. Most Deferred Engines don't feature MSAA and if you look for reasons why you'll just see comment sections of people teaching you it's impossible, although it hasn't been for, let's see, according to Nvidia... GTX 4xx. Yep that's been a while. You can't turn MSAA on Unity if you're going Deferred. But World of Warcraft has got you covered.  Can't run heavy AA? No problem. You even have an alternative to FXAA!  Not even I care about this poo poo but it's there, and this is a great thing. Here's some more good stuff:  A lot of games nowadays let you dynamically adjust the render resolution independently of your display's resolution. This allows you to play Overwatch on an integrated solution when your video card dies (seriously, Overwatch is optimized as gently caress), while also allowing you to effectively have the option to add SSAA. Since SSAA, DSR, Downscaling are all pretty much the same thing bar a few technicalities. 
This matters because for years support for MSAA and SSAA faded on the grounds of being "too heavy", while DSR got positive reviews when it was released, even though it's the same thing as SSAA. WoW even tells you the value of the rendered resolution instead of just giving you a % like 75% or 100%. Seriously, games need to do that. Of all the games in the world, yes, WoW is the one being used as a good reference for graphic fidelity. Which makes sense, since the game is mostly flat objects and baked textures, it needs to look as crisp as possible, but there you have it.

Temporal Stability is a thing and Screenshots will deceive you

One thing to note is that AA comparisons usually go with the screenshot approach, and under ideal conditions you can hack things to look the same. The problem is, most modern forms of AA don't deal with temporal stability, which is basically how an image changes over time. As I've shown you, lines can have gaps in them, and these gaps get blurred with bad AA instead of fixed, and while that's an okay solution for a screenshot (even I can't see the gaps in my monitor when zoomed out), the moment you start moving, things get jittery and immediately look not stellar, or inconsistent.

Doctor StrangeValve, or How I Learned to Stop Worrying and Love MSAA

MSAA is a form of supersampling that only supersamples the edges of objects. It's probably the oldest form of AA actively used by games. It's particularly good at dealing with small objects, so games with high-frequency details like, say, a Shmup, find it useful. It doesn't fix all forms of aliasing (namely the ones that occur due to texturing) but it fixes temporal stability while moving. And this is really important so that people don't puke their brains out in VR.
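A toy way to see the temporal stability problem: slide a line thinner than a pixel across a row of pixels and track how bright the row is each frame. This is a hand-rolled coverage test, not how any GPU or engine implements MSAA, and the line width, speed and sample positions are arbitrary assumptions. With one sample per pixel the line blinks fully on and off as it moves; with four evenly spaced samples it never vanishes and the brightness wobbles far less.

```python
def row_brightness(line_start, line_width, pixels, samples_per_pixel):
    """Sum, over a row of pixels, the fraction of samples that land on the line."""
    total = 0.0
    for px in range(pixels):
        hits = 0
        for s in range(samples_per_pixel):
            # Evenly spaced sample positions inside the pixel (an assumption).
            x = px + (s + 0.5) / samples_per_pixel
            if line_start <= x < line_start + line_width:
                hits += 1
        total += hits / samples_per_pixel
    return total


def flicker(samples_per_pixel, frames=100, width=0.3):
    """Move a thin line one hundredth of a pixel per frame.

    Returns the (min, max) row brightness seen over the whole animation.
    """
    values = [
        row_brightness(2.0 + frame * 0.01, width, 8, samples_per_pixel)
        for frame in range(frames)
    ]
    return min(values), max(values)


if __name__ == "__main__":
    print("1 sample per pixel :", flicker(1))
    print("4 samples per pixel:", flicker(4))
```

The exact answer would be a constant 0.3 (the line's width), so neither result is perfect. The single-sample version swings across the full range, which is the shimmer you notice in motion but never in a screenshot.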
From a Valve PowerPoint:

There'll come the day when we no longer need any of that but until then, and as long as my eyesight still works, it'd be nice to have MSAA in Unity's deferred pipeline, and a more diverse array of choices in games, like the aforementioned FFXIV.

Seriously, AA is Taboo

Perhaps the worst part of all of this is that bringing these things up is almost taboo in some places. One common post I've come across many times is how post-processing is good because it's fast, so if you need better then you have a good video card and thus you should just supersample the entire image - because there's clearly nothing between the choice of rendering the game at 90 FPS or at 30 FPS. And yet this is how it's been presented - all performance or all quality. As if it's the duty of an engine to offer you only the fastest solution and nothing else. Except for the heaviest solution possible, that too.

People treat it as a way of life. I just want my games to look as crisp and be as customizable as WoW across a diverse range of PCs. It's pretty amazing they turned an engine that evolved from Warcraft 3 into a reference for that in 2018, but I wouldn't mind seeing other games and engines be more open when it comes down to that.

Elentor fucked around with this message at 21:13 on Jul 13, 2018 |
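The two limits mentioned in the chapter (supersampling tends towards the true per-pixel average, repeated blurring tends towards one flat color) can be sketched in a few lines. This is a simplified illustration under assumptions of my own: the "scene" is a single made-up diagonal edge, the downscale is a plain box filter, and the blur is a three-tap average rather than FXAA or any real post-processing AA.

```python
def inside(x, y):
    """Hypothetical scene: white on one side of a shallow diagonal edge, black on the other."""
    return 1.0 if y > 0.37 * x + 0.2 else 0.0


def render(width, height, scale=1):
    """Point-sample the scene at the centers of a (width*scale) x (height*scale) grid."""
    w, h = width * scale, height * scale
    return [[inside((i + 0.5) / scale, (j + 0.5) / scale) for i in range(w)]
            for j in range(h)]


def downscale(image, scale):
    """Box-filter a supersampled image down by averaging scale x scale blocks."""
    h, w = len(image) // scale, len(image[0]) // scale
    out = []
    for j in range(h):
        row = []
        for i in range(w):
            block = [image[j * scale + dj][i * scale + di]
                     for dj in range(scale) for di in range(scale)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def blur_once(row):
    """One pass of a three-tap average over a row that wraps around."""
    n = len(row)
    return [(row[i - 1] + row[i] + row[(i + 1) % n]) / 3 for i in range(n)]


if __name__ == "__main__":
    aliased = render(8, 8)                       # one sample per pixel: only 0s and 1s
    smooth = downscale(render(8, 8, scale=2), 2)  # four samples per pixel
    print(aliased[0])
    print(smooth[0])

    row = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0]
    for _ in range(200):
        row = blur_once(row)
    print(row)  # every entry ends up very close to the row's average
```

The supersampled row contains in-between values along the edge that were computed from extra looks at the scene, while the blurred row only redistributes what was already there until nothing but the average is left.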

|

|

|

And yes that's a real shirt, and the original is about MBTI. Seriously. I just edited it a little. Edit: Also, yes to your next question. Waifu is real. Elentor fucked around with this message at 20:33 on Jul 13, 2018 |

|

|

|

waifu2x is a weirdly good piece of tech that I'll never use because it's called waifu2x. It also led directly to this abomination of science.

Anyhow, I'm going to take this opportunity to ramble somewhat aimlessly about aliasing in computer graphics. Gaming people tend to talk a lot about geometry edge aliasing (jaggies and crawlies) where the image transitions from one mesh to another but the aliasing problem in graphics as a whole is a lot more general. To say nothing of base signal theory and all the other topics subject to aliasing issues.

The basic problem is that pixels are points (they are definitely not squares and thinking that they are may cause Alvy Smith to bludgeon you to death with his Academy awards. You have been warned), yet the world around us and in our video games tends to consist of nice continuous surfaces rather than regularly spaced points. Producing pixel images thus means reducing those continuous things to points, which is a process fraught with danger. Aliasing is specifically a problem that happens when you reduce continuous things that are changing very quickly to a sparse set of points.

As a very simple example, I wrote this shadertoy which draws a single set of concentric circles centered on the mouse and zooms in and out on them. As everyone knows, a single set of concentric circles looks like this:

(Made extra aliased by timg. Thanks timg!)

The reason you get all that garbage is that the color bands start to vary much faster than the pixel grid, but the GPU still picks one color from one point to represent the entire pixel. This quickly goes very wrong and you get Moiré patterns, which also occur in similar scenarios in games -- Final Fantasy 14 actually has some lovely Moiré wherever it has to render stairs, for instance. You can also see that the circles are all jagged even on the right side, because each pixel picks either black or white even though it should pick some sort of average.
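Here is a small CPU-side sketch of the same failure, written from scratch rather than taken from the shadertoy. The ring frequency and sample counts are arbitrary assumptions. Far from the center the bands are about a third of a pixel wide, so a single sample per pixel returns pure black or pure white more or less at random, while averaging a grid of sub-samples lands near the mid-gray the pixel should be.

```python
import math


def rings(x, y, frequency):
    """Alternating black (0.0) and white (1.0) rings around the origin.

    `frequency` is the number of bands per pixel of radius.
    """
    r = math.hypot(x, y)
    return float(int(r * frequency) % 2)


def point_sample(px, py, frequency):
    """One sample at the pixel center, which is the naive per-pixel evaluation."""
    return rings(px + 0.5, py + 0.5, frequency)


def supersample(px, py, frequency, n=16):
    """Average an n x n grid of samples spread across the pixel."""
    total = 0.0
    for j in range(n):
        for i in range(n):
            total += rings(px + (i + 0.5) / n, py + (j + 0.5) / n, frequency)
    return total / (n * n)


if __name__ == "__main__":
    frequency = 3.3  # about three bands per pixel, far too fine for the grid
    for px in range(20, 30):
        print(px, point_sample(px, 0, frequency),
              round(supersample(px, 0, frequency), 3))
```

Note that the supersampled version only works here because 16 sub-samples per axis is comfortably finer than the bands. Push the frequency high enough and it aliases again, which is the general point: there is always a level of detail that outruns the sample count.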
In general, this is pretty hard to solve because we don't know how much information there is for a pixel to average. Game and GPU renderers in general do it by trying to take the properties of the geometry they consider covered by a pixel -- material color, material roughness, emission color and intensity, surface normal, etc -- and trying to produce a single average of those things. They'll then pretend that doing math on these averaged properties is representative of doing math on the entire covered surface.

This is of course incredibly and stupendously wrong. Mathematically it's pretending that f(2) + f(4) = 2f(3) for some unknown function f. However, in practice it turns out that the pixel-coloring lighting functions we're dealing with are frequently nice and kind so this happens to work. The remaining game aliasing areas are the big caveats where it doesn't.

Geometry Edges as mentioned. When drawing one thing the game doesn't know what geometry might be behind or in front of the thing it's currently drawing, so it can't average their properties. Nor would the average necessarily be all that useful if it could. MSAA will specifically look for geometry discontinuities and only do additional work there. It's also why level of detail is important: if you draw something with a lot of geometric detail that's smaller than a pixel then the averaging doesn't work, so in order to avoid aliasing you have to be able to swap out distant models for less detailed ones even if you don't need to for performance.

Reflections of Bright Things are trickier. Our averaging of the geometry properties is fine if and only if the lighting that's coloring that geometry doesn't vary much. Unfortunately, in a lot of typical cases things are lit by very small, very bright things, such as a luminous ball of plasma a very great distance away.
The difference between "slightly reflects the sun" and "does not reflect the sun" for something like polished metal is massive, and edges between the two conditions suffer the exact same problems. Non-geometry material boundaries are somewhat application-specific, but if you've got some gribbly light sources next to some metal or similar then you'll often have jagged edges and shimmering if the game fails to blend between the two. MSAA doesn't necessarily help here. Final Fantasy 14 again has some lovely examples, like pretty much the entirety of Azys Lla (which is noticeable in that video, and way worse without YouTube compression). [E:] Better example. Still from Azys Lla, because I like that FF14 zone. This is my trusty, uh, Accompaniment Node. One shot is up close, one is enlarged at a distance  vs  At a distance the thin emissive elements are aliased to hell, since the one sample for the pixel will either randomly hit a red light or not. And that's with the fullscreen filter on which tries to smooth it out but can't keep up. A different renderer could structure its materials such that it can blend the emission and wouldn't necessarily have this particular problem. Games of course work around these things. Lighting in games tends to be soft, because harsh lighting has aliasing issues. Materials in games tend to not be all that shiny, because shiny materials have aliasing issues. And so on. Aliasing is one of those fun things that's also a problem over in offline render land, but our problem scenarios tend to be slightly different. A path tracer will have no problem taking a couple of hundred samples per pixel, but you can easily construct scenarios where there's enough significant detail that 1000 sample anti-aliasing is still not even close to enough. Say, for instance, you model a scratched metal spoon. You then place your lovely spoon on some virtual pavement on a virtual sunny day and place your virtual camera in a distant building. 
You point the camera at the spoon. The sun is, again, very bright, and you will expect to see it glint in the scratched metal. Your renderer's odds of actually finding a particular scratch on the metal that contributes are infinitesimally tiny, even if the "SSAA" pattern for an offline renderer looks like this rather than this. There are solutions to even that sort of scenario but they also involve clever averaging. Hell, the idea of a "diffuse" surface and microfacet theory, which together form pretty much the entire light-material model used by modern computer graphics, games and otherwise, are both big statistical hacks to get around the fact that what largely determines the appearance of things are teeny, tiny geometrical details and imperfections that are way, way smaller than a pixel and thus hard to evaluate individually. It's aliasing all the way down. Xerophyte fucked around with this message at 08:04 on Jul 14, 2018 |

|

|

|

Elentor posted:Edit: Also, yes to your next question. Waifu is real.

|

|

|

|

Xerophyte thanks for your posts, they're nice to read. I'm glad someone noticed that "not a little square" reference.  I'm grateful for FFXIV, it's the perfect game to use as a reference since it's both far more modern and hilariously enough using far less sophisticated technologies than WoW, a much older and cruder game. Both games are MMOs and have to keep being updated for years/decades while still retaining ancient stuff buried in their code. FFXIV has just the right amount of bad examples. Also looking at that image of patterns you posted, I get the appeal of Quincunx (5 samples!!! at the cost of 2!!!) but good grace is it ever an abomination to behold. Xerophyte posted:It also lead directly to this abomination of science. I had totally blanked that out of my memory. You know what? gently caress it, if that's what science is nowadays, I'm gonna use it. I'm gonna put those fake neurons to work even if just as a basis for drawing portraits. I mean, that's how I started doing procedural ships in the first place, hoping they'd be nice concept art for me to model on top of them. FractalSandwich posted:Ah yes, Wide-Area Intra-Field Upscaling. Of course. I don't even think they're gonna backronym that one.

|

|

|

|

Totally a tangent, but: the Moiré pattern discussion is very interesting to me because I'm used to thinking of interferential patterns as a good thing. I work in manufacturing and interference patterns are commonly used for precision measurements, from the simple vernier scale on micrometers to interferential glass scales and encoders to interference patterns of reflected light, thereby magnifying small linear movements. It's always interesting to see a problem in one area being used to solve another area's problems.

Karia fucked around with this message at 22:08 on Jul 14, 2018 |

|

|

|

I think I accidentally made a traditional turn-based RPG while taking a break and playing with the itemization system I created for TSID.

|

|

|

|

Elentor posted:I think I accidentally made a traditional turn-based RPG while taking a break and playing with the itemization system I created for TSID. You can't write that and not post more.

|

|

|

|

I read that as, "I accidentally turned the Items menu into an RPG." and wanted you to turn the Options menu into a rhythm game next.

|

|

|

|

Anticheese posted:You can't write that and not post more. Sure, I'll give you a whole chapter on it.

|

|

|

|

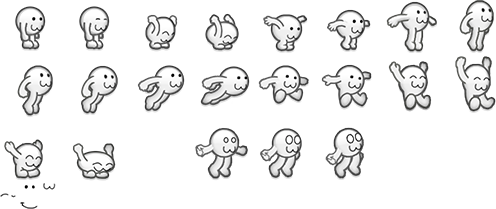

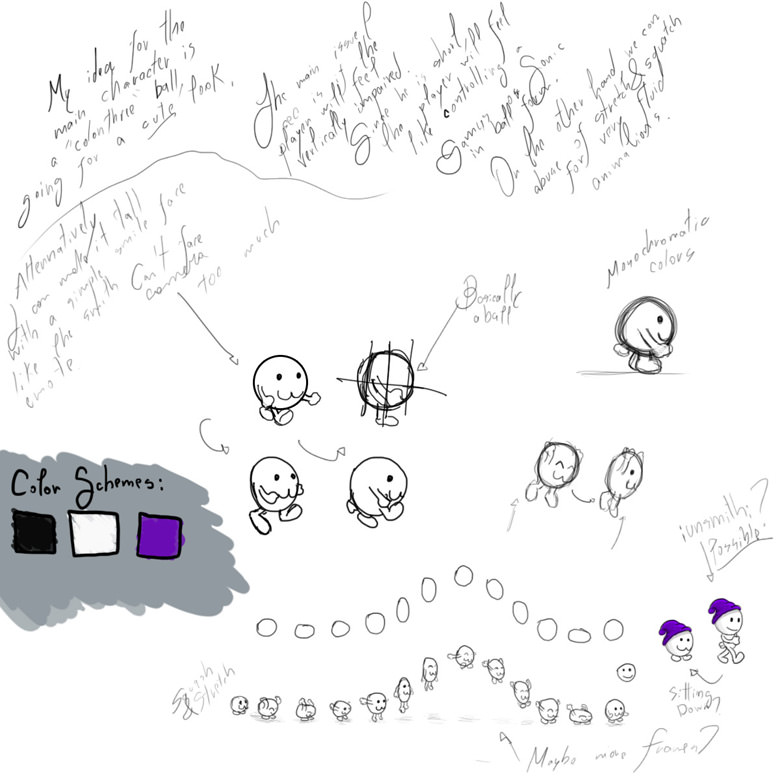

Chapter 34 - I think I accidentally made an RPG, Part I Yeah, this happened. These are gonna be long posts but I think they're worth your time. Origin Story So, a long while ago I tried to make an RPG and the code worked but it wasn't exactly good. Making RPGs isn't exactly easy. Over 8 years (how long I've been coding) I've tried it three times - the first time I didn't get past the early stage. The second and third time I got stuck in different parts of the combat. The code worked but it wasn't exactly scalable and it was clear from the start that the whole thing was gonna crumble as soon as someone shook it a little, but I learned a lot from these experiences. In case I haven't told this story: it's for all intents and purposes a Flash game post-mortem. This is how I learned to code: I had a presentation to give about game development in a government-sponsored contest. It was an entrepreneurship contest that mixed people from different areas, most of whom were technology-based, and they all got eliminated very quickly - over the course of a year I managed to stay in by making very accurate predictions of game sales, public reception and game announcements, something which was only possible because the entire contest ran for a year. So in the end, to prove I wasn't full of poo poo I had a month to complete a game, which had to be presented in the main event and stay turned on there for an entire day, so no memory leaks. I went with a "Doodle Jump" clone because I just needed something there, and we went with Flash. So I hired a coder, did all the graphics, had a pretty game design document, and the coder bailed on me with one week left. That was a contender for craziest week of my life. I don't think I slept more than 4 hours a day during that week. I had some experience with very low level reverse-engineering of formats, but no actual coding or knowledge of programming logic. 
So I learned the basics of the Flash API, and through some miracles I managed to pull it out. I remember wasting hours with sound and music because of Flash idiosyncrasies, writing all the action-script code in a single class, rolling my own for loop because I didn't know loops were something built-in. My final build compiled with about 3 hours left, which I used to test and make sure the thing worked before I rushed to the event. Against all odds the thing worked and for some 8 or so hours people came and went and played it on the browser and the thing didn't crash. I don't think words can express how tense I was about that. The story has a happy ending as I was one of the finalists of the event. I went back home and I slept, I slept a lot. Here's the sprite sheet. Though it's something I did almost 10 years ago, I'm still proud of my little  Now, those things worked, for me, on a Doodle Jump game. It was not easy, but the game was out. Over the next month I added a lot of things to it, published it on Newgrounds, added a skin system, hooked with 30 achievements for the achievements API, and in general did a bunch of stuff that I haven't in years. There was a functional leaderboard linked to newgrounds, different difficulties, separate leaderboards for the difficulties. I'm a bit regretful for having removed it a few years later though I still have all the original files and what not, so here's some screenshots:   There was even an attempt at monetize it that hooked up to other APIs, which was from an e-mail we got from a big Chinese hub offering to publish it if we untied the skins from progression and made it monetized instead. This version never made to newgrounds, and it never made us any money either, but it was still a good learning experience on how to (at the time) implement those things. Here's a really old animation, this being just a mockup. At this point I had done the art but no coding and I was super anxious to see how it'd look in action. 
The see-through moon kills me but it's such a hallmark of a cute cartoonish style that I'm not even sure I'd change it.  The character was based off colonthree doodles I did at the time, that I ended up using for an Awful Jam project in which I was the artist. It was a little game called You Can't Stay Awake. It had some very original and fun game design elements - the level changed as you got sleepier, if I recall. The final level design was a bit rushed and could have been a lot better, it was an idea definitely worth exploring. We ended up second place at the time. The game had a very hand-drawn feel to it. The art was really cute and the way everything became dream-like as you got sleepier makes me happy to see to this day. If you enjoy watching unfinished stages of Sonic games in development this might interest you.    ------------------------------------------------------------------------------------ Learning how to Code So I had come up with this little colonthree character, did art for a jam, and then made a game with it. The reason I'm going off in this tangent is because those things were doable with a total lack of understanding of coding. By the time I wrote the jump game I had absolutely no idea how to do anything at all, which is also why I admire The White Dragon so much for his original work on Dragon since I know how hard it is to put something out there, finished, and to say my code was garbage would be a real insult to garbage. The fact that the whole thing worked was a bit art out of adversity, but it would never have been doable in a week at the time if it was anything more complex than a jump game. With that said, I'm proud that I got so much mileage out of it in terms of experience, because to this day this was still the only time I hooked something up to an achievements/leaderboards API, for example. Before that project I had worked almost exclusively as a 3D Artist. 
Afterwards, I started working on projects as a Technical Artist now that I knew a bit of coding and art. Structuring things in an RPG isn't intuitive, and the implementation can vary a lot from language to language. I'm gonna give a simple, very specific example of something you pick up with time. For example, I actually had a realization while writing a freelance project in JavaScript that a bunch of stuff that was trivial in JS was trivial because I could lambda everything with complete disregard for one's sanity. Since JavaScript is weakly typed, writing things like spell effects or uses as lambdas was very easy and things like a string or a dynamic function that returns a string could be passed around interchangeably. I'm pretty sure this isn't the sane way to do things but it works. Everything in JS works. JavaScript cares not for your coding practices. This made me realize that instead of just abusing inheritance in C# (A Fireball is a Spell which is a Skill which is a GameEntity which is an object), I could make simple classes with proper delegates. Keep in mind this was part of a learning process. For reference, a Lambda Expression in the context of high level programming is a function that is not coded as a named method. In very layman terms, here's an example: item.heal = 100; item.description = "This is a potion that heals you for 100 HP."; Whenever you call for item.heal, you'll receive the value 100. Whenever you ask for the item's description, you'll receive the message "This is a potion that heals you for 100 HP.". If item.description, instead of returning a text, returned a delegate function that returned a text, you could then write it like this using a lambda expression: item.heal = 100; item.description = (item) => { return "This is a potion that heals you for " + item.heal + " HP."; }; Now, whenever item.description is invoked, you'll get the same message. However, if you change item.heal from 100 to 120, you won't have to update the description. 
If you have a skill that buffs the item heal, the description might also update automatically. So this looks like a huge improvement, right? But this huge step forces every expression to live in the game's code. The item description is now hardcoded in the game, and this doesn't make things easier for modders. It also can't work if the game's DB is too big. There's no way you can manually input every single item description in the game by code and not lose your sanity. If you're putting things in a traditional database, they all have to be done in pure text. So now an item description needs a parser. Again with WoW since that game is a great example, if you've played enough betas you might have seen once or twice the NPCs saying stuff like: "Hello, $playerName! A mighty $playerClass like you is perfect to help me, $npcName. Collect these 10 $questItem1." This is usually because one of the variables is missing, or the function is not hooked up, or the text is not being parsed, for whatever reason. What happens is that you're seeing how the text is always written behind the curtains. This is such a staple of gamedev that there's a track in Portal named after it. In case you don't remember the joke, check this video at the timestamp. I mean, this seems like a fairly straightforward database joke that probably everyone got. It's funny, and simultaneously it provides some eerie sense of abandonment (you're not in the computer's database, for all intents and purposes you don't exist and there's no one coming to your rescue. Is this facility even still active? Is anyone overseeing this project >at all<?). Like most things in Portal, it's a really simple but good idea being used properly. And in this case, it's also a reflection of how a dev ultimately has to deal with scripted events. So now, the item description needs a parser that, whenever it detects a $word, hooks it up to a function. 
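A minimal sketch of that kind of parser, written in Python rather than the C#/JavaScript discussed above. The `RESOLVERS` table, the `render` function and the shape of the context dictionary are all hypothetical names made up for this illustration; this is not how TSID or WoW actually do it.

```python
import re

# Hypothetical resolver table: placeholder name -> function of a context dict.
RESOLVERS = {
    "playerName": lambda ctx: ctx["player"]["name"],
    "playerClass": lambda ctx: ctx["player"]["class"],
    "heal": lambda ctx: str(ctx["item"]["heal"]),
}

# A placeholder is a dollar sign followed by an identifier, e.g. $playerName.
PLACEHOLDER = re.compile(r"\$([A-Za-z_][A-Za-z0-9_]*)")

def render(template, ctx):
    """Replace each $word with its resolver's output; unknown words stay as-is."""
    def substitute(match):
        resolver = RESOLVERS.get(match.group(1))
        # Leaving the raw token in place reproduces the "$npcName" beta bug.
        return resolver(ctx) if resolver else match.group(0)
    return PLACEHOLDER.sub(substitute, template)

if __name__ == "__main__":
    ctx = {"player": {"name": "Hero", "class": "Mage"}, "item": {"heal": 100}}
    print(render("This is a potion that heals you for $heal HP.", ctx))
    print(render("Hello, $playerName! Help me, $npcName.", ctx))
```

The template stays pure text, so it can sit in a database or a mod file, while the dynamic part lives in a small table of functions. A placeholder with no resolver is left untouched, which is exactly the kind of raw `$word` text that leaks into betas.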
I want you to appreciate that this is a very long segment about an item description. You can always boil it down to writing a simple text. I played some FFXII in my break and that game loves not to tell you anything useful at all in any item or skill description. But tooltips in many games tell you dynamic numbers. So you see, telling you what an item does is not always a clear cut case. And this ranks very low in terms of how to structure an RPG. When it comes down to "how in heavens am I to make this work?" getting a description text barely scratches the surface of problems you have to solve. ------------------------------------------------------------------------------------ A Time Scale Now I want to talk about something else, time scale. It's not the first time I've brought it up, but time is always an important thing to keep in mind. This thread has been going on for about a year. I'm not particularly concerned about duration since my last thread went on for four and to be quite honest I enjoyed it. I hope it keeps going for a while, writing is a lot of fun to me. The first game I produced from start to finish took a month. It was 3 weeks to do the artwork, and a week to learn to code and code an Eldritch abomination. Afterwards I polished the little game for another 2 months, was happy with it, and moved on to other things. Around that time, I read that Fruit Ninja took 3 months to make, by a team of 3 people, someone correct me if I'm wrong. That seemed about right to me. Ultimately, indie games are long endeavors. Braid took 3~4 years to develop by a very small group of people, mostly Jonathan Blow designing and coding it. In my experience, the art is a huge pain in the rear end and it's the part that typically burns me out. 
Over time, one of the things I learned was to severely reduce my ambitions when it comes down to graphics, because making this dagger:  Is a lot more effort than making this:  While being a >lot< less effort than the careful pixel art animation done for Sonic Mania. So when you see indie games and they all look... not AAA, well, that's one of the many reasons why. Even if you could, the effort is humongous, and so usually is the time spent. And when it boils down to time, ProcGen is an entirely different category. ProcGen games are very tempting. They're fun to make, they seem simple on the surface, and they're an incredible never-ending nightmare that are usually released after 2-3 years of development in an Alpha/Beta state, and development continues for 3-10 years after. Minecraft's creator was one of Wurm Online's founder back in 2003. So Minecraft took about 8 years of experience and development (even if code wasn't reused, concepts are. This is what this post is about, all the things I learned over time) on procedural terrain, not including his coding experience before that. ProcGen games are long endeavors. Space Engine has been going on for 11 years. There have been people asking me to include features in TSID that took years on Space Engine. Path of Exile, a game that I believe to be very similar to mine in some aspects, underwent development for 3 years before its announcement. At this point I'm satisfied with the rate things are going for TSID. There's a game that works and an API and a platform. I can open up and start making things very easily, but I'm a bit burned of the 1 year doing the ShipGen + 1 year working on TSID, coupled with all the jobs I'm doing to keep funding the project. So here I am, looking at this huge chunk of code I wrote for TSID and I realize something. I'm not particularly ashamed of it. This doesn't happen very often, as coding is usually a mess and all code is bad. 
I go back to one of my RPG games and look at a state machine I wrote. It sucked. It was really, really bad. I remember, as I wrote it, being embarrassed. I'm looking at my state machine for TSID and it looks really good and clean. It's not perfect, but it's so much cleaner. Fifth time's the charm, right? And I copy paste it to my old RPG prototype and it works. Somehow. Then I look at the item system I wrote for TSID. It's so easy to use and intuitive, code-wise. It's built on years upon years of experimenting and failing, and iterating on successes. It was written and rewritten (just see how many times I mention "refactoring" in my project.log) until it was cleaner than any code I built before. I look at my old RPG project and I paste stuff over it. And it works. People hate singletons with a passion, and I know very well why - I was writing singletons before I knew what singletons were. It's how I intuitively coded my first projects. So I know why they suck, but I've been making them suck less, and I've been understanding how to decouple things more and more. My previous S/O worked as an oil platform coder at the institution that was the birthplace of Lua, and during our time together she was kind enough to share with me so many secrets of using singletons and decoupling elements properly to create a smooth modular programming experience. In exchange, I told her, to her surprise, that lambda expressions actually had a use.  Now I look at my singleton architecture. It's not perfect. Nothing in my code is. But it's so clean. I revisit my old RPG project and I just paste stuff over it. And it works. The base structure of the project is a mess and it will make things suck in the long-term, but all the new things I did work out of the box. So then I just delete the whole thing and over the past week, for fun, I casually reconstruct it by just copy-pasting the highly modular structure I built for TSID over a year. And they fit in. 
And today I compiled and a little ATB bar moved. I clicked "Attack" and I attacked a bat. I think I accidentally made an RPG. NEXT TIME: More reminiscences! Modding! I might finally get to PBR!

|

|

|

|

Elentor posted:I go back to one of my RPG games and look at a state machine I wrote. It sucked. It was really, really bad. I remember, as I wrote it, being embarrassed. Unless that code uses the Catch block of a Try/Catch/Finally for an actual programmatic purpose, you can probably feel a little better. Yes it is I, programming's boogeyman.

|

|

|

|

Kurieg posted:Unless that code uses the Catch block of a Try/Catch/Finally for an actual programmatic purpose

|

|

|

|

I can't for the life of me remember why I did such a thing, other than being maybe 3 years out of college and more amused with myself that I did it than anything else. I think it was a workaround for some black box code we weren't allowed to touch, and it worked well enough to get pushed to Test, but it behaved oddly under certain circumstances. Eventually it got kicked up to the Tech Lead who was able to make a proper functioning code block that didn't make everyone on the project look at it in horrified wonderment but it took around 16k characters compared to my 100.

|

|

|

|

That is pretty interesting how very different sorts of games can have similar structures under the covers!

|

|

|

|

90% of a computer science education is learning the good patterns for building software. These come in the form of generic data structures (which allow you to store and retrieve different types of data depending on which operations you need to be fast), algorithms (generic solutions to problems, like searching for items in a list, sorting the list with different performance characteristics, or detecting whether one object is blocked by another in 3D space) and design patterns (generic solutions for structuring an entire program; one pattern is the singleton pattern and another is the model-view-controller). The reason many programs are so similar is twofold: these generic solutions have been developed by hundreds or thousands of people over decades and really are better than what any self-taught nerd with an Intercal book and 3 weeks of "experience" can come up with, and experienced developers live and breathe these solutions and (sometimes over-)apply them generously. The real reason lambdas are useful is higher order functions. State machines are a simplified model of computation, which is relatively simple to understand and often sufficiently powerful. A more generalized version is a Turing machine (named after Alan Turing), which is basically a typewriter with a state machine. A Turing machine is the accepted model of a computer and has been since the 1930s. Another model of computation is Gödel's recursive functions, invented slightly before the Turing machine. Gödel's recursive functions lean on higher-order functions, functions that can take functions as parameters. A normal function typically takes values, for example:

[code samples missing]

This allows us to make algorithms that are much more generic. For example, if we implemented a simple sorting function, it would need a way to compare values. If they are numbers, we would just use > and in most languages, we could use the same for strings. For, say, persons, we would need to specify how one person is "greater than" another (e.g., based on age, blood type, wealth, etc). We could then sort numbers using

[code samples missing]

All these "anonymous" functions are called lambda expressions, named after a third formalism used to describe computers, the lambda calculus, another model of computation from the same period, which interestingly describes exactly the same things as Turing machines and Gödel's recursive functions (and the mu calculus, and Chomsky's unrestricted grammars, and suitably extended Petri nets, and a myriad of other formalisms). Most programming is a matter of combining such primitives, and becoming a good programmer is knowing as many of these primitives as possible; primitives are like a carpenter's tools: the more tools a carpenter has available, the more tasks they can solve, and a hammer can be used both to hammer a nail into some wood and to open a bottle of beer.
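The code samples in the post above are missing, so the following is only a minimal Python sketch of the ideas it describes, not a reconstruction of the original code. `twice`, `sort_by` and the sample people are hypothetical names and data invented for illustration.

```python
from functools import cmp_to_key

def twice(f, x):
    """A higher-order function: it takes another function f as a parameter."""
    return f(f(x))

def sort_by(items, greater_than):
    """Sort a list using a caller-supplied 'is a greater than b' test."""
    def compare(a, b):
        if greater_than(a, b):
            return 1
        if greater_than(b, a):
            return -1
        return 0
    # cmp_to_key adapts an old-style comparison function for sorted().
    return sorted(items, key=cmp_to_key(compare))

# Numbers can simply be compared with >.
numbers = sort_by([3, 1, 2], lambda a, b: a > b)

# For persons we have to say what "greater than" means, here: older.
people = [
    {"name": "alice", "age": 36},
    {"name": "bob", "age": 41},
    {"name": "carol", "age": 28},
]
by_age = sort_by(people, lambda a, b: a["age"] > b["age"])

if __name__ == "__main__":
    print(twice(lambda n: n + 1, 0))
    print(numbers)
    print([p["name"] for p in by_age])
```

The sorting routine never needs to know what it is sorting. Everything type-specific is carried in by the lambda, which is the generality the post is pointing at.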

|

|

|

|

ok, but can I write a computer game in R (this is my pale simulacrum of a programmer joke, much as an engineer who knows how to use R like this toadfish is a pale simulacrum of a programmer)

|

|

|

|

|


|

Kurieg posted:Unless that code uses the Catch block of a Try/Catch/Finally for an actual programmatic purpose, you can probably feel a little better. One of my past jobs was cleaning up a program written primarily by biology graduate students. One pattern I saw periodically was: Python code:(Never mind what would happen if they tried to pass a zero-length array to the function)

|

|

|