|

Xerophyte posted: Another is that we're at the point where it's technically feasible, but not really practical. A couple of researchers willing to push the envelope and given a couple of weeks of extended ANN training before every balance patch could probably make a decent ANN for playing Civilization, or at least for handling some particularly hard and ML-appropriate sub-task like finding a good set of potential moves for a unit given the current game state. That's not useful enough to do for a release, and I think more boardgame-like games such as Civ are probably one of the easier targets as far as using ANNs for real game AI goes.

The other problem with games like Civ is that if you define your goals poorly, every game will just result in the AIs openly joining into an alliance and beating the player's face in, rather than it just seeming like that's what they're doing.

|

|

|

|

|

| # ? Apr 20, 2024 04:51 |

|

Kurieg posted: The other problem with games like Civ is that if you define your goals poorly, every game will just result in the AIs openly joining into an alliance and beating the player's face in, rather than it just seeming like that's what they're doing.

You joke, but that sort of thing is a real problem for trying to use a neural network or other machine learning approach to make an interesting overall AI for even a fairly boardgame-style game like Civ, and those are the games where we know the most about how to apply all these machine learning techniques. Using the state of the art techniques of today I think Firaxis could probably train a deep neural network to beat any human player at Civ, if they hired the right people and threw Amazon machines at the problem for half a year. It'd be a ruthless Civ machine, backstabbing at the drop of a hat, ganging up on anyone who seems the slightest bit ahead, and absolute unfun dogshit for a human to play against. We might figure out how to train for entertainment someday, but there are a lot of open questions to answer before anyone heads down that route.

Using ML to solve sub-problems is much more tractable. You can use machine learning for all sorts of hard stuff that we don't quite know how to do: doing keyframe interpolation in your animations, solving complex many-body physics interactions without needing too many local iterations, doing post-process filters like motion blur and bokeh on your graphics, suggesting city placement locations, and so on. For the actual AI, eh, not so much.

|

|

|

|

Would these hypothetical ML Civ AIs also gang up if they did not know who was AI and who was human, and could only communicate with other players with the same tools human players have to communicate with AI players? Okay, I suppose they could end up developing a kind of language using the restricted alphabet of the negotiation interface.

|

|

|

|

|

Hey Xerophyte, thank you for your posts. They're always very useful and I appreciate your contribution.

KillHour posted: I watched this last night and while I kind of grasp what's supposed to be happening, I still don't have any intuition for what that means with the numbers. I think I'm just too dumb for 3b1b's videos.

3b1b isn't the most intuitive of math channels, unfortunately. And I've seen him overcomplicate issues that are very simple, sometimes even things that I already know. The "trick" to 3b1b, and I say trick in the loosest sense, is that a lot of his solutions and explanations are ingenious enough to be worth replicating yourself. I say this as someone who really detested school and the way we're taught to learn things, but in 3b1b's case it's really useful. If you pause the info dump and attempt to replicate what he's doing, even if you don't understand it, you'll end up with a much deeper understanding of the behind-the-scenes than a surface-level explanation would be able to convey.

nielsm posted: Would these hypothetical ML Civ AIs also gang up if they did not know who was AI and who was human, and could only communicate with other players with the same tools human players have to communicate with AI players?

Well, you can make it part of the training conditions that the player is indistinguishable from other AIs in terms of objectives, but there are still things to solve behind that which make the problem not clear-cut. And Xerophyte is right in that making ML to defeat a player is definitely doable. Making AI opponents that are fun to play against, however, is a whole other story. If anything, consider how many game developers/designers don't know how to do that when coding an AI themselves, and now imagine what kind of instructions you'd need to pass to properly train an AI with the purpose of being fun.

|

|

|

|

hey, TMA you may want to read this: https://qz.com/788987/facebook-fb-and-intel-intc-built-killer-ais-that-dominated-a-doom-video-game-competition/

|

|

|

|

Next Chapters should be up around this weekend. To all the people still following the thread, thank you for your patience.

|

|

|

|

Elentor posted:Next Chapters should be up around this weekend. To all the people still following the thread, thank you for your patience.

|

|

|

|

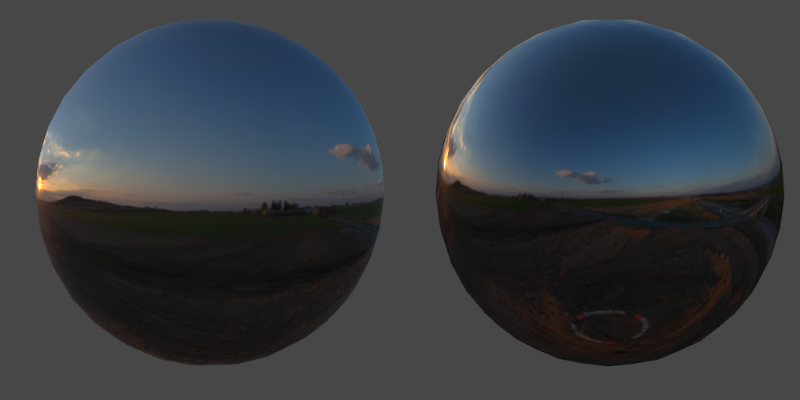

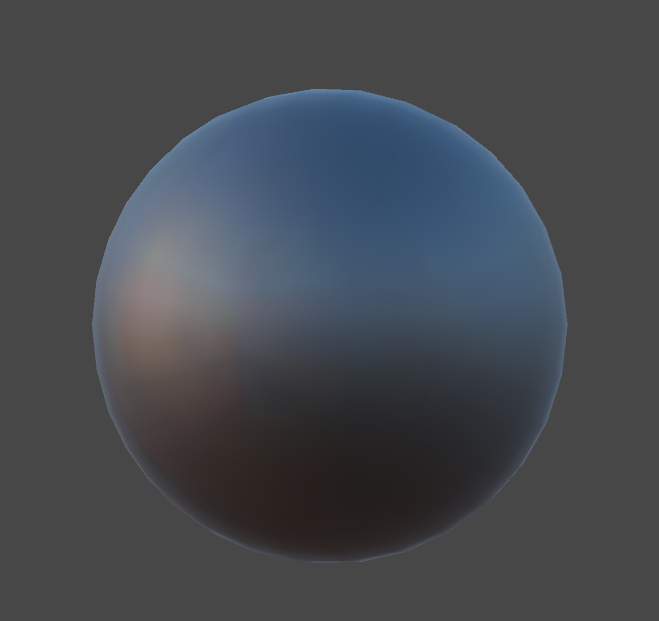

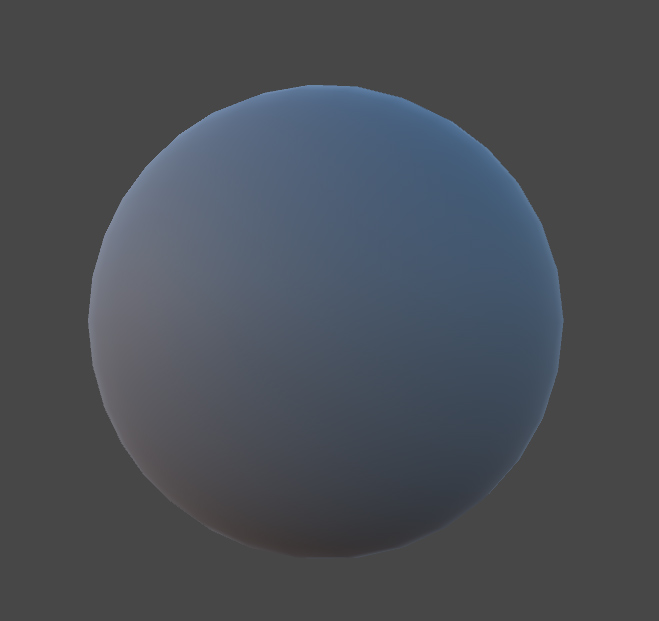

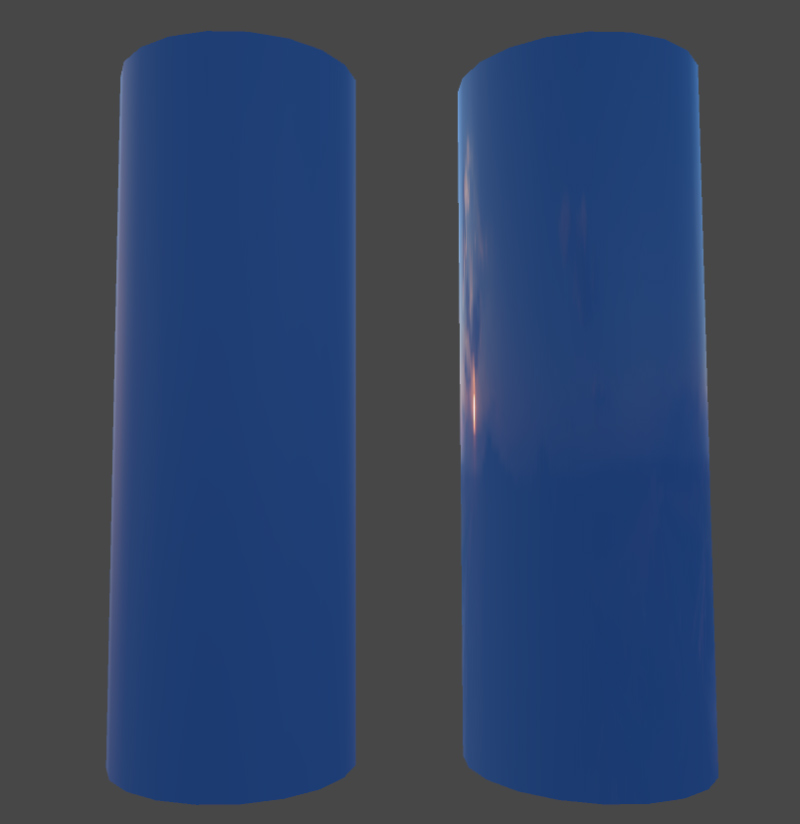

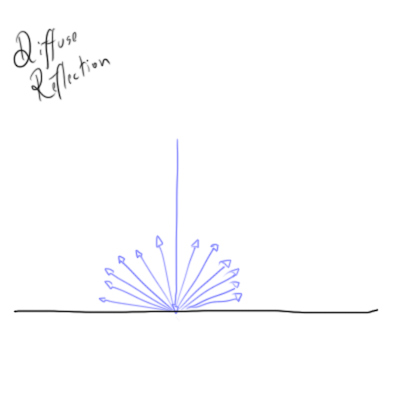

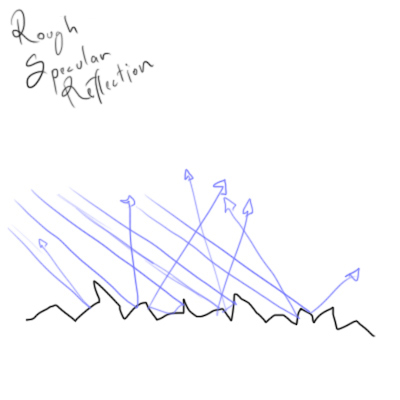

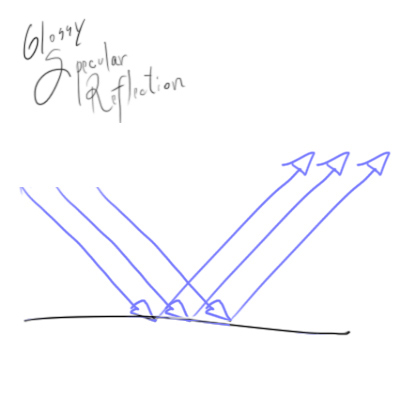

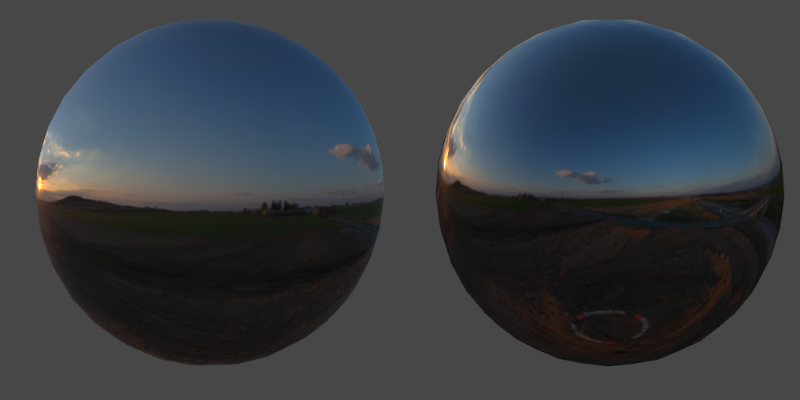

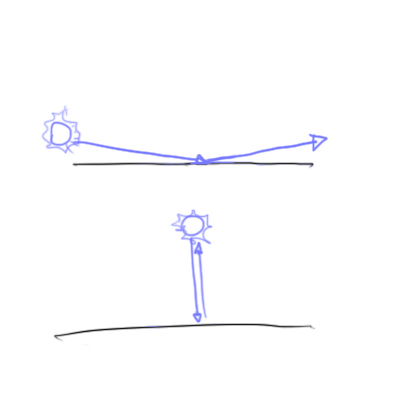

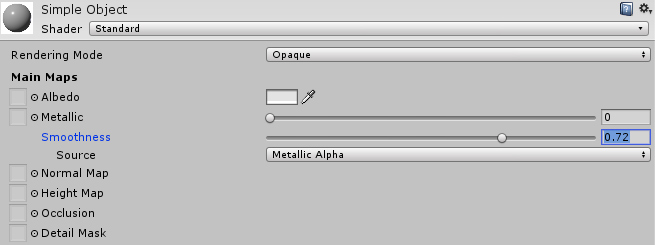

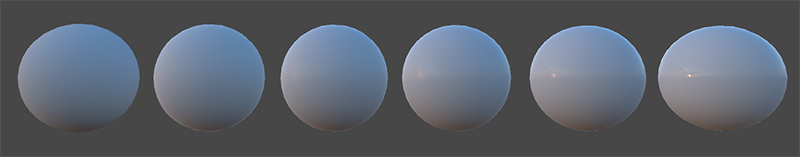

Chapter 36 - Real-Time Rendering, Part V

Herein we finally get started on PBR. There was a lot of build-up to get to this point, but I think you'll understand why. The modern pipeline is really complicated, and it is no easy task trying to explain it. It's also a pipeline we can't run away from anymore - everything uses it, even a lot of cartoonish games, save a few exceptions. So let's start from where we stopped, and see the next logical step:

Image-Based Lighting

So last Chapter we saw how people used panoramas to mimic reflection. For a long time now, people have been using HDR images in offline renderers to produce photo-realistic images. The idea is simple: instead of manually setting up an entire rig of individual light sources, what if we simply take an image like this...

But instead of an LDR image, use an HDR one with a very large range, so that the white from a sun in it is thousands of times stronger than the rest of the image, use it as a skybox, and let it be the sole source of lighting? In fact, you don't even need to use it as a visible skybox, and you don't need it to be the sole source of lighting. However, by simply doing that you have photo-realistic lighting by definition. Your source of lighting, after all, is a photo. With a renderer that can accurately ray-trace and simulate how that photo would light your 3D models, you have the easiest shortcut available to render images. For example, instead of taking pictures of a car in a studio, you could now have a 3D model of a car and simply use the HDR of a studio panorama. A quick Google Image search for "studio hdr" will in fact show you plenty of cars: https://www.google.com.br/search?q=studio+hdr&tbm=isch

Studio HDRs don't even need to be panoramas of a real studio. The lighting is so simple to set up that you could make a 3D model of a studio, render it, and then use that rendered image to light your objects.
In fact, there's a program entirely dedicated to it: https://www.lightmap.co.uk/ I recommend you take a look at the gallery to get an idea of what sort of stuff is possible with 3D renders using Studio HDRs: https://www.lightmap.co.uk/gallery/

So yeah, basically every product you see is rendered like this. Notice, however, that with Studio HDRs you're mostly gunning for those sweet reflections, but not everything needs to shine like a car. At some point someone realized - what if we move away from simple Lightmaps/Ambient Lights and use these panoramas as a source of lighting in the scene? And thus Image-Based Lighting in games was born.

Physically-Based Rendering

Implementing a simple IBL is pretty easy. Instead of reflecting light, we just use the image as a texture as usual, being projected from infinitely far away, like the left image here:

Except this time the texture doesn't rotate with the object, since it's being projected from the world. But wait - in the real world, the lighting is never this smooth. If it were this smooth, then it would be a reflection. And reflections have all sorts of distortions, as we know. So we blur it...

And blur it.

Now this looks like something being lit by that panorama... kinda. At this point we have nice lighting, but we also have a dull material. It looks like rubber, and there are lots of other types of materials that would be reflecting the sun while still being lit by this blurry version of the panorama - that's why we invented speculars in the first place. For example, plastic. You can have a dull, rubberish material, or you can have a PVC material like the one on the right:

At this point it must be clear that, unless we're willing to carefully simulate the way light bounces (and that's what ray-tracing is all about, but we're not there yet - even if you have a fancy GeForce RTX 2080, we still need to get through this), a single blurred image is not enough.
There need to be two different types of reflection - the blurry one, and the distorted, reflective one. And this is because of the difference between Diffuse and Specular Reflections.

Diffuse Reflection has to do with the light that is first absorbed by the material, then reflected. Light enters the object, is scattered, and is spread around. Most objects have a high amount of diffuse reflection - the color we see is filtered from multiple bounces even at what seems to be the surface level.

Specular Reflection is the reflection that happens at the surface level. The idea is that when dealing with a perfectly smooth material, light will bounce off of it and good things will happen. You will see a reflection based on the angle of incidence. Because the reflection can never perfectly bounce back (as it will only do so if it's perpendicular to the surface) you get this kind of distortion. Again, look at this image:

The left image is impossible because it would imply every ray is being reflected along the perpendicular of the sphere to your eye. When you look at grazing angles, the distortion of the reflection increases.

However, what if you don't have a smooth material? As we saw here:

The theory of microfacets states that "rough" looking objects look so because they have a lot of imperfections, the aforementioned microfacets, that bounce light all over. As the surface roughness increases, the specular light becomes blurrier, and the object approaches a completely diffuse look. With light being bounced all over, a lot of light from different angles is mixed. Because the first law of thermodynamics, also known as the Law of Conservation of Energy, states that energy cannot be created or destroyed in an isolated system, the surrounding light will blur while at best maintaining the overall incoming illumination.
That is, an object can look darker if it absorbs light, but it cannot look brighter than the light that hits it (unless it has its own source of illumination, of course). But to simplify it, the texture can be just blurred using the rules we've established previously. This means that at extreme roughness, an object reflecting a yellow and blue scenario would look gray (as it would average the two opposite colors into a single blurry mess).

In the world of real-time games, this cannot be done through convolution matrices, otherwise it'd take too long. Instead, we do something clever - we use MIP Maps, lower-resolution versions of the original image, and interpolate between them! Since an extremely low resolution image is gonna have significantly less detail, when stretched it will naturally look like a blurry version of the original.

Of course, being rough or being smooth is not a case of "yes" or "no". There are all sorts of objects in between a flawless mirror and a rock. But thus is born the first result of the theory of microfacets, and our attempt at creating a shader based on the physical properties of real life objects. Instead of having a slide bar telling how much specular an object has, we now have this:

A slide bar telling the smoothness of an object. The smoother it is, the less blurry the reflection is.

And you can see that specular reflection there - instead of a fake reflection from an algorithm, this time it's the actual reflection of the sun in the panorama!

Something else of note is that the edges always seem a little brighter. This is because of the Fresnel effect. One of the many things discovered by Augustin-Jean Fresnel, the Fresnel Equations tell us that at grazing angles, no matter the other properties of an object, it'll always appear mirror-like.
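For a rough feel of that grazing-angle behaviour, real-time code commonly swaps the full Fresnel equations for Schlick's approximation. The snippet below is only a sketch and not something from the chapter: the function name is made up, and the base reflectance of 0.04 is an assumed stand-in for a plastic-like non-metal.

```python
import math


def fresnel_schlick(cos_theta: float, f0: float = 0.04) -> float:
    """Schlick's approximation of Fresnel reflectance.

    cos_theta: cosine of the angle between the surface normal and the view
               direction (1.0 = looking straight at the surface, 0.0 = grazing).
    f0:        reflectance when looking straight at the surface; 0.04 is an
               assumed value for a plastic-like material.
    """
    cos_theta = min(max(cos_theta, 0.0), 1.0)  # keep the input in [0, 1]
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


# Reflectance stays low for most angles and climbs steeply near 90 degrees.
for degrees in (0, 45, 80, 89):
    cos_theta = math.cos(math.radians(degrees))
    print(degrees, round(fresnel_schlick(cos_theta), 3))
```

Looking straight at the surface returns the base reflectance, while at a fully grazing angle the result approaches 1.0 regardless of `f0`, which is the brighter rim visible on the spheres.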
The math behind it is pretty intense, but part of the physically-based rendering pipeline is trying to mimic our real world physics as much as possible, so the Fresnel effect went from a gimmick used only in Velvet/Cartoon/Shield Effect/Atmospheric Shaders to a part of every object.

NEXT TIME: Rocks! Metals! The pains that every artist has to go through nowadays to deliver you 3D objects!

Elentor fucked around with this message at 05:43 on Sep 25, 2018 |
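Going back to the MIP Map trick from this chapter, here is a toy sketch of how a smoothness slider could pick a blur level. Everything in it is an assumption made for illustration: the "panorama" is a single row of brightness values whose length is a power of two, the function names are hypothetical, and the linear mapping from smoothness to MIP level is a simplification (engines generally prefilter each level more carefully).

```python
def build_mip_chain(texels):
    """Build lower-resolution copies by averaging neighbouring pairs.

    Assumes len(texels) is a power of two.
    """
    chain = [list(texels)]
    while len(chain[-1]) > 1:
        prev = chain[-1]
        chain.append([(prev[i] + prev[i + 1]) / 2.0 for i in range(0, len(prev), 2)])
    return chain


def sample_level(level, u):
    """Nearest-texel lookup at coordinate u in [0, 1)."""
    index = min(int(u * len(level)), len(level) - 1)
    return level[index]


def sample_blurred(chain, u, smoothness):
    """Blend the two MIP levels closest to the requested blur amount.

    smoothness = 1.0 reads the sharp original, 0.0 reads the 1-texel average.
    """
    lod = (1.0 - smoothness) * (len(chain) - 1)  # assumed linear mapping
    low = int(lod)
    high = min(low + 1, len(chain) - 1)
    t = lod - low
    return (1.0 - t) * sample_level(chain[low], u) + t * sample_level(chain[high], u)


# A dark HDR sky with one very bright "sun" texel.
chain = build_mip_chain([0, 0, 0, 1000, 0, 0, 0, 0])
for smoothness in (1.0, 0.5, 0.0):
    print(smoothness, sample_blurred(chain, 0.4, smoothness))
```

With full smoothness the lookup hits the sun texel directly; with zero smoothness it returns the average of the whole row, so the sun's energy is spread out instead of being created or lost.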

|

|

|

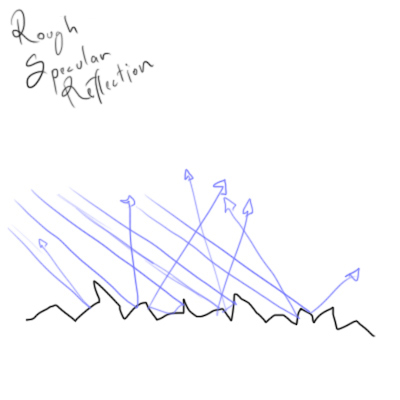

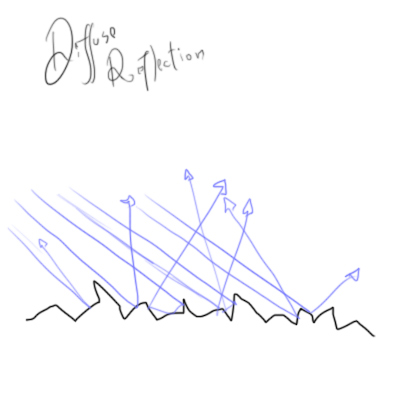

One thing. This is not usually called diffuse reflection*. Light being reflected in differently angled surface microfacets results in what is generally called glossy or rough specular reflection.

Diffuse reflection is another phenomenon entirely: it's the "reflection" from the light that was refracted into the material rather than being reflected at the surface. If the material is optically dense -- think plastic as opposed to water -- then this refracted light is quickly diffused inside the material, hence the name. While bouncing around inside, the light will either eventually be absorbed by the material as heat or eventually find its way back out. Exactly what light gets absorbed and what gets scattered back out depends on the wavelength, which causes the diffused light that comes out to have a different color than it originally did going in. This is the process that makes wood brown, paint red and so on. Diffuse reflection is in this way different to glossy or specular reflection, which reflect all wavelengths equally for non-metals and so are colorless/white.

Diffuse reflection is basically the same process as fog, just simplified for much denser materials. For offline there's typically 3 classes of increasingly complex and expensive rendering solutions to handle different cases: diffuse reflections for very dense media like clay or rocky planets, subsurface scattering for moderately dense media like skin or plastic, and various real volumetric scattering simulations for thin media like air or water. Games usually have a similar split, although the implementations used for subsurface scattering and volumetrics in games tend to be vastly different from offline -- unlike diffuse reflection where both games and offline do the same thing. Ultimately they're all modeling the same phenomenon, just at different scales.
Schematically at the very small scale, diffuse reflection looks something like

where the blue and green arrows are the process of diffusion, and the yellow is glossy reflection and glossy refraction.

One of the big open problems in computer graphics is around handling both glossy and diffuse reflection well at the same time. There's a coupled interaction where the diffusely reflected light is made up of only the portion of light which was not glossily reflected. Modeling that coupling is, it turns out, very hard to do without breaking the various rules about energy conservation you mentioned. There's a nice paper by Kelemen and Szirmay-Kalos from 2001 which can handle the simplest case of a single layer and can be generalized somewhat, but their approach requires storing a lot of precomputed values, plus it's very hand wave-y and just kind of massages the math so it works. It is still basically the state of the art. Handling the interaction between more complex arbitrary layers in a practical way is something we just don't know how to do.

Another thing is that diffusion is in practice only exhibited by non-conducting materials. Light penetrating into a conductor is extinguished almost immediately so diffuse reflection for metals and similar is effectively 0. Those materials acquire their color through an entirely different interaction: they have a wavelength-dependent glossy reflection which causes the glossy reflection to be colored rather than colorless.

* At least, it's not Lambertian reflection. The Oren-Nayar reflection model does behave somewhat like this schematic, except with diffuse Lambertian reflection in each microfacet. Oren-Nayar also typically uses a different (and simpler) statistical distribution for the flakes than the more modern models for glossy reflections. ON is a good model for surfaces that are very optically dense and also rough on a larger scale, like distant rocky planets.
Then it makes sense to model that larger scale roughness statistically in the same way we do the smaller scale roughness that causes glossy reflections. Anyhow, this is getting super technical and I should shut up.
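To make the coupling between the two terms a bit more concrete, here is a deliberately crude sketch: whatever fraction Fresnel sends into the glossy reflection is simply withheld from the diffuse term. This is not the Kelemen and Szirmay-Kalos approach mentioned above, it ignores roughness and multiple bounces, and the function names and the 0.04 base reflectance are assumptions for illustration only.

```python
def fresnel_schlick(cos_theta, f0=0.04):
    """Schlick's approximation; f0 = 0.04 is an assumed non-metal value."""
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def split_reflectance(cos_theta, albedo, f0=0.04):
    """Return (glossy, diffuse) fractions of the incoming light.

    The diffuse part only receives light that was not reflected at the
    surface, and the material's albedo then absorbs some of that remainder.
    """
    glossy = fresnel_schlick(cos_theta, f0)
    diffuse = (1.0 - glossy) * albedo
    return glossy, diffuse


# The two fractions never add up to more than the incoming light.
for cos_theta in (1.0, 0.5, 0.1):
    glossy, diffuse = split_reflectance(cos_theta, albedo=0.8)
    print(cos_theta, round(glossy, 3), round(diffuse, 3), round(glossy + diffuse, 3))
```

The point of the weighting is only that the sum stays at or below 1.0 at every angle; getting the balance physically right for rough or layered surfaces is the hard part described in the post.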

|

|

|

|

Xerophyte posted: One thing. This is not usually called diffuse reflection*.

Diffuse Reflection is how I was taught in school, well over 10 years ago. For example: https://study.com/academy/lesson/diffuse-reflection-definition-examples-surfaces.html

quote: Diffuse reflection occurs when a rough surface causes reflected rays to travel in different directions.

With that said, upon thinking about this topic I agree with you that I should clarify it. This is a very dense topic and since I'm just explaining things on the surface level there is not much of a point going deeper, since even the artists and technical artists don't. When I started implementing metallicity in my own shader before Unity I was asking questions about why metals behave the way they do, for example, and you start from where you stopped (traditional diffuse reflectance vs game diffuse reflectance) and then things start going deep into polarization, conductive behavior, and so on. One of my longest running on-and-off projects was a tool to convert between the metallic and specular pipelines both ways, which is not possible to do perfectly (due to the fact that you can create impossible materials in the specular pipeline) but I still wanted to approximate, and it was a nightmare. Since you showed knowledge about the way metals work, you should empathize with this.

At this point these terms are like colors in color theory. What is a "primary color" depends entirely on the context. In a traditional Lambertian reflectance model yes, I'd have to explain the difference, but in the world of game development Diffuse Reflection and Rough Surfaces are often used interchangeably, which in all fairness, you're right, should not be the case. I rewrote the chapter to match that.

Elentor fucked around with this message at 05:40 on Sep 25, 2018 |

|

|

|

I admit I'm not that familiar with the terminology of Unity's PBR model. I've used it a little bit since they collaborated with us on a presentation at SIGGRAPH this year, but that was basically just importing data and hooking up textures. Their Standard Shader uses specular and smoothness as parameter names for their microfacet lobe, and I see they do indeed describe smoothness as "diffusing the reflection". I would've actively avoided that term outside of things that map to BRDFs like Lambert or Oren-Nayar to prevent confusion but, sure, I can see what you/they mean.

Even if you take "diffuse reflection" to be "anything not a perfect mirror", the majority contribution to that for an opaque dielectric is still the internally scattered and colored light, as modeled with a Lambertian or similar, rather than the specular microfacet reflections as modeled with a Phong or the like. For an opaque dielectric Unity uses a Cook-Torrance GGX BRDF for rough specular/glossy and the Disney Diffuse BRDF for the internally scattered part that I'd normally call "diffuse reflection", with the latter the only one affected by albedo, Unity's primary material color parameter.

The study.com quote that "diffuse reflection occurs when a rough surface causes reflected rays to travel in different directions" is if not flat out wrong then at least severely misleading; Wikipedia's "diffuse reflection from solids is generally not due to surface roughness" is a lot saner. I would at least be extremely careful about thinking about microfacet-based Cook-Torrance BRDF lobes as being there for modeling diffuse reflections when working with any material parameterization. If you come across an element that says something about "diffuse color" or similar then it's going to be talking about light that's diffused through the material, rather than the rough microfacet reflections.
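For readers wondering what a GGX microfacet lobe looks like in numbers, below is a minimal sketch of just the GGX (Trowbridge-Reitz) normal distribution term, which is one factor of a Cook-Torrance BRDF. The function name is made up, `alpha` is the distribution's width parameter, and how any particular engine maps its smoothness slider onto `alpha` is not covered here and should not be assumed from this snippet.

```python
import math


def ggx_ndf(n_dot_h: float, alpha: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution function D(h).

    n_dot_h: cosine between the surface normal and the half vector.
    alpha:   width of the lobe; small values give a tight, mirror-like
             highlight, large values a broad, rough-looking one.
    """
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


# Smoother surfaces concentrate microfacets around the normal (n_dot_h = 1).
for alpha in (0.1, 0.5, 1.0):
    print(alpha, round(ggx_ndf(1.0, alpha), 3), round(ggx_ndf(0.7, alpha), 3))
```

This only describes how the microfacets are oriented, i.e. the rough specular part of the material. The colored, internally scattered light discussed in the post is handled by a separate diffuse term.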

|

|

|

|

Xerophyte posted: I admit I'm not that familiar with the terminology of Unity's PBR model. I've used it a little bit since they collaborated with us on a presentation at SIGGRAPH this year, but that was basically just importing data and hooking up textures. Their Standard Shader uses specular and smoothness as parameter names for their microfacet lobe, and I see they do indeed describe smoothness as "diffusing the reflection". I would've actively avoided that term outside of things that map to BRDFs like Lambert or Oren-Nayar to prevent confusion but, sure, I can see what you/they mean.

These terms took on, due to a poor understanding, different meanings when used inside the game industry. The first IBL shaders were extremely simple interpolations of MIP Maps. It doesn't help that these terms can be applied to both cases without losing their literal meaning. Unity is not wrong when it says that reducing smoothness diffuses the reflection, as it literally does diffuse the (specular) reflection. Is it conflicting with the Lambertian definition of Diffuse Reflection? Yes. Does it help the general understanding? You're right, it doesn't.

I rewrote the chapter in a way that matches the original intent of those naming conventions. Later on when my head hurts less I'll add a bit more to it with regards to naming conventions and their pitfalls, like the one we just fell into. This whole conversation reminds me of the nightmare that is explaining what an array of vectors is in Portuguese, because there is no word for array, so it's just called a vector, and a "vector of vectors" can have multiple meanings even if they're all technically right in some literal sense.

Either way thanks for pointing it out. I'll admit that after so many years of reading interchangeable uses of diffuse, albedo, reflection, specular, my brain fried for a bit.

Elentor fucked around with this message at 09:20 on Oct 4, 2018 |

|

|

|

Another two-minute paper. I really like these material synthesis networks, they feel like magic.  https://www.youtube.com/watch?v=UkWnExEFADI This one is a neural network that recreates a material and a bunch of properties from a single photo. It's obviously going to be just an approximation, but still it's pretty neat.

|

|

|

|

that "cameraphone flash/no flash" is a clever as heck way to get rough and easy depth information

|

|

|

|

Bonus Chapter - On Life

Next chapter will be up sometime next week. You know the drill, that might be tomorrow, that might be Tuesday. I'm not great with exact dates because I write, write, write and then I want to polish the chapter a bit more. Next chapter will also be the conclusion of Rendering Talk. I apologize for this series taking so long, and maybe for being too technical. I'm happy with it though, and I'd like to thank everyone involved (you, the reader), and Xerophyte for sharing some wisdom and correcting me where I made mistakes.

On a more serious note, a few things happened since the start of this thread. This is a very personal chapter, but I feel like it's the most important read of the thread, because in a way it culminates it:

1) I've hit a bit of a brick wall right now because of possible RSI. It's been a few months since I injured my hand and I haven't been able to do art properly. I can play games where I control the mouse with my arm and do wide angles (usually games with free camera or FPS), but I haven't been able to properly play games like Path of Exile or MapleStory 2 (which seems really nice!), which kinda sucks. Using the tablet has been a bit painful for a while now. TSID is at the point where it needs art that I can't provide, so I effectively need to hire someone. Until I plan this out properly, TSID is on a hiatus.

2) I finished the ShipGen. To a lot of the readers that seemed to be the main appeal. Despite the hiatus and the bad events, I'm overall happy with the turns this thread took because at least for me, the overall reaction to the ProcGen was a bit of a plot twist. Right now I need to decide what to do with it because I've been getting offers from a lot of companies and while I had gotten two offers before the post mortem, I didn't expect to see so many prospects. So at this point we're no longer in the realm of the project being just a hobby.
Which leaves me with a few actual life-altering decisions I need to make:

2a) I can sell the ProcGen for a considerable amount of money (by Brazilian standards) with exclusivity rights. That would probably get me the money needed to complete TSID, but I wouldn't keep the rights if I did so. To be honest, I'd even need to check that with regards to our laws here, and that's a can of worms I'm not interested in opening.

2b) I can license it without exclusivity rights. I've been strongly advised against that, as it'd effectively turn into a piece of software I'd have to maintain for a bunch of other companies. It would be very stressful, and while I could turn working on ProcGen software into a full time job, it's not an ideal option. But the offers I received are good enough that from my experience selling assets it'd probably set me up for a few years.

2c) I can work full time at one of a few companies. I would take this if I received an offer from a company I'm really excited about but that hasn't been the case yet - the companies I've liked the most are more interested in buying the project outright.

2d) I can work on something else on my own. This is the riskiest option but the one I'm leaning towards the most. Like I mentioned, the code from TSID is finished. I could sell it as one of those template games in the Unity Store but the whole thing is a very dear project of mine and I have great ideas for it that I want to see executed someday, and a lot of you guys have offered great ideas as well.

I'd say there's a certain irony in that the brick wall I hit was an art-related one, but I think that's the opposite of irony and more of a very fitting coincidence. The reason I started with ProcGen art is so I wouldn't have to do art in the first place, and now that the whole thing caught up to it the sheer amount of art assets needed is quite staggering.

For 2b, I could in theory make a company (technically I already registered one for these projects) focused on selling ProcGen software.
I have plenty of ideas and projects I want to do: ProcGen Music is something I've been researching for a while and I believe I have a far more powerful algorithm than the current cutting-edge neural network algorithms, but much like the ShipGen it'd take a long while to do it. But the market for this is quite good, and considering that doing freelance artwork seems to be going away in the future as my dominant drawing hand really hurts, this is definitely my best fallback plan.

For 2d, I can repurpose the code of TSID and do something else with it. I know this already works because in about a week I did a proof-of-concept where I used the entire backend to create a turn-based RPG that is at the moment fully functional (if with no graphics). If instead of 3D I go full lo-fi with it, I can make something sprite-based and extremely simple, and get started from where I stopped in the shmup, because even very basic shmup 2D artwork would still be much more straining than some basic 2D indie 8-bit graphics.

So what seems to be the most interesting idea right now for me is holding onto TSID and working on a lo-fi RPG until I have the means to support TSID's production. One of the ideas that I got was to have a traditional Turn-Based JRPG with the cell-based map of games like Opera Omnia and do a Turn RPG entirely focused on the gameplay aspect. That should be doable, fun and considerably faster as I already have the hard part entirely done. And more importantly, it would be soloable.

I reflect a lot about the thread and maybe post a lot about posting because these projects are effectively my life right now. The stuff I made here opened some doors that would not be available from me working in Brazil, and there's a lot involved in it. To me, games are personal in a way that is very important. Games united my family in times of hardship.
Games are part of our culture, and when choosing a venue to LP a game that I liked, I picked an SSLP knowing that it offered no financial reward whatsoever. At that point I (and a lot of others) considered YouTube because of a bigger public, and since then a lot of YouTubers became streamers, so the format has changed quite a bit over time for career gamers, which I chose not to be. I felt like I could convey my ideas better as a writer, and I genuinely like the SA Community. It's amusing to think that despite those choices, I made an LP that connected intimately with my career in a completely different way. This may not be the way I envisioned when I started the thread but it's a hell of a plot twist.

Either way right now I need to decide on the next steps. I feel like I should share this with all the folks who followed the thread so far.

Elentor fucked around with this message at 13:05 on Oct 13, 2018 |

|

|

|

Not saying that this is necessarily the way you should go, but if it's just art assets and the game loop is solid, you could put the game up as early access. That could fund the rest of development if there's enough interest.

|

|

|

|

Have you tried to use a trackball for the RSI? I think I saw you posting in the WoW thread a lot so presumably you play that game regularly. Me and a friend who both spend all work day at the computer had RSI flare up pretty bad when we got back into WoW. WoW isn't a precision clicking game, but it does have plenty of mouse movement and it's a game that encourages hours of continuous play. We're both trying trackballs to see if it helps.

|

|

|

|

FuzzySlippers posted: Have you tried to use a trackball for the RSI? I think I saw you posting in the WoW thread a lot so presumably you play that game regularly. Me and a friend who both spend all work day at the computer had RSI flare up pretty bad when we got back into WoW. WoW isn't a precision clicking game, but it does have plenty of mouse movement and it's a game that encourages hours of continuous play. We're both trying trackballs to see if it helps.

WoW is not a huge problem yet - I play games like WoW and PUBG with wide arm movements and in WoW I just sit still on WASD doing the rotations. Games that involve a controller or require finer mouse movement where I don't feel comfortable with low DPI are a nightmare. I pretty much had to quit PoE because of that, which sucks because this league looked great. One of my posts was asking for builds that worked with hand injuries; a totem build worked fine for a while but eventually I gave up. I'm not sure a trackball will help, but I'll try it out.

KillHour posted: Not saying that this is necessarily the way you should go, but if it's just art assets and the game loop is solid, you could put the game up as early access. That could fund the rest of development if there's enough interest.

This is an interesting idea but at this point in time I don't feel comfortable with EA as a means to gather money to do the game in the first place. There's a lot of stigma surrounding that given all the unfinished Steam titles, and I prefer to only release something once I have a 100% guarantee for the consumer that it'll be something good regardless of what happens. It's a weird can of worms which I'll have to give a lot of thought, but generally speaking I'm not that interested in selling a game in early access unless it meets those criteria.

|

|

|

|

So uh, this week I found that most useful water puddle images are expensive stock photos, either that or Google has been prioritizing the paid services/stock services in GIS. I'm waiting a bit more to receive permission from a few people to use some of their photos for educational purposes but I'm having a little less luck with that this time.

|

|

|

|

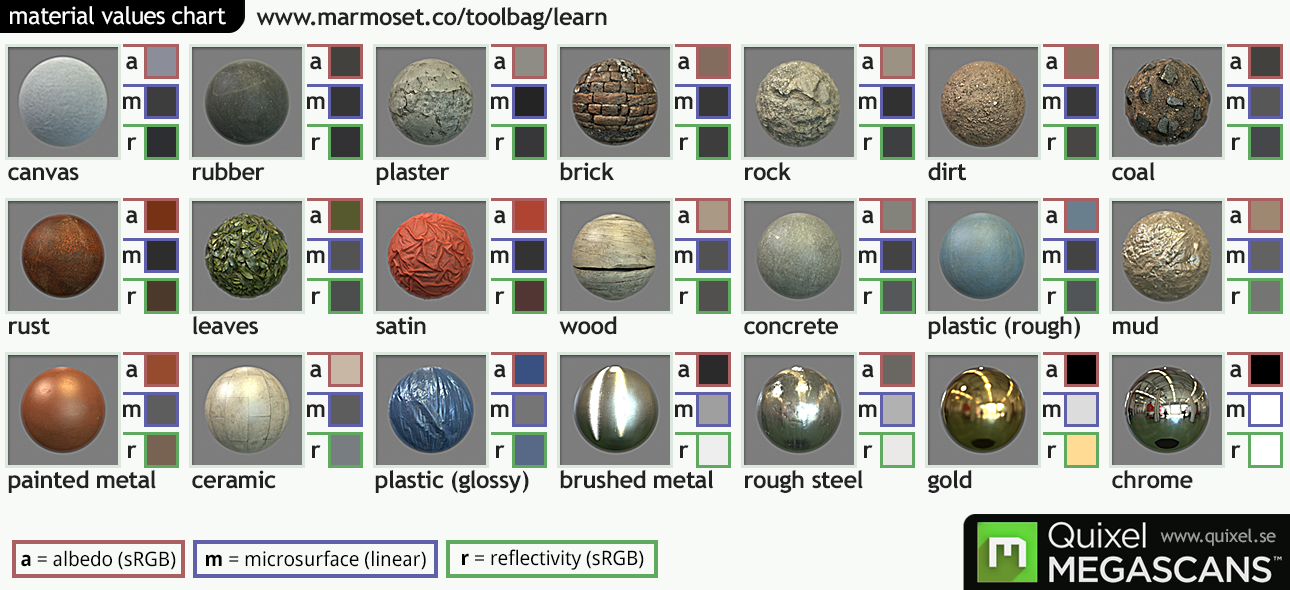

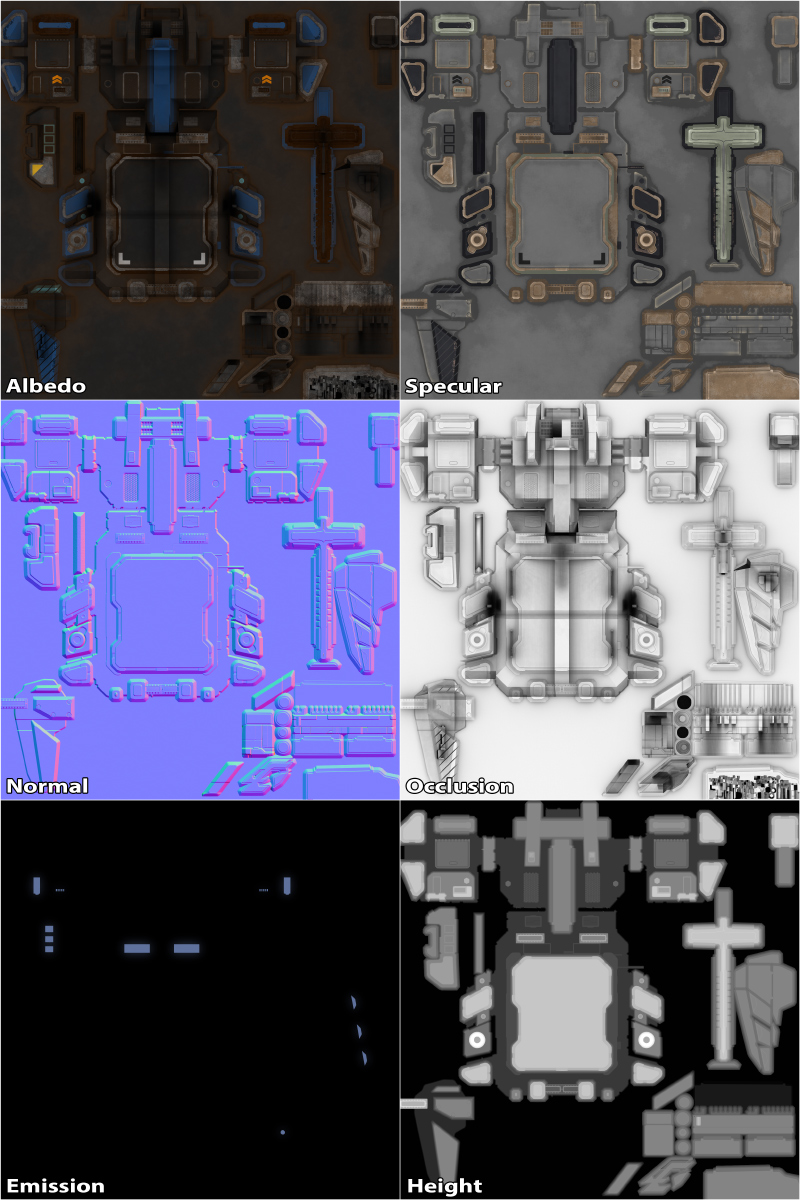

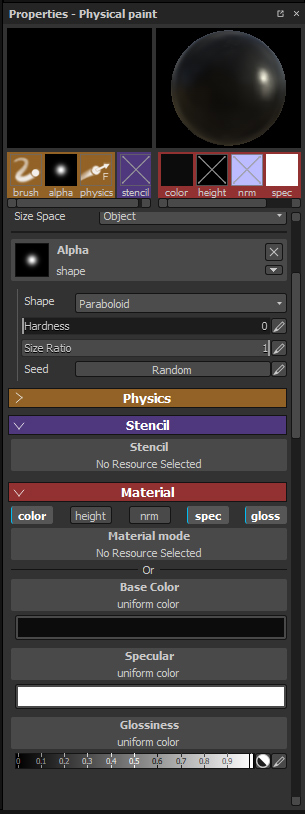

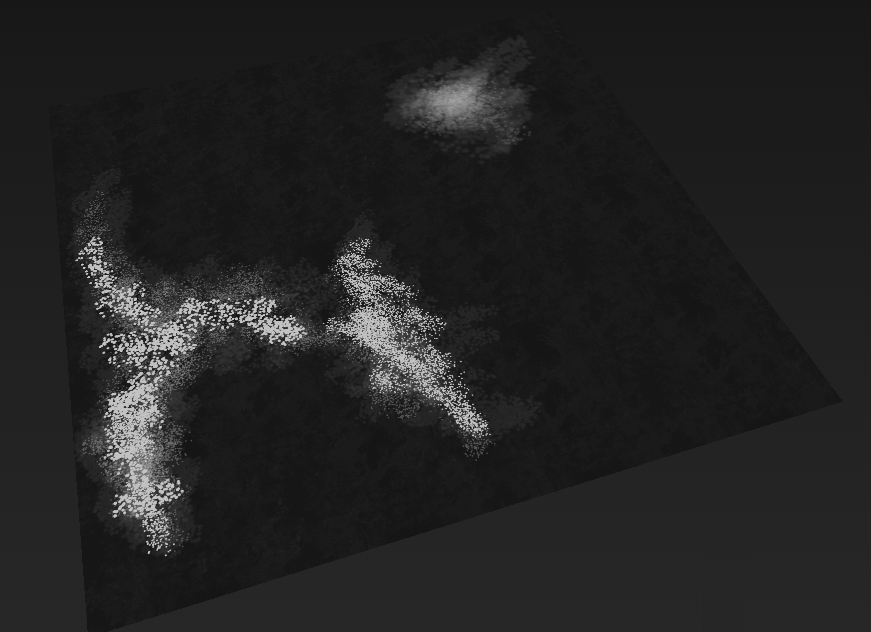

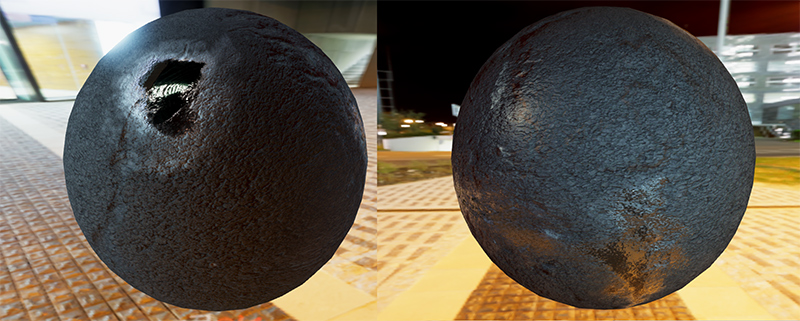

Chapter 37 - Real-Time Rendering, Part VI

Last Chapter we talked about the basis of PBR - having an image, in the form of a cubemap or panorama, and then using it to illuminate the objects in a scene. Today we wrap PBR up.

Probes

With Image Based Lighting up and running, the next step comes in: instead of using pre-defined skyboxes and panoramas taken from the real world, we create them from the game scene itself, either dynamically or baked ahead of time. Thus we have probes - you spread these across a scene, and they take 360 degree "pictures" of the scene around them, which are then used for reflections. As you walk around, the game interpolates from one probe to another.

Probes work really well in some cases, but the closer or tighter the room is, the weirder the effect gets (after all, these are reflections from infinitely distant objects). If you've ever played Overwatch, pay attention to the ground reflections, especially water puddles or mirrors. You'll see that they align with the background - but not quite. They more or less align with where things should be, but the reflections seem much more distant.

Nowadays there's a lot of mixing of IBL with Real-Time Reflections done through Post-Processing. In the future, there'll be even more mixing thanks to Raytracing. This video promoting NVIDIA's new technique goes into this a lot and even though it's for a demo (with some over-the-top reactions), it's a good watch.

Metal

One of the really important aspects of having a light-conserving model with separate Diffuse and Specular reflectance values is that you can now have something that games had trouble with in the past - Metallic objects. Metals are... weird. They are very unintuitive at first under the PBR model. The reason is that Metals possess no diffuse reflection at all.
So when painting the color channel, which is typically understood as the color you want the material to be, and you're painting, say, a red fabric with metallic gold decals, you would previously paint it red and then switch to yellow for the golden part. However, as we can see in this chart:

Gold is listed as having black albedo. If that chart makes your head hurt that's understandable, but at a glance you can see how unintuitive painting a texture would be now. Quoting Wikipedia:

quote:Few materials do not cause diffuse reflection: among these are metals, which do not allow light to enter; gases, liquids, glass, and transparent plastics (which have a liquid-like amorphous microscopic structure); single crystals, such as some gems or a salt crystal; and some very special materials, such as the tissues which make the cornea and the lens of an eye. These materials can reflect diffusely, however, if their surface is microscopically rough, like in a frost glass (Figure 2), or, of course, if their homogeneous structure deteriorates, as in cataracts of the eye lens.

To make things easier for the artist, a new Pipeline was then created - instead of providing the color of the diffuse and specular reflection, you can now provide the final proportion of reflected color (albedo) and whether the object is a metal or not (which Unreal Engine exposes as a Metallic input, usually referred to as metalness). And then we have more charts.

Now you can paint the red fabric red, and the gold decals gold, and all you do is paint the metalness texture black for the fabric and white for the gold. Generally speaking metalness is treated in terms of black and white since an object either is or isn't metallic, but this isn't always the case when mixing materials - light impurities or dirt on a metal can use the greyish shades to interpolate.

These are the two main pipelines when creating a standard material in most engines - Specular Color vs Metalness.
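To make the relationship between the two pipelines a bit more concrete, here's a minimal sketch of how an albedo + metalness pair can be turned back into diffuse and specular colors. This is the commonly used conversion rather than any particular engine's exact code; the 0.04 reflectance for non-metals and the RGB values for the fabric and the gold are illustrative assumptions, and the function names are made up for this example.

```python
# Minimal sketch: convert the Metalness pipeline's inputs (albedo, metalness)
# into the Specular Color pipeline's inputs (diffuse, specular).
# ASSUMPTION: non-metals reflect about 4% of light at normal incidence,
# a convention used by several engines, not a universal constant.
DIELECTRIC_F0 = 0.04


def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def metalness_to_specular(albedo, metalness):
    """Return (diffuse_rgb, specular_rgb) for an albedo RGB and a metalness in [0, 1]."""
    # Metals have no diffuse reflection, so diffuse fades to black as metalness rises.
    diffuse = tuple(c * (1.0 - metalness) for c in albedo)
    # Non-metals get a small grey specular; metals use the albedo as their specular color.
    specular = tuple(lerp(DIELECTRIC_F0, c, metalness) for c in albedo)
    return diffuse, specular


# Illustrative (hypothetical) colors for the red fabric and the gold decals.
RED_FABRIC = (0.60, 0.05, 0.05)
GOLD = (1.00, 0.77, 0.34)

fabric_diffuse, fabric_specular = metalness_to_specular(RED_FABRIC, 0.0)
gold_diffuse, gold_specular = metalness_to_specular(GOLD, 1.0)
```

With metalness at 0 the fabric keeps its red diffuse and gets a faint grey specular; with metalness at 1 the gold ends up with a black diffuse and a gold-colored specular, which is the "black albedo" entry from the specular chart. Values in between blend the two, which is where the greyish shades for dirt and impurities come in.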
Other than that some engines use Roughness, some engines use Glossiness (basically they're inverted).

What your average game material looks like

With all that said, let's take a look at what a game material might look like. These are the textures used for the Destroyer:

The Height Map can go unused for most materials (in fact, I don't use it for the Destroyer, but it's worth pointing out it exists). Sometimes, though, when you need Parallax or Tessellation, that's where the Height Map comes in. FFXIV uses parallax effects on the ground to give it a sense of depth. These work particularly well in ground textures for stuff like stones and rocks so they look like they're popping out.

The Occlusion map is used to prevent the reflection of the environment in areas that are, well, occluded. The entire object is treated as exposed, so if there are self-shadows the occlusion helps prevent those dark areas from reflecting a bright sunny spot through the specular map.

As you may have noticed, that's a lot of maps. Hand-painting these materials is possible but it's very hard without some sort of automation. Programs like Substance Painter come in handy, since they allow you, with one brush, to paint multiple different textures (I really wish we had this in Photoshop already):

The PBR Pipeline allows for very easy creation of water-soaked materials, which were effectively impossible to do realistically in the past. This is because a water puddle is a very specific transition between materials (and it's actually still more complex in real life than the typical PBR allows, but at least we can have a good approximation now). Say we do something like this to the glossiness map of an asphalt texture (as well as other stuff):

And even with a very crude job like that, we can get something like this:

In the real world the water and the road don't merge into a single plane, as the water is filling a hole in the ground.
You could achieve a more realistic/complex result by using two different geometries (and a loooooot of tuning), but this material works fine the way it is. In our simplified version, the water-soaked asphalt starts losing roughness (as a water pool is glossy). Depending on the environment, as the asphalt material becomes less rough you see a huge shine of the specular reflection all over the edges before it converges. It's a quite amusing effect.

Because most of the photos on Google are either stock photos that require licenses or 3D renders, I'll leave you with this link: https://www.google.com.br/search?tbm=isch&q=asphalt+water+puddle

At the moment there are a few quite beautiful pictures around that illustrate this well. Look at this one: https://www.flickr.com/photos/68147348@N00/14333421271

If you take a look at some of the GIS results, you will notice that when not reflecting a bright sky, or depending on the angle or environment, the asphalt looks darker instead of brighter, especially as the water is drying out. Xerophyte can probably correct me on this or explain better, but that's generally speaking due to the porosity of the material. Objects like asphalt, sand or fabric have high porosity, so when soaked, the wet area will appear darker due to the water's higher refractive index making the object absorb more of the incoming light. Source #1 #2

This blog post has a particularly good photo: http://runnerjimlynch.com/a-day-off-of-running-a-walk-on-the-beach/

You can see both the darkened look of the sand and its increased glossiness - even though the sand is reflecting the sky and gaining a blue tint, it's still darker! Do a search for Beach Shore Line and you can see all sorts of beautiful marks.
Some will look brighter, some will look darker, depending on the angle, soaking and environment: https://www.google.com.br/search?q=beach+shore+line

While there's such an enormous beauty inherent to those environments, I think there's also an enormous beauty in appreciating how all these light processes work together to deliver us all the scenery we witness every day. From the Rayleigh scattering in our sky to the minute bounces and reflections that light has to undergo in each object before we get to see how they look. Even in the areas where light is blocked and its different parts, there's a boundless beauty that our eyes can capture, and while I'm far away from understanding and appreciating the whole of the process behind that beauty, I am happy to be blessed with being able to learn about that, and I hope over the past few posts you were able to learn something.

This beauty is one of the main reasons that attracted me to this field. There's a phrase that is very common and has a lot of variants - we are a way for the universe to experience itself. I, particularly, like the poetry in Carl Sagan's speech.

Through Pixel Shaders we've been able, in games, to approach reality, step by step. And while we're far away from reproducing the real world faithfully, each step is beautiful in its own way. There's something in translating the things we observe to algorithms, formulae and numbers that I find poetic: seeing the minimalism in something so deep and complex, optimizing a crude approximation to high FPS and then refining it to make it less crude.

Funnily enough, I'm not that interested in playing realistic games. I can certainly appreciate them and find the beauty in them, and I love to read and work on all these technologies, but I like to play with more abstract representations, and let the imagination fill the gaps. But I appreciate the poetry behind the numbers, and even the plane of complex numbers can reveal hidden beauties if we play with it just enough.
There's no doubt that the modern art pipeline is way different from what it was a while ago, but I love how far things have come, and I can't wait to see what awaits us all in 10, 20 years. In the meantime...

The Deep End

https://www.shadertoy.com/ is probably one of the most amusing websites. It allows you to code shaders and test them in real time in your browser. For the most part everything is pure code - you can add some sounds and use some textures but no models, so every 3D model you see is created through math. You can just browse around the main page and see all the incredible stuff.

There's this Mario recreation (volume warning): https://www.shadertoy.com/view/XtlSD7

This fantastic rendering: https://www.shadertoy.com/view/XdsGDB

This acid trip (also volume warning): https://www.shadertoy.com/view/XsBXWt

This shader that renders its own code: https://www.shadertoy.com/view/llcyD2

And last, but definitely not least, look at this heavy weight behemoth: https://www.shadertoy.com/view/4ttSWf

Or pretty much anything iq does: https://www.shadertoy.com/view/ld3Gz2

Some more Resources:

DONTNOD has a Rendering model that adds Porosity to it so they can make more realistic Wet Surfaces. You can read about it here:
https://seblagarde.wordpress.com/2013/03/19/water-drop-3a-physically-based-wet-surfaces/
https://seblagarde.wordpress.com/2013/04/14/water-drop-3b-physically-based-wet-surfaces/

These can get a bit more technical than my posts but they yield very nice results.

And one last material chart, because for a while they were everywhere in the life of a Real-Time 3D Artist:

Elentor fucked around with this message at 01:09 on Oct 21, 2018 |

|

|

|

Elentor posted:https://www.flickr.com/photos/68147348@N00/14333421271

That sounds right to me. The primary color of most non-metal materials is caused by light entering the material and being scattered inside. How that scattering affects different frequencies of light determines the color we perceive. Most to all of the scattering happens when light hits an index of refraction discontinuity, like between air and glass, or membranes of a cell. The greater the discontinuity, the stronger the reflection. Water has an index of refraction closer to most dielectrics than air, so replacing air-thing interfaces inside the material with water-thing interfaces means light tends to penetrate further down before it finds its way out again, which makes the overall appearance darker.

That's actually the opposite of the effect increased index of refraction usually has. Primary reflections, like light on the surface of a liquid, a window or a gemstone, will be brighter the higher the IOR of the material since the air-material IOR discontinuity will be greater. You should be able to get the opposite effect for pores too: if your porous material has an IOR of 1.2 and you pour some magical liquid diamond with an IOR of 2.4 on it then you've increased the discontinuity compared to air and will get shorter internal scattering paths.

Elentor posted:And last, but definitely not least, look at this heavy weight behemoth:

Inigo is awesome, he's one of the co-creators of shadertoy itself and has been involved in all sorts of demoscene insanity. This one is a particularly famous 4kb demo from 2009 where I believe he did all the graphics. He slowed down a bit since he sold out to the man, i.e. Pixar and Facebook, but good for him. He made a timelapse of the development of the snail scene which I really liked, since it's hard to fathom how he got there when seeing the finished stuff.
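For anyone who wants numbers for the index-of-refraction argument in this post, here's a rough sketch using the Fresnel reflectance at normal incidence, R = ((n1 - n2) / (n1 + n2))^2. It only looks at a single flat interface hit head-on, so it's a back-of-the-envelope illustration and not a model of real scattering inside a porous material. The IOR of 1.5 for "a typical dielectric" and 1.33 for water are assumed round values; 1.2 and 2.4 are the ones from the liquid diamond example.

```python
def fresnel_normal_incidence(n1, n2):
    """Fraction of light reflected at a flat interface between media with
    indices of refraction n1 and n2, for light hitting it head-on."""
    return ((n1 - n2) / (n1 + n2)) ** 2


AIR = 1.0
WATER = 1.33       # assumed round value
DIELECTRIC = 1.5   # assumed "typical" non-metal, e.g. glass-like

# Dry pores: air-thing interfaces inside the material.
dry = fresnel_normal_incidence(AIR, DIELECTRIC)
# Wet pores: water-thing interfaces, a smaller IOR discontinuity.
wet = fresnel_normal_incidence(WATER, DIELECTRIC)

# The "magical liquid diamond" case: IOR 1.2 material, IOR 2.4 liquid.
POROUS, LIQUID_DIAMOND = 1.2, 2.4
pores_in_air = fresnel_normal_incidence(AIR, POROUS)
pores_in_diamond = fresnel_normal_incidence(LIQUID_DIAMOND, POROUS)
```

Under these assumptions each dry interface reflects about 4% of the light and each wet one well under 1%, so light in the wet material gets turned around less often and travels deeper before escaping, which fits the darker look. The liquid diamond case goes the other way: roughly 0.8% per interface in air versus about 11% in the diamond.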

|

|

|

|

thanks so much for diving deep into this world and bringing back a useful field guide and good links i think that basically anything millions of people care about is interesting, because by definition such a thing will have certain features like interpersonal drama, high-level math (what would the exception be? it sure as hell ain't sports), and all kinds of other stuff that nobody hates all of. so even though i have no particular interest in game design and really hardly even play them these days tbh i really geek the gently caress out for your posts. because they're good. good content, good presentation edit: not stating that very well, i guess i'll lay out just a few examples of what i'm talking about -ive never played tabletop RPGs ever, i don't think, but i enjoy reading about that industry's history, drama, and sometimes detailed mechanics discussions can get into ok not high-level math but at least statistics. still, this like everything else these days where dollar numbers in the seven figures plus are sloshing around probably has analytics going on in the background, unless all the companies are as hapless as people on SA make them seem -i don't particularly care for professional wrestling but i enjoy reading about the business drama, the history of the thing, and tricks of the trade -i don't like reality tv but i like reading about that industry's history, what's worked and what hasn't and why, poo poo like that so on, so forth. but i do like the stuff you're talking about so it's even more fun and thanks to you too aboveposter for adding so many great details and collaborating so well with the op on this journey oystertoadfish fucked around with this message at 04:08 on Oct 21, 2018 |

|

|

|

Xerophyte posted:That sounds right to me. The primary color of most non-metal materials is caused by light entering the material and being scattered inside. How that scattering affects different frequencies of light determines the color we perceive. Most to all of the scattering happens when light hits an index of refraction discontinuity, like between air and glass, or membranes of a cell. The greater the discontinuity, the stronger the reflection. Water has an index of refraction closer to most dielectrics than air, so replacing air-thing interfaces inside the material with water-thing interfaces means light tends to penetrate further down before it finds its way out again, which makes the overall appearance darker.

Oh I didn't know that that was the same person as who made elevated. Seriously, you folks should check the kind of stuff this Inigo guy does. They're out of this world. Completely unreal.

oystertoadfish posted:thanks so much for diving deep into this world and bringing back a useful field guide and good links

I really appreciate your kind words and I'm glad that the read was enjoyable. I really enjoy reading about assorted stuff as well. I think in a way there are so many fields that link with each other, and it's extremely beautiful to me.

|

|

|

|

Elentor posted:Oh I didn't know that that was the same person as who made elevated. He has a website with in-depth explanations for a lot of the tricks involved in the things he's done over the years, including a presentation on how elevated was made. The site is a great resource if you want to try your hand at coding your own distance field ray marcher, and who wouldn't?
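If you're wondering what the core of a distance field ray marcher even looks like, here's a minimal sketch of the basic loop (usually called sphere tracing). It's a toy in Python against a single sphere, not how anything on his site or on Shadertoy is actually written, and the scene, step count and thresholds are all arbitrary assumptions.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 3.0), radius=1.0):
    """Signed distance from point p to a sphere: negative inside, positive outside.
    The sphere's position and size are arbitrary example values."""
    return math.dist(p, center) - radius


def ray_march(origin, direction, sdf, max_steps=64, max_dist=100.0, eps=1e-4):
    """March along a ray and return the distance to the first hit, or None on a miss.

    direction is assumed to be normalized. At each step the distance field says how
    far the nearest surface is, so it is always safe to advance by that amount.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t      # close enough to the surface: call it a hit
        t += dist         # step forward by the safe distance
        if t > max_dist:
            break         # ray left the scene
    return None


hit = ray_march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = ray_march((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
```

Here the first ray points straight at the sphere and the second points away from it. A real shader runs this loop once per pixel and builds the scene by combining many distance functions, then shades the hit point.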

|

|

|

|

I just caught up with this thread and I just wanted to say hope all is good. Thanks for the really really interesting reads.

|

|

|

|

Hey, no problem. Thanks.

I've put TSID on a hiatus. I think I mentioned this before, but I realized that with the system I wrote I could get to writing an RPG. I had hit a brick wall on it for a while before I realized I could use TSID's system wholesale.

However my hand injury got a bit worse with time. I've been perma-stuck with this:

At this point I'm playing stuff right-handed (Path of Exile with totems and righteous fire. WoW with a USB pedal, a lot of mouse buttons) and working on stuff to automate typing/coding, doing voice commands, and so on. I think my career playing mobas and FPSes is over. It was a good run though, I'm happy about it.

My dev time has instead been going towards developing accessibility tools for myself. At this point I'm trying to spare my right hand as well, so my current open project is a tool to move the mouse with voice commands based on pitch and modulation. Not interesting stuff for a gamedev thread.

However, my arm injury and the realization that I can no longer play certain types of games with ease made me also start a cross-code to implement a major accessibility tool for my turn-based RPG: the ability to give voice commands to control everything in it. Since the project started as a text-based game, I also want it to be able to give you all the relevant information by voice and report everything through sound (enemy A has X HP and is a Y type, and so on). This way a blind person could play it, and a person without hands could play it as well.

I've been side-tracked by it and I'm not sure what to post, or whether to post anything. Most of my time is spent researching since those are fields of which I hold no deep knowledge (or any at all). I'm starting from my knowledge with doing bots to automate tasks and going from there.

Elentor fucked around with this message at 08:18 on Dec 11, 2018 |

|

|

|

I can't speak for everyone but I'm personally quite interested in hearing about the development of accessibility features for games. It's something I don't know much about, but I'd definitely like to.

|

|

|

|

Yeah, I think whenever you get the thought "I'm working on [thing related to game] which is not a core game concept, are people interested?" the answer is always yes. I really enjoyed the entire derail about graphics, together with the input from others, and I'm definitely interested in what you're learning about making games accessible!

|

|

|

|

Speaking of accessibility anyone played around with the xbox adaptive controller? Seems to have flown under the radar a bit but looks like you can do a bunch of interesting stuff with it. Relatively cheap I think too as far as accessibility controllers go.

|

|

|

|

|

Accessibility is definitely worth talking about, and it's not something I've heard too much about; I think it's a worthwhile subject to get into.

|

|

|

|

Thanks for the feedback, folks. When I have something more concrete beyond my research state I'll update with some content about accessibility.

|

|

|

|

if somebody wants to change the title to 'deep, specific, and interesting observations on divers aspects of video game design' that's really what I have loved and want from this. or just don't bother whatever project you're working on, you write well and contextualize like a badass so I'm confident all of it will be both interesting in its own right and draw all kinds of connections I never would have thought to examine the issue at hand in the light of

|

|

|

|

Sorry for no updates, just wanted to share this: https://belcour.github.io/blog/research/2018/05/05/brdf-realtime-layered.html Really interesting stuff. Apparently Unity is implementing a lot of high-end shaders in their new release. Elentor fucked around with this message at 19:39 on Dec 30, 2018 |

|

|

|

Also: https://www.unrealengine.com/en-US/blog/epic-2019-cross-platform-online-services-roadmap

quote:Throughout 2019, we’ll be launching a large set of cross-platform game services originally built for Fortnite, and battle-tested with 200,000,000 players across 7 platforms. These services will be free for all developers, and will be open to all engines, all platforms, and all stores. As a developer, you’re free to choose mix-and-match solutions from Epic and others as you wish.

This is a very, very interesting move from Epic. Epic seems to be trying to seize the moment, probably due to Fortnite's success. They're offering a lot of very interesting incentives for people to release games on their platform (including taking a 12% cut versus Valve's 30%). What I find interesting about this roadmap is:

quote:The service launch will begin with a C SDK encapsulating our online services, together with Unreal Engine and Unity integrations

That means that Epic is willing to offer an integration with a direct competitor of its engine. One would say the biggest competitor. This is incredibly telling of two things:

1) Epic is making a very aggressive move towards being a publisher, and seizing that sizeable market share, and sees that as a far bigger motivator than trying to monopolize the market with their engine.
2) They don't have the hubris not to do that.

Point 2 is key: it's something competitors don't seem too keen on doing even when it seems to be the right market move from an outsider's perspective. The notion that Epic would be offering its Online Services with a complete Unity integration doesn't seem absurd from a business point of view, but it still surprises me nevertheless because of how uncommon that is.

|

|

|

|

Elentor posted:I felt like I could convey my ideas better as a writer, and I genuinely like the SA Community. I'm glad of it

|

|

|

|

I started my 2019 GameDev .log thread where I dump my assorted stuff in it as I do them. If you want to follow my train of thought, I'll update it as I go just like I did with my 2018 one. My GameDev Stuff - 2019 Edition Once I have something worthwhile to make a full post I'll make a full fledged update again. Thanks!

|

|

|

|

I don't think this kind of stuff gets enough attention when it really should, but here it is: Deep Learning Super Sampling

I've posted other stuff before, like this, which is pretty much magic: https://www.youtube.com/watch?v=YjjTPV2pXY0

The interesting thing about DLSS is that NVIDIA trains it on a game-by-game basis and rolls it out with drivers. I'd be super interested to see the details of the data sets of each game.

Elentor fucked around with this message at 07:35 on Feb 23, 2019 |

|

|

|

https://www.youtube.com/watch?v=KgcU2HBOXAw This is a pretty nice demo that was put up by Unity, mostly to show off some of their new tech. They have half a dozen videos on the behind-the-scenes. It's not the biggest or most advanced demo but it's extremely procedural/L-Tree based and in many ways similar to the stuff I posted in this thread.

|

|

|

|

This is a fantastic GDC Talk about Path of Exile. Very fast, energetic, frontloaded and no bullshit. Probably the best GDC Talk I've ever seen - https://www.gdcvault.com/play/1025784/Designing-Path-of-Exile-to In other news, there'll likely be a few updates in the upcoming weeks because I'm developing something really cool right now and I plan to write about it. If you still follow the thread, God bless, and I hope you'll enjoy those updates when they come.

|

|

|

|

|


|

C-c-combo breaker. I'm still following along and clicking most of the videos. There's just not much to discuss, aside from the demos looking cool (being demos made to look cool rather than real applications), but you shouldn't be left with the impression you're just yelling into the void.

|

|

|