|

Just got a 290x, I fired up AC4 to see how it ran (at 2560x1440 and considering it's unoptimized as poo poo, decently) and I noticed I now have an NVIDIA: THE WAY IT'S MEANT TO BE PLAYED splash screen on startup where there was none before when I had my 570. That's... actually pretty clever.

|

|

|

|

|

| # ¿ Apr 25, 2024 14:57 |

|

Shaocaholica posted:Im kinda late to the game on this but what does mantle mean for the XBO and PS4? Could they support it or is the hardware incompatible? What would mantle bring, if anything, over the current developer APIs? Would it be politically impossible for MS to support, even on a future console? Mantle is a low level API so 'close to the metal' optimizations can be applied to GCN graphics cards in PCs. Console development has always been 'close to the metal' - think of how they managed to wring out performance that could play GTAV at 30FPS on graphics hardware roughly equal to a midrange consumer graphics card from 2005. I don't really think there's any incentive for it to be used in console development, the whole point of the project is to get console-like optimization out of PC hardware.

|

|

|

|

What would be the best aftermarket cooler for a 290X? Would like to make it sound less like a jet engine since it kind of defeats the object of me having a 'silent' case. I was thinking of the Arctic Accelero Xtreme 4 but it's ugly, takes up like 5 slots and isn't even that good at cooling the card apparently. Apparently the best solution would be some kind of closed loop liquid cooling solution - any suggestions that would fit a Fractal Define R4 well? Never done any kind of liquid setup before - what should I be looking at that won't spray water over my case and electrocute me? What's the kind of flexibility that I can get, since if it works well I might want to replace my hyper 212 cpu cooler. Getting a bit tired of the 290X having the heat and noise output of an Apollo launch.

|

|

|

|

Y'know, I was just about to post that selling off the 290X and getting a 980 or something would be more economical rather than trying to band aid the flaws endemic to the card. Plus I've always much preferred Nvidia's drivers and featureset compared to AMD's iffy implementations. If I was to do that I'm leaning toward the 980 as I have a 1440p monitor and SLI still hasn't swayed me as being worth the money.

|

|

|

|

DarthBlingBling posted:Well I'm assuming an old router doesn't have a 5GHz wireless mode. 2.4GHz will not cut it whatsoever Isn't 2.4GHz actually less affected by walls and other obstacles than 5GHz? Sure I heard that somewhere
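
For a rough sense of the 2.4GHz vs 5GHz question, here is a minimal sketch using the standard free-space path loss formula. It only models open air, so it ignores walls, antenna gain and interference (walls generally cost the higher band even more on top of this). The 10 m distance and the function name `fspl_db` are made up for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


if __name__ == "__main__":
    distance = 10.0  # hypothetical router-to-PC distance in metres
    loss_24 = fspl_db(distance, 2.4e9)
    loss_5 = fspl_db(distance, 5.0e9)
    print(f"2.4 GHz loss: {loss_24:.1f} dB")
    print(f"5 GHz loss:   {loss_5:.1f} dB")
    print(f"extra loss at 5 GHz: {loss_5 - loss_24:.1f} dB")
```

The gap between the two bands works out to about 6.4 dB at any distance, since it depends only on the frequency ratio.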

|

|

|

|

cisco privilege posted:I have one of these - it's ridiculous how much power they've stuffed in such a tiny, hand-held box, but drat if they didn't throw some lovely caps in there. Suiscon or whatever on the secondary is not cool. The semi-fanless PSU is quiet and runs very cool for being so small though which is kinda impressive I guess. Yeah are there any SFX supplies that aren't no-name or silverstone? Obvious reasons for the former and Silverstone products seem to be waaay overpriced for what they are from what I've seen. If I build a new PC at some point I'd love to stick it in something like the [url=https://www.ncases.com/]NCase M1[/url] since I don't use any expansion card slots anymore (GPU excepted) apart from a soundcard that I've perfunctorily transferred over for years. Obviously an ATX power supply with a full size graphics card in there is dicey to say the least and I'd probably prefer getting ripped off by silverstone. Generic Monk fucked around with this message at 17:49 on Apr 18, 2015 |

|

|

|

is there any way to see what programs are using your GPU? in the past few days my GPU usage in windows keeps going to 100% for no discernible reason, which since this is a 290x means that my PC's effectively functioning as a space heater. could it be the GTAV beta drivers I've got installed?

|

|

|

|

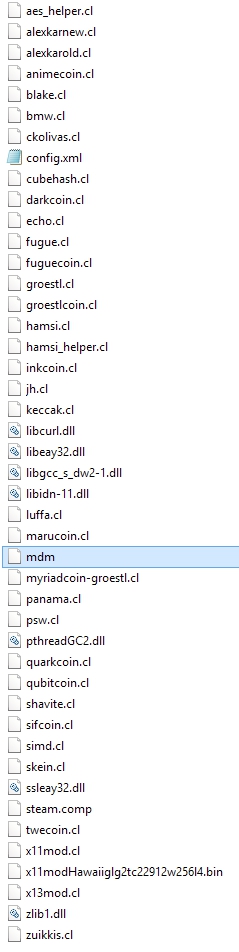

Don Lapre posted:Check for any unusual folders or programs running. Could be coin mining malware I'd done a virus scan so I was a little skeptical of this, but I looked through task manager and found a process called 'mdm' constantly eating about 5% of my CPU. Killed it and the GPU usage went away. Searched the filesystem for it and these turned up nestled in a subfolder of appdata:  oops  guess that's one upside of the 290X being deafeningly loud; if it wasn't it'd probably have taken me a few weeks to notice Generic Monk fucked around with this message at 16:47 on Apr 25, 2015 |

|

|

|

KakerMix posted:I feel this way too. The highest I'd go would be where I'm at now which is @3440x1440 at 34 or so inches. Same pixel density as a typical 27 in 1440 monitor, lots of pixels to push but also allows you to use the monitor for non gaming things. Like POST or just Windows. And 21:9 is wicked awesome for games. could just use OS X where the UI scaling's basically immaculate. failing that, win10's scaling purports to be decent. even in win8 when it fails it just seems to make poo poo a bit uglier
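
The pixel density claim in the quoted post is easy to sanity check. A minimal sketch, assuming both panels are measured by their diagonal in inches (the helper name `ppi` is just for illustration):

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal resolution in pixels over diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


if __name__ == "__main__":
    ultrawide = ppi(3440, 1440, 34.0)  # 34 inch 21:9 panel
    standard = ppi(2560, 1440, 27.0)   # 27 inch 16:9 panel
    print(f"3440x1440 @ 34in: {ultrawide:.1f} ppi")
    print(f"2560x1440 @ 27in: {standard:.1f} ppi")
```

Both come out at roughly 109 ppi, so UI elements should look about the same physical size on either screen at the same scaling setting.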

|

|

|

|

Don Lapre posted:TD sells the Asus one for 1029 and you can get about $50 cash back with fatwallet and boa cash back. somehow I doubt the audience for a $1000 graphics card cares that much about $20

|

|

|

|

xthetenth posted:Seriously doubt they'll run it at 3k or higher RPM. I really don't want to imagine what a 290 or 980ti blower would sound like at 100% fan speed since iirc neither crack 60% in normal use. just downloaded msi afterburner to try this out and holy poo poo it's nearly as loud as my loving vacuum cleaner

|

|

|

|

THE DOG HOUSE posted:you should also use that to OC it's already hot and loud enough thankyouverymuch

|

|

|

|

THE DOG HOUSE posted:Oh, 290 im guessing :x lol stock blower 290x as alluded to by the post i was replying to. considering how loud it gets in games i just assumed that was the highest it would go but nope, not even close

|

|

|

|

El Scotch posted:Good lord, OC.net is full of illiterate troglodytes. How hard is it to use simple capitalization and sentence structure? if you expected anything better than either unintelligible rambling from children (eg youtube) or intelligible awful opinions from disgusting 30yo men (eg reddit) from internet discourse i really don't know what to tell you

|

|

|

|

Gwaihir posted:(Derail, but) Witcher 1 isn't at all worth playing really compared to 2 and 3. It's actually pretty cool and good, as well as being a decent entry point to the series for those who don't want to read the books. Only major issues are that some sections are a bit long for their own good and the combat doesn't really work like it should because of the choice of engine. Worth a play though.

|

|

|

|

so uh is there any way to stop amd drivers from installing that loving raptr app whenever you update?

|

|

|

|

Kazinsal posted:Custom install, not express install. Uncheck AMD Gaming Evolved App. ty, was sure i tried that before

|

|

|

|

goodness posted:Trying to run an art program for a friend on a MBPro and it's not as well as I would like. Just need something good enough to run that program smoothly. do you intend to buy another mac or pc? you know you can't upgrade the gpu in laptops right?

|

|

|

|

goodness posted:I vaguely recall seeing an external GPU at a friend's awhile ago but I could be wrong? And I didn't know all Macs were unable to change their GPU. That is pretty dumb, it is his pc though so I was just trying to help him a bit. It's possible to run an external GPU through thunderbolt in a pretty unstable, unsupported configuration. You could do some fuckery with a bespoke thunderbolt chassis (expensive) or thunderbolt-expresscard>expresscard-pcie connectors if you're dead set on it I suppose. Intel are apparently going to officially support external GPUs with thunderbolt 3 but that's kind of irrelevant for your friend and his pre-existing laptop. Fauxtool posted:alienware does one too. Like all their products, its terribly marked up and covered in ledzz it also uses a proprietary connector only found on some alienware laptops so yeah, kinda lame Generic Monk fucked around with this message at 16:17 on Jul 20, 2015 |

|

|

|

Sixty-Proof posted:What is the consensus on the r9 390? Mainly, I want a beast card for gaming. But I am also curious if the 8GB VRAM will help me with multiboxing (running multiple windowed versions of the same game). why would you want to do this

|

|

|

|

Shumagorath posted:There were OSX point releases that were worse than Win10. Remember that one where the scrolling direction was arbitrarily changed to be one that made sense for touch screens when no Macs have them? Or when Yosemite introduced a new network stack that had to be rolled back after a round of seppuku? this makes sense for touchpads (i.e. most macs) and actually feels legit good. utterly bizarre if you've got a mouse plugged in though

|

|

|

|

japtor posted:This is why I'm tempted to just go with integrated graphics until HBM comes down to tiny low/midrange cards tbf you can fit a full size graphics card in an ncase m1 no problem, which is kinda what i'd expect someone to do considering how expensive it is

|

|

|

|

is there any hot gossip on when pascal is meant to be dropping?

|

|

|

|

SwissArmyDruid posted:AMD's supply woes work to their benefit again? tbf this is the first article i've seen that actually explains what 'hdr' in this context actually means beyond some sony executive saying 'oh hdr is going to blow you away man, it's baller' so it's just more bits per channel? ok

|

|

|

|

DuckConference posted:Brightness on every practical display is in a much smaller range than the range of brightness in the real world. Compare the darkest and brightest levels your monitor can produce to what you see outside on a sunny day vs. what you see in a darkened room. oh so it's what i thought it was from the beginning; some mythical display technology that is so nebulous in details of its actual manner of execution and/or technical limitations that it might as well not exist. i'll reserve judgement until i see the "dude it's just 1 year away we promise" OLED schtick repeated at least twice, then i'll know it's not worth paying attention to KakerMix posted:Isn't this the problem that is solved by OLEDs though? As each pixel gives off its own light thus just turns off and WOW real black. supposedly this does for whites what OLED does for blacks. that being said i tend to find myself irked by lovely looking black levels on my screen more than i lament not being literally blinded every time I look at a picture of the sun, which seems to be what this purports to do repiv posted:Now that I think of it, consumer cards allowing fullscreen 10-bit output isn't a new thing. What exactly is AMD bringing to the table besides "wouldn't it be cool if consumer HDR monitors were a thing?" if you think that amd isn't desperate enough to talk up any feature that could possibly be construed as somehow better than their competition (no matter how small) then you don't know amd Generic Monk fucked around with this message at 02:36 on Dec 9, 2015 |

|

|

|

Col.Kiwi posted:There is nothing nebulous about 10 bit, the guy is just trying to put it in laymens terms apologies for any lack of clarity; i'm referring to this nebulous HDR display technology that there are no details of (as in the screen backlight) as opposed to 10bpc
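
On the 10bpc side of it, the arithmetic is simple. A small sketch of how many shades per channel and total colours each bit depth gives you (the function names are illustrative, nothing here is specific to any vendor's implementation):

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one colour channel can take at a given bit depth."""
    return 2 ** bits


def total_colours(bits: int) -> int:
    """Total RGB combinations when each of the three channels has `bits` bits."""
    return levels_per_channel(bits) ** 3


if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits} bpc: {levels_per_channel(bits)} levels per channel, "
              f"{total_colours(bits):,} colours")
    # 8 bpc:  256 levels per channel,  16,777,216 colours
    # 10 bpc: 1024 levels per channel, 1,073,741,824 colours
```

More levels per channel means finer steps between adjacent shades, which matters more once the same signal has to be stretched over a wider brightness range.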

|

|

|

|

repiv posted:Dolby Vision is a working HDR display technology, nVidia were showing off their cards in conjunction with a no doubt ludicrously expensive Dolby HDR monitor at SIGGRAPH this year. i guess they're not pushing it super hard at the moment because it's even harder for the layman to conceptualise than 4K or OLED. the only really effective marketing strategy would be to ship screens out to stores so people could gawk at the brightest thing in the room, which probably isn't even close to economically feasible at the moment. gotta focus on 4k for the moment, with such electrifying launch titles as, uh, chappie and... amazing spider man? DrDork posted:While I suspect you are correct, the press release from AMD makes mention of mass-market HDR monitors being "on track for 2H2016," with notes about monitors with a nit range of 0.1-2000 via local dimming for "holiday 2016." Makes me wonder if they know something we don't, or they're just making really optimistic guesses to help build interest. 'mass market' makes a lot more sense when you define 'mass market' as 'under $10,000.' also is there any technical reason why a monitor can't have a variable refresh of up to 60Hz and just be sold as a nice visual quality value add to eliminate any tearing or stutter, as opposed to only being available as THE ULTIMATE 300Hz MLG QUICKSCOPE EXPERIENCE. the only company i see doing anything close to this is apple with the ipad pro's display, and even then they only market it from the power saving angle. Generic Monk fucked around with this message at 03:01 on Dec 9, 2015 |
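
To put the 0.1-2000 nit figure quoted above into more familiar terms, here is a quick sketch converting a black level and peak brightness into a contrast ratio and photographic stops. The function names are illustrative and the numbers are just the ones from the quoted press release, not measurements.

```python
import math


def contrast_ratio(min_nits: float, max_nits: float) -> float:
    """Peak brightness divided by black level."""
    return max_nits / min_nits


def dynamic_range_stops(min_nits: float, max_nits: float) -> float:
    """Dynamic range in stops, where each stop is a doubling of brightness."""
    return math.log2(contrast_ratio(min_nits, max_nits))


if __name__ == "__main__":
    ratio = contrast_ratio(0.1, 2000.0)
    stops = dynamic_range_stops(0.1, 2000.0)
    print(f"contrast ratio: {ratio:,.0f}:1")
    print(f"dynamic range:  {stops:.1f} stops")
```

That is a 20,000:1 ratio, or a bit over 14 stops, assuming a panel could actually hit both ends at once.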

|

|

|

DuckConference posted:I saw a demo of a sony HDR display at a trade show, and it looked much better than the comparison "normal" panel, but the brightness of the "normal" panel was really poorly calibrated such that the blacks were gray so it was hard to tell. this was absolutely intentional repiv posted:There's no hard limitation, but if the panel can't be driven to a particularly low refresh rate before its quality starts degrading then it may not be worth the expense of including a VRR scaler. thinking about it the only people who even buy highend desktop monitors anymore are gamers and creative professionals, and the latter don't really have that much use for variable refresh rate. both groups are pretty much synonymous with 'expensive' but it's way harder to find an unassuming, tasteful 'gamer' anything Generic Monk fucked around with this message at 03:29 on Dec 9, 2015 |

|

|

|

Malloc Voidstar posted:

hey at least it doesn't start at 40

|

|

|

|

Police Automaton posted:It has probably cropped up in this thread but I'm going to ask anyways - I've been an NVidia-Customer for a while but I want to try out ATI (AMD) again. The Radeon R9 390 looks attractive to me because all that VRAM would be of advantage for the kind of games I play and the price is also very attractive where I live vs. something like an NVidia 970. I'm not interested in 4k, 5k or whatever (and won't be for a long time) and I'm that kind of rear end in a top hat who's perfectly happy with locking all games to 30 FPS. It'd just be nice if I get good performance on 2560x1440 which from what I read, both companies will deliver. I'd also kind of like to throw AMD a bone because the market needs them. unless it's dramatically cheaper I'd really just suggest getting the 970; the performance is about the same but it's a nicer experience overall. last i checked the linux drivers were ropey but usable. the drivers have utterly broken some recently launched games for me until I've downloaded a hotfix; it's not a common thing but it's happened a few times this year. I have a 290x which afaik is basically the same card (w/ half the RAM) and it runs pretty much anything fine at 1440p with at least 30FPS. I really doubt that the doubled VRAM on the 390 is necessary, it seems likely that you'd saturate the GPU long before you came close to filling the VRAM. Generic Monk fucked around with this message at 19:25 on Dec 11, 2015 |

|

|

|

Police Automaton posted:Thanks for the feedback and links. Also, even as much as I like Linux for everything that isn't games, I still boot into windows for games. Even with my nvidia card now steam games that are multi-platform pretty much always perform worse in Linux, sometimes even considerably (as long they have any kind of graphics complexity to them that is, with games that would even run fine on integrated graphics, of course it doesn't matter). Even worse are the random-rear end bugs you'll get in Linux with such games sometimes which the developer usually doesn't know how to fix because they didn't really make the engine. Even as longtime Linux user, I've got no interest in Linux gaming for those reasons. With driver situation I really only meant the barebones stuff, like multimonitor support, video acceleration.. that kind of thing. Nvidia certainly isn't perfect there either. There has been a driver problem recently with the Linux nvidia drivers where it doesn't know how to send screens off into standby properly that are connected via display port. The backlight is left on. Don't get a freesync monitor. If the technology isn't standard in everything in a few years it'll at the very least be cheaper and somewhat mature. You CAN run windows in a VM and pass through the GPU if you have a haswell (I think) or later processor but it's an incredibly hardware dependent, weird process that happens entirely through the command line, with the user discoverability of actual buried treasure. Maybe someday someone will build a friendly looking installer that does it for you, or being as this is Linux complete 3/4s of a friendly installer which stumbles on for years before being put out of its misery. Just follow the ancient sage advice of mac os for most stuff, windows for gaming and linux if you're doing specialised CAD or computer science work or are a server or supercomputer. 
Linux on the desktop is still a 'technically working, barely' shithouse in my experience. Seamonster posted:Have one, its good enough for my 2560x1600 needs. I'd recommend setting up a custom fan profile in Afterburner if you're not comfortable or used to having your GPU idle at 55-60C - I don't like it when fans shut off completely amd cards are not only rated to run at 50ish, my 290X was DESIGNED to idle at 50ish. you're not really killing the card and are actually taking advantage of the extra money you spent on that cooler Generic Monk fucked around with this message at 00:00 on Dec 12, 2015 |
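
As an illustration of how hardware dependent the GPU passthrough route is, a common first check is whether the kernel exposes IOMMU groups at all, and which devices share a group with the GPU. This is a rough sketch, assuming a Linux host with the usual sysfs layout under `/sys/kernel/iommu_groups`; the function name is made up and this is nowhere near a full passthrough setup.

```python
from pathlib import Path


def list_iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the PCI addresses of the devices in it.

    Returns an empty dict if the directory is missing, which usually means the
    IOMMU is disabled in firmware or on the kernel command line.
    """
    base = Path(root)
    groups: dict[int, list[str]] = {}
    if not base.is_dir():
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if group_dir.name.isdigit() and devices_dir.is_dir():
            groups[int(group_dir.name)] = sorted(p.name for p in devices_dir.iterdir())
    return groups


if __name__ == "__main__":
    found = list_iommu_groups()
    if not found:
        print("no IOMMU groups found, passthrough is unlikely to work as-is")
    for number in sorted(found):
        print(f"group {number}: {', '.join(found[number])}")
```

If the GPU shares a group with unrelated devices, things generally get harder, which is part of why the whole process depends so much on the specific board and CPU.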

|

|

|

Police Automaton posted:Yeah, 50C isn't really that hot for stuff like this. There's lots of old electronics going through my hands which have ICs that happily worked at such temperatures and more for 30 years, regularly. Without any kind of direct cooling. TBF I tried fedora... 22? recently and it was remarkably slick - people hate on gnome but it's really come on leaps and bounds in providing an experience that feels like it belongs in the 21st century. I can't help but loathe poo poo like Ubuntu and Mint though. yeah, I'm probably being too hard on it. it's absolutely fine for 90% of the poo poo you do day to day on a pc and even the sound more or less works at this point. anything graphics related is still a huge clusterfruitcake imo tho

|

|

|

|

Dogen posted:Serious question: is there enough difference between 120 and 144 that worrying about that is worth it? no
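
The reasoning behind that "no" is mostly frame time arithmetic. A quick sketch, with an illustrative helper name:

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time each frame stays on screen, in milliseconds."""
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    for hz in (60, 120, 144):
        print(f"{hz} Hz: {frame_time_ms(hz):.2f} ms per frame")
    saved = frame_time_ms(120) - frame_time_ms(144)
    print(f"120 -> 144 Hz saves {saved:.2f} ms per frame")
```

Going from 60 to 120 Hz cuts over 8 ms off each frame, while 120 to 144 only trims about 1.4 ms, which is why the jump is hard to notice.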

|

|

|

|

repiv posted:http://www.pcper.com/news/Graphics-Cards/AMD-launches-dual-Fiji-card-Radeon-Pro-Duo-targeting-VR-developers-1500 wow sony really went off the rails with that memory card pricing

|

|

|

|

Paul MaudDib posted:NVIDIA is sitting on an 80% marketshare. Where does the 800 pound gorilla sit? yeah amd has a lot of potential great stuff lined up, with GCN finally coming into its own and zen possibly being not-poo poo. i'd like to say that there's not much chance that things could get much worse for them, but it's totally possible - they've made an artform out of lining up a big advantage and utterly blowing it

|

|

|

|

wolrah posted:

This is a dell issue? I thought this was just an issue with my bog-standard korean monitor, then when I got the dell I just thought it was an issue endemic to Win10. ffs e: i like that the official dell advice to fix this for their 4k monitor is to make it refresh at 30hz. lmao. http://www.dell.com/support/Article/us/en/19/SLN295708/EN Generic Monk fucked around with this message at 20:14 on Jun 6, 2016 |

|

|

|

Hungry Computer posted:It does seem to be a Dell issue, but it also seems to have the most impact on Windows 10. It actually causes Surface Pro 3s on W10 to occasionally BSOD when entering or exiting sleep mode if the monitor is plugged in. Of course none of the people affected by this at my work are willing to replace their Dell monitors or Surface. Yeah, win10 seems to be really sensitive to it. Even 8 was more respectful, same with OSX.

|

|

|

|

are backplates just a matter of course with high-end cards now? are they generally removable? i want a decent 1070 but I'm running a large PSU in my ncase M1, which means I can't use a backplate without loving my power cables right up. not that i'm going to buy a 1070 until they're actually available so uh, prob gonna be waiting a while

|

|

|

|

AVeryLargeRadish posted:Yes and yes. Though you must have an insanely tight fit if the 2-3mm of a backplate is going to make a significant difference. the modular power connectors are literally pressing against the back of my backplateless 290x maybe i'll just flog the sfx-l psu and just get a standard sfx model - if i end up getting a 1070 it's going to be peanuts compared to that anyway

|

|

|

|

|


|

AirRaid posted:I did a thing. I just ordered this myself. Hopefully it actually fits in my ncase M1. Does it have the 0 RPM mode that the twin frozr one has?

|

|

|