|

Elentor posted: Let's play a bit with System.Random. I wrote a script on Unity that will output a texture where each row corresponds to the first X states generated from Y axis used as a seed.

I'm kind of amazed at how well that worked out. You're basically (ab)using the fact that the C# System library uses a poor lagged Fibonacci generator as a feature to get a texture with visible periodic structure. Neat! LFGs are typically not very good generators, so it isn't terribly surprising that patterns appear when you start doing cuts through the sample space.

I've done some work with procedural texturing (I worked on the procedural wood model that's used to render this thing, for instance) and I would probably have taken a completely different tack to generate a space station-y texture. Subdivide the height dimension into floors, texture each floor with some combination of rectangular tiles and Manhattan distance Voronoi cells, maybe? Anyhoo, the entire differential-Fibonacci-modulo approach is elegant and a lot less work. It can probably be generalized to produce similar patterns with any Fibonacci-like sequence, not just whatever C#'s System.Random does.

On RNGs, there's a distinction between state and entropy. An RNG with more state has a larger period (or can have, at least). An RNG with more entropy is harder to predict. I'd say that in graphics you typically don't care about entropy. Usually the requirements for noise in graphics are the exact opposite of crypto: you want a predictable output that can be easily reproduced. Using hashes and fixed sequences with no entropy whatsoever is the norm. Noise quality is important in the sense that your noise should keep the spectrum expected for its class (white noise, blue noise, etc) for any subsequence of noise values you use, such as for each row in a white noise texture. You can get that with a well-formed (and much cheaper) hash function.
In either case, I definitely suggest avoiding anything platform specific like System.Random or /dev/urandom that doesn't guarantee a specific implementation. That way lies nondeterminism and pain.

If you do need an RNG then you still don't need a ton of entropy as such. You need enough bits of state that the inherent periodicity of any RNG doesn't become obvious and an algorithm that makes good use of those bits. Dom3's sounds-kinda-XORShift thing has neither, the Knuth-based LFG apparently used by C# probably has more state* but is pretty bad at using it. Still, there's no particular need for the RNG to be entropy-preserving like Blum Blum Shub (yes, that's an actual RNG type). You'll note that the other guy used a Mersenne Twister, which is easy to predict and doesn't preserve entropy but does have a well-researched distribution and is known to not have obvious correlations for a range of sampling patterns.

* The C# docs don't specify the exact implementation of System.Random so I don't know how lagged it is, but they do say that initialization is expensive which indicates that they're doing at least some of the priming required for an LFG with decent state.

E: I was bored, so I tried to prototype a procedural texture that uses a hierarchy of tiles and Voronoi cells on shadertoy. Needs a lot more work to combine them to something remotely good looking but it has a vague vehicle camouflage-ish pattern at least.

Xerophyte fucked around with this message at 05:46 on Sep 4, 2017
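To make the "state" part of the RNG discussion above a bit more concrete, here is a minimal sketch of an additive lagged Fibonacci generator. It is not the System.Random algorithm: the lags (24, 55), the 32-bit modulus and the LCG used to prime the state are all assumptions picked for illustration. The point is only that the generator carries 55 words of state and that each output is a trivially simple function of two of them.

```python
class LaggedFibonacci:
    """Additive LFG sketch: x[n] = (x[n-24] + x[n-55]) mod 2**32 (illustrative lags)."""

    SHORT_LAG = 24
    LONG_LAG = 55
    MODULUS = 2 ** 32

    def __init__(self, seed: int) -> None:
        # Prime all 55 words from the seed with a small LCG.
        # The LCG constants are a commonly quoted pair, used here as an assumption.
        x = seed % self.MODULUS
        self.state = []
        for _ in range(self.LONG_LAG):
            x = (1664525 * x + 1013904223) % self.MODULUS
            self.state.append(x)
        self.oldest = 0  # index of x[n-55] in the circular buffer

    def next_u32(self) -> int:
        # x[n-24] sits 55 - 24 = 31 slots after the oldest word.
        lagged = self.state[(self.oldest + self.LONG_LAG - self.SHORT_LAG) % self.LONG_LAG]
        value = (self.state[self.oldest] + lagged) % self.MODULUS
        self.state[self.oldest] = value  # overwrite x[n-55] with x[n]
        self.oldest = (self.oldest + 1) % self.LONG_LAG
        return value


rng = LaggedFibonacci(seed=2017)
outputs = [rng.next_u32() for _ in range(60)]
```

Because every output is just the sum of two earlier ones, slicing the output stream at the right stride exposes that structure directly, which is roughly the kind of correlation the texture trick exploits.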

|

|

|

|

| # ¿ Apr 25, 2024 11:58 |

|

Elentor posted:drat, that's a fantastic wooden texture and the whole thing looks very realistic. Something like this, I guess?

|

|

|

|

FractalSandwich posted: Great, another article going on about the theoretical pitfalls of randomness without providing a single example of how it's ever affected anything in the real world. What point is he trying to make, exactly, apart from "maybe don't manually reseed your PRNG with consecutive numbers", which seems like a pretty trivial observation?

Say, for instance, you have a game world and you're using a procedural heightfield. You want to know the height above sea level at a specific latitude and longitude (x, y), but you don't actually want to store that value permanently. You need to be able to do the computation height(x,y) without needing to know anything about the height anywhere other than at x and y.

He's saying RNGs are a very poor tool for that sort of situation. They're fine at generating a heightfield texture from scratch that you store, but often pretty crap if you want to just statelessly evaluate the field. That's not a particularly new insight, but since people occasionally do try to use RNGs for this sort of thing it's probably a point worth repeating.

I can't really speak to the quality of his xxHash function. Making a hash function is pretty easy so I've seen a few different ones over the years that have worked, and a few that have not. I can say that the quality of your hash function and how it stands up to being sliced and projected in different ways absolutely matters for the visual appearance when doing procedural anything. A poorly chosen hash will yield immediately repeating patterns and generally look like arse.
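As a rough sketch of what "statelessly evaluate the field" can look like: the snippet below hashes integer lattice coordinates and interpolates between the four surrounding lattice values. The mixer is an ad hoc one that borrows the avalanche constants of the MurmurHash3 finalizer; it is not xxHash and not the function from the article, and `hash_u32`, `lattice_value` and `height` are hypothetical names.

```python
import math


def hash_u32(x: int, y: int, seed: int = 0) -> int:
    """Mix two lattice coordinates and a seed into a 32-bit value (ad hoc mixer)."""
    h = (x * 0x85EBCA6B + y * 0xC2B2AE35 + seed * 0x27D4EB2F) & 0xFFFFFFFF
    # Avalanche steps in the style of the MurmurHash3 32-bit finalizer.
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & 0xFFFFFFFF
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & 0xFFFFFFFF
    h ^= h >> 16
    return h


def lattice_value(ix: int, iy: int, seed: int = 0) -> float:
    """Pseudo-random value in [0, 1] attached to an integer lattice point."""
    return hash_u32(ix, iy, seed) / 0xFFFFFFFF


def height(x: float, y: float, seed: int = 0) -> float:
    """Value-noise height at (x, y), computed from nothing but x, y and the seed."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    # Smoothstep weights hide the lattice a little better than linear ones.
    sx = fx * fx * (3.0 - 2.0 * fx)
    sy = fy * fy * (3.0 - 2.0 * fy)
    v00 = lattice_value(ix, iy, seed)
    v10 = lattice_value(ix + 1, iy, seed)
    v01 = lattice_value(ix, iy + 1, seed)
    v11 = lattice_value(ix + 1, iy + 1, seed)
    near = v00 + (v10 - v00) * sx
    far = v01 + (v11 - v01) * sx
    return near + (far - near) * sy
```

Nothing here depends on evaluation order or on any neighbouring sample having been computed first, which is exactly what a seeded sequential RNG can't give you without reseeding per point.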

|

|

|

|

Aw yeah, I always love seeing diffraction spikes in star fields. They're totally ingrained in what we think stars look like and also a complete fabrication in some sense. They appear in astronomy photographs because we use mirror telescopes and the top mirror in a mirror telescope is supported by a structure called a spider. A 4-legged spider causes those 4-spoke diffraction artifacts, with fatter spikes for fatter spider legs. The same patterns also appear when using regular cameras, but there the shape of the spike pattern instead depends on the aperture blade setup. For a sensor with a nice, round aperture -- like those of you with well functioning Human Eyeballs -- you'd get a symmetric, circular diffraction pattern (an Airy disk) and no spikes. You can generate the diffraction pattern from an arbitrary aperture by doing a Fourier transform of the 2D aperture mask function and squaring the magnitude, then scaling the resulting kernel up and down for different wavelengths (presumably pick one wavelength for each of R, G and B in a fake filter). Here's a page with diffraction patterns for a couple of different telescope variants. Wikipedia has some schematic examples for different camera aperture types. You can generate a whole heap of different filter kernels, approximating different sensor types if you want to have more than the cross pattern type. Probably you're well aware of all this and went with fat crosses because they look nice, I just think diffraction spikes are real cool and spacey.
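The Fourier transform recipe above can be sketched in a few lines. This is a deliberately naive pure-Python DFT on a tiny grid, so it is only usable as an illustration; a real implementation would use an FFT library and would also do the per-wavelength rescaling, which is left out here. The square test aperture and the function names are assumptions for the example.

```python
import cmath


def dft_1d(values):
    """Naive O(n^2) discrete Fourier transform of a sequence."""
    n = len(values)
    return [
        sum(values[k] * cmath.exp(-2j * cmath.pi * u * k / n) for k in range(n))
        for u in range(n)
    ]


def diffraction_pattern(mask):
    """Squared magnitude of the 2D DFT of an aperture mask given as a list of rows."""
    # Transform every row, then every column of the row-transformed data.
    row_transformed = [dft_1d(row) for row in mask]
    col_transformed = [dft_1d(list(column)) for column in zip(*row_transformed)]
    # col_transformed[u][v] holds frequency (u, v); store the result as pattern[v][u].
    return [
        [abs(col_transformed[u][v]) ** 2 for u in range(len(col_transformed))]
        for v in range(len(mask))
    ]


# A 4x4 square opening in a 16x16 mask (1.0 = light passes, 0.0 = blocked).
SIZE = 16
square_aperture = [
    [1.0 if 6 <= x < 10 and 6 <= y < 10 else 0.0 for x in range(SIZE)]
    for y in range(SIZE)
]
pattern = diffraction_pattern(square_aperture)
```

The zero-frequency term ends up at `pattern[0][0]`, so you would normally shift it to the centre before using the result as a filter kernel. For the square opening the energy concentrates along the two axes, which is the cross-shaped spike pattern; a spider or aperture-blade mask can be dropped in the same way.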

|

|

|

|

Karia posted: Hell, even path tracing is a weird approximation where they simulate photon bouncing backwards since it's less computationally wasteful.

* Caveat: the results are identical if the virtual materials you use behave like actual, physical materials in certain key ways. This is one of a few reasons the physically-based trend has been very strong in offline land: if you cheat too much you get unpredictable results between different rendering techniques, and we'd like everything to be predictable. Games are going more physical, but I don't believe any game uses models that are 100% energy conserving, perfectly reciprocal, etc, since those are comparatively costly and games don't have to care that much.

You can gently caress up post-processing in various different ways (like approximating a real bloom filter by processing an LDR image with a hard cutoff threshold and blur), which I think gives it an overly bad rap in video games.

The truth is we have to do lens flare, bloom, aberration, etc because, ultimately, your monitor is not as bright as the sun. This is probably a good thing as an 8000K monitor half a meter from your face would likely be uncomfortable. However, since we still want to render images of the sun, and reflections of the sun, and things lit by the sun, and still have people read those things as "very loving bright" even though they aren't, we absolutely, positively have to cheat. There is no other option. We can inform our cheats by how the eye behaves when it looks at bright things and emulate that adaptation effect, and by how cameras and imperfect lenses behave when there's something very bright in view, and I think as an industry we do a reasonable job most of the time. Sometimes you still get a JJ Abrams who wants to dial the lens flare knob to 11 but most people use their powers responsibly.

|

|

|

|

Elentor posted:If you have any fun anecdotes/trivia re: path tracing I'm all ears. I'm really interested in reading more about this stuff. Well, uh, jeez. I'm not entirely sure where to start with that one. If you or anyone else has something they're curious about then rest assured that I am both very fond of the sound of my own typing and full of questionable facts & opinions. Someone mentioned subsurface scattering, so let me just apropos of that post this classic animation showcasing what you can do with subsurface scattering in your path tracer and a soft body simulation: https://www.youtube.com/watch?v=jgLzrTPkdgI Xerophyte fucked around with this message at 07:19 on Jul 7, 2018 |

|

|

|

waifu2x is a weirdly good piece of tech that I'll never use because it's called waifu2x. It also led directly to this abomination of science.

Anyhow, I'm going to take this opportunity to ramble somewhat aimlessly about aliasing in computer graphics. Gaming people tend to talk a lot about geometry edge aliasing (jaggies and crawlies) where the image transitions from one mesh to another but the aliasing problem in graphics as a whole is a lot more general. To say nothing of base signal theory and all the other topics subject to aliasing issues.

The basic problem is that pixels are points (they are definitely not squares and thinking that they are may cause Alvy Smith to bludgeon you to death with his Academy awards. You have been warned), yet the world around us and in our video games tends to consist of nice continuous surfaces rather than regularly spaced points. Producing pixel images thus means reducing those continuous things to points, which is a process fraught with danger. Aliasing is specifically a problem that happens when you reduce continuous things that are changing very quickly to a sparse set of points.

As a very simple example, I wrote this shadertoy which draws a single set of concentric circles centered on the mouse and zooms in and out on them. As everyone knows, a single set of concentric circles looks like this: (Made extra aliased by timg. Thanks timg!)

The reason you get all that garbage is that the color bands start to vary much faster than the pixel grid, but the GPU still picks one color from one point to represent the entire pixel. This quickly goes very wrong and you get Moiré patterns which also occur in similar scenarios in games -- Final Fantasy 14 actually has some lovely Moiré wherever it has to render stairs, for instance. You can also see that the circles are all jagged even on the right side, because each pixel picks either black or white even though it should pick some sort of average.
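A small sketch of the concentric circle case, comparing "one color from one point" with an actual average over the pixel. This is not the shadertoy from the post; the ring function, the frequency and the 8x8 sample grid are assumptions chosen so the rings are much thinner than a pixel.

```python
import math


def rings(x: float, y: float, frequency: float) -> float:
    """1.0 on even-numbered rings around the origin, 0.0 on odd ones."""
    return 1.0 if int(math.hypot(x, y) * frequency) % 2 == 0 else 0.0


def point_sample(px: int, py: int, frequency: float) -> float:
    """What the GPU does by default: evaluate once, at the pixel centre."""
    return rings(px + 0.5, py + 0.5, frequency)


def supersample(px: int, py: int, frequency: float, n: int = 8) -> float:
    """Average an n x n grid of samples spread over the pixel's footprint."""
    total = 0.0
    for j in range(n):
        for i in range(n):
            total += rings(px + (i + 0.5) / n, py + (j + 0.5) / n, frequency)
    return total / (n * n)


# Ten rings per pixel: far more detail than one sample can represent.
aliased = point_sample(20, 0, frequency=10.0)
averaged = supersample(20, 0, frequency=10.0)
```

The point sample can only ever come out as pure black or pure white, while the average lands somewhere around mid grey, which is what a pixel covering ten alternating rings should look like. Note that a regular sample grid can itself alias against the rings at unlucky frequencies, which is one reason real renderers jitter or rotate their sample patterns.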
In general, this is pretty hard to solve because we don't know how much information there is for a pixel to average. Game and GPU renderers in general do it by trying to take the properties of the geometry they consider covered by a pixel -- material color, material roughness, emission color and intensity, surface normal, etc -- and trying to produce a single average of those things. They'll then pretend that doing math on these averaged properties is representative of doing math on the entire covered surface. This is of course incredibly and stupendously wrong. Mathematically it's pretending that f(2) + f(4) = 2f(3) for some unknown function f. However, in practice it turns out that the pixel-coloring lighting functions we're dealing with are frequently nice and kind so this happens to work. The remaining game aliasing areas are the big caveats where it doesn't.

Geometry Edges as mentioned. When drawing one thing the game doesn't know what geometry might be behind or in front of the thing it's currently drawing, so it can't average their properties. Nor would the average necessarily be all that useful if it could. MSAA will specifically look for geometry discontinuities and only do additional work there. It's also why level of detail is important: if you draw something with a lot of geometric detail that's smaller than a pixel then the averaging doesn't work, so in order to avoid aliasing you have to be able to swap out distant models for less detailed ones even if you don't need to for performance.

Reflections of Bright Things are trickier. Our averaging of the geometry properties is fine if and only if the lighting that's coloring that geometry doesn't vary much. Unfortunately, in a lot of typical cases things are lit by very small, very bright things, such as a luminous ball of plasma a very great distance away.
The difference between "slightly reflects the sun" and "does not reflect the sun" for something like polished metal is massive, and edges between the two conditions suffer the exact same problems.

Non-geometry material boundaries are somewhat application-specific, but if you've got some gribbly light sources next to some metal or similar then you'll often have jagged edges and shimmering if the game fails to blend between the two. MSAA doesn't necessarily help here. Final Fantasy 14 again has some lovely examples, like pretty much the entirety of Azys Lla (which is noticeable in that video, and way worse without YouTube compression).

[E:] Better example, if still from Azys Lla, because I like that FF14 zone. This is my trusty, uh, Accompaniment Node. One shot is up close, one is enlarged at a distance. At a distance the thin emissive elements are aliased to hell, since the one sample for the pixel will either randomly hit a red light or not. And that's with the fullscreen filter on which tries to smooth it out but can't keep up. A different renderer could structure its materials such that it can blend the emission and wouldn't necessarily have this particular problem.

Games of course work around these things. Lighting in games tends to be soft, because harsh lighting has aliasing issues. Materials in games tend to not be all that shiny, because shiny materials have aliasing issues. And so on.

Aliasing is one of those fun things that's also a problem over in offline render land, but our problem scenarios tend to be slightly different. A path tracer will have no problem taking a couple of hundred samples per pixel, but you can easily construct scenarios where there's enough significant detail that 1000 sample anti-aliasing is still not even close to enough. Say, for instance, you model a scratched metal spoon. You then place your lovely spoon on some virtual pavement on a virtual sunny day and place your virtual camera in a distant building.
You point the camera at the spoon. The sun is, again, very bright, and you will expect to see it glint in the scratched metal. Your renderer's odds of actually finding a particular scratch on the metal that contributes are infinitesimally tiny, even if the "SSAA" pattern for an offline renderer looks like this rather than this. There are solutions to even that sort of scenario but they also involve clever averaging.

Hell, the idea of "diffuse" surfaces and microfacet theory, which together form pretty much the entire light-material model used by modern computer graphics, games and otherwise, are both big statistical hacks to get around the fact that what largely determines the appearance of things are teeny, tiny geometrical details and imperfections that are way, way smaller than a pixel and thus hard to evaluate individually. It's aliasing all the way down.

Xerophyte fucked around with this message at 08:04 on Jul 14, 2018
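To put numbers on the "f(2) + f(4) = 2f(3)" pretence from the averaging discussion above: shading the average of the inputs matches the average of the shading only when the function is close to linear over the pixel. The two toy functions below are assumptions for illustration, one gently varying and one sharply peaked like a tight highlight.

```python
def gentle_response(t: float) -> float:
    """A linear stand-in for soft lighting: averaging inputs is harmless here."""
    return 0.5 * t + 1.0


def highlight_response(t: float, sharpness: int = 64) -> float:
    """A sharply peaked stand-in for a tight highlight; t is a cosine in [0, 1]."""
    return t ** sharpness


def shade_of_average(f, inputs):
    """What the renderer does: average the properties first, shade once."""
    return f(sum(inputs) / len(inputs))


def average_of_shades(f, inputs):
    """What the pixel should show: shade every covered point, then average."""
    return sum(f(t) for t in inputs) / len(inputs)


# Half the pixel faces the light head on, the other half is turned away a bit.
covered = [0.6, 1.0]
gentle_error = abs(shade_of_average(gentle_response, covered)
                   - average_of_shades(gentle_response, covered))
highlight_cheap = shade_of_average(highlight_response, covered)
highlight_true = average_of_shades(highlight_response, covered)
```

For the gentle function the two answers agree, while for the highlight the shade-once version is essentially black even though half the pixel should be at full brightness. That gap is the shimmering on shiny things.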

|

|

|

Elentor posted: With NVIDIA using Machine-Learning-based algorithms for its new Anti-Aliasing method, and MI-specific processors being produced, we can expect Neural Networks to make their way more and more towards gaming over the next decade. I would say that technology-wise, Deep Learning is going to be the next "big thing". Again, this is just my guess. But I'd say the overall major leaps in real-time 3D standards would be:

This sort of thing is already used in real time applications, if not games specifically. If you run a newer Photoshop then Adobe are using various neural networks to do all sorts of image processing tasks, I believe including at least some of their upscaling filters. They've at least published a lot of research in that field. That particular upscaler from the two minute papers video seems to have been published at NTIRE 2018: it's one of multiple different neural network upscaler papers presented just for that one conference, and even more are detailed in their 2018 Challenge paper. The "fully progressive" one didn't even have the lowest reconstruction error in its category if I'm reading the paper correctly, although it was a lot faster than the one with less error.

Over in offline land, we use neural network-based filters in production today. Almost all offline renderers work by producing ever better approximate solutions where the error appears as white(ish) noise over the image. One of the major trends of the last couple of years has been applying denoising filters on our crappy intermediate results to get to a usable image faster. The one common major development of the last year is everyone and their dog implementing Nvidia's ML denoiser for their renderer, which like that upscaler is based on a neural network. Nvidia's latest research one uses what's called a recurrent autoencoder (as opposed to something like a generative adversarial network like that particular upscaler; also, this is getting uselessly technical).
Karoly actually did a video on that one too: https://www.youtube.com/watch?v=YjjTPV2pXY0

There are in general a lot of graphics problems that are variations of "I want to produce this known nice looking image that took me ages to get from this known lovely looking image that took a fraction of the time to generate, but I have no idea what a filter function that does that work should look like" which is a type of problem ML is very well-suited for.

It's still very much an active research area because ANNs are fickle beasts: you need to be careful with your training data and make sure it's sufficiently general to avoid fitting the filter to the data, and the more general you want it to be the harder it is. Nvidia's denoiser initially came with all sorts of evil caveats -- for instance we had to run it on 8-bit LDR images, which is just not right. They've refined it since and it's great for something that can clean up horrifyingly noisy inputs in milliseconds but it still overblurs or otherwise fails for some common cases. It also gets even trickier when you start looking at temporal stability: in a game, video or other sequence you need the images to not flicker from frame to frame, which means the filter needs to be more complex. Because of this all the production offline renderers I know of still use some sort of more predictable non-local means or other more traditional filter to do any final frame denoising. Here's one for deep images Disney published yesterday for instance.

Anyhow. Machine learning is mature enough that you could use ML-based filter techniques in a game today if you really want to. You could train an ANN on the game's own output to produce a moderately effective game-specific upscaler, if you have a small research team and a love of adventure. It might even be a good idea if you want to do some sort of specific upscaling that's otherwise hard, like foveated rendering for VR.
It'll have to be a lot simpler than the ones used in image processing, which take seconds rather than milliseconds, but it can probably be made to work pretty well. The problem is you'd be jumping into the unknown, and most game companies aren't super keen on betting their graphics pipeline on their ability to do weird stuff no one has tried on the fly (rule-proving exception: media molecule). I'd expect the real time ray tracing hardware to see quicker adoption even if the hardware is more recent (nonexistent, currently), simply because we've done raytrace queries in a raster pipeline since Pixar started making movies with Reyes 30 years ago. We've accumulated a lot of institutional knowledge on what sort of effects are feasible with 2 rays/sample, and now we finally have the hardware support to do 2 rays/sample in a 60 FPS game at a small but not insignificant cost. There's a lot of nicely visible low hanging fruit just waiting to be plucked, and promptly overused in a flurry of chrome spheres and refractive glass sculptures.

|

|

|

|

TooMuchAbstraction posted: I wonder if anyone's actually tried to do something like that, where the behavior of in-game actors is dictated by neural nets. I know people are making AIs that can play games the same way humans play them, but do you know if anyone's using ML to produce the gameplay that humans experience?

AI isn't exactly my field, I just go to graphics conferences and listen to the occasional Nvidia engineer gush about machine learning things they'd rather do instead of push pixels. I'm sure there's research going on, but I at least haven't heard of any "real" video game AIs using neural nets. I know there's a couple of open source board game AI opponents built on the types of techniques DeepMind used for AlphaGo, which basically worked by using the ANN as a heuristic to importance sample for a traditional Monte Carlo tree search AI.

Valve's collaboration with OpenAI for Dota bots and Blizzard's with DeepMind to add an AI feature layer to Starcraft 2 are probably mostly done because those companies are very financially secure and full of nerds who think machine learning is cool, but in some part because they want to keep tabs on how close the tech is to viable for real usage. The Dota 2 bots are pretty credible -- if not all that open, far as I know -- and that's a drat complex game for an AI.

I think there are a couple of practical reasons ANNs still haven't been used for real, even where they in principle could. One is that it's real hard to add any sort of manual control to an ANN. They're black boxes that approximate some optimization function and you generally can't open them to see how they work or tweak their behavior. The goal of most game AI isn't to defeat the player, it's to create something nice and varied that the player will enjoy repeatedly beating the tar out of. ANNs are good at making something very consistent that will either always lose or always win, depending on how well you did in designing and training it.
They're not necessarily all that entertaining to play against and it'll be hard to make them varied and appropriately challenging. You can still make them useful by not using them directly. You could train a helper ANN for your AI that'll rank some action options depending on current game state and then you do some fuzzy logic weighted random selection on those with the weighting based on your difficulty to select a move. That just basically gives you a slightly different heuristic than what you'd have done by hand, which could be nice for complex things but is probably not critical. A manual heuristic combined with appropriate cheating works, is proven and is almost certainly easier to predict, control and tune. If your AI keeps always invading Russia in winter and the game ships next week you probably want to be able to tell it to stop, not train it until it figures out that it's a bad idea.

Another is that we're at the point where it's technically feasible, but not really practical. A couple of researchers willing to push the envelope and given a couple of weeks of extended ANN training before every balance patch could probably make a decent ANN for playing Civilization, or at least for handling some particularly hard and ML-appropriate sub-task like finding a good set of potential moves for a unit given the current game state. That's not useful enough to do for a release, and I think more boardgame-like games such as Civ are probably one of the easier targets as far as using ANNs for real game AI goes.
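The "rank the options, then do a weighted random pick based on difficulty" idea above could be sketched like this. The scores stand in for whatever a helper network (or a hand-written heuristic) would output, and the softmax with a temperature knob is just one plausible way to do the weighting, not something the post specifies; `pick_action` is a hypothetical name.

```python
import math
import random


def pick_action(scores, temperature, rng):
    """Pick an action index with probability proportional to exp(score / temperature).

    A low temperature almost always plays the top-ranked move (hard AI);
    a high temperature picks close to uniformly at random (easy AI).
    """
    best = max(scores)
    # Subtracting the best score keeps the exponentials from overflowing.
    weights = [math.exp((score - best) / temperature) for score in scores]
    return rng.choices(range(len(scores)), weights=weights, k=1)[0]


rng = random.Random(1812)        # fixed seed so replays are reproducible
ranked_scores = [1.0, 2.0, 3.0]  # hypothetical ranking for three candidate moves
hard_choice = pick_action(ranked_scores, temperature=0.01, rng=rng)
easy_choice = pick_action(ranked_scores, temperature=100.0, rng=rng)
```

The difficulty knob lives entirely outside the ranking function, so it stays tunable the week before ship even if the thing producing the scores is a black box.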

|

|

|

|

Kurieg posted: The other problem with games like Civ is that if you define your goals poorly, every game will just result in the AIs openly joining into an alliance and beating the player's face in. Rather than it just seeming like that's what they're doing.

You joke, but that sort of thing is a real problem for trying to use a neural network or other machine learning approach to make an interesting overall AI for even a fairly boardgame-style game like Civ, which are the games where we know the most about how to apply all these machine learning techniques. Using the state of the art techniques of today I think Firaxis can probably train a deep neural network to beat any human player at Civ, if they hired the right people and threw Amazon machines at the problem for half a year. It'd be a ruthless Civ machine, backstabbing at the drop of a hat, ganging up on anyone who seems the slightest bit ahead, and absolute unfun dogshit for a human to play against. We might figure out how to train for entertainment someday, but there's a lot of open questions to answer before anyone heads down that route.

Using ML to solve sub-problems is much more tractable. You can use machine learning for all sorts of hard stuff that we don't quite know how to do: doing keyframe interpolation in your animations, solving complex many-body physics interactions without needing too many local iterations, doing post-process filters like motion blur and bokeh on your graphics, suggesting city placement locations, and so on. For the actual AI, eh, not so much.

|

|

|

|

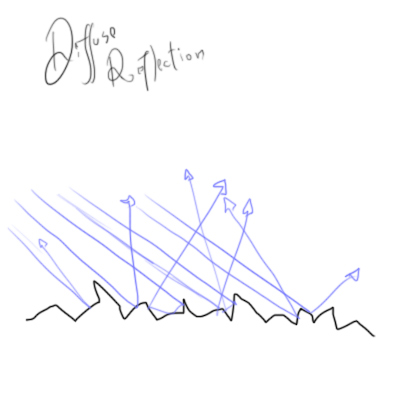

One thing. This is not usually called diffuse reflection*. Light being reflected in differently angled surface microfacets results in what is generally called glossy or rough specular reflection.

Diffuse reflection is another phenomenon entirely: it's the "reflection" from the light that was refracted into the material rather than being reflected at the surface. If the material is optically dense -- think plastic as opposed to water -- then this refracted light is quickly diffused inside the material, hence the name. While bouncing around inside, the light will either eventually be absorbed by the material as heat or eventually find its way back out. Exactly what light gets absorbed and what gets scattered back out depends on the wavelength, which causes the diffused light that comes out to have a different color than it originally did going in. This is the process that makes wood brown, paint red and so on. Diffuse reflection is in this way different to glossy or specular reflection, which reflect all wavelengths equally for non-metals and so are colorless/white.

Diffuse reflection is basically the same process as fog, just simplified for much denser materials. For offline there's typically 3 classes of increasingly complex and expensive rendering solutions to handle different cases: diffuse reflections for very dense media like clay or rocky planets, subsurface scattering for moderately dense media like skin or plastic, and various real volumetric scattering simulations for thin media like air or water. Games usually have a similar split, although the implementations used for subsurface scattering and volumetrics in games tend to be vastly different from offline -- unlike diffuse reflection where both games and offline do the same thing. Ultimately they're all modeling the same phenomenon, just at different scales.
Schematically at the very small scale, diffuse reflection looks something like  where the blue and green arrows are the process of diffusion, and the yellow is glossy reflection and glossy refraction. One of the big open problems in computer graphics is around handling both glossy and diffuse reflection well at the same time. There's a coupled interaction where the diffusely reflected light is made up of only the portion of light which was not glossily reflected. Modeling that coupling is, it turns out, very hard to do without breaking the various rules about energy conservation you mentioned. There's a nice paper by Kelemen and Szirmay-Kalos from 2001 which can handle the simplest case of a single layer and can be generalized somewhat, but their approach requires storing a lot of precomputed values, plus it's very hand wave-y and just kind of massages the math so it works. It is still basically the state of the art. Handling the interaction between more complex arbitrary layers in a practical way is something we just don't know how to do. Another thing is that diffusion is in practice only exhibited by non-conducting materials. Light penetrating into a conductor is extinguished almost immediately so diffuse reflection for metals and similar is effectively 0. Those materials acquire their color through an entirely different interaction: they have a wavelength-dependent glossy reflection which causes the glossy reflection to be colored rather than colorless. * At least, it's not Lambertian reflection. The Oren-Nayar reflection model does behave somewhat like this schematic, except with diffuse Lambertian reflection in each microfacet. Oren-Nayar also typically uses a different (and simpler) statistical distribution for the flakes than the more modern models for glossy reflections. ON is a good model for surfaces that are very optically dense and also rough on a larger scale, like distant rocky planets. 
Then it makes sense to model that larger scale roughness statistically in the same way we do the smaller scale roughness that causes glossy reflections. Anyhow, this is getting super technical and I should shut up.

|

|

|

|

I admit I'm not that familiar with the terminology of Unity's PBR model. I've used it a little bit since they collaborated with us on a presentation at SIGGRAPH this year, but that was basically just importing data and hooking up textures. Their Standard Shader uses specular and smoothness as parameter names for their microfacet lobe, and I see they do indeed describe smoothness as "diffusing the reflection". I would've actively avoided that term outside of things that map to BRDFs like Lambert or Oren-Nayar to prevent confusion but, sure, I can see what you/they mean.

Even if you take "diffuse reflection" to be "anything not a perfect mirror", the majority contribution to that for an opaque dielectric is still the internally scattered and colored light, as modeled with a Lambertian or similar, rather than the specular microfacet reflections as modeled with a Phong or the like. For an opaque dielectric Unity uses a Cook-Torrance GGX BRDF for rough specular/glossy and the Disney Diffuse BRDF for the internally scattered part that I'd normally call "diffuse reflection", with the latter the only one affected by albedo, Unity's primary material color parameter.

The study.com quote that "diffuse reflection occurs when a rough surface causes reflected rays to travel in different directions" is, if not flat out wrong, then at least severely misleading. Wikipedia's "diffuse reflection from solids is generally not due to surface roughness" is a lot saner. I would at least be extremely careful about thinking about microfacet-based Cook-Torrance BRDF lobes as being there for modeling diffuse reflections when working with any material parameterization. If you come across an element that says something about "diffuse color" or similar then it's going to be talking about light that's diffused through the material, rather than the rough microfacet reflections.

|

|

|

|

Elentor posted:https://www.flickr.com/photos/68147348@N00/14333421271 That sounds right to me. The primary color of most non-metal materials is caused by light entering the material and being scattered inside. How that scattering affects different frequencies of light determines the color we perceive. Most to all of the scattering happens when light hits an index of refraction discontinuity, like between air and glass, or membranes of a cell. The greater the discontinuity, the stronger the reflection. Water has an index of refraction closer to most dielectrics than air, so replacing air-thing interfaces inside the material with water-thing interfaces means light tends to penetrate further down before it finds its way out again, which makes the overall appearance darker. That's actually the opposite of the effect increased index of refraction usually has. Primary reflections, like light on the surface of a liquid, a window or a gemstone, will be brighter the higher the IOR of the material since the air-material IOR discontinuity will be greater. You should be able to get the opposite effect for pores too: if your porous material has an IOR of 1.2 and you pour some magical liquid diamond with an IOR of 2.4 on it then you've increased the discontinuity compared to air and will get shorter internal scattering paths. Elentor posted:And last, but definitely not least, look at this heavy weight behemoth: Inigo is awesome, he's one of the co-creators of shadertoy itself and has been involved in all sorts of demoscene insanity. This one is a particularly famous 4kb demo from 2009 where I believe he did all the graphics. He slowed down a bit since he sold out to the man, i.e. pixar and facebook, but good for him. He made a timelapse of the development of the snail scene which I really liked, since it's hard to fathom how he got there when seeing the finished stuff.
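Going back to the index of refraction argument above, "the greater the discontinuity, the stronger the reflection" can be checked with the Fresnel reflectance at normal incidence, R = ((n1 - n2) / (n1 + n2))^2. This sketch assumes unpolarized light hitting a non-absorbing dielectric head on, and the IOR values are round illustrative numbers rather than measurements of any particular material.

```python
def normal_incidence_reflectance(n1: float, n2: float) -> float:
    """Fraction of light reflected at a flat interface between IOR n1 and IOR n2."""
    return ((n1 - n2) / (n1 + n2)) ** 2


AIR, WATER = 1.0, 1.33

# A pore inside a material with IOR 1.5: dry (air filled) versus wet (water filled).
dry_pore = normal_incidence_reflectance(AIR, 1.5)
wet_pore = normal_incidence_reflectance(WATER, 1.5)

# The hypothetical reverse case from the post: IOR 1.2 material, IOR 2.4 liquid.
dry_porous = normal_incidence_reflectance(AIR, 1.2)
diamond_soaked = normal_incidence_reflectance(2.4, 1.2)
```

With these numbers the air-filled pore reflects about 4% per interface and the water-filled one roughly a tenth of that, so light in the wet material scatters less often and travels deeper before getting out, picking up more absorption on the way. The made-up liquid diamond case goes the other way, with a much stronger reflection per interface than air.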

|

|

|

|

Elentor posted:Oh I didn't know that that was the same person as who made elevated. He has a website with in-depth explanations for a lot of the tricks involved in the things he's done over the years, including a presentation on how elevated was made. The site is a great resource if you want to try your hand at coding your own distance field ray marcher, and who wouldn't?
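For anyone tempted, the core loop of a distance field ray marcher (sphere tracing) is tiny. This is a bare-bones sketch with a single unit sphere as the scene and made-up step limits, not code from the site mentioned above: it steps along the ray by whatever distance the field says is guaranteed to be empty, and stops when that distance gets close to zero.

```python
import math


def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from a point to a sphere: negative inside, positive outside."""
    return math.dist(point, center) - radius


def ray_march(origin, direction, sdf, max_steps=128, max_distance=100.0, epsilon=1e-4):
    """Return the distance along the ray to the first hit, or None on a miss.

    `direction` is assumed to be normalized.
    """
    travelled = 0.0
    for _ in range(max_steps):
        position = tuple(o + travelled * d for o, d in zip(origin, direction))
        distance = sdf(position)
        if distance < epsilon:
            return travelled       # close enough to the surface to call it a hit
        travelled += distance      # safe step: nothing is nearer than `distance`
        if travelled > max_distance:
            break
    return None


hit = ray_march((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = ray_march((0.0, 0.0, -3.0), (0.0, 1.0, 0.0), sphere_sdf)
```

Everything else in a demo like that (normals from the field gradient, soft shadows, combining shapes with min and max) is layered on top of this one loop.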

|

|

|

|

|


|

After playing through the stage once, you and the enemy timewarp to the start. On the next loop(s) you have to defend your past self while you dodge your past self's bullets. Your forward thrusters have failed. Propel yourself through the level using gunfire reaction force alone. On the not-appropriate-here scale: you're trying to shoot down a small agile space ship (or anime girl in frilly dress), but you can only fire slow moving bullets in highly intricate geometric patterns in time to the music. Build combos to crescendo the song and up your pattern intricacy until the opponent is caught.

|

|

|