"machine learning" is an exciting technology which allows computers to become more racist than ever before. silicon valley loves it because it lets them squeeze more money out of the massive amounts of surveillance data they've got sitting around. however, most of the time it doesn't actually work.

FAQ:

Q: how do i machine learning?
acquire a large amount of illegally harvested user data. feed it to your computer. trust whatever the computer spits out

Q: how should i use machine learning?
don't use machine learning

Q: what is deep learning?
deep learning is when you use your graphics card to do machine learning. deep learning is only useful for speech synthesis and niche pornography

Q: is machine learning going to cause the singularity?
no. machine learning exists exclusively to allow hiring managers and advertising executives to be racist slightly more efficiently

Q: how do i acquire machine learning figgies?
get a phd. sorry, i don't make the rules

Q: what is the 'kernel trick'?
a sex act performed by talented professionals in a particularly exclusive gentlemen's club on the outskirts of Reno

animist fucked around with this message at 07:58 on Nov 18, 2020

| # ¿ Apr 25, 2024 23:45 |

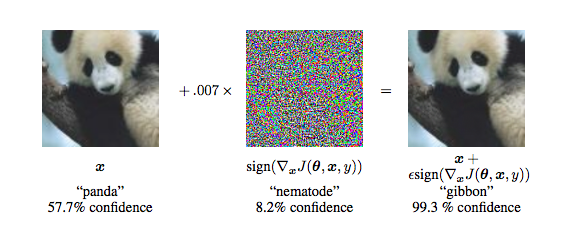

DONT THREAD ON ME posted:
how do we do anti-machine learning things like how do we create more bogus user data that will gently caress with machine learning poo poo i always lie on the youtube quizzes that show up sometimes

there are also adversarial examples, which are basically optical illusions for attacking deep learning systems: [image] those are pretty crazy, like you can wear pixelly facepaint at a protest to trick facial recognition systems
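
a toy sketch of how an adversarial example gets made, assuming the "deep learning system" is just a logistic-regression classifier whose weights the attacker knows (a white-box attack). it uses the fast gradient sign method (FGSM): nudge every input feature by ±eps in whichever direction increases the model's loss. attacks on real deep nets follow the same recipe using the network's gradient; the weights, input and eps here are all made up.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """probability the model assigns to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """one FGSM step against logistic regression with cross-entropy loss.

    the loss gradient w.r.t. the input is (p - y) * w, so each feature moves
    by eps in the sign of its gradient component, which increases the loss.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# hypothetical "trained" model and an input it puts in class 1
w = [2.0, -3.0, 1.0]
b = 0.0
x = [0.5, 0.1, 0.2]

x_adv = fgsm(w, b, x, y=1, eps=0.3)
print(predict(w, b, x) > 0.5)      # True: original input is class 1
print(predict(w, b, x_adv) > 0.5)  # False: flipped, though no feature moved more than 0.3
```

the facepaint trick is the same idea with extra constraints on where the perturbation is allowed to go.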

power botton posted:
we do ~*UBA*~ software and after years of management crying we don't have machine learning and can't sell anything without it, we finally released something and barely any of our customers give a poo poo aside from a checkmark on an RFP.

it's well known that customers care deeply about the implementation details of the software they use

Bloody posted:
is there hdcp but for webcams?

just require that the user's pics come from an hdcp-compliant 3d webcam. much harder to just upload a 3d scan of a rando celeb's head that way. still very easy for them to just hold up their hostage child or whatever tho

or just, like, hold up a picture of a child

pointsofdata posted:
we replaced a vendor's "ml" process with an afternoon writing regexes and got better results.

seems like a rip off op, I wouldn't buy again.

the year is 2023. i walk into a mall kiosk labeled PORN-O-SCAN. "one scan please," i say. "i want to watch porn with my face in it."

Bloody posted:
holding up a picture doesn't work because of the hdcp 3d webcam

oh i meant hold up a sculpture of a child

Helianthus Annuus posted:
i wanna read that post

okay, so, first thing you gotta understand is what people mean by "deep neural networks". a neural network is made up of "neurons", which are functions of weighted sums. that's it. here's a "neuron": inputs get multiplied by weights ([10, 300, -.5]), then summed, and passed through a nonlinear function. the function we're using is called ReLU, defined relu(x) = (x if x > 0 else 0). you might think that's a lovely function but it's super common in deep learning for some loving reason

of course, neural network people hate labeling things, so they'd actually draw it like this: [image] which looks more convincing.

now, what if you don't know what weights to use? that's easy. pick some random weights. then, steal a dataset of car specs + prices from somewhere. pick a random car and feed its specs to your function. your function will return a price, which will be wrong. so, tweak your weights to make them more correct. this is easy to do, because you know how much the output will change if you change your weights: if the car you're looking at has a wheel size of 10, changing W[0] by 1.5 will change the output by 15 (as long as the weighted sum stays positive, so the relu passes it through). capische? so just tweak all your weights a little so your function's output is a little closer to the actual price. now do this a bazillion times. if you're lucky, your network will now give good estimates for car prices.

if it doesn't, you can always add more neurons: [image] the later ones are weighted sums of the earlier ones (passed through relu again), see. what do the ones in the middle mean? idk, but now your network can express more functions. the training algorithm still works the same way: the chain rule lets you work backwards through the layers to get how much each weight affects the output (that's "backpropagation").

but that's child's play. that's barely any neurons at all. that's the sort of poo poo you'd see in a neural network paper from the 1980s. we've got gpus now. you can throw as many neurons as you want around, in giant 3d blocks of numbers, each made up of the sums of other giant 3d blocks of numbers.
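
a minimal plain-python sketch of the neuron and the "tweak your weights a little, a bazillion times" loop from the post. the weights [10, 300, -0.5] and the wheel size of 10 come from the post; the other two input values, the toy car dataset (price secretly 2a + 3b + c), the learning rate and the step count are made-up assumptions. the update is ordinary stochastic gradient descent on squared error, which is the standard way to do this, not necessarily what the post had in mind.

```python
import random


def relu(z: float) -> float:
    return z if z > 0 else 0.0


def neuron(weights: list[float], inputs: list[float]) -> float:
    """a "neuron": weighted sum of the inputs, passed through relu."""
    return relu(sum(w * x for w, x in zip(weights, inputs)))


def sgd_step(weights, inputs, target, lr):
    """tweak every weight a little so the output moves toward target.

    gradient descent on squared error. while the weighted sum is positive,
    d(output)/d(w_i) = inputs[i]: wheel size 10 means changing W[0] by 1.5
    changes the output by 15.
    """
    z = sum(w * x for w, x in zip(weights, inputs))
    if z <= 0:
        return list(weights)  # relu is flat here: no gradient, no update
    err = z - target
    return [w - lr * err * x for w, x in zip(weights, inputs)]


def train(data, n_steps=20000, lr=0.1, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(0.5, 1.5) for _ in data[0][0]]  # random starting weights
    for _ in range(n_steps):
        inputs, price = rng.choice(data)  # pick a random car
        weights = sgd_step(weights, inputs, price, lr)
    return weights


# hypothetical "car specs": three features in [0, 1], price secretly 2a + 3b + c
rng = random.Random(42)
cars = []
for _ in range(50):
    specs = [rng.random() for _ in range(3)]
    cars.append((specs, 2 * specs[0] + 3 * specs[1] + specs[2]))

print(neuron([10, 300, -0.5], [10, 2, 100]))    # 650.0
print(neuron([11.5, 300, -0.5], [10, 2, 100]))  # 665.0, i.e. +15
print([round(w, 2) for w in train(cars)])       # should land near [2, 3, 1]
```

real networks do the same thing with millions of weights and let an autodiff library compute the gradients instead of writing them out by hand.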
just go hogwild: [image] these are all state-of-the-art networks. if you take a long time and learn a bazillion tricks to train them correctly, you can get these to give pretty good accuracies on benchmark problems. they were all discovered by, basically, loving around. there's barely any theoretical basis for any of this. machine learning!

this post got fuckoff long so i'm not even gonna post about interpretability. just think about picking some numbers from the middle of those giant networks and trying to decide if they're racist. now imagine your career hinging on getting good results from that. welcome to my grad program

animist fucked around with this message at 10:47 on May 30, 2019

poty posted:
thanks for the post it helps my imposter syndrome to know that everyone does the same stupid bullshit and not just me op

we are all students of the ancient practice of loving around until something works

ai won't kill us, but it should because we deserve it

lancemantis posted:
hey now posting convnets is cheating

i'll have you know that convnets are an extremely exciting area of research with wide-reaching implications in the fields of speech synthesis and niche pornography

interpretabilityposting: how tf do you understand what's going on in a deep convnet? how do you know what the network will do?

some people have tried using, like, formal proofs and geometry to describe network behavior. sometimes they can get this to work for more than, like, 10 neurons, but it's mostly useless for larger networks: too many variables, too high-dimensional.

more interesting approaches for understanding neural networks are empirical, imo: do experiments, treat neural networks like lab animals and try to do biology to them. the best recent paper is prolly https://distill.pub/2018/building-blocks/ which is fun to play around with in the browser if you've got 10 minutes. their code hallucinates images that activate different slices of a neural network; you can tell it to activate some neuron or group of neurons and it'll make a picture that activates them. sometimes the pictures even make sense.

it's hard to tell to what extent that's just pareidolia and selection bias, though. if you reimplement that paper but don't tune your hallucination code right, you'll just get white noise instead of pictures of dogs for your visualizations, and there's no reason to assume that white noise is a worse description of the behavior of the network than the dog pictures. it's like... trying to understand how a squid thinks by fMRIing its brain, but then only using the fMRIs that you think look cool. idk.

the other super cool empirical paper to come out recently is the lottery ticket hypothesis, which has pretty far-reaching implications if it turns out to be true. it suggests that the big convnets we're training are actually tools that let us search over a combinatorially huge number of smaller, meaningful subnetworks. basically, if you throw a giant bucket of spaghetti at the wall, a little bit of the spaghetti will stick. if it pans out, it could explain why, like, deep learning works at all. on the other hand it might be bupkis
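
a toy version of "make a picture that activates some neuron": do gradient ascent on the input while keeping the weights fixed. this is a sketch under heavy assumptions, not the distill paper's code: the "network" is a single made-up relu unit, and the l2 penalty (`decay`) stands in for the regularizers that real feature-visualization code depends on. the weights, step size and penalty are hypothetical.

```python
def activation(w: list[float], x: list[float]) -> float:
    """one relu unit standing in for "some neuron" inside a real network."""
    return max(0.0, sum(wi * xi for wi, xi in zip(w, x)))


def visualize(w, x0, steps=200, lr=0.1, decay=0.5):
    """gradient ascent on the *input* to maximize activation(w, x) - decay * |x|^2.

    the weights stay fixed; only x changes. the decay term is a crude stand-in
    for the regularizers real feature-visualization code relies on.
    """
    x = list(x0)
    for _ in range(steps):
        z = sum(wi * xi for wi, xi in zip(w, x))
        relu_grad = 1.0 if z > 0 else 0.0
        x = [xi + lr * (relu_grad * wi - 2 * decay * xi) for wi, xi in zip(w, x)]
    return x


w = [1.0, -2.0, 0.5]      # hypothetical weights of the unit we want to "see"
start = [0.1, -0.1, 0.1]  # starting input; must activate the unit a little

favorite = visualize(w, start)
print([round(v, 3) for v in favorite])  # [1.0, -2.0, 0.5]
unregularized = visualize(w, start, decay=0.0)
print(activation(w, unregularized) > activation(w, favorite))  # True
```

with the penalty the "favorite input" is just w / (2 * decay); without it, the input grows every step and there is no favorite at all. so even in this toy, what you "see" depends heavily on a tuning knob you picked, which is the post's worry in miniature.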

lmao https://twitter.com/farbandish/status/1134485622352240640

does anybody know anything about tsetlin machines? are they more or less than the average amount of nonsense?

Lonely Wolf posted:
just make a NN that outputs "ethical" or "not ethical", problem solved

with a small perturbation of the input I can get it to say everything is ethical. so much more efficient than ethics boards!

huhwhat posted:

this always returns false, it should be the other way around

lol https://www.technologyreview.com/s/613630/training-a-single-ai-model-can-emit-as-much-carbon-as-five-cars-in-their-lifetimes/

"To get a better handle on what the full development pipeline might look like in terms of carbon footprint, Strubell and her colleagues used a model they’d produced in a previous paper as a case study. They found that the process of building and testing a final paper-worthy model required training 4,789 models over a six-month period. Converted to CO2 equivalent, it emitted more than 78,000 pounds and is likely representative of typical work in the field."

that's more than half a car's lifetime emissions (fuel included) btw. the "five cars" in the headline is a different estimate from the same paper: training one big transformer with neural architecture search, about 626,000 pounds

animist fucked around with this message at 21:04 on Jun 9, 2019

this ml app is actually kinda cool. neural art is neat, imo

spooky paper: "adversarial examples" are actually just the computer picking up on patterns that humans can't see https://arxiv.org/abs/1905.02175 on the plus side you can train deep neural networks to not use those features. but then they lose accuracy
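
a rough sketch of what "train the network not to use those features" looks like in its simplest form: adversarial training, where every training example is swapped for its FGSM-perturbed copy before the gradient step. the model here is logistic regression rather than a deep net, and the dataset, eps and learning rate are made up. on this easy toy data nothing is lost; whether and how much accuracy drops on real data is exactly what the paper is about, and this sketch says nothing about it.

```python
import math
import random


def prob(w, b, x):
    """logistic-regression probability of class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w, b, x, y, eps):
    """move each feature by eps in the direction that increases cross-entropy loss."""
    p = prob(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def adversarial_train(data, eps=0.1, lr=0.1, steps=5000, seed=0):
    """sgd where every example is replaced by its FGSM-perturbed copy first."""
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(steps):
        x, y = rng.choice(data)
        # for a linear model this is the exact worst case inside the eps box
        x_adv = fgsm(w, b, x, y, eps)
        p = prob(w, b, x_adv)
        w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x_adv)]
        b -= lr * (p - y)
    return w, b


# made-up, easily separable 2d data: class 1 near (1, 1), class 0 near (-1, -1)
rng = random.Random(1)
data = []
for _ in range(100):
    label = rng.randint(0, 1)
    center = 1.0 if label == 1 else -1.0
    data.append(([center + rng.uniform(-0.3, 0.3), center + rng.uniform(-0.3, 0.3)], label))

w, b = adversarial_train(data)
```

deep-net versions usually use a stronger multi-step attack in the inner loop, but the structure (attack, then train on the attacked copy) is the same.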

Captain Foo posted:
no it's because it can theoretically break the panopticon in ways that are less obvious than IR blinders or strobes

gdi you're right. i just started research on improved adversarial defenses and somehow i didn't grasp that this is what i'm actually getting paid for, lol

https://twitter.com/byJoshuaDavis/status/1147538052639682565

big scary monsters posted:
have there been examples of adversarial approaches that limit access to the network they're trying to spoof? presumably a real adversary isn't going to hand you their model and give you a week's unrestricted cluster time running them against each other - what's the minimum attempts you can use to turn a gun into a turtle?

there's a bunch of "black box attacks" that work this way, only seeing inputs and outputs. often you don't even need those, though, because adversarial attacks usually transfer between networks. so you can just train your own model, and as long as it's even vaguely similar to whatever you're attacking, images that trick it will probably also trick your target
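
a minimal sketch of the "only seeing inputs and outputs" setting, assuming the target hands back a numeric score (not just a label) and that each input feature may move by at most eps. the search is a plain coordinate-wise random search, one of the simplest query-only strategies, not any specific published attack; the model, weights and input are hypothetical.

```python
import random


def make_black_box(secret_w, secret_b):
    """hypothetical target: we can call score(), but never see secret_w."""
    def score(x):
        # positive score means class 1, negative means class 0
        return sum(w * xi for w, xi in zip(secret_w, x)) + secret_b
    return score


def random_search_attack(score, x, eps, seed=0):
    """try to push a class-1 input into class 0 using only score() queries.

    each feature, in random order, gets one try at +eps and one at -eps;
    a change is kept only if it lowers the score, so every feature moves by
    at most eps. returns (candidate, success, queries_used).
    """
    rng = random.Random(seed)
    x = list(x)
    best = score(x)
    queries = 1
    order = list(range(len(x)))
    rng.shuffle(order)
    for i in order:
        if best < 0:  # already flipped: stop spending queries
            break
        for step in (eps, -eps):
            candidate = list(x)
            candidate[i] += step
            s = score(candidate)
            queries += 1
            if s < best:
                x, best = candidate, s
                break
    return x, best < 0, queries


target = make_black_box([2.0, -3.0, 1.0], 0.0)
original = [0.5, 0.1, 0.2]
adv, ok, used = random_search_attack(target, original, eps=0.3)
print(ok, used)  # ok is True here; used depends on the shuffle order
```

in this toy the budget is at most 1 + 2 × (number of features) queries. real images have vastly more features and real attacks search much more cleverly, so this says nothing about real query counts.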

lancemantis posted:
it also helps that transfer learning is common in vision so you can expect whatever you’re dealing with to be some fine tuned head on an imagenet base

fun fact: there are around 120 breeds of dog in imagenet, out of 1000 categories total. they're wayy overrepresented compared to everything else in there. so, over the past 10 years, humanity has collectively spent billions of dollars in R&D on... building the perfect dog show judge.
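
roughly what "a fine tuned head on an imagenet base" means, as a sketch: the pretrained feature extractor is frozen and only a small classifier on top of its outputs gets trained. here `frozen_base` is a hypothetical fixed function standing in for a real imagenet backbone, the head is logistic regression trained with sgd, and the toy circle-vs-ring data has nothing to do with imagenet.

```python
import math
import random


def frozen_base(x):
    """hypothetical stand-in for a pretrained backbone: a fixed feature map that
    training never touches (real ones are imagenet convnets, not three formulas)."""
    a, b = x
    return [a * a, b * b, a * b]


def head_logit(w, bias, feats):
    return sum(wi * fi for wi, fi in zip(w, feats)) + bias


def train_head(data, lr=0.1, epochs=500):
    """logistic-regression head trained by sgd on frozen_base outputs only."""
    w = [0.0] * len(frozen_base(data[0][0]))
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_base(x)  # base is only ever evaluated, never updated
            p = 1.0 / (1.0 + math.exp(-head_logit(w, bias, feats)))
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, feats)]
            bias -= lr * (p - y)
    return w, bias


def predict(w, bias, x):
    return int(head_logit(w, bias, frozen_base(x)) > 0)


# made-up task: label 1 inside radius 1, label 0 in the ring from 1.5 to 2.
# not linearly separable in (a, b), but it is in the base's features.
rng = random.Random(0)
data = []
for _ in range(60):
    label = rng.randint(0, 1)
    r = rng.uniform(0.0, 1.0) if label == 1 else rng.uniform(1.5, 2.0)
    t = rng.uniform(0.0, 2 * math.pi)
    data.append(((r * math.cos(t), r * math.sin(t)), label))

w, bias = train_head(data)
print(sum(predict(w, bias, x) == y for x, y in data), "/", len(data))
```

the point for attackers is the one in the quote: if lots of deployed models share the same frozen base, an input that confuses the base tends to confuse all of them.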

Alan Smithee posted:
Question: was looking for a thing that was going around not too long ago. It was like a neural network of morphing faces (not sure if it was that or a straight render). Looked like the wall of faces from doom. Anyone know what I’m talking about? Driving me crazy I can’t find it

this? https://www.youtube.com/watch?v=XOxxPcy5Gr4

using something called "random sampling", a concept from quantum chromodynamics,

Winkle-Daddy posted:
Anyway, what I want to know now is which ML library should I install to make a star trek computer using a trinary object model(???)?

maybe pytorch?

ontology is when you add IF-THEN statements to your code, and the more IF-THEN statements you add, the more ontological it is

i noticed i had some strange lumps in my brain so i went to see an ontologist

https://twitter.com/stephentyrone/status/1192053227061207040 ...

Sagebrush posted:
if elected president i will require driver re-testing every three years and drivers will be graded on a bell curve. those scoring in the lowest 25% of all drivers tested will have their license revoked.

use this as an excuse to build more public transit pls

unrelated: https://talktotransformer.com is kinda incredible

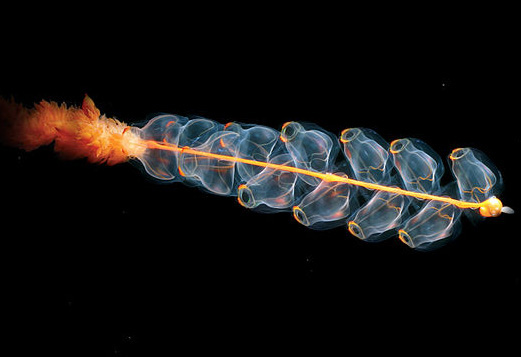

update: instead of learning, it is drifting about filter feeding in the abyssal zone. probably for the best

the BAC increased 3 points today, stirring fears of a lapsed recovery after a shaky summer

https://juliacomputing.com/blog/2019/11/22/encrypted-machine-learning.html incredible. a technology where both the user and provider are systematically prevented from having any idea what's going on

Bloody posted:
lol julia the type-safety of python with the compile times of c++

refleks posted:
Machine learning found in production at work

if you're serious about high-performance numerics you should be writing CUDA anyway

oh also, check this out: https://tvm.apache.org/2018/10/09/ml-in-tees

a custom compiler for running ML models inside SGX enclaves. now the system that implacably denies your loan application could be running inside your very own computer! And you *still* won't be able to see how it works!

animist fucked around with this message at 03:33 on Dec 5, 2019

can confirm that debugging high-performance scientific code is hell (because i'm doing that right now)

e: wrong thread

animist fucked around with this message at 23:09 on Dec 5, 2019

there's also the universal approximation theorem, which states that single-hidden-layer (shallow) NNs can approximate any continuous function on a compact (closed and bounded) chunk of R^n as closely as you like, given enough neurons. that's been proved for sigmoid and ReLU. in practice we use deep networks instead; i think there are some results showing they're much more efficient in terms of representation power.

there's also some work on "verifying" ReLU-based networks, which relies on the simple structure of ReLU to prove geometric properties of the network. in practice these can only prove generalities, though: stuff like "if you make a small change to the input, the output can only change by this amount." they can't prove deeper specs about network correctness, because if we could specify exactly what operation we wanted the network to do, we wouldn't need a neural network, now would we?

one other way to think about the linear stuff is just to intuit that a linear operation followed by another linear operation is linear. so, stacking linear layers, you're really only training a single linear transformation. nonlinear activations don't have that problem by definition; collapsing like that is a pretty unusual property. and the training algorithms can optimize through pretty much any operation that's differentiable (almost everywhere, in ReLU's case), so the nonlinearity isn't a problem. ReLU is used because it works very very well in practice (and is cheap in hardware); idk why it works so well though.
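
the "linear followed by linear is linear" point, checked numerically in a small sketch: two arbitrary made-up weight matrices applied one after another give exactly the same answer as their single product matrix, and putting a relu in between breaks that. plain python lists, no libraries, biases left out for brevity (with biases the composition is still a single affine map).

```python
def matvec(m, v):
    """matrix (list of rows) times vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """(a @ b)[i][j] = sum_k a[i][k] * b[k][j]"""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu(v):
    return [x if x > 0 else 0 for x in v]


W1 = [[1, -2], [3, 0], [0, 1]]  # layer 1: 2 inputs -> 3 hidden units
W2 = [[1, 1, -1], [2, 0, 1]]    # layer 2: 3 hidden units -> 2 outputs
x = [1, 1]

two_layers = matvec(W2, matvec(W1, x))       # linear, then linear
collapsed = matvec(matmul(W2, W1), x)        # one precomputed matrix
with_relu = matvec(W2, relu(matvec(W1, x)))  # linear, relu, linear

print(two_layers, collapsed)  # [1, -1] [1, -1]
print(with_relu)              # [2, 1]
```

the relu zeroed the hidden unit that came out at -1, and no single matrix reproduces that switch-off for every input, which is where the extra expressive power comes from.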

Cybernetic Vermin posted:

it's definitely a handy hammer to have. not everything's a nail though. also lol at the legions of prospective PhD students whose life aspiration is to just twiddle knobs until they can finally get all those overpaid truckers fired
