
The thing I most wish Sony would copy from Microsoft is the ability to seamlessly use your phone to access stuff you've just captured with the Share button. It is absurd that right now the most reliable way to get a screenshot to my phone is to tweet it and then download it. The optimal way would be to integrate Dropbox or Google Drive or something of that sort; if it has to go through the PS App, so be it, but just let me grab my screenshots or whatever without having to literally publish them somewhere, please.


Apr 25, 2024 11:24


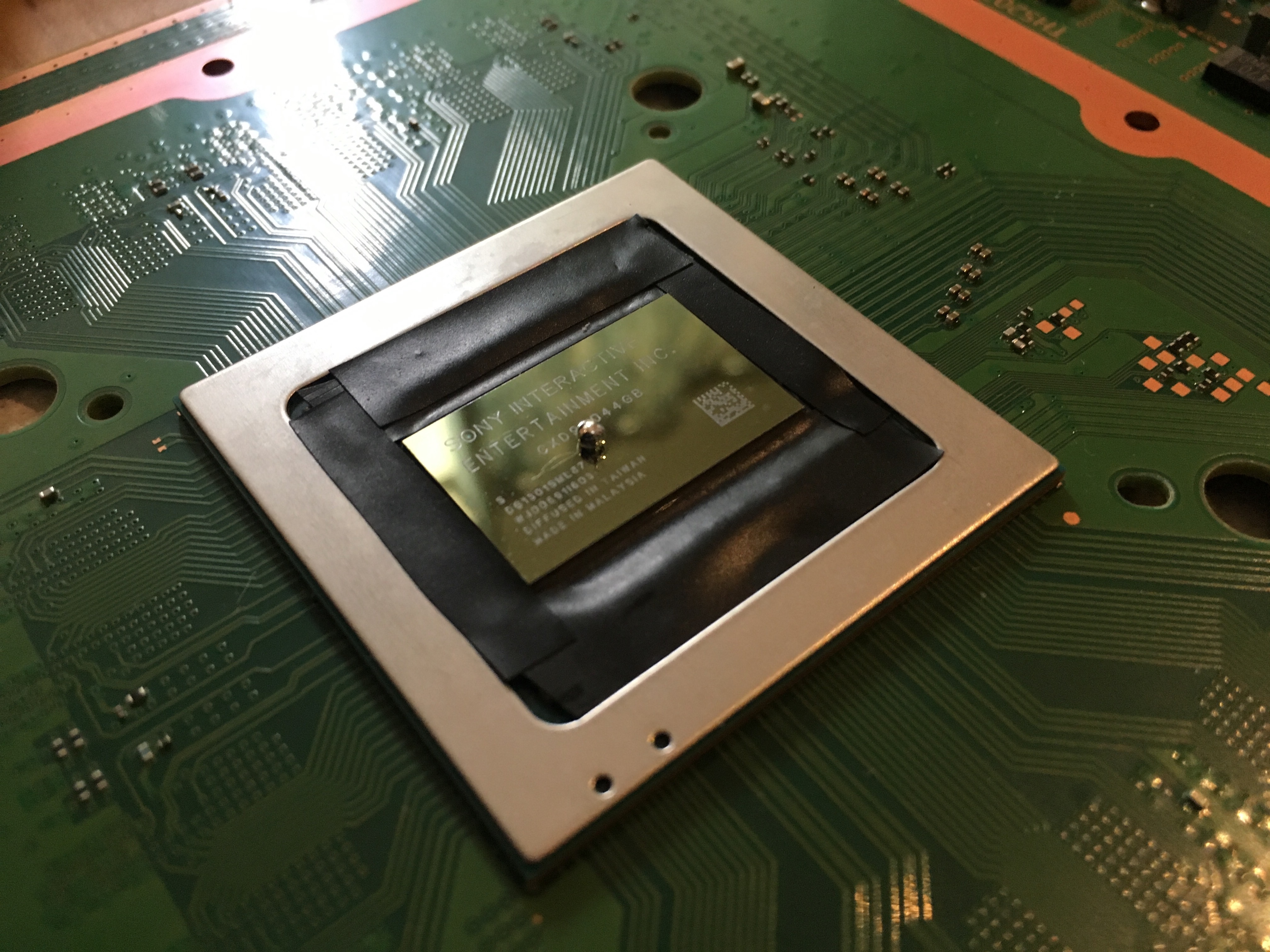

This liquid metal deal fascinates me. Liquid metal thermal interface material (TIM) is great because, like Sony said and like you can probably guess, it's a really good thermal conductor, better by an order of magnitude than the ceramic paste you normally see on CPUs. It also has two very big problems. Firstly, being mostly based on gallium, it reacts with copper (aka the thing most heatsinks are made of) and oxidises in air, which among other problems will gradually ruin the thermal interface. Secondly, being metallic, it will short basically anything on the mainboard it comes into contact with. Because of these problems, metal TIMs are typically only seen a) inside commercial CPU packages, between the silicon die and the heat spreader, which is fully sealed off and almost never accessed by consumers, and b) in enthusiast custom builds, where the builder is free to tackle these problems (or be ignorant and get bitten by them) as they wish, usually by working very carefully, committing to some maintenance, or knowing they'll probably replace most of it in a few years anyway. What I'm getting at here is that it is a bold choice to use a liquid metal TIM in a mass-market consumer electronic device like the PS5, especially one that's intended to work in various orientations, be carted around in backpacks, and be allowed anywhere near children. The teardown video mentioned "two years of research" and alluded to some patents. I can only presume that all this work went into figuring out how the hell to work around the problems inherent in putting interesting liquid gallium alloys inside your electronics. It seems a little reductive to suppose that the sum total fruit of two years of research is "put an insulating block around it", but I can't really argue with the sense of that.
Perhaps the work went into producing not only a block (which might be made of something interesting) but also a manufacturing method to consistently apply the block, the liquid inside it, and the seal on top of it, all reliably, in a way that ensures a good interface between the SoC and the heatsink that's fully contained and airtight to stop it reacting. Plus I imagine they'll treat the copper contact plate into the bargain; I think that's a thing. It's also possible Sony have developed an entirely new kind of liquid metal TIM that alleviates some of the problems associated with it; in any case I highly doubt they've simply bought a couple hundred vats of Conductonaut. I don't think it's going to be any kind of exotic compound, though, because at some point this stuff still has to be reasonably user-serviceable, I hope? What I really wonder is how this will affect disassembly. Most people don't take apart their PlayStations as far as the actual SoC, and for those that do, ceramic TIM is foolproof enough that you can just tell people to slather some on there and it'll probably be fine (hell, it's not like the factory puts much effort into application either), and you can't really ruin anything by spilling it. Obviously that's not so with liquid metal; the proposition is inherently going to be spicier. My guess is that Sony are taking the approach (not altogether unreasonably) that all bets are off once a consumer is prodding at their silicon instead of the fans, heatsink, SSD or antennae (good god, at least they actually let you get at the cooling assembly without going through the loving mainboard this time around). My point with this is that, with all the fun considerations around liquid metal, it's in everyone's interests to never ever open that thing up if you can help it. And my point with that is, I absolutely cannot wait for the stories of people deciding to do it anyway.
Also, I idly wonder if there'll be some kind of RRoD/YLoD-scale scandal that turns out to arise from this insulating block not being quite as airtight as we hoped... Finally, for a fun fact, because I'm just that kind of person: I actually took apart my PS4 Pro, replaced the factory paste with Conductonaut, and surrounded it with a bunch of electrical tape, in a crude approximation of what turns out to be exactly what Sony made with the PS5. I did this in the full knowledge that it would probably brick the thing eventually (if I did it right; immediately, if I didn't), but three years later it's still going strong and doesn't seem to have degraded noticeably at all (not that I can directly test much of it). While I was at it I also swapped out the fan for one from a later revision that was less whiny. Like many PS4 owners, I was very concerned about noise. Fun afternoon.
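
To put rough numbers on the "order of magnitude" conductivity gap mentioned at the top of this post: treating the TIM as a thin slab, the temperature drop across it is roughly ΔT = P·t/(k·A). The sketch below assumes a 200 W load, a 50 µm layer over a 3 cm² contact area, and ballpark conductivities of about 8 W/m·K for a good paste and about 73 W/m·K for a gallium-based liquid metal. All of these are illustrative assumptions, not PS5 specs or measurements.

```python
# A minimal sketch: temperature drop across a thin thermal interface layer,
# modelled as 1D conduction through a slab: delta_T = P * t / (k * A).
# Every input number below is an illustrative assumption, not a measured value.

def tim_delta_t(power_w: float, thickness_m: float, k_w_per_mk: float, area_m2: float) -> float:
    """Temperature difference (K) across a TIM layer carrying power_w watts."""
    resistance_k_per_w = thickness_m / (k_w_per_mk * area_m2)  # slab thermal resistance
    return power_w * resistance_k_per_w

POWER_W = 200.0      # assumed SoC heat output
THICKNESS_M = 50e-6  # assumed 50 micrometre bond line
AREA_M2 = 3e-4       # assumed 3 cm^2 contact area

paste = tim_delta_t(POWER_W, THICKNESS_M, 8.0, AREA_M2)          # ballpark good paste
liquid_metal = tim_delta_t(POWER_W, THICKNESS_M, 73.0, AREA_M2)  # ballpark gallium alloy

print(f"paste:        {paste:.2f} K")
print(f"liquid metal: {liquid_metal:.2f} K")
```

With everything else held equal, the ratio between the two drops is just the ratio of the conductivities (about 9x with these numbers). Real interfaces also have contact resistance and uneven bond lines, which this ignores.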


Here's the RTings writeup of the LG UM6900. It doesn't have anywhere near the brightness to do HDR justice. RTings also offers some broad suggestions for how to configure it.


John Wick of Dogs posted: "Ok so my tv is bad at it, which confirms my suspicions that HDR is made up"

HDR isn't so much made up as confusing, inconsistently implemented, and invariably extremely poorly explained and understood. But it's real, and good. The problem is contrast. The whole point of HDR is to be able to display bright highlights and dark (preferably black) shadows at the same time (and to have a signal that offers better control over these details, but that's a whole sub-rabbit-hole). Therefore, to display it correctly, a TV needs to be able to get one part of a frame tens of thousands of times brighter (or more) than another part of the same frame. There are a couple of ways to do this, but if your TV has a single uniform backlight with no local dimming and can only shine up to 300 nits in the first place, then it simply cannot be done; maybe you can squeeze an HDR signal into a 50-300 nit range, but it will look like garbage. (OLEDs are the best for this despite their generally limited brightness of "merely" 700 nits or so, because they can get perfectly black at the pixel level, giving them infinite contrast.)
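
Contrast ratio is just peak luminance divided by black level, which is why a panel that can hit true black wins even at modest brightness. The displays below are hypothetical examples (a 300-nit LCD with a roughly 1000:1 native panel, a brighter LCD with local dimming, and a 700-nit OLED whose black is treated as exactly zero), not measurements of any specific TV.

```python
import math

def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Static contrast ratio; infinite when the black level is exactly zero."""
    if black_nits == 0:
        return math.inf
    return peak_nits / black_nits

# Hypothetical displays: (peak luminance, black level), both in nits.
displays = {
    "300-nit LCD, no local dimming": (300.0, 0.3),
    "1000-nit LCD with local dimming": (1000.0, 0.05),
    "700-nit OLED": (700.0, 0.0),  # pixels switch fully off
}

for name, (peak, black) in displays.items():
    ratio = contrast_ratio(peak, black)
    text = "infinite" if math.isinf(ratio) else f"{ratio:,.0f}:1"
    print(f"{name}: {text}")
```

Note that the dimmer OLED comes out ahead of both LCDs: raising the peak helps, but lowering the black level helps far more.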


BeanpolePeckerwood posted: "the new tv jargon shuffle every 3-4 years is literally the least compelling branch of consumer commodity affection in existence"

This is both true and a little unfair. HDMI (and HDMI 2.0 and HDMI 2.1), 4K, HDR, VRR, contrast, brightness, bit depth, colour gamut, framerate and whatever else are all real terms with real meanings which deliver real benefits. It's also true that different people will care or not care about each of these to differing extents, and that it is very much in the interests of TV manufacturers in particular to convince you that all of these things are earth-shatteringly important when in fact most of them are just really neat.

The one actual unbelievably good thing about HDMI is that it's fully backwards compatible, so whatever HDMI 1.0 display from 2002 maxing out at 1080p/60Hz/8-bit will function perfectly with the PS5 if it still powers on at all. All of the stuff layered on top of it since then is great and represents meaningful strides in our capacity to get sources and displays to talk to each other by means of pushing loving fundamental particles down god drat copper wires to render the shimmering worlds of our dreams on ridiculous contraptions, which we sometimes forget is an enormously complicated endeavour that frankly I still can't believe was ever made to work, let alone made anywhere near easy for consumers without a background in electrical engineering.

Each one of these things delivers a big improvement. If you actually care, I can tell you in very specific detail what VRR accomplishes, what you might expect to gain, and why you might consider spending more than you otherwise would on a TV that supports it. Or, if you don't care, you can simply continue not to care, because it's fine not to care about this stuff, mostly. But that doesn't make any of it less real, or less compelling, or anyone excited about it wrong (unless maybe they've been lied to by TV manufacturers).
E: Quantum of Phallus posted: "My brother has a 144hz monitor for his PC and the first time I just moved the mouse around the desktop it broke my mind. I've been Mac since 2010 and everything Apple put out is 60hz (not sure about the new Cinema Display but I think it's 5K60 over thunderbolt) so it was a real eye opener."

Apple's very pro-est lines of stuff cater to professional video crowds, all of whom are producing content ultimately destined for 24 or 30fps, so it follows that their most singing, most dancing displays focus primarily on colour accuracy, contrast and clarity rather than on refresh rate. The iPad Pro specifically has its 120Hz display for the benefit of artists who will value the immediacy of feedback when drawing on the screen, and all the marketing focuses on that. I don't think Apple really understands (or maybe just cares about) the implications of that screen for gaming.

Fedule fucked around with this message at 18:04 on Oct 14, 2020


This is kinda what I meant about HDR being neither consistently implemented nor consistently understood. ...But let's be clear about one thing: the SDR implementation wasn't great either, nor was it particularly simple, but you could use it without doing any setup and it would work, kinda. In fact, the whole reason we have these per-game calibration screens in the first place is that people eventually figured out it'd be simpler to handle that stuff at the game level, per TV, than to expect people to open up their TV settings menus per game. Also, the consequences of doing this wrong were largely the same: crushed blacks, blown-out whites, generally a loss of detail everywhere. The HDR version of this is exactly the same adjustments and exactly the same pitfalls, except instead of working in a system where the game says "this pixel is 100% bright and this one is 4% bright", it's in a system where the game says "this pixel is 600 nits and this one is 0", and the display is expected to know what to do with that. The process hasn't actually gotten any more complicated; we just understand now that we should prompt users to do some initial calibration. The fact that Assassin's Creed wants you to input the loving peak brightness rating of your TV rather than move some sliders around is a failure of Ubisoft, not of the HDMI Consortium, both because that's a lovely thing to expect consumers to calibrate with (who the gently caress actually knows the peak brightness stats of their TV? I'm a giant nerd about this stuff and I had to look it up) and because they should be reading the system-level calibration from the console anyway, which is something I hope we'll all be better about this gen than last.
The way PS4 does HDR calibration at the system level is great, honestly. It's a very simple process with very clear parameters that basically anyone can follow, with visibly confirmable results (find the furthest setting at which the symbol is still visible), and you only have to do it once, until you switch TVs. And, yes, there are a hundred different tweakables on every TV that do all sorts of very fine-grained things, but that is also not a new thing, and you can still take as much or as little interest in that as you please.
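
The "this pixel is 600 nits" idea comes from the PQ transfer function (SMPTE ST 2084) used by HDR10, which maps signal values to absolute luminance up to 10,000 nits; a display that can't reach a requested value has to tone-map it somehow, which is what the calibration step informs. Below is a minimal sketch of the PQ encode/decode pair, plus a deliberately naive hard clip standing in for tone mapping. The clip function and the 600-nit display peak are hypothetical illustrations; real TVs and games use smoother roll-off curves.

```python
# PQ (SMPTE ST 2084) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0

def pq_encode(nits: float) -> float:
    """Absolute luminance (nits) -> normalised PQ signal value in [0, 1]."""
    y = max(nits, 0.0) / PQ_PEAK_NITS
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1 + C3 * ym1)) ** M2

def pq_decode(signal: float) -> float:
    """Normalised PQ signal value in [0, 1] -> absolute luminance (nits)."""
    e = signal ** (1 / M2)
    return PQ_PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)

def naive_clip(nits: float, display_peak_nits: float) -> float:
    """Hypothetical stand-in for tone mapping: anything too bright is clipped."""
    return min(nits, display_peak_nits)

for requested in (0.0, 100.0, 600.0, 4000.0):
    signal = pq_encode(requested)
    shown = naive_clip(pq_decode(signal), display_peak_nits=600.0)
    print(f"{requested:7.1f} nits -> signal {signal:.3f} -> shown at {shown:.1f} nits")
```

A hard clip like this throws away all detail above the display's peak, which is exactly the "blown-out whites" failure; knowing the real peak (from a calibration screen) is what lets a smarter curve compress highlights instead.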


univbee posted: "The signal cutting out is a problem that exclusively happens with HDR enabled and results in the TV manufacturer and the device manufacturer pointing fingers at each-other. This is a "known" problem with the PS4 Pro and, at minimum, some Samsung and HiSense HDR TV sets, but it's not exactly something that's in any of their marketing."

This is a problem with either cables or TVs, not with consoles or with HDMI or HDR. It happens with HDR because HDR increases the bandwidth required for any given resolution and framerate, and due to outdated cables, degraded cables, overly long cables or a fault in the TV (as plagued several Samsung models), the connection can't accommodate the signal and eventually craps out, resetting the connection (hence the brief blackout). This is what happens when any digital signal fails. It doesn't have anything to do with HDR, save for HDR being the thing that puts the bandwidth over the edge, which is why in your case turning off HDR "solves" the problem. (I sympathise heavily with trying to argue any of this with Samsung's support, though; it took me weeks to convince them to give me a main board replacement, which fixed this problem when I had it.) The fact that every TV calls HDR something different isn't a problem with HDR either; it's a problem with TVs, the same as how they can't agree on what to call motion interpolation, black frame insertion, etc., which all have standard, meaningful names everywhere outside a TV settings menu. Calibrating HDR in the TV menus also isn't different to calibrating SDR: you use the same settings for the same reasons, although most new TVs will use separate settings profiles for HDR and SDR, which is very, very correct.


univbee posted: "I get that it's a bandwidth thing but it only very rarely happens with Xbox One X and has never happened to me with Apple TV 4K and Nvidia Shield with the same cables and inputs used when I was trying to narrow down the cause. Is the PS4 Pro outputting a more bandwidth-heavy signal than all those other devices for some reason?"

Disclaimer: I have never owned an Xbox One. I think this is because of the PS4's automatic "resolution" setting. There are a couple of different signal formats under the 4K umbrella that deal with colour in different ways. The PS4 calls them "resolutions" and lets you choose one or another, or use Automatic, which will not only switch between 1080p and 2160p based on the display on the other end of the line but also switch between 4K in YUV420, YUV422 or RGB (in increasing order of how much bandwidth they eat), and between SDR and HDR for the YUVs (HDR in RGB is not supported at 4K60 over HDMI 2.0 due to... bandwidth). This is great, because nobody wants to have to think about this stuff, ever. The PS4 and TV will tell each other what signals they nominally support, but if something in the connection is dodgy then there will be problems when you actually use them.

This is why, when you're getting the flickering issue from an HDMI bandwidth shortage on PS4, you can sometimes solve it by forcing the PS4 to output in YUV420 instead of leaving it on Automatic, which will try to use YUV422; this can get you under the bandwidth threshold. I gather that the Xbox One lays out your output options a little differently and, if I understand correctly, doesn't try to automatically determine whether to use YUV422, but requires you to enable it as an advanced setting. So I theorise that you're not seeing this on Xbox One because you're not outputting in YUV422 on the 'Bone. Apple TV seems to have a very good capability to test the connection to your TV and set its output accordingly, so it's probably constraining itself.
If you go into the Apple TV's video output settings you'll be able to see exactly what signal it's outputting. Also, if you're matching frame rate and watching Netflix, it might actually be outputting at 24 or 30fps, which will only eat half (or less) of the bandwidth of a console's 60fps output.
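
A rough way to see why chroma format, bit depth and frame rate matter is to count samples per pixel: RGB/4:4:4 carries three full samples per pixel, 4:2:2 averages two, and 4:2:0 averages 1.5. The sketch below counts visible pixels only, ignoring blanking intervals, and compares against an approximate HDMI 2.0 payload of 14.4 Gbit/s (18 Gbit/s TMDS after 8b/10b encoding). It is a relative comparison, not a link calculator: real HDMI transport adds blanking and packs 4:2:2 into a fixed 24-bit container, so actual link rates differ from these raw figures.

```python
# Samples per pixel for each chroma format: one luma sample plus two chroma
# samples scaled by how much the chroma planes are subsampled.
SAMPLES_PER_PIXEL = {
    "RGB / 4:4:4": 1 + 2 * 1.0,
    "YUV 4:2:2": 1 + 2 * 0.5,
    "YUV 4:2:0": 1 + 2 * 0.25,
}

# Approximate usable HDMI 2.0 payload: 18 Gbit/s TMDS after 8b/10b encoding.
HDMI_2_0_PAYLOAD_GBPS = 18.0 * 8 / 10

def active_video_gbps(width: int, height: int, fps: float,
                      bits_per_sample: int, samples_per_pixel: float) -> float:
    """Raw bit rate of the visible pixels only (no blanking, no link overhead)."""
    return width * height * fps * bits_per_sample * samples_per_pixel / 1e9

for fps in (60, 30):
    for fmt, spp in SAMPLES_PER_PIXEL.items():
        for depth in (8, 10):  # 8-bit SDR vs 10-bit HDR
            rate = active_video_gbps(3840, 2160, fps, depth, spp)
            verdict = "fits" if rate <= HDMI_2_0_PAYLOAD_GBPS else "too much"
            print(f"4K{fps} {fmt:12} {depth:2}-bit: {rate:5.2f} Gbit/s ({verdict})")
```

Even on this optimistic count, 10-bit RGB at 4K60 comes out at about 14.9 Gbit/s, just over the HDMI 2.0 payload, while dropping to 30fps halves every figure. A marginal cable that copes with a lighter format can still fall over on a heavier one.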


BeanpolePeckerwood posted: "Does use of HDR wear out panels faster?"

Are you referring to OLED burn-in? If so, overall it'll be kind of different but also kind of the same. By default, the HDR profiles on OLEDs will set the panel's brightness control to max (OLEDs don't have a true backlight, but this is the equivalent setting, often labelled something like "OLED light"), which typically does accelerate burn-in, though you can still turn it back down. But HDR content is broadly darker overall, so it roughly evens out in the end. For any other interpretation of "wearing out a panel", HDR won't be any different to SDR.


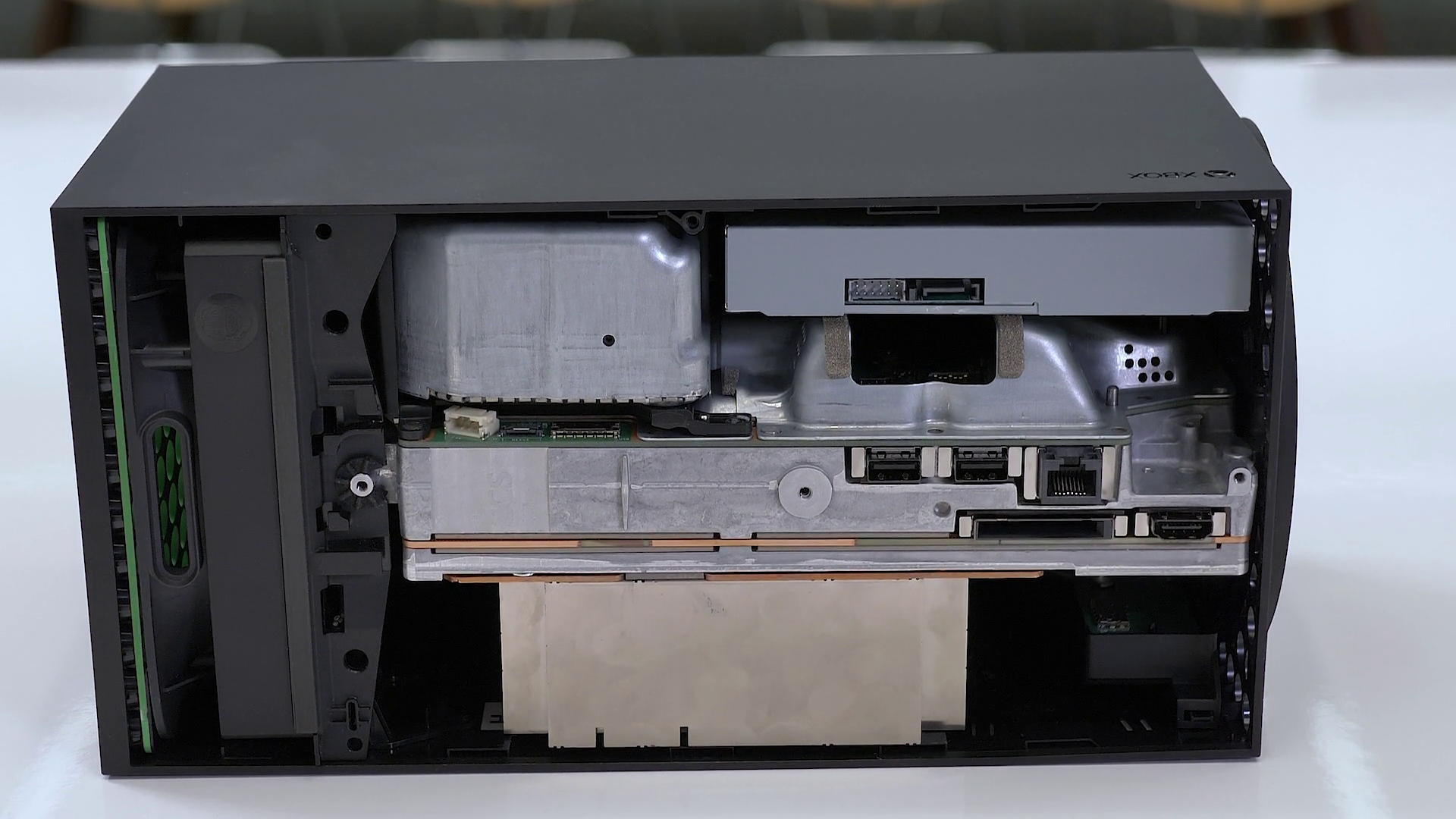

Cooling talk (whoops, the UI stuff came out while I wrote this up, hope you still care about this!): The reason the boring, blocky Xbox Series X gets described as having an interesting cooling system while the weird, uniquely shaped PS5 gets called conservative is that the X is a lot more integrated at the design level. The entire interior of the box is built in a way that accommodates all of its systems together in a fairly compact space (relatively speaking, anyway; the X is still a chonky boi), while the PS5 is very much a computing system with a cooling system layered on top of it. The most interesting thing about the X is that it's got a big thermal division running up the middle of the case. The SoC and its heatsink take up one side, with various other components like the optical drive, the storage and apparently the entire southbridge (I really need to look this up in more detail, honestly) and their heatsinks on the other side. Honestly, this kind of setup seems more like the kind of thing Apple did in their Mac Pro line (particularly the c. 2009 and 2019 models, not the trash can). The most striking thing about it, really, is how small the main heatsink is. Admittedly it's got a vapour chamber in there, which counts for something, but it's still surprising to me. What this suggests to me is that the Xbox is designed to always be pushing up against its limits. Remember that the purpose of a cooling system isn't to keep things cold, but to stop them from getting too hot. There isn't any real difference between a given chip running at 50 degrees and 90, so long as it's not breaking 100 (or whatever the actual safe limits on the Navi are; I just made those numbers up).
The other very important thing to remember about cooling is that things shed heat proportionately faster the bigger the temperature difference between them and whatever is cooling them (something that's 80 degrees in a 20-degree room, a delta of 60, will shed heat three times as fast as something that's 40 degrees in that room, a delta of 20), which means that letting your stuff run hotter allows you to cool it more efficiently (i.e. more quietly). Tell all this to a team that controls the chip, the cooling system design, the OS and the form factor, and what you get is a chip with a known maximum thermal output and a known upper operating temperature limit, connected to a cooling system with a known cooling capacity per degree of temperature delta. There were some reports about the Series X running very hot; I surmise this is because it's designed to run as hot as it can all the time, at a constant temperature, with a cooling system that can keep it from going above that temperature at a given inaudible RPM. And if you run it in a very hot room, they can just deign to make some fan noise. The PS5, in contrast, for all its interesting shape and shiny liquid metal fun, feels more like a desktop PC in that its cooling solution is designed to maximise headroom rather than precisely accommodate some thermal limit: it's just the biggest possible heatsink, with the pushiest possible fan, slapped on top of the SoC. Doubtless they're still very mindful of the limitations and the worst-case operating environment, and like Cerny said, their priority was ensuring that it would behave consistently in any environment; because they have that headroom, they can simply use more of it in a bad environment rather than having to spin the fans up for more airflow.
This is exactly what most desktop PC builds do that aren't either watercooled or using the stock cooler that ships with the CPU (you fools!): you don't go looking up your CPU's thermal output, you just slap on the biggest loving heatsink that will fit in your case and call it a day. Okay, the PS5 has a little more deliberation than that, and still has some bespoke flourishes like the vacuum holes (a very underrated feature if you ask me), but you take my meaning, I think.
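
Both the "three times as fast" example and the run-hot argument above fall out of Newton's law of cooling, where heat flow is proportional to the temperature difference: Q = hA·(T - T_ambient). A minimal sketch, with hA as a single lumped "how good is the cooler" figure in W/K (a bigger heatsink or faster fan means a bigger hA). The 200 W load, the hA of 5 W/K and the temperature targets are made-up numbers for illustration.

```python
def heat_flow_w(h_a_w_per_k: float, temp_c: float, ambient_c: float) -> float:
    """Newton's law of cooling: heat removed is proportional to the temperature delta."""
    return h_a_w_per_k * (temp_c - ambient_c)

def required_h_a(power_w: float, target_temp_c: float, ambient_c: float) -> float:
    """Cooler conductance (W/K) needed to hold power_w at target_temp_c in steady state."""
    return power_w / (target_temp_c - ambient_c)

AMBIENT_C = 20.0

# Same cooler, two chip temperatures: delta 60 vs delta 20.
hot = heat_flow_w(5.0, 80.0, AMBIENT_C)
warm = heat_flow_w(5.0, 40.0, AMBIENT_C)
print(f"80 C chip sheds {hot / warm:.1f}x the heat of a 40 C chip")

# Same hypothetical 200 W load, two different temperature targets.
for target in (60.0, 90.0):
    need = required_h_a(200.0, target, AMBIENT_C)
    print(f"hold 200 W at {target:.0f} C: need hA = {need:.2f} W/K")
```

Allowing 90 C instead of 60 C cuts the required cooler conductance from 5.00 to about 2.86 W/K here (roughly 43% less), which is the "run hot so you can run small and quiet" trade-off. Going the other way, raising hA with a bigger heatsink buys headroom at any given temperature.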


univbee posted: "I don't think that's giving the PS5's design enough credit. You can tell looking at the teardown that they spent a LOT of time and resources on designing every single inch of the system including its contours to precisely figure out the most efficient way to circulate air quietly, as well as the extremely non-trivial liquid metal implementation. There doesn't appear to be any wasted space inside the PS5 at all for what they're trying to do."

Oh, absolutely, there's a ton of design work that went into the thing; they certainly weren't all twiddling their thumbs for six years. I'm just saying, the concept of the PS5 cooling system, removed from any other aspect of its design, is very simple and leans into headroom, while the X's is more tightly integrated and leans into very precise design.


Wiggly Wayne DDS posted: "i know where you're coming from but i'm still pessimistic about the xbox's thermal design choices. there's is a very rough thermals video out today (caveat they really don't know how to use a db meter and seem to limit themselves to 5-10 minute soaks), but there are only series x enhanced titles out not native games so it's peaking at mid-50C and that's where the fan will start to ramp up. until a native title that pushes the gpu comes out i don't think we're getting a good comparison outside of multi-plats"

Well. There's the caveat that I make no claims regarding the X's success with its plan. Maybe they ballsed it up. I'm just saying, if it fails, that's what they're failing at.

Wiggly Wayne DDS posted: "i posted that nikkei article with the interview and asked for someone who knows more a japanese to clarify the technical details as there's some interesting parts in there that are new:"

What I'm getting at with the desktop comparison is that desktop CPU coolers are built around headroom as a rule, because there are so many CPUs at so many specs that the optimal strategy, both for manufacturers and for builders, is to make sure there's always going to be headroom to lean into, rather than to try to figure out any working definition of how much cooling is "enough". To be even blunter, I think the X design team thought "how small dare we make it" while the PS5 team thought "how big can we make it". The details in that Nikkei article are also cool as gently caress (lol). I'm never going to say that there's no impressive, innovative tech work going on inside the PS5. I'm just saying it seems to me like everything the PS5 team did was a matter of maximising the amount of cooling stuff they could cram into the form factor, while everything the X team did was a matter of minimising how much they had to.
Similarly, Stux posted: "it takes more precision in your thermal design to aim for a lower operating temperature while keeping things quiet, and running consoles hot is the exact same design philosophy everyone has used for like 15 years."

Yes, consoles have typically been designed to be small and run hot (original Xbone excepted). They go to great lengths to get their cooling systems operating to very tight specs, typically with low headroom. In other words, it is typical for these systems to be unconventional compared to most PCs. The PS5 is notable for having a design that seems (to me, anyway) to be focused on maximising headroom; in other words, it is unusual in having this in common with PCs. But, respectfully, it does not take precision to make a cooler, quieter system; you just make it bigger.


morestuff posted: "In this case it seems like 'simpler' is also, probably, better. There's a tradeoff on the size but it also means manufacturing defects and failure rates are likely lower"

I'm inclined to agree, and frankly I have always wished Sony would just release a gigantic but silent PS4. Looks like I finally got my wish.


Wiggly Wayne DDS posted: "having more [thermal headroom] seems to be a failure of design is rather bizarre"

This is the opposite of what I believe! I think the PS5's approach is preferable in almost every way to the X's. I wish every console had been doing this from the beginning (even though I understand why the alternative approach might have been preferable to them). I'm all about Big Air.

Wiggly Wayne DDS posted: "rather than fill the ps5 thread with this chat go with that exact line into the gpu thread and see how far it'll take you, we don't really have a generic cooling thread on sa that i recall"

Well, yes, this stuff eventually hits limits. But I think at the power levels and form factors we're talking about here, we're still comfortably inside them (also, I'm talking more about heatsink volume than fan size, although eventually heatsink volume must account for fan size). Of course they could have made the PS5 even bigger, and I definitely get that there's more to it than how big it is, and that the shape is accomplishing more than filling more space. I only mean to say (largely because this all got started in response to a DF video that seemed more impressed with Microsoft's internals situation than Sony's) that, despite the impressive interplay of all the elements in the PS5, the way they've gone about dreaming it up is, from a perspective I can sympathise with, less involved, and makes it less obvious how many things they've accomplished. (We might say that an enormous amount of work has gone into making the PS5's internal design appear effortless.) Like, okay, eventually this will come down to different people being impressed by different things to different extents, and past a point this is all subjective. At the end of the day I get the sense we are both of the opinion that the PS5 is extremely impressive in terms of hardware design and the X is less so; I'm just prevaricating over whether the X should get some points for trying, is all.


In UI news: Activities looks cool but also rather tiresome, and I hope you can block it from displaying. I hope this stuff scales well for those of us on 1080p TVs. Screen-share to Party looks hype as gently caress and I'm all for it, but I also fear developers are going to poo poo all over it by blocking cutscenes just like they did with Share Play. (What I also really hope is that screen-share to Party will not have the arbitrary 1-hour time limit that Share Play had.) RIP: Firewatch Dynamic Theme. You provided many years of loyal service, and were unrivalled.


Heavy Metal posted: "On TV talk, I'll say at the moment I'm not itching for HDR. The talk about how it needs to be really bright to look good etc, that's the opposite of my preference. I keep my TV at 50% brightness and backlight, lower sometimes on PC. I take eye comfort and maybe better sleep over this fancy HDR business."

HDR doesn't, practically speaking, need to be really bright to work. What it needs is very high contrast. On most TVs, you get high contrast by cranking the backlight while using local dimming to turn it off in other parts of the panel, bringing those parts closer to black. If your TV has good enough local dimming, or is an OLED, you can get all the goodness of HDR without leaving the 400-500 nit zone for most of most scenes (HDR is particularly about being able to show bright lights and dark shadows, not necessarily about doing it all the time).


Are there any worthwhile writeups concerning exactly what the deal is with the PS5's APU variable clock trick, or is it just people paraphrasing the Cernycast still? I've never heard it explained entirely to my satisfaction. In particular: they made such a big deal of saying that instead of varying the power, they vary the clock speed. But I always thought that varying the power was how you varied the clock speed; I'm not really sure what the PS5 is doing that's unique.


So let me make sure I have this right (according to my understanding of the currently known information): Ordinarily, in a PC situation, you have your chip idling at a low speed, using however much baseline power it needs to remain on. Then when work comes in, you throw more power at it to do that work, and even more power to raise the clock speed and get the work done faster, until either the work is done or everything gets too hot, at which point you throttle (and meanwhile a cooling system is reacting to the temperature by spinning the fans faster, hopefully preventing throttling). The presumptive kicker is that you can control clock-related power very finely but work-related power less so, making systems susceptible to being overdrawn during worst-case scenarios or torture tests.

The idea with the PS5 is to implement a notional power draw cap that cannot be exceeded, such that when the system is under heavy workload it will downclock itself proportionately in response, possibly (but apparently practically never) all the way back down to the base clock, in order to bring the combined clock+work power draw back inside the limit instead of going beyond it and hoping the cooling can keep up. The numbers for this work out favourably because you can get a fairly large power saving by downclocking a tiny bit. If I'm understanding this right, the innovation is that, somewhat counterintuitively, rather than getting hot and then either hard throttling (disastrous for games) or getting loud (irritating to gamers), the PS5 is actually throttling a tiny bit all the time. Also, because of [insert AMD technobabble], they can independently control the power going to the CPU and the GPU on their SoC, downclock the CPU when it's not in heavy use, and allow the GPU to use more (and presumably the system can still fully downclock and use less power than the fixed limit when idle). (I wonder if we'll hit any problems with a game that's both CPU- and GPU-intensive, like, say, No Man's Sky?)
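
The "downclock a tiny bit, save a lot of power" point can be sketched with the usual rule of thumb for dynamic power, P ∝ C·V²·f: since voltage has to rise roughly in step with frequency, power scales roughly with f³. Under that assumption (a simplification, not Sony's or AMD's actual control model), the clock that fits a fixed power cap is f = f_max·(cap / P_demand)^(1/3). The 2.23 GHz figure is the PS5 GPU's quoted maximum; the power cap, workload demands and floor clock are hypothetical numbers.

```python
def capped_clock_ghz(demand_at_max_w: float, cap_w: float,
                     max_clock_ghz: float, floor_clock_ghz: float) -> float:
    """Highest clock whose estimated power fits under cap_w.

    demand_at_max_w is what the current workload would draw at max_clock_ghz.
    Assumes power scales with the cube of clock (V roughly tracks f, P ~ V^2 f).
    """
    if demand_at_max_w <= cap_w:
        return max_clock_ghz  # workload fits under the cap: run flat out
    clock = max_clock_ghz * (cap_w / demand_at_max_w) ** (1 / 3)
    return max(clock, floor_clock_ghz)  # hypothetical lower bound

CAP_W = 100.0     # hypothetical GPU power budget
MAX_GHZ = 2.23    # PS5 GPU's quoted maximum clock
FLOOR_GHZ = 1.8   # hypothetical floor

for demand in (90.0, 100.0, 110.0, 130.0):
    clock = capped_clock_ghz(demand, CAP_W, MAX_GHZ, FLOOR_GHZ)
    drop = 100 * (1 - clock / MAX_GHZ)
    print(f"demand {demand:5.1f} W -> {clock:.3f} GHz ({drop:.1f}% below max)")
```

Under this model, shaving 10% off the power only needs about a 3.5% clock reduction, since 0.9^(1/3) ≈ 0.965, which is the shape of the trade-off described above. If the floor clock is ever reached, the estimated power would exceed the cap; how the real console handles that case isn't something this sketch models.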


So, yeah, TVs have had 120Hz panels for ages now (since 2006, I think), but for the first few years it was a scam, because they didn't have inputs that could take 120Hz signals, so that refresh rate was only usable if you were using interpolation to fill it out. HDMI 1.4, which launched in 2009, notionally supported 1080p120, but nothing using HDMI for output at the time was really capable of 120Hz output, let alone running a video game at 120fps. Computers and consoles have since advanced a lot. The vast majority of TVs in operation today will support 120Hz input of some kind, although only TVs with HDMI 2.1 (first available in TVs in 2019) can support 120Hz at 4K.
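
Rough arithmetic for why 4K120 needs HDMI 2.1 while 1080p120 fits HDMI 1.4: multiply out the visible pixels at 8-bit RGB (24 bits per pixel) and compare against each version's approximate usable payload (10.2 Gbit/s TMDS for HDMI 1.4 and 18 Gbit/s for HDMI 2.0, both after 8b/10b encoding; 48 Gbit/s FRL for HDMI 2.1 after 16b/18b encoding). Blanking intervals are ignored, so real requirements are somewhat higher; treat this as a ballpark check, not a spec compliance test.

```python
from typing import Optional

# Approximate usable payload per HDMI version, in Gbit/s (line rate x coding efficiency).
HDMI_PAYLOAD_GBPS = {
    "HDMI 1.4": 10.2 * 8 / 10,
    "HDMI 2.0": 18.0 * 8 / 10,
    "HDMI 2.1": 48.0 * 16 / 18,
}

def active_rgb8_gbps(width: int, height: int, refresh_hz: int) -> float:
    """Bit rate of visible pixels at 8-bit RGB (24 bits per pixel), no blanking."""
    return width * height * refresh_hz * 24 / 1e9

def oldest_capable_version(width: int, height: int, refresh_hz: int) -> Optional[str]:
    """First HDMI version (in the order listed) whose payload covers the mode."""
    need = active_rgb8_gbps(width, height, refresh_hz)
    for version, payload in HDMI_PAYLOAD_GBPS.items():  # dicts keep insertion order
        if need <= payload:
            return version
    return None

for w, h, hz in ((1920, 1080, 120), (3840, 2160, 60), (3840, 2160, 120)):
    rate = active_rgb8_gbps(w, h, hz)
    print(f"{w}x{h}@{hz}: {rate:.2f} Gbit/s -> {oldest_capable_version(w, h, hz)}")
```

1080p120 comes out at about 6 Gbit/s, 4K60 at about 12 and 4K120 at about 24, which lines up with the version boundaries described above.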


I'm extremely down to buy some black/dark grey PS5 replacement sides just as soon as they're available from someone with literally any credibility


The thing that worries me is, will this drat thing even fit in a backpack or what


The prevailing ideals at play in designing TV-adjacent electronics are fascinating to me. (I mean, not that they really matter very much to me, practically. Were it up to me, every console from here to infinity would look more or less identical to the Series X, at least on the outside.) The fact is, nobody really cares very much about the aesthetics of these things, not when you push them. You're buying these to play games on; you care about form only in the most abstract sense when considering the thing as an ornament, or when it has a direct effect on function (like trying to fit the thing somewhere, or being vaguely aware that an over-compact form factor combined with inefficient cooling all too frequently bricks the thing). It's not that most people expressly want their consoles and such to be understated in form, but the very practical purpose they serve, and the prevalent idea with TV setups that (not without reason) you don't really want ostentatious elements distracting from the screen and what's on it, render understated designs preferable. Designers know this. Market researchers know what people like (broadly), and want to be the ones to provide it. But market researchers also know that they serve a Brand, and the Brand must have an Aesthetic; it must be unique, it must be characteristic, it must be iconic, there must never be a soul on earth who would look at the thing for a microsecond and not know that it is a PlayStation/Xbox. Therefore, these inoffensive, practical, understated objects also need to loudly scream their origins and intents at you. Everything designed to sit in a living room since the dawn of the phrase "consumer electronics" has served over its lifetime in a proxy war between these ideals. These ridiculous contraptions always want, at the same time, to tuck neatly into a set of shelves and to be front and center as the aesthetic focus of your living room. Consider just the top surfaces of PlayStations.
We went from the necessarily top-loading design of the PSX and the PSone to the perfectly flat top of the PS2, then the same design but top-loading for the PS2 Slim, then to the ostentatiously displayable, Spider-Man-fonted PlayStation 3, to the significantly more restrained PS3 Slim, to the surprise-top-loading-again Super Slim, to the flat but illuminated PS4, to the flat but unilluminated PS4 Pro, and now to whatever the hell we even begin to describe the PS5's aesthetic situation as. Considering their profiles is just as interesting; even as the profile of each PlayStation changed over the years, they all (save, of course, for the top-loading models) retained the characteristic of being basically fine to fit flat inside a tight shelving unit, venting air through the front, back, and sides, but never the top. The PS4's parallelogram profile always seemed to me to be the best compromise to date between just making a box and making something unique and iconic. Contrast the Xbox Ones, taking their profile cues first from VCRs and later, arguably, the PS2, but always making a point of covering that otherwise perfectly flat top surface in extremely necessary vents. The Series S takes a similar view. The Series X, notably, takes a very understated, restrained approach to aesthetics in form, but by virtue of that form demands even more than previous Xboxes to sit out in the open rather than in a set of shelves. (Although apparently Microsoft did quite carefully consider most people's vertical shelf space when deciding how big to make the thing? And consider also the green light coming out the top of it: still relatively understated, but unmistakably Xbox. It probably actually becomes a little irritating with a shelf directly above, honestly.) The PS5, then.
I guess it's notable that for all its ostentatiousness, its large size, and its obvious intention of being positioned vertically next to your TV like the Series X, within its dimensions it will fit horizontally in a tight shelving space, without compromising function, if it has to. That's something. I don't really have a point with any of this, just, like, man, consoles are weird.

|

|

|

|

Notably, if it's so easy to make custom PS5 sideplates, it won't be much harder to make a custom plate which changes the overall profile of the device. As long as the internal shape matches up (so you can actually attach the thing, and presumably retain some subtle but very important airflow characteristics) and you don't cover anything important, there's probably no reason you couldn't have something which turns the whole thing into a cuboid, if you're into that.

|

|

|

|

Neddy Seagoon posted:
Just for argument's sake, wouldn't the extra density cause heat-retention issues inside the case though?

Doubtful. Those plates are a plastic of some kind, so their thermal conductivity will be negligible to begin with. All the cooling happens inside the machine when air drawn in by the fans passes through the heatsink and is then ejected out the back. No matter what you replace the sideplates with, the interior shell will still be plastic and will bottleneck any theoretical passive cooling.

|

|

|

|

https://twitter.com/fedule/status/1320761847767830532 I'm at least happy that more people will join me in my suffering.

|

|

|

|

Policenaut posted:
https://www.youtube.com/watch?v=peX9k5wMqWk

I'm obviously missing something. The download link (iOS) just goes to the PS App I already have, which hasn't been updated in months. I can't find this new app anywhere else on the App Store.

|

|

|

|

I dunno about Demon's Souls (yet), but I'm thinking that once I get PS5'd I might finally give Sekiro another shot. I bounced off it hard. It's not even that things weren't going according to what everyone tells me is the plan (walk in, die repeatedly, eventually don't die), because that's exactly how it was going; it's just that everyone swears up and down to me that this is fun, and I just found it hateful, and eventually I figured The Children Are Wrong on this one and left it. But hey, the game's still on my account, and presumably will get a nice framerate buff on PS5, so maybe it'll click this time? Worst case I'll just ragequit again and play DMC5 instead.

|

|

|

|

I notice the PS App (now that I've got the update) no longer has Activity Feed in it. Is the feed dying? Please, Sony, I'm begging you, give me a way to quickly retrieve recent captures from my phone without having to go through some service I don't care about. Just copy Xbox. It's okay. Nobody will blame you.

|

|

|

|

A lot of this controller stuff winds up being really cool but also broadly impractical, and often only of use to devs who weave it into a game's design from the very beginning. Like, yeah, the technology in the abstract is cool as poo poo. Insert anecdote about the ball-bearing minigame in 1-2-Switch! here. The bubbly water in that one beach kingdom in Mario Odyssey was extremely neat too. The thing is that none of it is trivial to implement, all of it takes a bunch of work to add to a game, and a lot of devs aren't going to bother (especially on Switch, where the Pro Controller, very good as it is, seems to lack the full HD Rumble capabilities of the Joy-Cons). Know what else was extremely cool tech? Kinect. God drat, that thing was impressive. A nifty little package of cameras, chips and circuitry that enabled an API that could track individual fingers on a user's hand at five meters and read the heat patterns on their face. God drat. But, same problem. Cool tech does not equal cool video game controller feature, because tech can get away with only having a few applications, but the whole point of game tech is that it's got to be usable by anyone who rolls up with a game. Kinect was sufficiently difficult to implement that barely anyone managed to get a satisfying implementation working, and what are widely considered to be the best Kinect games are the ones that eschewed the complex 3D tracking and relied on silhouettes instead (e.g. Dance Central, and especially its menus in contrast to literally any other Kinect menu). (Honestly, beyond all of that, the biggest problem with Kinect was that it was completely wasted as a video game controller.) It came up earlier in the thread, but the same tech used in Nintendo's HD Rumble was (and still is) used in the trackpads on Apple's laptop range (and their standalone trackpad accessory) to provide real good clicking action, consistently all over the surface of the pad, without any actual mechanical action.
There, it finds a good niche, because using the trackpad on a laptop is something you'll do (almost) all the time: it integrates seamlessly into existing system functions that everybody is already using, it uses extremely simple actions that users are already used to, and it's easy to take existing elements in some UI and add more click actions to them, resulting in widespread adoption of the enabled functionality. Contrast, on the same range of laptops, Apple's Touch Bar, a pointlessly flashy, highly bespoke, inconsistently supported, multisensory all-singing all-dancing interface. Definitely cool as poo poo still, but we're back to the problem of needing developers to care when they have no reason to go to the effort. Astro's Playroom is, without doubt at this point, going to be an extremely cool showcase for the DualSense controller. What they have been able to make those actuators accomplish is immediately compelling. But Playroom is a completely bespoke experience made by devs who took the time to painstakingly implement all of these features. How easy have Sony made it to get some given pattern you have in your imagination (or which you can have your foley guy produce; this would be foley, right?) into that controller? What does it take to make it move? Is there going to be a range of good APIs to make it work? (An example of a good API off the top of my head might be for the spidey-sense thing in Miles Morales: you provide, say, figures for pitch/yaw/roll and the controller produces a pulse with that orientation.) These are questions we're going to need devs to answer. (Also, watching the Astro demo made me immediately think about The Witness, a game in which a lot of painstaking audio work went into footstep sounds, which for large parts of the game are the only really active audio you'll hear.
It is exactly the kind of game that would have an interest in doing the exact gimmick Astro did, where every surface you walk on also creates a characteristic vibration.) The triggers will, I think, be a little easier. I can imagine the implementation there being very easy to understand: you send the controller a profile that very linearly maps trigger progression to resistance, and... that's it. The only necessary work is creating a profile for any given thing you want a unique trigger on, and a hook to load it up at the right time.

Fedule fucked around with this message at 13:52 on Oct 30, 2020
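To make the "profile that maps trigger progression to resistance" idea concrete, here's a minimal sketch assuming a profile is just a list of (position, resistance) breakpoints, both normalised to 0-1, with linear interpolation between them. The `BOWSTRING` profile and `resistance_at` function are made up for illustration; this is not Sony's actual DualSense API.

```python
from bisect import bisect_right

# Hypothetical trigger profile: (trigger_position, resistance) breakpoints,
# both normalised to 0.0-1.0. Not the real DualSense API.
BOWSTRING = [(0.0, 0.1), (0.6, 0.8), (1.0, 1.0)]


def resistance_at(profile, position):
    """Linearly interpolate resistance for a trigger position in [0, 1]."""
    position = min(max(position, 0.0), 1.0)  # clamp out-of-range readings
    xs = [x for x, _ in profile]
    i = bisect_right(xs, position)  # index of the first breakpoint past position
    if i == 0:
        return profile[0][1]
    if i == len(profile):
        return profile[-1][1]  # at or beyond the last breakpoint
    (x0, y0), (x1, y1) = profile[i - 1], profile[i]
    t = (position - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


print(resistance_at(BOWSTRING, 0.3))  # roughly 0.45, halfway up the first ramp
```

The "hook to load it up at the right time" would then just be swapping which breakpoint list the game hands to the controller when, say, you draw a bow.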

|

|

|

Rad Valtar posted:
No Mans Sky next gen upgrade listed haptic feedback on PS5 as a feature so that kind of invalidates your argument that it is only useful to games from the beginning of their design. We will have to see how well it's used.

What I'm implying here is not that all controller tech other than face buttons and analogue sticks requires completely bespoke game design, but that this is a frequent pitfall of those features. See: many Wii U games' absent or poor use of the tablet; use of the PS4 touchpad generally for anything other than an enormous select button (although some common usages did emerge, like using it for scrolling or using each side as a shortcut); the complete lack of uptake for Xbox's rumble triggers; etc. Difficulty of implementation is a risk to be overcome, and if Hello Games have been able to retrofit it in (and assuming it's good and anywhere near the quality of Astro), then that's a sign that Sony have succeeded. Hell, if you really can patch sound waves in and get vibrations out, then that's more or less the ballgame already.

|

|

|

|

Cicero posted:
Based on the PS4, do we have a good idea how well the PS5 will handle multiple consoles/accounts in the same household? In terms of being able to play with each other, and switch which console you're using for a game without losing saves, not having to buy every game twice, etc.?

DRM, such as it is, should be identical to how it worked on PS4: your account owns the game and can play it anywhere it's logged in (but can only be logged in in one place at a time), and it can designate a primary console on which that account's games can be played by any account, whether or not the owning account is logged in. What I don't know, now I think about it, is whether you can have a primary PS4 and a primary PS5 at the same time. With disc-based games, you can play the game on the disc on any console while the disc is inserted. Beyond that, cloud saves will work as they always have, but there's no specific system by which you can seamlessly start a game session on one console and then move it to another.
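The rules above boil down to a small decision: disc in the drive, or owner's primary console, or owner signed in here. A rough sketch of that logic, as described in the post rather than taken from Sony's actual system; the `Console` class, `can_play` function, and account names are all hypothetical, and it ignores both the one-login-at-a-time rule and the open question of a primary PS4 and PS5 at once.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Console:
    name: str
    primary_for: set = field(default_factory=set)  # accounts that picked this as primary
    logged_in: set = field(default_factory=set)    # accounts signed in right now
    disc_inserted: Optional[str] = None            # title of the disc in the drive


def can_play(console, game, owner):
    """Rough model of PS4-style rules as described in the post (hypothetical)."""
    if console.disc_inserted == game:
        return True  # disc games play on any console while the disc is in
    if owner in console.primary_for:
        return True  # anyone on the owner's primary console can play
    return owner in console.logged_in  # otherwise the owner must be signed in here


living_room = Console("living room", primary_for={"alice"})
bedroom = Console("bedroom", logged_in={"bob"})
print(can_play(living_room, "Astro's Playroom", "alice"))  # True: primary console
print(can_play(bedroom, "Astro's Playroom", "alice"))      # False: alice not signed in
```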

|

|

|

|

Fix posted:
You guys see this? Looks like a gameplay cross between BotW and SotC

Oh hell yes, take the good thing and mash it up with the other good thing and hook it into my veins. This is turning out to be one of my better Kickstarter impulses. I'd actually forgotten about it, but hey, now I get to feel all that hype come back.

|

|

|

|

Vikar Jerome posted:
the 1.01 patch came out the other week

I cannot believe FFVII-R hit v1.0.0 as stable as it was, with only a handful of texture errors, one semi-rare softlock and apparently some unintended speedrun tricks, and then went so long without a patch, which, when it came, was just for PS5 compatibility.

|

|

|

|

OLED burn-in is, to all practical intents and purposes, a non-issue. The issue has been under long-term empirical investigation by blessed website RTings. Their findings suggest that you might start seeing it after 3000 total hours, if:

Under more real-world conditions, you'll see it after around 5000 hours of usage, but again, that's all using worst-case content without any mitigation features. (Those are things like: the screen can detect static images, like some video game HUDs, and dim them at the pixel level; the screen can gradually shift the picture around a little inside the frame when it's on for extended periods (this is tricky to notice, but you can see it; it makes your set look like the bezels are different sizes); and the screen can monitor the state of each pixel over very long periods and gradually adjust how it displays colours, compensating for burn-in.) On top of that, the panels in the latest models are all iterative improvements, although we don't have four years of longitudinal testing data on stuff that came out just this year. Between these panel improvements, the array of mitigation features offered, turning the backlight (OLED Light, on LG sets) down to 80% for SDR (HDR and Dolby Vision are better at not actually going full pelt brightness on stuff that's not a light source), and actually varying what content you display over 5000+ hours of cumulative usage, you will probably not actually see burn-in on your set before you replace it. Look at the RTings test pictures: at the last available data point, 102 weeks (14280 hours), the effects are still extremely mild on everything other than the TVs showing CNN, and barely visible at all on the TV showing Call of Duty. All of which is to say, the CX is far and away the best TV you can buy, if you have the budget for it. The C9 models are about as good, and cheaper, if you can find them, but they're out of manufacture now.
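For a sense of scale, the numbers above can be turned into calendar time. The 102 weeks / 14280 hours figure works out to 140 hours a week, i.e. 20 hours a day every day, which is an inference from those two numbers rather than something quoted from RTings. The 4 hours a day of "normal" viewing below is purely an assumed figure for illustration.

```python
def rtings_hours(weeks, hours_per_day=20):
    # 14280 h / 102 weeks = 140 h/week, implying 20 h/day, 7 days a week
    return weeks * 7 * hours_per_day


def years_to_reach(hours, hours_per_day):
    """Calendar years of use needed to rack up a given number of hours."""
    return hours / (hours_per_day * 365)


print(rtings_hours(102))                    # 14280
print(round(years_to_reach(5000, 4), 1))    # 3.4 (assumed 4 h/day of viewing)
```

So even the real-world 5000-hour figure, which assumes worst-case content and no mitigation, takes a few years to reach at a fairly heavy household viewing rate.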

|

|

|

|

I found the browser handy for pulling up a few test patterns to help me set my picture settings, and for a more reliable speed test than the system one.

|

|

|

|

punished milkman posted:
alright just did some PS5 controller surgery and made the face buttons on it feel way, way better. you can too if you're brave and have one of those $20 tiny screwdriver kits.

This is just a completely nuts thing to do, and I feel compelled to also do it.

|

|

|

|

Lumpy posted:
So thanks to all the good press in this here thread, I picked up an LG CX today.

Many potential pitfalls. Firstly, Instant Game Response is, unless I'm much mistaken, an HDMI 2.1 specialty, so possibly it's not working on that basis alone. You're much better advised to manually set your picture mode to Game while using your console (the CX, webOS TVs generally, and I think most TVs at this point will remember settings per input unless you specifically hit Apply To All Inputs). Also, the webOS TVs in particular (and possibly others) use different profiles for SDR, HDR and Dolby Vision on the same input, so you'll want to dive into settings again manually when HDR comes on. Don't mess too much with the brightness and backlight settings in the HDR profiles, though. (Blessed website RTings has a guide to setting up your settings.) You don't necessarily have to choose Game for your picture mode; you just need to use a profile with all the post-processing options turned off. (Game mode happens to do that by default, but you can do it with other profiles too. Remember that profiles are unique per input, so you're not messing up other inputs by futzing with this stuff on your console.)
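The reason Game mode "doesn't stick" is easier to see if you picture the settings as keyed by both input and signal type. A hypothetical model of that, not webOS's real settings system: the `PictureSettings` class, the "Standard" default, and the assumption that Apply To All Inputs only touches the current signal type are all made up for illustration.

```python
from collections import defaultdict


class PictureSettings:
    """Hypothetical model: one picture mode per (input, signal type) pair."""

    def __init__(self, inputs, default_mode="Standard"):
        self.inputs = list(inputs)
        # Any (input, signal) pair never configured falls back to the default
        self.modes = defaultdict(lambda: default_mode)

    def set_mode(self, hdmi_input, signal, mode, apply_to_all_inputs=False):
        # Only the signal type currently being shown changes, which is why
        # Game mode picked under SDR doesn't carry over when HDR kicks in.
        targets = self.inputs if apply_to_all_inputs else [hdmi_input]
        for target in targets:
            self.modes[(target, signal)] = mode

    def mode_for(self, hdmi_input, signal):
        return self.modes[(hdmi_input, signal)]


tv = PictureSettings(["HDMI 1", "HDMI 2"])
tv.set_mode("HDMI 1", "SDR", "Game")
print(tv.mode_for("HDMI 1", "SDR"))  # Game
print(tv.mode_for("HDMI 1", "HDR"))  # Standard: HDR needs setting separately
```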

|

|

|

|

AndrewP posted:
Okay actually it occurred to me that I also had this issue on my C9 and I fixed it by turning some setting off.. I just can't remember what it was. Definitely try adjusting some post processing stuff.

Well, it was probably everything under advanced picture settings, but most likely it was whatever LG chooses as its unique name for motion interpolation (TruMotion), a feature which by design forces you to always be at least two frames behind the source: it holds frame n while it waits to receive frame n+1, then uses computer fuckery to generate n+0.5 when n+1 comes in, only letting you see frame n when frame n+2 is coming in.
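Putting rough numbers on that: if the interpolator works the way described above (frame n only shown as n+2 arrives), the added delay is two source-frame intervals. This is a sketch of the post's simplified model, not LG's actual processing pipeline, whose real latency will also include other stages.

```python
def interpolation_delay_ms(source_fps, frames_held=2):
    # Per the description above: frame n is held until n+1 arrives so n+0.5
    # can be generated, and n is only displayed as n+2 comes in.
    return frames_held * 1000.0 / source_fps


def display_order(n_frames):
    """Order frames leave the TV: n, then the generated n+0.5, then n+1, ..."""
    out = []
    for n in range(n_frames - 1):
        out += [float(n), n + 0.5]
    out.append(float(n_frames - 1))
    return out


print(display_order(3))                        # [0.0, 0.5, 1.0, 1.5, 2.0]
print(round(interpolation_delay_ms(60), 1))    # 33.3 ms at 60 fps
print(round(interpolation_delay_ms(30), 1))    # 66.7 ms at 30 fps
```

At a 30fps source that's the best part of 70 ms added on top of everything else, which is very much the kind of thing you'd feel in Rocket League.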

|

|

|

|

The preorder situation has been completely nuts. Apparently they're Just Selling That Well, although of course Sony would say that. I've been signed up for alerts on Amazon (UK) since they supposedly went through the first batch, and it's been silent ever since. I've never seen anywhere stock them, and I've never known anyone to manage to pre-order one. I get the feeling I'm just gonna have to wait, put in an order after launch, and it'll get here when it gets here.

|

|

|

|

|


|

Was it always the case that Amazon UK listed PS5 games as releasing November 12th? E.g. Demon's Souls.

|

|

|

I set it up following a game setup guide (turned on "Instant Game Response" blah blah blah) but it still seems to have a ton of input lag. I am coming from a plasma, which I think had little / none. Any tips / tricks, or do I have to suffer through being even worse at Rocket League?
