One can trivially CPU-bottleneck any program by doing dumb things. A faster CPU will be more tolerant of bad programming. Game programmers are awful and it's a miracle anything good ever gets produced. Doing something like cranking up the resolution, using higher-res meshes and textures, or throwing on a few post effects won't really affect your CPU. Pushing out draw distance, increasing effects, using higher quality animations, or increasing the amount of detail props (grass, clutter, non-gameplay debris) will tax your CPU. Those can all actually be offloaded to the GPU, but no one is going to do that because everyone's terrible.
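
To put rough numbers on that, here is a toy model rather than a measurement. It assumes the CPU and GPU work on frames in parallel, so whichever side is slower sets the frame rate. The function names and all the millisecond costs are made up for illustration.

```python
def frame_time_ms(cpu_ms, gpu_ms):
    # Assumption: CPU and GPU overlap, so the slower side sets the pace.
    return max(cpu_ms, gpu_ms)


def fps(cpu_ms, gpu_ms):
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms)


# Hypothetical per-frame costs, chosen only to illustrate the point.
baseline = fps(cpu_ms=8.0, gpu_ms=10.0)     # GPU-bound
higher_res = fps(cpu_ms=8.0, gpu_ms=20.0)   # more pixels: only GPU cost grows
more_props = fps(cpu_ms=25.0, gpu_ms=11.0)  # more objects: CPU cost balloons

print(baseline, higher_res, more_props)
```

In this sketch the resolution bump halves the frame rate without touching the CPU cost, while piling on props moves the bottleneck to the CPU even though the GPU cost barely changed.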

| # ? May 24, 2024 11:33 |

EdEddnEddy posted: WTF is going on with the FX-9590 vs the FX 8XXX ones below it?

I'm guessing the FX-9590 is throttling itself randomly, since it was always a ridiculous power hog and was basically a max factory OC right out of the box. Lots of people had trouble getting theirs to run even at the "stock" clocks, which would explain the very low yellow bar.

While I do want nice shiny things like a 6700K, my 5 GHz 2500K has lasted me five and a half years and it's probably only going to be replaced when it explodes.

That's a really good picture/summary, thanks.

THE DOG HOUSE posted:

AMD guys like to get down. You should see what happens when Joe Macri parties. That dude has gotten drunk with just about everyone in the industry. Yes, that includes me.

Durinia fucked around with this message at 19:59 on Jun 3, 2016

Watermelon Daiquiri posted: Huh. So it is, though I don't know enough about PCIe to know whether two cards can share the JTAG, SMBus and wake reactivation pins like that...

Those don't really matter. You'd need the PCIe root port to support bifurcating to 2 x8, and then also add a PCIe clock buffer to drive REFCLK to each downstream card. If you wanted, you could share the other pins as well with some simple muxes and stuff, but it all sounds like more trouble than it's worth.

Serious Sam (the most important game in the list) makes it worth the upgrade.

Ak Gara posted:

What GPU and what clock speeds for the CPUs?

Seamonster posted: What GPU and what clock speeds for the CPUs?

Exactly. If the 2500K isn't running at 4.3 GHz or higher, this chart is sorta worthless.

Zero VGS posted: With a bifurcated Mini-ITX like the newest ASRock stuff, you can (theoretically) use a custom ribbon cable to split the single x16 PCIe port into two x8, like with this: http://www.ebay.com/itm/331051900725

Counterpoint: that's really dumb, just buy a 1080 and don't mess around with that stuff.

Dual 1080s or 1080 Tis and we can talk about it.

Paul MaudDib fucked around with this message at 19:25 on Jun 3, 2016

Seamonster posted: What GPU and what clock speeds for the CPUs?

Source as found by a reverse image search: http://arstechnica.com/civis/viewtopic.php?t=1248499

Looks to be a Titan with everything stock and no HT.

Peanut3141 posted: Source as found by a reverse image search: http://arstechnica.com/civis/viewtopic.php?t=1248499

Yup, which means the numbers for a 2500K with a +50% overclock are even better (when compared against a 4790K; I'd love to see one against a 6700K).

Debating the swap from a Pentium G3258 to a 4790K in the HTPC since I can snag one for around $170. Not like I really NEED it for the HTPC, as it's only driving a 760 and I have no idea when I may upgrade that, but it would be for the future proofing and the ease of not having to worry about finding a Z97-compatible i7 K version down the line that isn't used after being OC'ed to hell for years.... Another thing I don't NEED, but do want... That and a Xeon 1680V2 for my X79 board...

EdEddnEddy posted: Debating the swap from a Pentium G3258 to a 4790K in the HTPC since I can snag one for around $170. Not like I really NEED it for the HTPC, as it's only driving a 760 and I have no idea when I may upgrade that, but it would be for the future proofing and the ease of not having to worry about finding a Z97-compatible i7 K version down the line that isn't used after being OC'ed to hell for years....

I'll take it if you don't want it.

Yeah, it's utter overkill for an HTPC. A G3258 is fine, maybe an i3-4170 at most. By the time an HTPC needs that much power you won't want to run one of those power-guzzling Haswells when you could be running the fancy new Photronic 9000 instead. After all, it has built-in H268 decoding and DisplayPort 3.2.

It'd make a nice server or games machine though.

Paul MaudDib fucked around with this message at 19:56 on Jun 3, 2016

JnnyThndrs posted: Exactly. If the 2500K isn't running at 4.3 GHz or higher, this chart is sorta worthless.

It also looks like it is measuring average frame rates. Where I have seen the most gains over the various generations of CPUs is in the minimum frame rate, and minimum frame rate is mostly what I would care about when shopping for a CPU for a gaming computer.
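
A quick sketch of why the two numbers can tell different stories. The frame times below are invented for illustration, not taken from the chart, and `fps_stats` is just a hypothetical helper name.

```python
def fps_stats(frame_times_ms):
    """Return (average fps, minimum fps) for a list of per-frame times in ms."""
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # The slowest single frame determines the minimum instantaneous fps.
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Two made-up runs, each rendering 10 frames in 200 ms.
smooth = [20.0] * 10            # every frame takes 20 ms
spiky = [10.0] * 9 + [110.0]    # mostly fast, with one long hitch

smooth_avg, smooth_min = fps_stats(smooth)
spiky_avg, spiky_min = fps_stats(spiky)
print(smooth_avg, smooth_min)
print(spiky_avg, spiky_min)
```

Both runs average the same frame rate, but the second one has a hitch that an average-only chart would hide completely.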

But that minimum framerate you're looking so hard at might actually be tied more to motherboard memory bandwidth than to any function of actual CPU power. The proliferation of DDR4 chipsets has clouded the picture a bit there.

Seamonster posted: But that minimum framerate you're looking so hard at might actually be tied more to motherboard memory bandwidth than to any function of actual CPU power. The proliferation of DDR4 chipsets has clouded the picture a bit there.

Absolutely, but it's sort of moot since you won't be getting DDR4-3200 bandwidth without an upgrade to Skylake anyway. 16GB of DDR3-2800 is a minimum of $200 and 16GB of DDR3-3000 runs you $400; the highest speed DDR3 you can get for a reasonable price is DDR3-2400. When you also factor in the IPC increases you get from going from Sandy to Skylake and the features you get with newer chipsets and mobos, it starts to make more sense to just upgrade the whole CPU/mobo/RAM combo instead of trying to get more memory bandwidth to increase minimum frame rates.
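
For a sense of scale, here is the back-of-envelope math on peak theoretical bandwidth. It assumes a dual-channel setup with standard 64-bit (8-byte) channels, which is what mainstream Sandy Bridge and Skylake desktop boards use. Real-world throughput will be lower, and the helper name is just for illustration.

```python
def peak_bandwidth_gbs(transfers_mt_s, channels=2, bus_bytes=8):
    """Theoretical peak in GB/s: transfers per second x bytes per transfer x channels."""
    return transfers_mt_s * bus_bytes * channels / 1000.0


# Speed grades mentioned in the post, dual channel assumed.
for name, rate in [("DDR3-2400", 2400), ("DDR3-2800", 2800),
                   ("DDR3-3000", 3000), ("DDR4-3200", 3200)]:
    print(name, peak_bandwidth_gbs(rate), "GB/s")
```

On paper the jump from DDR3-2400 to DDR4-3200 is about a third more peak bandwidth, which is the gap those expensive DDR3 kits would be trying to close.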

sauer kraut posted: Go to desktop resolution in the nvidia control center, and set output colour depth to 'full'

This, and if you haven't already, run through the color calibration for the monitor and use the NV Control Panel color settings to tweak everything (under Adjust desktop color settings). Just pick the personalized settings, leave NVCP open, and then open the Windows display calibration so you can tweak settings in real time. You'd be amazed how much of a difference it makes.

Paul MaudDib posted: Counterpoint: that's really dumb, just buy a 1080 and don't mess around with that stuff.

You know how the saying goes... Once you go Freesync, you never go Gsync. [because I already have a nice Freesync monitor]

Edit: Plus, you can't do that trick with the GTX 1080s because so far they all insist on keeping the DVI port taking up the second slot. They seriously need to hook the DVI piece up to the board with a detachable ribbon cable, like those low-profile cards where you can mess with the VGA port placement.

Zero VGS fucked around with this message at 21:35 on Jun 3, 2016

The need for single-slot high-end graphics cards is honestly nonexistent.

Besides, they're already in laptops and their cooling fans sound like vacuum cleaners.

AVeryLargeRadish posted: Absolutely, but it's sort of moot since you won't be getting DDR4-3200 bandwidth without an upgrade to Skylake anyway. 16GB of DDR3-2800 is a minimum of $200 and 16GB of DDR3-3000 runs you $400; the highest speed DDR3 you can get for a reasonable price is DDR3-2400. When you also factor in the IPC increases you get from going from Sandy to Skylake and the features you get with newer chipsets and mobos, it starts to make more sense to just upgrade the whole CPU/mobo/RAM combo instead of trying to get more memory bandwidth to increase minimum frame rates.

That's exactly what I'm going to do... eventually... oh, and don't forget the PCI-E SSD!

Don Lapre posted: The need for single-slot high-end graphics cards is honestly nonexistent.

Who doesn't want to slam four top-end cards together with single-slot waterblocks?

But Nvidia won't let you quad-SLI 1080s and AMD doesn't make top-end cards???

Nvidia will, they just don't want you to because it sucks.

Only the top benchmark seekers need apply (unless there is some sort of buttcoin mining that these can do well now). Outside of that, once you have two 1080s, the only thing you would need to actually stress them is an Apple 5K screen or bigger, and hope that the game has SLI profiles (which, in reality, they haven't been terrible on in current drivers).

On that note, with Apple going from a 1440p Thunderbolt screen to 5K, how long do you guys think it will take before they start rejecting a few batches of those and we get cheap Korean 5K DP displays?

EdEddnEddy posted: Only the top benchmark seekers need apply (unless there is some sort of buttcoin mining that these can do well now).

Dell has been making them already for a while, so if they aren't out now they probably won't be out for a bit.

Yeah, looks like 5K is a bit of a way out. But 4K is coming around a bit per this site. Also Monoprice sort of has a 4K with Overdrive to 5K one, but I have no idea how good that would be. And dang, this is a cheap 4K screen with 3 DP.

EdEddnEddy fucked around with this message at 22:33 on Jun 3, 2016

So uh. There's a tweet chain that's really interesting.

http://wccftech.com/async-compute-praised-by-several-devs-was-key-to-hitting-performance-target-in-doom-on-consoles/

Roll call of the names involved:

* Tiago Sousa, Lead Renderer Programmer @ id Software
* Dan Baker, Co-founder Oxide Games
* James McLaren, Director of Engine Technology @ Q-Games
* Mickael Gilabert, 3D Technical Lead @ Ubisoft Montreal

That aside, I think the real news here is that Andrew Lauritzen, who works at Intel, makes an appearance in there:

https://twitter.com/ryanshrout/status/738263437118054400
https://twitter.com/AndrewLauritzen/status/738387457876463618

So maybe we'll see Intel doing async compute on their iGPUs down the line?

Yes... https://twitter.com/R3DART/status/738841676312158208

Zero VGS posted: You know how the saying goes... Once you go Freesync, you never go Gsync.

You can literally cut it off, and it will function just fine. Linus showed it a few times on his channel; it's the same as being not connected to anything.

EVGA or similar even made a single-slot bracket for the original Titan, I think. People were desoldering the DVI ports.

SwissArmyDruid posted: So uh. There's a tweet chain that's really interesting.

Compute on GPUs is inherently asynchronous. You kick off a workload on them and it will execute in a massively parallel fashion and shove the result in some memory to be retrieved later. What AMD does differently is expose multiple dedicated compute queues, which lets you submit multiple workloads at one time that have the potential to execute in parallel given the right conditions (i.e. if you are rendering a depth-only scene which doesn't do any fragment processing, you can have the shader cores do compute work instead of sitting idle). In contrast, Intel GPUs expose one universal queue, and Nvidia exposes multiple universal graphics/compute queues which do who knows what under the hood.

The_Franz fucked around with this message at 02:07 on Jun 4, 2016
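
A toy timing model of the depth-only example, purely to illustrate the idea. This is not how any real driver or GPU schedules work. The pass durations, the shader utilization fractions, and both function names are invented assumptions.

```python
def serial_ms(passes, compute_ms):
    # Single queue, no overlap: compute runs after all graphics passes finish.
    return sum(duration for duration, _ in passes) + compute_ms


def overlapped_ms(passes, compute_ms):
    # Separate compute queue (idealized): compute soaks up shader time that
    # the graphics passes leave idle; whatever does not fit runs afterwards.
    graphics_ms = sum(duration for duration, _ in passes)
    idle_shader_ms = sum(duration * (1.0 - util) for duration, util in passes)
    leftover_ms = max(0.0, compute_ms - idle_shader_ms)
    return graphics_ms + leftover_ms


# Hypothetical frame: (duration in ms, fraction of shader cores kept busy).
passes = [
    (2.0, 0.25),  # depth-only pass: little shader work, cores mostly idle
    (6.0, 1.0),   # main shading pass: shader cores fully busy
]
compute_ms = 3.0  # hypothetical compute job, in ms of full-GPU shader time

print(serial_ms(passes, compute_ms))
print(overlapped_ms(passes, compute_ms))
```

In this made-up frame, half of the compute job hides inside the depth-only pass, so the overlapped total comes out shorter than the serial one. How much of that you see in practice depends on the "right conditions" caveat above.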

http://www.digitimes.com/news/a20160530PD201.html Nintendo is delaying the NX to add VR support. If AMD won the bid for the NX that gives them four platforms for VR. It'll be interesting to see if VR can take off at all but also if it does where it'll do the best.

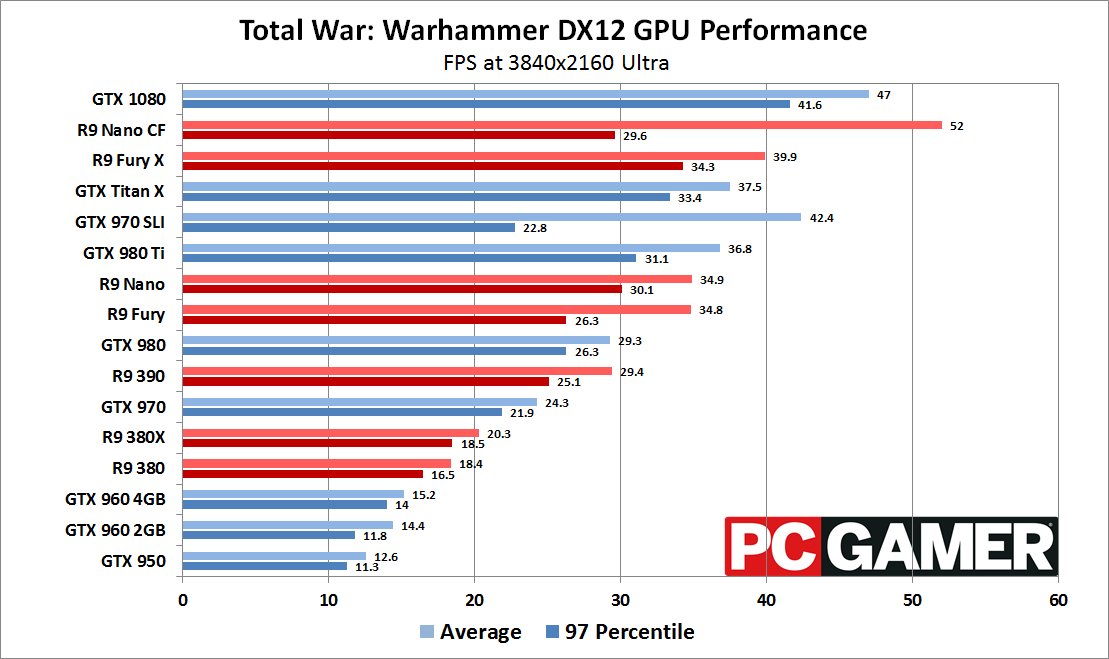

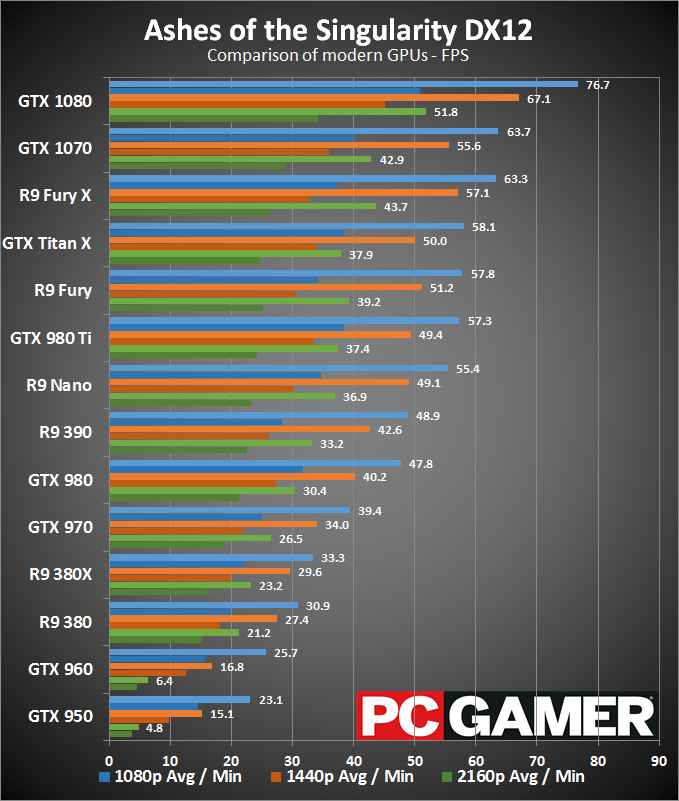

I recently bought a 4K monitor with FreeSync. My 970 is definitely getting a little long in the tooth, and I was wondering what the best way to get to 4K is with AMD's current lineup, seeing as they don't seem to have any high-end updates coming down the line until next year. It looks like you might get a used R9 Fury on eBay for 350ish. What's the state of Crossfire these days if I wanted to run two?

DonkeyHotay posted: I recently bought a 4K monitor with FreeSync. My 970 is definitely getting a little long in the tooth, and I was wondering what the best way to get to 4K is with AMD's current lineup, seeing as they don't seem to have any high-end updates coming down the line until next year. It looks like you might get a used R9 Fury on eBay for 350ish. What's the state of Crossfire these days if I wanted to run two?

Depends what games you play:

Only you can decide whether 10 additional FPS and Freesync are worth $350.

Out of curiosity, does your Freesync monitor support Low Framerate Compensation?

KingEup fucked around with this message at 02:25 on Jun 4, 2016

OK, but I was mostly asking about Crossfire, which still comes in cheaper than the street prices for a Founders Edition.

DonkeyHotay posted: OK, but I was mostly asking about Crossfire, which still comes in cheaper than the street prices for a Founders Edition.

Crossfire support is game dependent. Those Total War benchmarks above include Crossfire R9 Nano.

DonkeyHotay posted: AMD's current lineup, seeing as they don't seem to have any high end updates coming down the line until next year

edit: And yes, CF is very game dependent. It works great when the game supports it but sucks when it doesn't. If it works for the games you play and you don't care about performance in the games where it doesn't (I didn't back when I used CF), then it could be perfect for you, but it's something to consider if you're not sure.

PC LOAD LETTER fucked around with this message at 02:38 on Jun 4, 2016

Vega was originally roadmapped for 1H 2017, but there has been a leak/rumour that it has been moved to October/Q4 2016.
