|

I don't like having multiple machines that do the exact same thing just because corporations engage in anti-competitive practices. There are enough games on one platform. I like PC because of maximum flexibility and framerate.

TheCoach posted: Do note that "4GB card is enough for you" only applies if you never do any 3d work on your machine and never mod games.

I'm used to doubling VRAM with every upgrade. It just seems weird to upgrade from 1440p to 4k with real time ray tracing without increasing memory at all.

|

|

|

|

|

| # ? Apr 29, 2024 09:04 |

|

I think it would be a great idea for Nvidia to release cards with not enough ram, because the cards are coming out in less than a month and the new consoles aren't. If it were true that there's not enough vram, then they're guaranteeing that people will have to buy better cards next refresh if the console ports perform badly. If they future-proof them, then they'll make less money.

|

|

|

|

This conversation is reminding me of that fiasco with the Nvidia card that had like 3.5 GB of VRAM and then 0.5 that was kinda there but wasn't? I didn't experience it first-hand but the stories made it seem like it was a huge headache for anyone who encountered it.

|

|

|

|

gradenko_2000 posted: This conversation is reminding me of that fiasco with the Nvidia card that had like 3.5 GB of VRAM and then 0.5 that was kinda there but wasn't? I didn't experience it first-hand but the stories made it seem like it was a huge headache for anyone who encountered it.

the 970 completely punched above its weight so you had anyone with a tech youtube account trying to get clicks to show why this was just a trick and amd will release a better card any day now

|

|

|

|

The 970 was the most bang-for-your-buck card I ever bought and I only upgraded to a 1070 because it broke

redreader posted: I think it would be a great idea for Nvidia to release cards with not enough ram, because the cards are coming out in less than a month and the new consoles aren't. If it were true that there's not enough vram, then they're guaranteeing that people will have to buy better cards next refresh if the console ports perform badly. If they future-proof them, then they'll make less money.

|

|

|

|

dang, really? I've been bamboozled by clickbait yet again thanks y'all

|

|

|

|

repiv posted: im the titan with less bandwidth than the 3090

That's probably the 24GB card with double stack memory, they have been rumored to not clock as high as regular 1GB modules for some time.

|

|

|

|

DrDork posted: None. I'm just saying if you want RTX, that's gonna take a bunch 'o VRAM, while separately pointing out that I think 4GB cards are going to really start to struggle in the next year or two.

4 GB cards have until *checks sheet* basically the next actual next gen game is released

As it is, the other aspects beyond memory will probably constrain any of the current 4GB models I can think of. (clockspeed, no rtx, etc)

E: also lol at that game needing 14GB of system ram, wtf

|

|

|

|

Wiggly Wayne DDS posted: you were completely misinformed, which isn't surprising given how much bs was being spread then. the 970 had a 4GB of ram with 0.5GB of it being slower, but due to how nvidia handled ram allocation and profiled it off of games it only got noticed months after release in extremely synthetic tests. i'm talking "let's move 4GB of ram around on a card with 4GB of ram", things a game will never do and it took extreme scenarios like that for any implementation issues to be noticeable

Within 3-4 months after the card released people started noticing stuttering in certain games and observed the cards were only allocating 3.5 GB VRAM, then other people started analyzing the problem with ~extremely synthetic tests~ and figured out that the card could only use 3.5 GB of the 4 GB of VRAM at full speed. The driver/memory management tried to get around that limitation as much as possible and yes in many games of the time it wasn't noticeable because 3.5 GB of VRAM (+ a slower 512 MB "buffer") was good enough. Not in all games though, and if you played games or used applications that actually wanted more than those 3.5 GB it was definitely noticeable or people wouldn't have complained about it. In newer games that regularly use more than 3.5 GB you can actually see the GTX 970's frametimes dip lower than the usual performance difference to contemporary 4 GB cards.

Nvidia only admitted to the crippled memory setup once people started flinging poo poo their way, they could have avoided the entire controversy by simply marketing it as a 3.5 GB card, but chose not to.

|

|

|

|

Yeah the last 0.5gb is pretty crippled. Due to the way Nvidia cut the chip down from the 980, the first 3.5gb are on a 224 bit bus, and the last 0.5gb is on a 32 bit bus. 1/7th the memory speed is not exactly a minor difference. It is functionally a 3.5gb card. Not all that many situations where it matters, though.
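The 1/7th figure above falls straight out of the bus widths. Here is a rough sketch of the arithmetic, assuming the card's rated 7 Gbps effective GDDR5 data rate per pin (that number and the helper name `peak_bandwidth_gb_s` are not from the post, they are just for illustration):

```python
# Back-of-the-envelope peak bandwidth for the two GTX 970 memory segments.
# Assumption: 7 Gbps effective data rate per pin; bus widths are from the post.

def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps

DATA_RATE_GBPS = 7.0  # assumed, not stated in the thread

fast = peak_bandwidth_gb_s(224, DATA_RATE_GBPS)  # the 3.5 GB segment
slow = peak_bandwidth_gb_s(32, DATA_RATE_GBPS)   # the last 0.5 GB segment

print(f"fast segment: {fast:.0f} GB/s")
print(f"slow segment: {slow:.0f} GB/s")
print(f"slow segment runs at 1/{fast / slow:.0f} of the fast one")
```

The ratio only depends on 224 vs 32 bits, so it stays at 1/7 whatever data rate you plug in.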

|

|

|

|

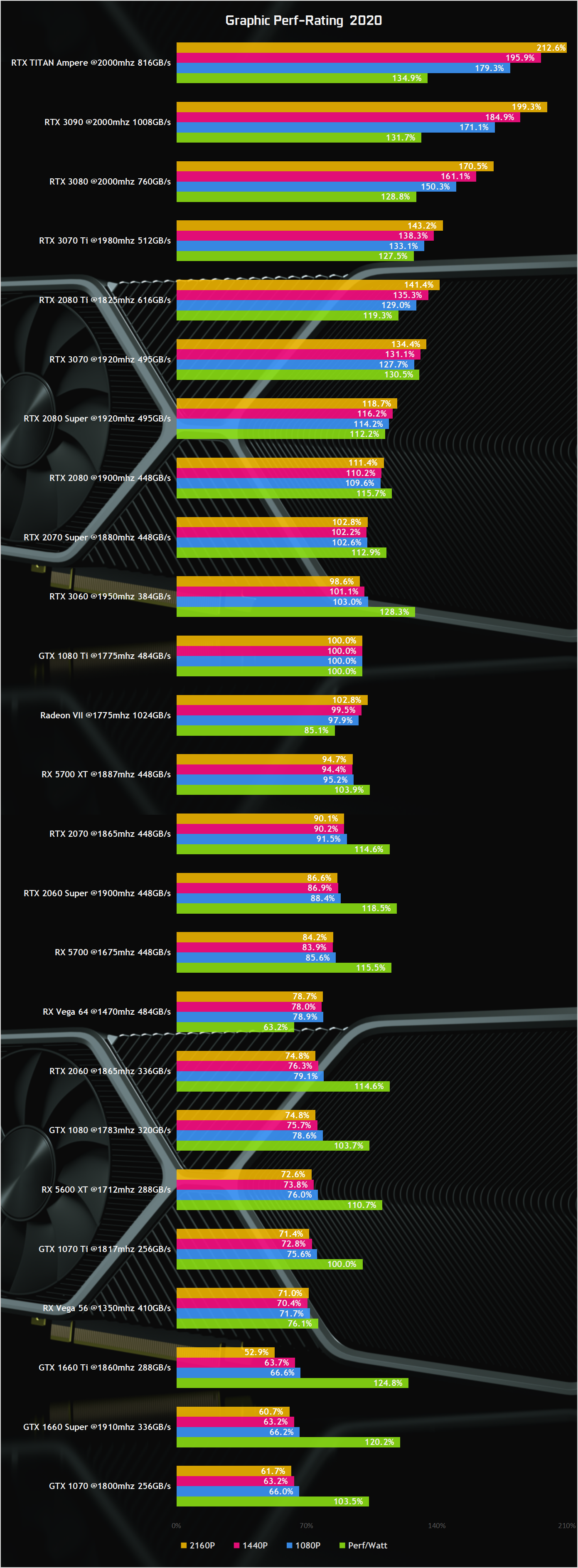

lol if true, the 3060 is basically a 1080Ti but 28% more power hungry?

|

|

|

|

jisforjosh posted: lol if true, the 3060 is basically a 1080Ti but 28% more power hungry?

Other way around, it's 28% more efficient.

|

|

|

|

I just want to know how long it's going to take me to financially recover from it

|

|

|

|

Beautiful Ninja posted: Other way around, it's 28% more efficient.

Oh poo poo, ok that makes more sense. Thought that was power consumption

|

|

|

|

From the info so far it sounds like while performance/watt will increase, performance/$ is going to remain static which sucks. New AMD cards are probably the only thing that would force Nvidia to drop their prices, at least for the mid range.

|

|

|

|

Mercrom posted: I don't like having multiple machines that do the exact same thing just because corporations engage in anti-competitive practices. There are enough games on one platform. I like PC because of maximum flexibility and framerate.

Yeah, but at the same time, a whole bunch of that was driven by the need to simply have more VRAM than the last gen so they could slap the bigger number on the box and make it obvious to less-savvy customers that bigger is better. AMD, in particular, did this as a method to try to offset their performance deficit vs NVidia. It didn't matter that basically no game used that extra space, it just needed to be on the box!

Now we're at the point where doubling the VRAM would be a very expensive proposition, so they're not going to be so quick to throw gobs of it onto a card unless they need to. Or are putting it on a $2000 card in which case whatever, you paid for it.

|

|

|

|

Keep in mind, it's not inverse power draw, it's fps per watt, basically. So the 3070 is giving you ~30 more frames but charging you ~30 more wattage per frame, which is kind of a concern.

|

|

|

|

Subjunctive posted: Yeah, I am really looking forward to the 7th and 8th cores being of material value for a game I’m playing, but I’m not holding my breath.

I'm going with the complete opposite prediction! The biggest performance jump for next gen consoles is actually going to be 1T performance going from low power cat cores to zen2. Given that, developers are going to give up on working really hard to eke out performance from parallelism since they don't have to and we're going back to fast single core supremacy! My 2500k will rise again!

|

|

|

|

Lockback posted: Keep in mind, it's not inverse power draw, it's fps per watt, basically. So the 3070 is giving you ~30 more frames but charging you ~30 more wattage per frame, which is kind of a concern.

Unless I'm missing something, 130% perf/watt would be 30 more frames at the same wattage assuming a 100fps baseline, which yields less wattage per frame, not more.

Edit: not to say that that chart is accurate or w/e. Just hypothetically.

VorpalFish fucked around with this message at 14:54 on Aug 17, 2020
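For anyone who wants the perf/watt arithmetic spelled out, here is a minimal sketch using the 100 fps baseline from the post. The 250 W baseline power is a made-up placeholder so the numbers are concrete, and the chart itself is still unverified:

```python
# Worked example: what "130% perf/watt" means for watts per frame.
# Assumptions: 100 fps baseline (from the post), 250 W baseline power (hypothetical).

BASELINE_FPS = 100.0
BASELINE_WATTS = 250.0      # placeholder value, not from the chart
PERF_PER_WATT_GAIN = 1.30   # the chart's claimed 130% perf/watt

# Same power budget, 30% more frames.
new_fps = BASELINE_FPS * PERF_PER_WATT_GAIN

old_watts_per_frame = BASELINE_WATTS / BASELINE_FPS
new_watts_per_frame = BASELINE_WATTS / new_fps

print(f"fps at the same wattage: {new_fps:.0f}")
print(f"watts per frame, before: {old_watts_per_frame:.2f}")
print(f"watts per frame, after:  {new_watts_per_frame:.2f}")
```

Watts per frame drops by a factor of 1/1.3 (about 23% less), regardless of which baseline wattage you pick.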

|

|

|

Lockback posted: Keep in mind, it's not inverse power draw, it's fps per watt, basically. So the 3070 is giving you ~30 more frames but charging you ~30 more wattage per frame, which is kind of a concern.

No, the 3070 is providing 30% more fps at the same power consumption of a 1080ti.

|

|

|

|

VorpalFish posted: Unless I'm missing something, 130% perf/watt would be 30 more frames at the same wattage assuming a 100fps baseline, which yields less wattage per frame, not more.

poo poo! I got it backwards too right after I piggybacked on the "you got it backwards post"! Yeah ok that makes way more sense.

|

|

|

|

That 3080 (if the chart is real) is reaching the magic 170% of 1080ti performance for me, I just hope I don't need to remortgage my house for it.

|

|

|

|

Zedsdeadbaby posted: That 3080 (if the chart is real) is reaching the magic 170% of 1080ti performance for me, I just hope I don't need to remortgage my house for it.

Same, though I think pricing is more going to determine whether I try to grab one near launch or wait for Zen3 before I grab one, more than whether or not I'll grab one at all.

|

|

|

|

DrDork posted: Same, though I think pricing is more going to determine whether I try to grab one near launch or wait for Zen3 before I grab one, more than whether or not I'll grab one at all.

Zen3? Is that third party manufacturers? this will be my first purchase of a gfx card at or very near launch, only taken till my mid thirties to have gaming disposable income.

|

|

|

|

MonkeyLibFront posted: Zen3? Is that third party manufacturers? this will be my first purchase of a gfx card at or very near launch, only taken till my mid thirties to have gaming disposable income.

I can just imagine you sticking a monster 3090 in your early 2000s Hewlett Packard prebuilt and wondering why it blew the PSU the gently caress up lmao

Zen 3 is the architecture name of the upcoming Ryzen CPUs, much like Ampere is to GPUs, Zen 3 is a big deal on the CPU side of things and is also just around the corner. Lots of people are anxious to get a zen 3 and ampere system.

|

|

|

|

MonkeyLibFront posted: Zen3? Is that third party manufacturers? this will be my first purchase of a gfx card at or very near launch, only taken till my mid thirties to have gaming disposable income.

Zen 3 is the name of AMD's next iteration of CPUs. Their current ones are Zen 2 (most chips in the 3000/4000 naming scheme). Basically I don't need a new system right now so the question for me is whether it's going to be worth picking up a new GPU at launch, or whether I might as well wait a few months for the new CPUs to drop and just do a whole system rebuild at once.

|

|

|

DrDork posted: Same, though I think pricing is more going to determine whether I try to grab one near launch or wait for Zen3 before I grab one, more than whether or not I'll grab one at all.

I'm going to be realistic and know that I am not going to have the money till next year. I'm looking at handing my 1080ti to my girlfriend for her upgrades so it will be a matter of when. Canada always gets hosed over in supply and pricing with anything tech related.

|

|

|

|

|

I don't know where you want the APU discussion, but Hot Chips just dumped a bunch of info about the AMD APU in the upcoming Xbox: https://www.tomshardware.com/news/microsoft-xbox-series-x-architecture-deep-dive

|

|

|

|

Zedsdeadbaby posted: I can just imagine you sticking a monster 3090 in your early 2000s Hewlett Packard prebuilt and wondering why it blew the PSU the gently caress up lmao

Haha I have built before but it has always been mid/budget range, so now I'm just sussing out what's what.

|

|

|

|

After careful moral consideration I have determined that if the 3090 is more than $1499 I'm not buying it, gently caress that sheit. A man has values, god drat it.

Also that GPU comparison chart looks like some bullshit my guys. (says the guy who always posts wccftech)

e: Random question: I currently have a 6600k in my machine @4.1 ghz while I wait for glorious Zen 3. Is that going to seriously bottleneck my poo poo until then, assuming 4k/120? kinda thinking it will but I also know that 4K gaming is relatively GPU intensive... it's starting to look like my machine is going to have major issues until it gets a new CPU afaik. Unfortunately the Zen 3 rumors keep sliding the release date closer to end of year (in theory).

Some Goon posted: Yes, 8 thread minimum. Assuming your GPU can push sufficient frames at 4k.

Yeah. Assume I'll shortly have at least a 3080/Ti/90/what the gently caress ever. Sounds like it's going to be a paperweight for a while though. gently caress. That almost makes me want to get a Zen 2 and slot in a 3 later, it's so wasteful though. But it's also wasteful as gently caress to buy a nice new card and spend potentially months without the ability to use it fully. It's like a car; the initial time you have with it, relatively, is the most "costly". I have some thinking to do.

Taima fucked around with this message at 18:39 on Aug 17, 2020

|

|

|

Yes, 8 thread minimum. Assuming your GPU can push sufficient frames at 4k.

|

|

|

|

The most disturbing thing about a $1500 3090 isn't the $1500. It's the near certainty of a x80 non-Ti part costing 4 figures.

|

|

|

|

Taima posted: Yeah. Assume I'll shortly have at least a 3080/Ti/90/what the gently caress ever. Sounds like it's going to be a paperweight for a while though.

I wouldn't worry too much. Let's assume that they announce in September. Realistically that means you probably wouldn't have a card in your hands until the end of the month. Current rumors have Zen 3 in October. So potentially not a big gap for you there.

|

|

|

|

Seamonster posted: The most disturbing thing about a $1500 3090 isn't the $1500.

Honestly I still find that extremely hard to believe, but I will admit that moving the 3080 to ga102 is definitely a sign that they may be trying to raise the bracket. My money is still on more like $849-899 or something. Still a lot for a base x80 though.

DrDork posted: I wouldn't worry too much. Let's assume that they announce in September. Realistically that means you probably wouldn't have a card in your hands until the end of the month. Current rumors have Zen 3 in October. So potentially not a big gap for you there.

Ah ok that's good to hear. If it's October that works!

|

|

|

|

Annual reminder that product names are 1 part marketing, 0 parts anything else. Being uncontested at the top end I wouldn't be surprised if Nvidia holds perf/$ flat again and just adds some higher perf tiers.

|

|

|

|

Taima posted: After careful moral consideration I have determined that if the 3090 is more than $1499 I'm not buying it, gently caress that sheit. A man has values, god drat it.

It's going to depend on the game. Skylake cores are still plenty fast but there are some games out there that get stuttery on only 4T. If you already have the 6600k and are gonna jump on zen3, I probably wouldn't buy zen2 in the interim only to replace it like 3 months later or whatever, but you do you.

|

|

|

|

Some Goon posted: Annual reminder that product names are 1 part marketing, 0 parts anything else. Being uncontested at the top end I wouldn't be surprised if Nvidia holds perf/$ flat again and just adds some higher perf tiers.

That's exactly why I'm so skeptical and I made a post about that recently. From a marketing perspective, it makes far more sense to just sku up rather than make a ton of people mad by making the x80 a grand. But this is Nvidia, so the outside chance remains.

VorpalFish posted: It's going to depend on the game. Skylake cores are still plenty fast but there are some games out there that get stuttery on only 4T. If you already have the 6600k and are gonna jump on zen3, I probably wouldn't buy zen2 in the interim only to replace it like 3 months later or whatever, but you do you.

That's fair. I was thinking more like an end of year launch anyways, if it's set for more like october, that's fine. I'm usually fairly pragmatic and willing to wait for new stuff instead of buying holdover tech and then just going "well I'll upgrade later if it makes sense". But the prospect of buying a nice GPU and not being able to really utilize it for, say, 4 months, is rough. Sounds like it's more like 2 though, which is manageable for sure.

Taima fucked around with this message at 18:51 on Aug 17, 2020

|

|

|

Watch nvidia switch to hex so they have more digits to play with

|

|

|

|

If that chart is true, going from 2070S to 3080 will give me ~60% more performance. That's before DLSS2 I presume. Which is nice. Because I want one for CP2077 and WD:L. Mad RTX framerates

|

|

|

|

|


|

Taima posted:

Here's what you're missing from not having 8T: https://www.anandtech.com/bench/product/1544?vs=1543

Mostly... not much impact @ 4K (some 99th percentile differences, which is where you'll see it the most). These are mostly older games, but Ashes and GTAV are still common benchmarks.

Basically, I doubt the 6600K is going to hold you back very far if you're mostly targeting around 60FPS. If you end up getting a 3080 or w/e then I'd say CPU is definitely on the list but I wouldn't rush anything at this point. Honestly the best advice is to not buy something today to try to future proof tomorrow. Run that 6600k until you start actually playing games where it's a problem, THEN upgrade.

|

|

|