|

I am running Ubuntu 16.04 and I need to image my boot drive to a new 256 GB SSD. The BIG issue is that the boot drive is a 256 GB USB drive, and I know there are some issues when you image between device types. The USB has been configured to keep as much cache off of it as possible, but I am still worried about the finite writes on USB. An SSD would be better, which is the reason I am doing this. What would everyone advise as a starting point? Some things I am thinking of and need some feedback on:

1) Which utility to use? rsync (I am still not good at understanding the huge number of options; I have only used it for simple directory moves) or simple dd? There are two ext4 partitions on the USB disk: one for boot and one for everything else. I am worried about dd hitting an error on the USB and slowing down to slower than slow; the alternative to dd is ddrescue, which skips bad blocks until it's done moving the rest of the good blocks.
2) It is probably safer to export the ZFS drives and redo the zpool again, but I would love not to have to do this, since I would have to reconfigure Plex.
3) grub is going to be poo poo to redo.
4) dd can't copy from a bigger drive to a smaller drive. I need to find out the number of blocks the SSD has to see whether I can even dd it.

EVIL Gibson fucked around with this message at 20:40 on Jan 28, 2017 |
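For point 4, here is a rough sketch of the size check, assuming Python 3 is on the box and the script is run with enough privileges to open the devices. The function names are made up for illustration; the only real mechanism used is seeking to the end of the device to get its size in bytes.

```python
import os


def size_in_bytes(path):
    """Return the size of a regular file or block device by seeking to its end."""
    fd = os.open(path, os.O_RDONLY)
    try:
        return os.lseek(fd, 0, os.SEEK_END)
    finally:
        os.close(fd)


def fits(source, target):
    """True when a raw, dd-style copy of source would fit on target."""
    return size_in_bytes(source) <= size_in_bytes(target)


# Example call (device names are placeholders, not taken from the post):
#   fits("/dev/sdX", "/dev/sdY")
```

Two "256 GB" drives from different vendors rarely have exactly the same byte count, so comparing the real numbers before starting a dd or ddrescue run seems worth the thirty seconds.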

|

|

|

|

| # ¿ Apr 27, 2024 04:25 |

|

eames posted:multiple hosted servers and recording them directly to ACD "for fun".

I'll admit, I don't use Amazon's cloud enough to know that acronym, so I thought you meant he was recording it to the photo manager ACDSee.

For an actual NAS question: I am trying to move away from SMB and go to NFS. I see a lot of tutorials on how to set up different permissions for different IPs/IP ranges, but I am trying to find a way to have read-only access for anonymous users while having rw for an authenticated user. There are no really good guides for doing this through zfs, except for those long rear end zfs set sharenfs="too many characters" commands.

For those who have done it before: is creating a share outside of zfs easier, or is the fact that zfs brings up the shares as soon as the mounts are ready the better option?

|

|

|

|

GokieKS posted:Back when I used NFS (before Apple completely hosed up NFS auto-mounting in one of the recent versions which prompted me to just give up and move to netatalk/AFP instead of NFS, since slow SMB performance on Mac clients was the only reason I used NFS, and even that required a lot of tuning), I used the "zfs set sharenfs" command.

I have SMB up and running. It's great. The reason I would like to move to NFS is that I always hear it's more efficient at transmitting without needing hacks like increasing the MTU on my machine. That might be complete garbage, but I would like to take full advantage of the ARC.

|

|

|

|

apropos man posted:Because I had difficulty accessing shares from one, so I also installed the other while I worked out what was going to be the best option. I usually wouldn't have both of them in an everyday situation either. I wish Emby were as slick as Plex, though. It's just not quite there in terms of UX but has the advantage of being a bit more specific with watch folders.

There's also the fact that you can replace Emby's version of ffmpeg with one you built yourself to get hardware decoding and encoding. Plex used to use ffmpeg before they went closed source, but they have been cock teasing hardware support for the past year or two. Emby does it properly, prioritizing the GPU first and then the CPU.

|

|

|

|

evol262 posted:I'm gonna assume you mean decoding here. Accel has been there for a desktop, and it's practically required for a number of DEs. Uh, they do really well. Not sure what you are looking at to make that case. Also, are you confusing branch prediction with matrix transformations?

|

|

|

|

evol262 posted:No, I'm not.

So I assume you are running the preview version where you can turn on hw transcoding, because the current release of Plex does not support it? Do you specifically configure your Plex to only use 2 cores? Do you optimize your videos for easier streaming (ac3 to aac, ASS subs to movtext, mkv to h264???), because no test I can run gets on-the-fly transcoding numbers anywhere near what nvenc does.

EVIL Gibson fucked around with this message at 02:55 on Jun 28, 2017 |

|

|

|

apropos man posted:I've went with Emby as my video player. I'm interested in compiling my own ffmpeg, as mentioned upthread, and using it as the custom transcoder.

What are you running? I have a guide that certainly helped me out. Also, what type of card?

|

|

|

|

apropos man posted:No GPU, just the Skylake 6100 to do transcoding. CentOS 7 headless host running CentOS 7 VM with emby. Gigabyte X150-ECC motherboard with 16GB unbuffered ECC DDR4.

First check whether your ffmpeg already has Quick Sync enabled:

code:

Then you can try to encode a file by itself and see if it works properly. You should see h264_qsv listed as the codec the video is being encoded to.

code:

Here is a guide on how to install the prerequisite libraries and compile a version of ffmpeg on CentOS. I am not familiar with your VM, but qsv may not be available in a sandboxed environment. https://www.intel.com/content/dam/www/public/us/en/documents/white-papers/quicksync-video-ffmpeg-install-valid.pdf

EVIL Gibson fucked around with this message at 20:46 on Jun 28, 2017 |
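The commands from this post did not survive, so here is a rough stand-in rather than what was originally posted. It assumes Python 3.7+ and an ffmpeg binary on the PATH; `ffmpeg -hide_banner -encoders` and `-c:v h264_qsv` are real ffmpeg options, while the helper names and the file names are made up for illustration.

```python
import shutil
import subprocess


def matching_encoders(listing, needle="qsv"):
    """Return the lines of an `ffmpeg -encoders` listing that mention needle."""
    return [line.strip() for line in listing.splitlines() if needle in line]


def ffmpeg_encoder_listing():
    """Run `ffmpeg -hide_banner -encoders`; return "" if ffmpeg is not on PATH."""
    if shutil.which("ffmpeg") is None:
        return ""
    result = subprocess.run(
        ["ffmpeg", "-hide_banner", "-encoders"],
        capture_output=True,
        text=True,
        check=False,
    )
    return result.stdout


def qsv_encode_command(src, dst):
    """Build (but do not run) a test encode that asks for the h264_qsv encoder."""
    return ["ffmpeg", "-i", src, "-c:v", "h264_qsv", dst]


# Typical use:
#   print(matching_encoders(ffmpeg_encoder_listing()))
#   subprocess.run(qsv_encode_command("sample.mkv", "sample_qsv.mp4"))
```

If the first call prints nothing, the build has no Quick Sync encoders compiled in and the test encode will not get anywhere.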

|

|

|

EssOEss posted:This may sound like a silly question but why are you guys encoding video on your NAS systems? Is it a case of overcoming format incompatibilities or what?

For me, I have mkv files, and that format cannot be streamed as easily as h264. Subtitles, forget about it. Transcoding to formats that are easier for the end client to render saves bandwidth and avoids network saturation, especially if you are supporting multiple streams. My phone does not need 4K resolution and 5.1 sound. 720p with stereo is good enough, but I sure don't want to pre-encode every single movie and show to 720p (which Plex allows you to do by "optimizing").

|

|

|

|

IOwnCalculus posted:You seem to be mixing up container and video codec

I mean Matroska video and whatever you want in mp4 haha. I am really getting familiar with the differences and why tools like mp4automator exist 😀

|

|

|

|

apropos man posted:I tried to compile it earlier tonight and couldn't quite pull it off. Kept running into dependency problems as I could only find guides for older versions of CentOS and the Intel suite isn't exactly well explained.

Try that command to list codecs, because I know FFmpeg started automatically building nvenc (Nvidia) into the main distro package for Ubuntu. If you see qsv there, all you'll have to do is install the library files or SDK from Intel, if that.

|

|

|

|

Does anyone else try to order the same size and model of drive from different stores so you'll be pulling from different batches? My biggest worry is that if one drive starts going, the other drives might start going as well at the most critical time, during resilvering. I just remember Seagate drives getting the click of death at a certain read/write count for some drat reason.

|

|

|

|

BobHoward posted:Assuming you're talking about what I think you're talking about, that last one wasn't a QC or manufacturing defect, it was a firmware bug.

Then this was the best kept secret, because me and several others had no clue wtf was going on in 2006 or so. Tech support was awful to look at then. Downloads were not any better, since they all looked like Geocities pages with miles and miles of manufacturer firmwares, and you considered yourself lucky if there was also a link to download the tool to flash the firmware hah

EVIL Gibson fucked around with this message at 20:42 on Jul 3, 2017 |

|

|

|

DrDork posted:There was a time when they were shucking external drives because it was notably cheaper than buying normal internal drives, but I think that was back when they were mostly using 1.5TB drives. Pretty sure everything 2TB and up are "normal" procurements.

Basically, if you can put up with the chatter an HGST puts out, get them for your NAS. But that's why you build networks: so you can keep the drives in a dark, cool, but not humid place.

|

|

|

|

SamDabbers posted:It was designed on Solaris and has more and better-integrated features on it and Illumos distros, but napp-it has basic support for Debian and Ubuntu.

Dang, their page has the best FAQ, written as if I were back in the year 2000 reading the description off a cheap PC component's box. Here are some hot takes:

napp it bitching faq posted:No bitrot

EVIL Gibson fucked around with this message at 20:33 on Jul 10, 2017 |

|

|

|

I remember when I ran a Windows home file server ... thing (I forget the name; it let you build a RAID 0 bastard where you could throw in any size drive, and it made sure there were at least two copies of a file on two disks). The one thing I really appreciated was the hard drive visualizer I used. I think it was built into Windows, but whatever it was, it let you create a rough model of your PC with hard drive images that could be linked to hard drive details. If you knew a disk had failed, you didn't need to play 20 disk pulls to figure out which disk was which. Is there a command line program that can do this with the best (the best) ASCII art, and possibly run commands right there to offline a disk while still showing you the PC contents?

|

|

|

|

IOwnCalculus posted:Reverse proxying is what I'm going to set up on mine.

Is there a good reverse proxy guide? I will have to do that for Emby soon. Some of the ones I am finding that look good are from 6 years ago, and I want to make sure all options are explored.

|

|

|

|

Paul MaudDib posted:

Nvidia locks at 2 streams on my 730. 4 is like a joke if that's true. It's been like 4 years. Now here's a hot tip: AMD doesn't have a lock. You can encode as much as you want. And encoding is something I need to do, like I mentioned before. I would do it using Intel QuickSync, but my Xeon doesn't have it. It is getting old having to predownload stuff to watch it on my phone with Plex, but their work on the transcoder just makes a worse version of ffmpeg that you can't recompile (the loopholes they go through to avoid showing their code on GitHub are hilarious).

|

|

|

|

VostokProgram posted:Maybe you can make a symbolic link to a share instead of mapping the drive?

Another vote for symbolic links, just for the fact that they were created to aid in Unix migrations and compatibility. Just be careful about the flags when making those links.

|

|

|

|

Steakandchips posted:So if I have 2 synology nases, and I have the exact same stuff on both, on the same LAN, but then, i take 1 of them far away, and link them together via the internet, what do I need to do make them continuously synced?

Unison https://www.cis.upenn.edu/~bcpierce/unison/ is built to keep two computers updated with the latest files. It uses the rsync algorithm on the back end to do the diffs and transmission handling, but in both directions, so each computer syncs to the other. Demo directions: https://www.howtoforge.com/tutorial/unison-file-sync-between-two-servers-on-debian-jessie/

|

|

|

|

G-Prime posted:Just be aware that Unison can spaz out very badly when you do a large number of file changes in a short period, or when you change very large files. One of my coworkers uploaded a multiple gig tar file to one of our boxes and ran it completely out of RAM because Unison decided to try to open and read the whole drat thing.

That's strange. That's not how rsync works, and if a program built on rsync is not hashing blocks, then it's not using the best reason rsync is a great tool. gently caress unison then.

|

|

|

|

BobHoward posted:How do you think rsync computes the hashes for each block of a file? It has to read the whole file in, and do the number crunching.

The way it was worded, I thought it was opening the file and pulling metadata out (like "this doc file was last opened by so-and-so"), which makes even less sense if it is really doing block-by-block hashing and not a god drat entire-file hash. If it is transferring the entire file each time it changes, it is not using rsync, period.
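To make the block-by-block point concrete, here is a deliberately simplified sketch in Python. It is not rsync's actual implementation: real rsync pairs a rolling weak checksum with a stronger hash so it can match blocks at any offset, while this version only compares fixed-position blocks. The function names and the tiny block size are made up for illustration.

```python
import hashlib


def block_digests(data, block_size):
    """Hash each fixed-size block of data separately."""
    return [
        hashlib.md5(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old, new, block_size=4):
    """Return indexes of blocks in new that differ from, or do not exist in, old."""
    old_digests = block_digests(old, block_size)
    new_digests = block_digests(new, block_size)
    return [
        index
        for index, digest in enumerate(new_digests)
        if index >= len(old_digests) or old_digests[index] != digest
    ]


# Only block 1 differs, so only that block would need to cross the wire.
print(changed_blocks(b"aaaabbbbcccc", b"aaaaXbbbcccc"))
```

Both things people are saying hold at once here: every byte still has to be read to compute the hashes, but only the blocks whose hashes differ need to be sent.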

|

|

|

|

BobHoward posted:I think you might be a bit confused about how rsync works? (Everything I've already described)

I brought up rsync doing block-by-block hashing several times, and someone already said the original post can be read as the program doing weird things to the files.

|

|

|

|

Paul MaudDib posted:I was wondering the same thing. A proper shutdown should have everything flushed, is it necessary to explicitly export it or is a shutdown enough synchronization?

Export always flushes. Also, it looks like it is possible to convert drives referenced by device assignment (/dev/sdX) to use drive IDs when the zpool is imported again. Here is a thread, but make sure you read all of it, because the asker messes up but then figures out exactly what you need to do. https://ubuntuforums.org/archive/index.php/t-2087726.html
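Before switching, it helps to see which stable names point at which /dev/sdX nodes. A small sketch, assuming a Linux box where /dev/disk/by-id is populated with symlinks (the function name is made up for illustration):

```python
import os


def by_id_aliases(by_id_dir="/dev/disk/by-id"):
    """Map each real device node to the by-id symlinks that point at it."""
    aliases = {}
    for name in sorted(os.listdir(by_id_dir)):
        link = os.path.join(by_id_dir, name)
        target = os.path.realpath(link)  # resolves e.g. ../../sda to /dev/sda
        aliases.setdefault(target, []).append(link)
    return aliases


# Typical use:
#   for device, links in sorted(by_id_aliases().items()):
#       print(device, links)
```

As far as I know, the usual way to make the switch itself is to export the pool and then import it again with `zpool import -d /dev/disk/by-id <poolname>`, but read the linked thread before trusting that on a pool you care about.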

|

|

|

|

Takes No Damage posted:Not sure if there's a better thread to ask this:

The level of money you would probably be willing to spend will get you a company running stuff you can already do yourself, maybe freezing the drive and then hoping the heads can start reading it before it thaws. Now if we are talking about taking it apart platter by platter and scanning each one in, we are easily hitting thousands and thousands.

|

|

|

|

kloa posted:Never tried them, but Noctua makes 1U size fans Delta also makes 1U fans

|

|

|

|

Furism posted:I'm trying to export my ZFS pool so I can import it into another OS later on. But FreeBSD is giving me poo poo: Try "zpool export -f pool1"

|

|

|

|

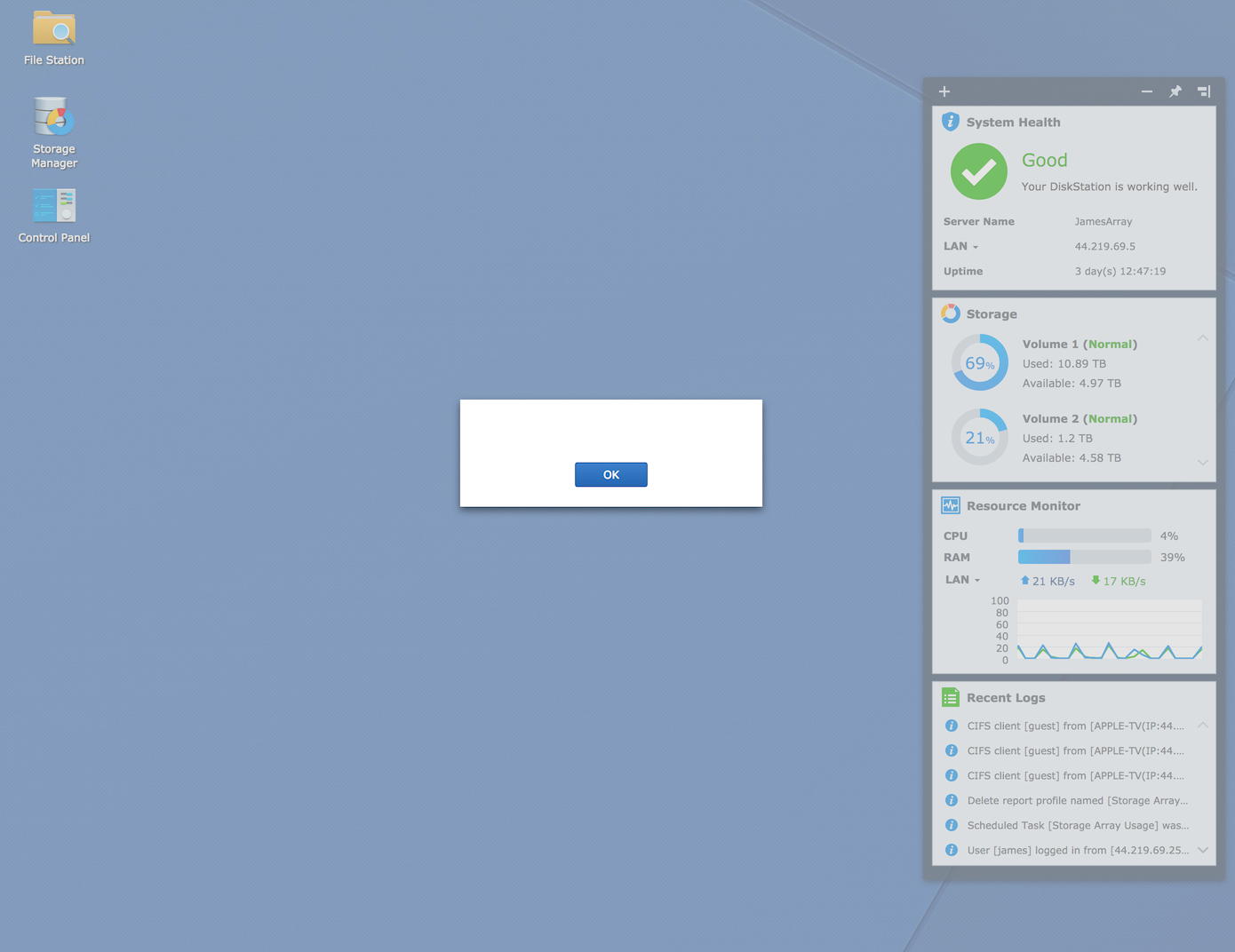

Sniep posted:Anyone know on Synology why now and again I'll go to the admin page UI and it will just be dog slow + constantly pop up empty boxes with just an "OK" button? Joke answer: don't worry, it's just saying everything is OK and wanted to say it. (Is there a process explorer or something similar so you can see what might be causing that?)

|

|

|

|

bobfather posted:Quick question for those in the know:

What you can do is create your 3-disk vdev with two real drives and a stand-in for the missing one. ZFS won't let you create a vdev unless all of its drives are present at pool creation. I was in the same situation. One solution is to create a fake drive that reports whatever size you need it to be but is actually a sparse file, which starts at no size and grows as you write to it. Get the two disks lined up and then create the fake HDD. You will be able to add all three to the pool successfully, and you'll see the fake drive starting to fill up; bring down the pool immediately and remove the fake mount. The pool will report as degraded. Then, when you are ready, use the replace command to put in your real third drive and wait until zfs resilvers and marks the pool as good. All this time you will be one failure from destruction, so backup backup backup.
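The "fake drive" part is just a sparse file. A minimal sketch of creating one from Python (the function name and path are made up for illustration; `truncate -s` from the shell does the same job):

```python
import os


def make_sparse_file(path, size_bytes):
    """Create a file that reports size_bytes but allocates almost no disk space."""
    with open(path, "wb") as handle:
        handle.truncate(size_bytes)
    return os.stat(path)


# Example: a stand-in that claims to be 3 TB.
#   info = make_sparse_file("/root/fake-disk.img", 3 * 1000**4)
#   print(info.st_size)          # apparent size in bytes
#   print(info.st_blocks * 512)  # space actually allocated on most Linux filesystems
```

The file then goes into the create command in place of the third disk, something like `zpool create tank raidz <disk1> <disk2> /root/fake-disk.img`, and later comes out with `zpool replace tank /root/fake-disk.img <disk3>`. The pool name and paths there are placeholders, so adjust them to your setup.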

|

|

|

|

I have to sync up some files against a file server where I do not know where the matching files might be. I have used a tool that let me do this before, but I forget whether it was some odd rsync flag or whatever. Imagine this scenario:

code:

After I have moved 004 over to a new directory for "Trip_To_Appalacians", I will delete everything from the source.
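The scenario listing is missing, so this is a guess at what is being asked: figure out which source files already exist somewhere on the server, under any name or directory, by comparing contents instead of paths. A sketch in Python under that assumption (all function names are made up for illustration):

```python
import hashlib
import os


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files do not fill RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def index_tree(root):
    """Map content digest to every path under root that has that content."""
    index = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            index.setdefault(file_digest(path), []).append(path)
    return index


def missing_from_server(source_root, server_root):
    """Source files whose content appears nowhere under server_root."""
    server_index = index_tree(server_root)
    missing = []
    for dirpath, _dirnames, filenames in os.walk(source_root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if file_digest(path) not in server_index:
                missing.append(path)
    return sorted(missing)
```

Anything `missing_from_server` returns still needs to be copied over before the source gets wiped; everything else already lives on the server somewhere, and `index_tree` tells you where.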

|

|

|

|

eames posted:Yes, I did this passthrough with Linux KVM and Plex. The encoding quality is visibly worse than software and not worth it because the processors can do much better at relatively low load. IMO Quicksync only makes sense for underpowered Celeron/Atom NAS boxes but I don’t know how those will fare when h.265 becomes standard.

I use hardware encoding for live transcoding to a lower-quality format over severely restricted bandwidth (my data plan). Since Plex incorporated it into the current version, I have been seeing fewer dropouts and fewer complaints of quality loss through the Plex mobile app.

|

|

|

|

The Milkman posted:So, I finally migrated off my rapidly decaying Corral install over the weekend. I was trying to hold off until 11.1 for the Docker support. But predictably, 11.1 is late, and probably won't even have it anyway if I'm reading the tea leaves right. Between plugins/a couple hand rolled jails I have my essential services running again.

If you haven't yet, schedule your zfs scrub to run weekly. I installed a service to connect to my Google Drive from the command line, so I can upload/process stuff on my server remotely via the drive. I also have a script to download direct 1080p from services I subscribe to via youtube-dl (not just YouTube, check out the filter list). Let me know how that Emby thing works out for you. I wanted to host it behind a reverse SSL proxy that only accepts connections that have the matching certs on the port I've created.

EVIL Gibson fucked around with this message at 01:24 on Nov 22, 2017 |

|

|

|

Photex posted:I've been using unraid for a little over a year, it just works is the easiest way to sell it to people. There is a ton you can do with it though.

Now what happens when you explain to them that they also need a proper backup system? "Don't I already have that now?"

|

|

|

|

As someone who actually started out booting my file server from a USB drive: don't. Even though I understood that I had to move all caching off the USB to some other place (in my case, a RAM drive), it was such a bitch to handle upgrades, since I always worried about the finite number of write cycles USB memory has. I know SSDs have the same issue, but on a vastly different scale, and they have SMART to warn me shortly before they are going to gently caress up. USB SMART (or the equivalent I was looking at) is very limited in what it can pull.

|

|

|

|

kloa posted:Probably a silly question, but I have a RAID1 setup with a Synology DS212j and they are formatted as ext4.

Here's a guide on how to pull the files off a Synology NAS drive in Ubuntu (or really any Linux, since they mostly have the same applications except for a few): https://forum.synology.com/enu/viewtopic.php?t=51393 RAID 1 disks are just full-on mirrors with a bit of metadata.

|

|

|

|

Twerk from Home posted:You can also just rip the disks straight to an MKV with MakeMKV, and then Plex (or any media player at all) can handle it just fine.

I encode to mp4 because some of the people who watch my stuff have Apple TV and other Apple products, and mp4 seems to be a better experience for them (live FF/Rew instead of a black screen, for one example).

|

|

|

|

Matt Zerella posted:Sure! But quicksync doesn't limit you to two streams. I am in the awkward position that my Xeon i3 came with no built in quicksync so... GPU is it.

|

|

|

|

Lowen SoDium posted:I currently have a Ubuntu file server with 3x 3TB WD REDs in RAIDZ. Disk are just over 5 years old and the array is full. Looking to replace them all. Data will be copied from old array to new array.

You have to consider that rebuilding requires lots of writes and reads across all drives. With 1 parity drive, you have to hope, come hell or high water, that no other drive goes offline or goes bad while rebuilding. This happens (seemingly) more often if your RAID is made of hard drives from the same batch. With 2 parity drives, one other drive can go bad during the heavy writes/reads and it will not stop the rebuild process.

|

|

|

|

IOwnCalculus posted:Counterpoint: ZFS does not poo poo the bed when this happens. I had this occur once during a rebuild and it marked a few files as corrupt, which let me easily restore them from backup.

Really, it's more that I'm safety conscious when I build, in case of the worst case possible, which always seems to happen lol

|

|

|

|

|


|

Falcon2001 posted:How often should I consider proactively replacing disks? My NAS runs 24x7 but doesn't see a lot of day to day use. I'm running 4x Western Digital WD40EFRX 4 TB WD Red, all purchased almost exactly 4 years ago.

To be honest, just keep a 5th drive running as a hot spare (if you can) and check either SMART or zfs itself, which tells you when a drive is running degraded (the drive picks up a lot of bad writes/reads and is constantly removing those blocks from use).

|

|

|