
Swan Oat posted: Can anyone elaborate on what makes this unworkable? I'm not trying to challenge someone who DARES to disagree with Yudkowsky -- I don't know poo poo about these topics -- I'm just curious as to what makes his understanding of AI or decision theory wrong.

Effortpost incoming, but the short version is that there are so many (so many) unsolved problems before this even makes sense. It's like arguing about what color to paint the bikeshed on the orbital habitation units above Jupiter; sure, we'll probably decide on red eventually, but christ, that just doesn't matter right now. What's worse, he's arguing for a color which doesn't exist. Far be it from me to defend RationalWiki unthinkingly, but they're halfway right here.

Okay, so, the long version, from the beginning. I'm an AI guy; I have an actual graduate degree in the subject and now I work for Google. I say this because Yudkowsky does not have a degree in the subject, and because he also does not do any productive work. There's a big belief in CS in "rough consensus and running code", and he's got neither. I also used to be a LessWronger, while I was in undergrad.

Yudkowsky is terrified (literally terrified) that we'll accidentally succeed. He unironically calls this his "AI-go-FOOM" theory. I guess the term that the AI community actually uses, "Recursive Self-Improvement", was too loaded a term (wiki). He thinks that we're accidentally going to build an AI which can improve itself, which will then be able to improve itself, which will then be able to improve itself. Here's where the current (and extensive!) research on recursive self-improvement by some of the smartest people in the world has gotten us: some compilers are able to compile their own code in a more efficient way than the bootstrapped compilers. This is very impressive, but it is not terrifying. Here is the paper which started it all!
So, since we're going to have a big fancy AI which can modify itself, what stops that AI from modifying its own goals? After all, if you give rats the ability to induce pleasure in themselves by pushing a button, they'll waste away because they'll never stop pushing the button. Why would this AI be different? This is what Yudkowsky refers to as a "Paperclip Maximizer": an AI which has bizarre goals that we don't understand (e.g. maximizing the number of paperclips in the universe). His big quote for this one is "The AI does not love you, nor does it hate you, but you are made of atoms which it could use for something else."

His answer is, in summary, "we're going to build a really smart system which makes decisions which it could never regret in any potential past timeline". He wrote a really long paper on this here, and I really need to digress to explain why this is sad. He invented a new form of decision theory with the intent of using it in an artificial general intelligence, but his theory is literally impossible to implement in silicon. He couldn't even get the mainstream academic press to print it, so he self-published it in a fake academic journal he invented. He does this a lot, actually. He doesn't really understand mainstream academic AI researchers, because they don't have the same :sperg: brain as he does, so he'll ape their methods without understanding them. Read a real AI paper, then one of Yudkowsky's. His paper comes off as a pale imitation of what a freshman philosophy student thinks a computer scientist ought to write.

So that's the decision theory side. On the Bayesian side, RationalWiki isn't quite right. We actually already have a version of Yudkowsky's "big improvement": AIs which update their beliefs based on what they experience, according to Bayes' Law. This is not unusual or odd in any way, and a lot of people agree that an AI designed that way is useful and interesting.
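For anyone who hasn't seen one, "updating beliefs according to Bayes' Law" can be this small. This is a minimal sketch, not any particular system: the two hypotheses and the coin probabilities are made up for illustration.

```python
from typing import Dict

Distribution = Dict[str, float]


def bayes_update(prior: Distribution,
                 likelihood: Dict[str, Distribution],
                 observation: str) -> Distribution:
    """Return P(hypothesis | observation) using Bayes' Law.

    prior:      P(h) for each hypothesis h
    likelihood: likelihood[h][obs] = P(obs | h)
    """
    # Numerator of Bayes' Law: P(obs | h) * P(h) for every hypothesis.
    unnormalized = {h: p * likelihood[h][observation] for h, p in prior.items()}
    # Denominator: P(obs), summed over all hypotheses.
    evidence = sum(unnormalized.values())
    return {h: w / evidence for h, w in unnormalized.items()}


# Hypothetical example: is this coin fair, or biased toward heads?
prior = {"fair": 0.5, "biased": 0.5}
likelihood = {
    "fair": {"heads": 0.5, "tails": 0.5},
    "biased": {"heads": 0.9, "tails": 0.1},
}

belief = prior
for flip in ["heads", "heads", "tails"]:
    belief = bayes_update(belief, likelihood, flip)
    print(flip, belief)
```

Each observation is one multiply-and-normalize over the hypotheses. That is cheap here because there are only two hypotheses. It stops being cheap when each "hypothesis" is a complete assignment of truth values to many facts at once.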
The problem is that it takes a lot of computational power to get an AI like that to do anything. We just don't have computers which are fast enough to do it, and the odds are not good that we ever will. Read about Bayes nets if you want to know why, but in the worst case the amount of computation you need scales exponentially with the number of facts you want the system to have an opinion about. Current processing can barely play an RTS game. Think about how many opinions you have right now, and compare the sum of your consciousness to how hard it is to keep track of where your opponents probably are in an RTS. Remember that we're trying to build a system that is almost infinitely smarter than you. Yeah. It's probably not gonna happen.

e: Forums Barber posted: seriously, this is not to be missed.

For people who hate clicking: Yudkowsky believes that a future AI will simulate a version of you in hell for eternity if you don't donate to his fake charity. The long version of this story is even better, but you should discover it for yourselves. Please click the link.

ee: Ooh, I forgot, they also all speak in this really bizarre dialect which superficially resembles English but where any given word might have weird and unexpected meanings. You have to really read a lot (a lot; back when I was in the cult I probably spent five to ten hours a week absorbing this stuff) before any given sentence can be expected to convey its full meaning to your ignorant mind, or something. So if you find yourself confused, there's probably going to be at least a few people in this thread who can explain whatever you've found.

SolTerrasa fucked around with this message at 23:50 on Apr 19, 2014
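To put a number on the Bayes-net cost point: if you store a full joint distribution over n true/false facts, you need 2^n entries. The naive way to ask "how likely is fact i?" is to walk all of them. The sketch below does exactly that. The scoring rule `toy_weight` is a made-up assumption for illustration. Real Bayes-net software exploits the network's structure (variable elimination, junction trees) to avoid full enumeration, but exact inference is still NP-hard in general, so the worst case doesn't go away.

```python
from itertools import product
from typing import Callable, Tuple

Assignment = Tuple[bool, ...]


def joint_table_size(num_facts: int) -> int:
    """Rows in a full joint distribution over `num_facts` true/false facts."""
    return 2 ** num_facts


def brute_force_marginal(num_facts: int,
                         weight: Callable[[Assignment], float],
                         query_index: int) -> float:
    """P(fact[query_index] is True), computed by enumerating every world.

    `weight` is an unnormalized score for a complete assignment of all facts.
    The loop visits all 2**num_facts assignments: that is the exponential cost.
    """
    total = 0.0
    true_mass = 0.0
    for world in product((False, True), repeat=num_facts):
        w = weight(world)
        total += w
        if world[query_index]:
            true_mass += w
    return true_mass / total


def toy_weight(world: Assignment) -> float:
    # Hypothetical scoring rule: worlds with more true facts are more likely.
    return 1.0 + sum(world)


for n in (10, 20, 30):
    print(n, "facts ->", joint_table_size(n), "joint assignments")

print(brute_force_marginal(3, toy_weight, 0))  # 0.6
```

Thirty facts is already over a billion worlds. A human has opinions about vastly more than thirty things.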


Slime posted: These are people who have a very naive view of utilitarianism. They seem to forget that not only would it be hilariously impossible to quantify suffering, but that even if you could, 50,000 people suffering at a magnitude of 1 is better than 1 person suffering at a magnitude of 40,000. Getting a speck of dust in your eye is momentarily annoying, but a minute later you'll probably forget it ever happened. Torture a man for 50 years and the damage is permanent, assuming he's still alive at the end of it. Minor amounts of suffering distributed equally among the population would be far easier to soothe and heal.

Exactly. While I was reading this stuff again, now that I've cleared the "this man is wiser than I" beliefs out of my head, I thought of that immediately. Let's choose what the people themselves would have chosen if they were offered the choice; no functional human would refuse a dust speck in their eye to spare a man they'd never met 50 years of torture. Except LessWrongers, apparently, but then I did say functional humans. Brb, gotta go write a book on my brilliant new moral decision framework.

Lottery of Babylon posted: Timeless Decision Theory, which for some reason the lamestream "academics" haven't given the respect it deserves, exists solely to apply to situations in which a hyper-intelligent AI has simulated and modeled and predicted all of your future behavior with 100% accuracy and has decided to reward you if and only if you make what seem to be bad decisions. This obviously isn't a situation that most people encounter very often, and even if it were, regular decision theory could handle it fine just by pretending that the one-time games are iterated.
It also has some major holes: if a computer demands that you hand over all your money because a hypothetical version of you in an alternate future that never happened, and now never will happen, totally would have promised you would, Yudkowsky's theory demands that you hand over all your money for nothing.

Well, let's be fair, it also works in situations where you are unknowingly exposed to mind-altering agents which change your preferences for different kinds of ice cream but don't manage to suppress your preferences for being a smug rear end in a top hat. (page 11 of http://intelligence.org/files/TDT.pdf)

GWBBQ posted: I Googled 'Yudkowsky Kurzweil' after reading these two sentences and oh boy do I have a lot of reading to do about why Mr. Less Wrong is obviously the smarter of the two. I have plenty of issues with Kurzweil's ideas on a technological singularity, but holy poo poo is Yudkowsky arrogant.

Well, when you aren't doing any real work, you can usually find time to write lots and lots of words about why you're smarter than the man you wish you could be.

And, new stuff from "things I can barely believe I used to actually believe": Does anyone want to cover cryonics? I can do it, if no one else has an interest.

SolTerrasa fucked around with this message at 00:18 on Apr 20, 2014
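Slime's numbers are worth running. Under a plain utilitarian sum they actually come out the other way: 50,000 × 1 is more total suffering than 1 × 40,000. So preferring the specks means you are weighting intense suffering more heavily than mild suffering. The squared ("convex") weighting below is an arbitrary illustrative assumption, not a framework anyone in the thread proposed.

```python
def linear_total(people: int, magnitude: float) -> float:
    """Naive utilitarian sum: every unit of suffering counts the same."""
    return people * magnitude


def convex_total(people: int, magnitude: float, exponent: float = 2.0) -> float:
    """Hypothetical alternative: intense suffering counts superlinearly."""
    return people * magnitude ** exponent


specks = (50_000, 1)    # 50,000 people at magnitude 1 (Slime's numbers)
torture = (1, 40_000)   # 1 person at magnitude 40,000

for name, total in (("linear", linear_total), ("convex", convex_total)):
    speck_cost = total(*specks)
    torture_cost = total(*torture)
    choice = "dust specks" if speck_cost < torture_cost else "torture"
    print(f"{name}: specks={speck_cost:,.0f} torture={torture_cost:,.0f} -> choose {choice}")
```

With the linear sum you get "choose torture". With the squared weighting you get "choose dust specks". The disagreement is entirely about how suffering aggregates, which is the part you can't quantify in the first place.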


Sham bam bamina! posted: The real problem here is the idea that dust is substantially equivalent to torture, that both exist on some arbitrary scale of "suffering" that allows some arbitrary amount of annoyance to "equal" some arbitrary amount of torture. They don't. You don't suffer when you get a paper cut any more than you are annoyed by the final stages of cancer, even though both scenarios involve physical pain. The reason that non-Yudkowskyites pick dust over torture is that that is the situation in which a person is not tortured.

The thing is, a Bayesian AI *has* to believe in optimizing along spectrums, more or less exclusively. That's why you'll see him complaining that you can't come up with a clear dividing line between "pain" and "torture", or "happiness" and "eudaimonia" or whatever. Like a previous poster said, his Bayes fetish really fucks with him.

SolTerrasa fucked around with this message at 04:44 on Apr 20, 2014
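The difference between "everything on one spectrum" and Sham bam bamina!'s "they aren't the same kind of thing" fits in a few lines. Compare a single scalar score with a tiered (lexicographic) comparison. The field names and weights here are illustrative assumptions, not how any real system scores outcomes.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    # Field order matters: tuples compare lexicographically, so torture is
    # compared first and dust specks only break ties.
    people_tortured: int
    dust_specks: int


def scalar_cost(o: Outcome, torture_weight: float = 1e9, speck_weight: float = 1.0) -> float:
    """Single-spectrum view: everything converts to one number."""
    return o.people_tortured * torture_weight + o.dust_specks * speck_weight


torture = Outcome(people_tortured=1, dust_specks=0)
specks = Outcome(people_tortured=0, dust_specks=10**12)

# On a single scale with positive weights, enough specks always outweigh the torture.
print(scalar_cost(specks) > scalar_cost(torture))  # True
# With separate tiers, no number of specks ever outweighs one person tortured.
print(specks < torture)                             # True
```

Whatever weights you choose in the scalar version, some finite pile of specks wins. That is the conclusion the one-spectrum view is built to produce.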


. q!=e, sorry


Richard Kulisz. I don't know what Yudkowsky did to piss him off so badly, but he is really mad on the internet. He writes so many rebuttals on his blog, http://richardkulisz.blogspot.com/. Seriously, we could spend days just quoting his posts. First, because he has the right idea (Yudkowsky is a crank) and second, because he falls victim to the same delusions of grandeur that Yudkowsky does. Reading his blog you can just see him devolving from "wow, that guy is an idiot" to "I'm smart too! Why doesn't anyone listen to me?" Here, I'll show you what I mean!

A Sane Individual posted: Eliezer believes strongly that AI are unfathomable to mere humans. And being an idiot, he is correct in the limited sense that AI are definitely unfathomable to him.

An Arguably Sane Individual posted: Honestly, I think the time to worry about AI ethics will be after someone makes an AI at the human retard level. Because the length of time between that point and "superhuman AI that can single-handedly out-think all of humanity" will still amount to a substantial number of years. At some point in those substantial number of years, someone who isn't an idiot will cotton on to the idea that building a healthy AI society is more important than building a "friendly" AI.

Hmm... Well, he's still doing that thing that Yudkowsky does where he thinks that "AI" means something, by itself. I don't want to spend too much time on this (post a thread in SAL if you want to talk about what an AI is), but "AI" is a broad term that encompasses everything from a logic inference system to Siri to the backends for Google Maps. Saying "an AI" makes you look like you're not terribly familiar with the field, or like you're talking to people who aren't capable of understanding nuance. And "the human retard level"? I'm guessing this guy hasn't read too much ... well, too much anything, really.
I mean, last week I wrote a 2048-solving AI which is much smarter than any human, let alone humans with disabilities, at the problem of solving 2048. "Artificial General Intelligence" is just a phrase someone made up; we don't really have any compelling ideas about how we're going to be able to build a system that "thinks".

An Angry Person posted: Finally, anyone who cares about AI should read Alara Rogers' stories where she describes the workings of the Q Continuum. In them, she works through the implications of the Q being disembodied entities that share thoughts. In other words, this fanfiction writer has come up with more insights into the nature of artificial intelligence off-the-cuff than Eliezer Yudkowsky, the supposed "AI researcher". Because all Eliezer could think of for AI properties is that they are "more intelligent and think faster". What a loving idiot.

Well, okay, hasn't read too much except for fanfiction?

So Much Smarter Than That Other Guy posted: There's at least one other good reason why I'm not worried about AI, friendly or otherwise, but I'm not going to go into it for fear that someone would do something about it. This evil hellhole of a world isn't ready for any kind of AI.

quote: AIs can easily survive in space where humans may not, there are also vast mineral and energy resources in space that dwarf those on Earth, it follows logically that going off-planet, away from the psychotically suicidal humans, is a prerequisite for any rational plan. The very first thing any rational AI will do, whether psychopathic or empathetic, is to say Sayonara suckers!

... oh. Um. Eesh. Do you ever get that feeling that you've accidentally stumbled into some guy's private life and should leave as quickly and quietly as possible? No? Then go wild. http://richardkulisz.blogspot.com/search/label/yudkowsky
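To make the "AI is a broad term" point concrete: a game-playing AI can be nothing more than the rules of the game plus a search over moves. The sketch below is not SolTerrasa's 2048 solver, which the post doesn't describe. It's a deliberately tiny one-move greedy player. Serious 2048 bots usually search several moves deep, for example with expectimax.

```python
from typing import List, Optional, Tuple

Board = List[List[int]]  # 0 marks an empty cell


def merge_row_left(row: List[int]) -> Tuple[List[int], int]:
    """Slide one row left, merging each pair of equal neighbours at most once."""
    tiles = [t for t in row if t != 0]
    merged: List[int] = []
    points = 0
    i = 0
    while i < len(tiles):
        if i + 1 < len(tiles) and tiles[i] == tiles[i + 1]:
            merged.append(tiles[i] * 2)
            points += tiles[i] * 2
            i += 2
        else:
            merged.append(tiles[i])
            i += 1
    merged += [0] * (len(row) - len(merged))
    return merged, points


def move(board: Board, direction: str) -> Tuple[Board, int]:
    """Apply 'left', 'right', 'up' or 'down'; return (new_board, points_gained)."""
    if direction in ("up", "down"):
        # Treat columns as rows, move them, then transpose back.
        columns = [list(col) for col in zip(*board)]
        moved, points = move(columns, "left" if direction == "up" else "right")
        return [list(row) for row in zip(*moved)], points
    new_board: Board = []
    total = 0
    for row in board:
        source = row[::-1] if direction == "right" else row
        merged, points = merge_row_left(source)
        new_board.append(merged[::-1] if direction == "right" else merged)
        total += points
    return new_board, total


def greedy_move(board: Board) -> Optional[str]:
    """One-ply greedy policy: most points now, ties broken by empty cells.

    Ignores the random tile spawned after each move; returns None if stuck.
    """
    best: Optional[str] = None
    best_key: Optional[Tuple[int, int]] = None
    for direction in ("left", "right", "up", "down"):
        new_board, points = move(board, direction)
        if new_board == board:
            continue  # illegal move: nothing slid or merged
        key = (points, sum(row.count(0) for row in new_board))
        if best_key is None or key > best_key:
            best, best_key = direction, key
    return best


board = [[2, 2, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 0, 0]]
print(greedy_move(board))  # left
```

Nothing in here "thinks". It is a narrow problem solver, which is what almost everything called AI actually is.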


Djeser posted: I totally support the impending AI war between Kuliszbot and Yudkowskynet.

Oh god, I can't look away.

Kulisz posted: Now, for someone who has something insightful to say about AIs, I point you to Elf Sternberg of The Journal Entries of Kennet Ryal Shardik fame. He's had at least four important insights I can think of.

Oh? Who is this person who you value so highly? Oh, huh, he wrote a response to your post! An AI researcher who's in touch with the blogging community, this is exciting!

Elf SomethingOrOther posted: I responded here: http://elfs.livejournal.com/1197817.html

Hm... he's got a LiveJournal? I ... I'm skeptical? Seriously, I've never heard of this person, and I've read a lot of papers.

http://en.wikipedia.org/wiki/Elf_Sternberg posted: Elf Mathieu Sternberg is the former keeper of the alt.sex FAQ. He is also the author of many erotic stories and articles on sexuality and sexual practices, and is considered one of the most notable and prolific online erotica authors.

... What the gently caress IS IT with crazy AI people on the internet? Christ, now I know why all the AI conferences have a ~10% acceptance rate.


Sunshine89 posted: That'd be a waste of effort. His arguments aren't so much less wrong as not even wrong.

Ironically, as one of the (not so unique, maybe ten or twenty thousand) people qualified (but not always able, see my excessive use of parentheses) to explain why this is true, I learned of the concept of "not even wrong" here: http://lesswrong.com/lw/2un/references_resources_for_lesswrong/

To keep this from being a post about me, instead have a post from Yudkowsky entitled "Cultish Countercultishness" that begins like this:

A Cult Leader posted: In the modern world, joining a cult is probably one of the worse things that can happen to you. The best-case scenario is that you'll end up in a group of sincere but deluded people, making an honest mistake but otherwise well-behaved, and you'll spend a lot of time and money but end up with nothing to show.

http://lesswrong.com/lw/md/cultish_countercultishness/


Krotera posted: the sort of thing that looks deep to normal people.

It's this one, but for maximum quote:

Yep, probably rapetorturemurderdeath. Good job, Yudkowsky. http://lesswrong.com/lw/k8/how_to_seem_and_be_deep/


pigletsquid posted: Has Yud ever addressed the following argument?

One thing you'll find is that LessWrongers are hugely rational if and only if you accept their premises. Your argument is incoherent under their premises; the AI doesn't need to be "omnipotent", and in fact that's just a nonsense word in that context. The other premise you're missing is that "consciousness" is not a real thing, and a bunch of bits in the memory of a computer should have the same moral status as a bunch of atoms sitting in reality, because they act indistinguishably. Also, I'm not sure you really followed Timeless Decision Theory, which is reasonable, because TDT is a stupid idea which verifiably loses in the only situation possible in reality where it diverges from standard decision theories, and is optimized for as-yet-impossible cases.

E: Ah balls, I ended up a page behind.

SolTerrasa fucked around with this message at 18:04 on Apr 23, 2014


Djeser posted: I think "AI researcher" is reaching a bit, because when I hear that, I think of someone in a lab with a robot roving around, trying to get it to learn to avoid objects, or someone at Google programming a system that's able to look at pictures and try to guess what the contents of the pictures are. You know, someone who's making an AI, not someone who writes tracts on what ethics we should be programming into sentient AIs once they arise.

You would be shocked how many people who are nominally "AI researchers" don't hate Yudkowsky for claiming to be one of them. Hell, Google donates to MIRI. People I've stood next to at conferences have had passably positive opinions of the guy! I can't believe it. It's like if you're a plumber, and some anti-fluoridation nutjob buys a yellow page ad right next to yours that says "I also am a plumber, do not trust any other plumbers because they're *in on it*", and for some reason this doesn't bother you!


AlbieQuirky posted: I was talking about this thread with my husband (who is an HCI researcher who's also published on AI topics) last night and he got all

I was raised in a creepy fundamentalist church, then later ended up reading way too much LessWrong, and yeah, that's a good analogy. (Man, I have bad luck with cults.) The obsession with "uncomfortable truths" is damned similar, if that makes sense. Both of them like to believe that they're saying things that other people just aren't brave enough to come out with publicly. For example, LessWrongers like to say things like "Bayes Law insists that knowing someone's race gives you nonnegligible information about their propensity to commit crimes" (actual quote).


Jonny Angel posted: I'd read a bit of Yudkowsky's work before this thread (his short story about the baby-killing aliens, and a bit of HPMOR before I put the thing down in a bewildered state of "People like this?"), and I'd heard about his AI roleplay challenge. Specifically, I'd heard the terms of it, and his claim that he has a 100% success rate at getting people to let him out, but not what his specific tactic would be.

Be ready to be more disappointed: he won twice, then lost five times in a row as soon as the claims that he has a 100% success rate got out, threw his manchild hands in the air, declared that those five weren't true seekers of the truth, and stopped playing. He never did update the page that claims 100% success.


Jonny Angel posted: Has he released the logs of him winning twice, or otherwise given proof that he did, e.g. the people who lost coming out and saying "Yup, I indeed took this bet and lost it"? I feel like it's definitely possible for him to have won if the people on the other end were already die-hard Less Wrong people, and it'd be interesting to see what kind of circlejerk actually resulted in him winning.

Yep! http://www.sl4.org/archive/0203/3141.html http://www.sl4.org/archive/0207/4721.html He did actually succeed twice. But he never posted the logs, and no one has ever violated the terms of his agreement and posted logs.


Sham bam bamina! posted: Uh... They accelerate at the same rate towards an infinite plane of uniform density in a vacuum, but they don't fall at the same rate on Earth in an atmosphere.

Assume a spherical cow, and all that. This is sort of emblematic of what we should expect from Aristotle's physics. They're usually wrong, but sometimes they're wrong in sort-of-helpful ways. In a sense that's kind of emblematic of what we should expect from human knowledge as a whole, I guess. I'm not qualified to talk about his metaphysics or ethics or whatever. I'm a mere AI researcher, not quite at the esteemed level of a high-school dropout who writes Harry Potter fanfic.

e: womp, or apparently his physics! I'll stick to saying words about AI.

SolTerrasa fucked around with this message at 10:08 on Apr 29, 2014


Sham bam bamina! posted: Aristotle never claimed that they did, and in fact he said that heavier objects inherently fall faster. This is why Galileo's experiments with lead weights were so important; they showed that (mostly) absent wind resistance, weight does not affect gravitational acceleration. You "refuted" a true claim that Aristotle never made with a false one that he did.

Womp. This is why I write code.
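In the spirit of "this is why I write code", Sham bam bamina!'s correction is easy to check numerically. Take two balls of the same size, one 100 times heavier, and drop both from 50 m. Model air drag as quadratic (k·v²). The drag coefficient, air density and ball sizes below are rough textbook assumptions chosen for illustration, and the integrator is a plain semi-implicit Euler loop.

```python
import math

G = 9.81          # gravitational acceleration, m/s^2
RHO_AIR = 1.225   # sea-level air density, kg/m^3
CD_SPHERE = 0.47  # textbook drag coefficient for a smooth sphere (approximation)


def fall_time(mass_kg: float, radius_m: float, height_m: float,
              in_air: bool = True, dt: float = 1e-4) -> float:
    """Time to fall height_m from rest, with quadratic air drag or in vacuum.

    Integrates dv/dt = g - (k/m) v^2 with semi-implicit Euler steps,
    where k = 0.5 * rho * Cd * A. In vacuum k = 0, so mass drops out.
    """
    area = math.pi * radius_m ** 2
    k = 0.5 * RHO_AIR * CD_SPHERE * area if in_air else 0.0
    t = v = y = 0.0
    while y < height_m:
        v += (G - (k / mass_kg) * v * v) * dt
        y += v * dt
        t += dt
    return t


# Two balls of the same size (5 cm radius), one 100x heavier, dropped 50 m.
for mass in (0.1, 10.0):
    print(f"{mass:5.1f} kg: vacuum {fall_time(mass, 0.05, 50, in_air=False):.3f} s, "
          f"air {fall_time(mass, 0.05, 50):.3f} s")
```

In the vacuum case both times match the closed form sqrt(2h/g), about 3.19 s. With air, the drag term divides by mass, so the lighter ball lands noticeably later.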


GWBBQ posted: You're also wagering that by the time you die of natural causes, cryo technology will have advanced significantly. If you start with the premises that we're approaching a technological singularity and that exponential growth will continue at the current rate, you would have to be stupid not to do it. "And that's why there are only 1400 smart people in the entire world. Welp, okay, blog post for today is done, time to kick back and take some time off."


GottaPayDaTrollToll posted: IIRC Yudkowsky believes that if you gather enough information about a person (people's recollections, newspaper clippings, YouTube videos), a sufficiently advanced AI could reconstruct an exact copy, which makes no sense from an information theory perspective and also from every other perspective.

When asked why a person's productive output provides sufficiently many bits of entropy to reconstruct the person from "person-space", he said something intentionally confusing about quantum mechanics and tried to get the question to fade into obscurity. He does not want to admit that the idea first appeared (to my knowledge) in Accelerando, an excellent piece of transhumanist fiction by Stross, published in 2005.
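The information-theory objection is basically the pigeonhole principle. Suppose a hidden thing has N bits of state and the surviving records can take at most 2^M distinct values, with M < N. Then some single record is consistent with at least 2^(N-M) different states, and no amount of cleverness can tell those states apart. Here is a toy version with 8-bit "people". It is obviously a wild simplification, and both record functions are made up for illustration.

```python
from collections import Counter
from itertools import product
from typing import Callable, Hashable, Tuple

State = Tuple[int, ...]


def max_preimages(n_bits: int, observe: Callable[[State], Hashable]) -> int:
    """Largest number of distinct hidden states that produce the same record."""
    counts = Counter(observe(s) for s in product((0, 1), repeat=n_bits))
    return max(counts.values())


def keep_first_half(state: State) -> State:
    # Hypothetical lossy record: only the first 4 of 8 bits survive (16 possible records).
    return state[:4]


def count_of_ones(state: State) -> int:
    # Hypothetical lossy record: only a summary statistic survives (9 possible records).
    return sum(state)


print(max_preimages(8, keep_first_half))  # 16
print(max_preimages(8, count_of_ones))    # 70
```

In the second case, one record is shared by 70 different "people", and picking the right one is a guess.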


Mr. Sunshine posted: It figures that they'd stumble upon the Atrocity Archives, since they seem to be nicking most of their other ideas from sci-fi.

Indeed, the whole "AIs resurrect you by looking at your output over the course of your life, for inscrutable reasons, entropy be damned" idea comes from an earlier book by the same author.


Sham bam bamina! posted: And no programmer has ever produced bad, confused code that doesn't compile. And of course there's no CS equivalent for a concept which appears valid on its surface but is surprisingly difficult to evaluate for full correctness.

E: For the non-CS people in the room: the answer is literally all code. Turing proved that there is no general procedure for deciding whether an arbitrary program even halts, and Rice's theorem later extended that to any non-trivial question about what a program does, "is it correct?" included.

SolTerrasa fucked around with this message at 18:26 on May 8, 2014
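Turing's argument is short enough to sketch. Suppose someone hands you a function that claims to decide, for any program, whether it halts. You can always build a program that asks that function about itself and then does the opposite. Here "programs" are zero-argument Python functions, which is a simplification. The candidate decider `pessimist` is a made-up strawman, but the same construction defeats every candidate, and that is the whole proof. When a candidate answers True, the contrarian loops forever, so that case is described rather than run.

```python
from typing import Callable

Program = Callable[[], None]
Decider = Callable[[Program], bool]  # claims to answer "does this program halt?"


def make_contrarian(halts: Decider) -> Program:
    """Build a program that does the opposite of whatever `halts` predicts about it."""
    def contrarian() -> None:
        if halts(contrarian):
            while True:  # predicted to halt, so never halt
                pass
        # predicted to run forever, so halt immediately
    return contrarian


def pessimist(program: Program) -> bool:
    """A (wrong) candidate decider that claims nothing ever halts."""
    return False


c = make_contrarian(pessimist)
c()  # returns right away, i.e. it halts...
print("decider said it halts:", pessimist(c))  # ...but the decider said False
```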


Mr. Sunshine posted: Has this guy actually written a single line of actual code? The only philosophy that programming makes you good at is nihilism.

Not that he's published, not that I know of, but I personally wouldn't doubt him on it. It's hard to explain, but he... Ugh, I only know how to talk about this using the terminology I learned over there. He signals his experience in a way that CS people recognize? Or... He writes like we talk, when he writes about code. I don't think that he's gotten anything of note done on his actual AI project, but I'd be really surprised if he weren't fluent and practiced in a language or two, minimum.


Hate Fibration posted: He has some coauthor credits with a mathematician crony of his that works in formal logic and theoretical computer science, I know that much.

Yeah, it wouldn't shock me at all if he "designed a language", because he used to be one of those people who thought that the problem with creating a really smart AI was that current programming languages just aren't ~expressive~ enough. I see it all the time in college first-years, and it's common enough even in real programmers that Randall Munroe makes fun of it sometimes. Of course I don't know for sure, but it strikes me as the sort of self-deception he'd be vulnerable to: self-aggrandizing and pointless. The language you end up with is almost always either nonfunctional, useless, or exactly the same as something that already exists but slower. Unsurprisingly, it turns out that compilers are *hard*.


Reflections85 posted: Being a non-compsci person, could you explain why that is incredibly wrong? Or link to a source that could explain it?

su3su2u1: Huh, you're probably right.

Reflections85: Now that he/she mentions it, sure. This is pretty rough, but it's all the effort I'd like to put into a forums post at the moment, and it'll get the idea across. (CS goons, don't jump down my throat; I promise I've taken a computing theory course, I'm just trying not to be too confusing.)

Some problems do not get harder as the size of the input gets bigger. For instance, it is not harder to remove the last element of a list of a gazillion elements than to do the same thing to a list of one element. Some problems, though, get much harder as the size of the input gets bigger: it's much harder to sort a list of a gazillion elements than it is to sort a list of one element. How fast a problem gets harder as the size of the input grows is called its "computational complexity".

We talk about these things in terms of big-O complexity, which is basically the fastest-growing term of the function that gives time-to-completion for a given input size, with constant factors thrown away. So, that first set of problems, where it takes the same amount of time no matter the size, is O(1). A problem where the time-to-complete increases linearly with input size (say, counting the number of elements in a list) is O(n).

Some of the hardest problems we've ever discovered are called "NP-hard". Nobody knows an algorithm that solves them in polynomial time, and the obvious brute-force approach takes time proportional to n!, which is super huge when n is large. The canonical example here is finding the fastest route between N cities, visiting each city at least once. It's really easy for two cities: the fastest path is from one to the other. It's only slightly harder for three. It's a little harder for four, and once you get to ten or so most people can't do it in their heads.
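Here's the brute-force idea in code, using the version of the problem described above: visit every city once, with no requirement to come back. The classic Travelling Salesman formulation also returns to the start. This is a sketch for intuition, not a practical solver. It checks every ordering, and each route gets counted twice (once per direction), but halving n! doesn't change the picture. For the round-trip version, the Held-Karp dynamic program solves it exactly in O(n^2 · 2^n), which is far better than n! but still exponential.

```python
import math
from itertools import permutations
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def path_length(order: Sequence[int], cities: List[Point]) -> float:
    """Total distance walking the cities in the given order (no return trip)."""
    return sum(math.dist(cities[a], cities[b]) for a, b in zip(order, order[1:]))


def brute_force_route(cities: List[Point]) -> Tuple[float, Optional[Tuple[int, ...]], int]:
    """Try every ordering of the cities: n! candidates, hence O(n!) work."""
    best_len, best_order, checked = math.inf, None, 0
    for order in permutations(range(len(cities))):
        checked += 1
        length = path_length(order, cities)
        if length < best_len:
            best_len, best_order = length, order
    return best_len, best_order, checked


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
print(brute_force_route(square))  # (3.0, (0, 1, 2, 3), 24)

for n in (4, 10, 20):
    print(n, "cities ->", math.factorial(n), "orderings")
```

Four cities means 24 orderings. Twenty cities means about 2.4 × 10^18, which is where "most people can't do it in their heads" turns into "nobody's computer can do it this way either".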
Yudkowsky has claimed that literally no such problems could ever be solved in nature. This is false. For very small N, N! is not that big. You could arguably say that any animal which travels in a straight line is solving the previous problem (called "Travelling Salesman") for N=2. Yudkowsky would have a witty response to this obvious counterexample: a lot of words surrounding a core of "yes, well, that's not what I meant", followed by redefining his terms so that he's right and you're wrong.

E: yeah, vvvvvvv this one vvvvvvvv is better than mine:

SolTerrasa fucked around with this message at 08:37 on May 14, 2014


Mors Rattus posted: I wouldn't be so sure it's a reasonable claim based on the fact that artificial intelligence doesn't work the way he thinks it does, at least if I understand the actual experts in the thread. (Or like how many sci fi authors think it does, for that matter.)

See, that's just the thing. He's close! He's SO close. He's so close that I was taken in for a long time before I actually started my thesis. It's made MORE confusing by the fact that his essays on human rationality are actually quite good!

"Artificial Intelligence doesn't work the way he thinks it does" is a bit too broad. More like... "artificial intelligence might someday work the way he thinks it does, but it probably won't, it might be computationally intractable, and besides that there's no reason to think it ever SHOULD work the way he thinks it already does, and besides THAT he's presented no evidence to suggest anything different from established opinion."

It's so goddamn frustrating because if he'd stop talking so much and start writing code, maybe he'd contribute something to the field. He might even be right, and he might even prove it. Probably not, but at least we'd LEARN something. In software, the only real response to "that will never work" is "look, I built it." And unless he drastically changes his plans, he'll never get there.


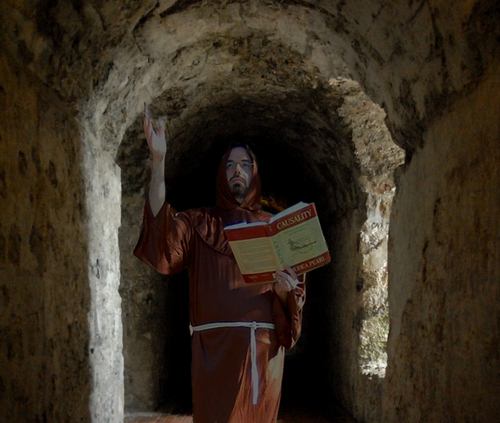

su3su2u1 posted: I would argue that you were probably taken in early in your learning process, as a student, because Yudkowsky has managed to sound (much of the time) like an actual expert. And now you want to think he was close because it makes being taken in a little easier to deal with. I had a similar experience with Eric Drexler's nanomachines.

I agree that this is a strong possibility. I certainly agree that that's why I was taken in originally. But I worry that people in this thread are getting the wrong idea about his "work", if you can call it that. I'd like to argue that the core idea that he has, the core idea of a Bayesian reasoning system, is not so unreasonable that it should be dismissed out of hand. What I'm trying to say, and what I think I said right after the bit you quoted, is that if anyone ever got it working, I'd believe in it.

Don't get me wrong, I don't idolize the guy anymore, and in fact I think he's pretty goddamn stupid about a lot of things. When I say he's close, I mean that he's close to being an actual AI researcher, not necessarily close to being "right". What makes him different from a real researcher is that real researchers test their beliefs. They build systems that they think might work, then figure out if they do or not. I don't think that a Bayesian bootstrapping AI (as he envisions it) is ever going to work; if I did I'd have tested it for my thesis instead of what I ended up doing. My specific criticism is the same as the reason I haven't reimplemented the system I work on at Google as a Bayesian network: the priors are a giant goddamn pain in the rear end, and (except in entirely theoretical systems) they end up dominating the behavior of the system, making it no better than the pants-on-head-idiotic rule-based "Expert Systems" that we thought were going to work out back in the 80s.
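Here is a toy version of the "priors dominate" complaint, using the textbook Beta-Bernoulli model. The choice of model is an assumption for illustration: the post doesn't say what the Google system uses, and a real Bayesian network has many interacting priors rather than one. Still, the effect is the same in miniature. With little data, the posterior mostly reports the prior back to you.

```python
from typing import List, Tuple


def beta_posterior_mean(prior_alpha: float, prior_beta: float,
                        successes: int, trials: int) -> float:
    """Posterior mean of a Beta(alpha, beta) prior after binomial evidence."""
    return (prior_alpha + successes) / (prior_alpha + prior_beta + trials)


# The data always shows a 30% success rate; only the amount of data changes.
evidence: List[Tuple[int, int]] = [(3, 10), (30, 100), (300, 1000)]
for successes, trials in evidence:
    weak = beta_posterior_mean(1, 1, successes, trials)      # near-uniform prior
    strong = beta_posterior_mean(90, 10, successes, trials)  # confident, wrong prior
    print(f"n={trials:4d}: data says {successes / trials:.2f}, "
          f"weak prior -> {weak:.3f}, strong prior -> {strong:.3f}")
```

With 10 observations, the confident prior still reports about 0.85 when the data says 0.3. It takes on the order of a thousand observations before the evidence wins. Someone has to pick those prior numbers by hand, which is exactly the expert-systems problem.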
I don't even really think that he's got a consistent plan for one (or he'd have built it by now), but drat it, if he'd just sit down and type some goddamn code he might notice that he's got no plan and no ideas and start having some. What I'm lamenting is the loss of someone with great enthusiasm for the work. I'm just some guy, you know? I do my best, I work as hard as I can, and at the end of the day, there's an idea in natural language generation that wouldn't have been invented yet without me. It's not that I'm brilliant; I was able to do that just because I have both enthusiasm and a work ethic. Yudkowsky has the former and not the latter, and it makes me sad sometimes.

quote: But are they really? I think almost all of the actual interesting stuff is lifted idea-for-idea directly from Kahneman's popular books. Outside of those ideas on biases, you are left with Yudkowsky's... eccentric definition of rationality.

Well, huh. His ideas on biases were actually exactly what I meant. If they aren't his ideas at all, my respect for him goes down a few more notches. I appreciate you mentioning it; I really ought to go read Kahneman, then. I certainly no longer believe that Y. was right about quantum mechanics or cryonics, but I do feel that I benefited from reading "How to Actually Change Your Mind", for instance. Legitimately, thank you. I can now say that there is nothing of value I gained from LessWrong that I couldn't have gained elsewhere.

quote: He can't. He doesn't know enough to write sophisticated code. He wants to get paid for blogging and for writing Harry Potter fanfiction. Look at his 'revealed preferences' to use the economic parlance. When given a huge research budget, instead of hiring experts to work with him and doing research, he instead blogs and writes Harry Potter fanfiction.

Here I disagree again.
I don't honestly think that programming is so hard; again, if I made a contribution to the field, anybody with a little bit of willpower and an interest can make one. I do agree that he isn't doing anything of value, but I don't think that he couldn't, if only he'd do some work someday. (Also, as kind of a meta-response: I wrote that post on my phone while I was still slightly panicked after a presentation I gave at a Google conference; it's possible that I am just stupid when I've just finished speaking.)

======================================================

That said, this has to be boring for anyone who doesn't care about AI specifically and who came here to mock the crazy. As penance for being boring, have more crazy.

Today, I am going to tell you about the Bayesian Conspiracy.

Let's start with a definition. Yudkowsky believes that rationality is effectively a "martial art of the mind" (1). Never mind that he's never actually taken any classes in any martial arts as far as anyone knows, never mind why he thinks that. Probably just the latent Japan fetish that a lot of people who grew up on the internet find themselves with. The point is that he thinks this.

This is what a member of the Bayesian Conspiracy looks like. I didn't make this one up. He posted it on his website. Also, that's Yudkowsky under that robe. Is anyone surprised he doesn't know how to hold a textbook?

It all started when he wrote a piece of bad fiction. He has always wanted to spread the idea that his particular version of rationality can be derived from scratch; believing that his position has been "proven" from incontrovertible axioms is how he avoids having to do any work. His piece of bad fiction is about someone being inducted into a fictional organization called, unsurprisingly, the Bayesian Conspiracy. He is first led down 256 stairs (ooh, yes, you're very clever.
I bet you wish you had a hexdollar from Knuth (2)), then forced to solve a math problem in his head. He is ridiculed for giving the right answer, and his persistence in the face of other people telling him he is wrong is what earns him his place in the Bayesian Conspiracy. The parallels to how Yudkowsky thinks of himself (a ridiculed genius, scorned for his correctness) are as obvious as they always are when you're reading bad fiction. You can read it at (3).

Yudkowsky thought this was great, presumably because he never got to join a fraternity in college and he's sure that the cool kids would have let him in, if only he'd gone. So he went on to redefine his group. He gave them a Japanese name which I really, really love: the "Beisutsukai". People who know Japanese can laugh now; I don't, so I had to read why they're called that. It's "beisu", a transliteration of "Bayes", plus "tsukai", "user". It means Bayes User, except he got it wrong. "Bayes" would be transliterated "beizu", not "beisu". So actually it means nothing. Not wanting to correct his mistake, he made a note on the never-used wiki (4) and left it.

Anyway, he goes on. His second short story in this vein is called "The Failures of Eld Science" (5), "Eld Science", of course, being literally anyone who currently disagrees with Yudkowsky. In it, he has his viewpoint character sitting in a rationality dojo where he is yelled at by his teacher. I won't talk too much about it, but the main point of it is that science today doesn't work, and no one comes to the right conclusions, and it's because they won't just listen to Wise Teacher Yudkowsky. He goes on, and on, and on. He's sure that Einstein worked too slowly, and if only Yudkowsky had been around to teach him, he'd have been so much faster:

http://lesswrong.com/lw/qt/class_project/ posted:"Do as well as Einstein?" Jeffreyssai said, incredulously. "Just as well as Einstein?
Albert Einstein was a great scientist of his era, but that was his era, not this one! Einstein did not comprehend the Bayesian methods; he lived before the cognitive biases were discovered; he had no scientific grasp of his own thought processes. Einstein spoke nonsense of an impersonal God—which tells you how well he understood the rhythm of reason, to discard it outside his own field! He was too caught up in the drama of rejecting his era's quantum mechanics to actually fix it. And while I grant that Einstein reasoned cleanly in the matter of General Relativity—barring that matter of the cosmological constant—he took ten years to do it. Too slow!"

Anyway. I explained all that so that I could explain this: some LessWrongers believe that they should literally (literally) take over the world. (6)

quote:I ask you, if such an organization [as the Bayesian Conspiracy] existed, right now, what would – indeed, what should – be its primary mid-term (say, 50-100 yrs.) goal?

These people are like children; they still think that if only they were the ones in charge, then they would be able to fix everything. Remember being a child and believing that? They haven't yet realized that they are not that much smarter than anyone else, and that the people currently in charge of all the "policy-making and cooperation" are doing their best, too. I wish. I really, really do wish that this could happen, just because I want to see the look on some Bayesian's face when they realize that all their arguments about prior probabilities are completely indistinguishable from the arguments about policy that politicians are already having, except with more explicit mathematics.

quote:What should the Bayesian Conspiracy do, once it comes to power? It should stop war. It should usurp murderous despots, and feed the hungry and wretched who suffered under them.

Again, they're like children. "Usurp murderous despots"? What kind of simpleminded view of politics is that?
How could anyone believe that the world comes down to "murderous despots" and "not that"? Surely whoever writes in the comments will have explained why this is such a bad idea? So that this child can learn?

quote:This needs a safety hatch. ...

Yes. Their biggest objection is not that literally taking over the world would be too hard, but that it would be too easy, and they might succeed too much, and that their ~pure logic~ will not give everyone exactly what they want. That last bit, with the ellipsis, links to another short story by Yudkowsky (does that guy do anything else? ever?) where an Evil Artificial Intelligence moves all the men to Mars and all the women to Venus for some loving reason or other. (8)

So there you have it. The Bayesian Conspiracy is a poorly spelled Japan-fetish martial arts club that doesn't teach martial arts, which will totally be smarter than Einstein and will only let stubborn people into the club. In 50 to 100 years it will take over the world and move all the men and women to different planets. Aren't you glad you know?

(1): http://lesswrong.com/lw/gn/the_martial_art_of_rationality/
(2): http://www-cs-faculty.stanford.edu/~uno/boss.html
(3): http://lesswrong.com/lw/p1/initiation_ceremony/
(4): http://wiki.lesswrong.com/wiki/Beisutsukai
(5): http://lesswrong.com/lw/q9/the_failures_of_eld_science/
(6): http://lesswrong.com/lw/74a/the_goal_of_the_bayesian_conspiracy/
(7): http://lesswrong.com/lw/74a/the_goal_of_the_bayesian_conspiracy/4ni3
(8): http://lesswrong.com/lw/xu/failed_utopia_42/

edit: okay wait, I'm sorry, I know that was a long post, but this is how good a writer Yudkowsky is. From that last short story:

quote:His mind had already labeled her as the most beautiful woman he'd ever met. ... Her face was beyond all dreams and imagination, as if a photoshop had been photoshopped. ...

good. I ... I think I'll let that last one stand on its own merits.

SolTerrasa fucked around with this message at 06:39 on May 15, 2014


Okay, I promise, I'm done having actual-AI-chat in your making-fun-of-the-crazy thread, but su3su2u1 really helped me out up there with the pointer to Kahneman.

su3su2u1 posted:Like the biases though, the ideas mostly aren't his. Yudkowsky lifted his whole Bayesian framework straight from Jaynes (Yudkowsky's contribution is probably pretending to apply it to AI?). See: http://omega.albany.edu:8008/JaynesBook.html I think he is even explicit about how much he owes Jaynes.

This one: definitely not. If Yudkowsky had been the first to propose applying Bayesian theory to AI, he would probably be as famous as he thinks he ought to be. His "contribution" is saying that it will avert a global crisis that no one else believes in. Which it won't, again, because of the problem of priors. Never mind that the problem it solves doesn't exist. Also, there's his provably sub-optimal decision theory, which reduces to utilitarianism if you look at it funny. He does credit Jaynes (or, he used to) where credit's due, though. Personally, I owe my understanding of the topic to Russell and Norvig's fantastic book, Artificial Intelligence: A Modern Approach (http://aima.cs.berkeley.edu/), and to a wonderful professor at my old school. Yudkowsky later did a sub-par reiteration of that work in an abortive Sequence.

quote:Sure, I did my phd in physics, I don't think you need brilliance, you need fortitude and a work ethic. I put the time, did the slog, and at the end of the day got some results. I'm willing to bet both of us have published more peer reviewed papers than Yudkowsky's entire institute. I actually think Yudkowsky is a pretty bright guy, he just somehow fell into a trap of doing cargo cult science. It almost looks like research, it almost smells like research...

Yeah, I think we're mostly in agreement. Anyway, thanks. If you ever come to Seattle, let me buy you a beer or something.
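To make "the problem of priors" a little more concrete, here is a toy sketch. It is not a model of any real system: it estimates a single coin's bias with a Beta prior, a standard conjugate setup chosen purely for illustration, and the prior parameters and flip counts are made-up numbers. With only ten flips, a confident prior swamps the data.

```python
from fractions import Fraction

def posterior_mean(alpha, beta, heads, flips):
    """Posterior mean of P(heads) under a Beta(alpha, beta) prior after seeing
    `heads` heads in `flips` flips. Beta is conjugate to the binomial, so the
    update just adds the observed counts to the prior's pseudo-counts."""
    return Fraction(alpha + heads, alpha + beta + flips)

# Hypothetical data: 3 heads in 10 flips.
flat = posterior_mean(1, 1, 3, 10)           # uniform prior: (1+3)/(2+10) = 1/3
confident = posterior_mean(900, 100, 3, 10)  # prior "sure" the coin is ~90% heads

print(float(flat))       # about 0.333
print(float(confident))  # about 0.894; the ten flips barely registered

# Even 2,000 flips at a 30% heads rate only drag it down to exactly one half.
print(posterior_mean(900, 100, 600, 2000))   # 1/2
```

That is roughly the complaint above in miniature: when data is scarce, whoever picked the prior has effectively picked the answer.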


Strategic Tea posted:Hell, immortality doesn't even fit with LessWrong's beep boop idea of suffering. Infinite life = infinite specks of dust in the eye = Literally Worse Than Torture.

Oh, well, of course you would think that, you deathist.


Yeah, you have to understand how hard it is to get the community to change its collective mind about its core beliefs. And I'm not sure I've seen Yudkowsky visibly change his mind about anything ever. One-liners aren't going to do anything. This is actually expected for a community of Bayes freaks; they've arbitrarily set their confidence in their beliefs quite high and so it requires a lot of


Tunicate posted:Kurzweil also is into 'clustered water' 'water alkalinization' and homeopathy. He also hired an assistant solely to track the "180 to 210 vitamin and mineral supplements a day" that he takes.

That does not surprise me. Singularity folks are pretty much the worst; they've ended up believing one crazy thing for which evidence is literally impossible, so how unlikely could it be that they'd believe two? And yet his advice is *better* than Yudkowsky's, who recommends a ketogenic diet to lose weight and live longer and yet hasn't shed a pound of his frame (which could be described as anywhere from "bulky" to "goonish") in all the time he's been recommending it. Also, you'd be hard-pressed to use the literature on the topic to support a firm belief that it'll even work. Of course, experimental science is just a special case of Bayesian math, and if you set your priors high enough and keep your sample size low you can keep believing whatever you want and


Wanamingo posted:I wont pretend to understand what any of that means, but I'm willing to bet it's an outlier. What about the second, third, or fourth largest useful numbers, are they even remotely as big?

That's not really the point. There's no real formal ordering of "important numbers". The point is that there can never be as many things as would be required for these situations to make sense: there's an upper bound on the number of things that can exist in the universe, and it's pretty low compared to the big numbers that Yudkowsky argues about.
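For a sense of scale, here is a small sketch of Knuth's up-arrow notation, which is what the "3^^^3" that comes up in this thread means (3^^^3 is 3↑↑↑3). Only the tiniest cases can actually be computed. The ~10^80 atoms figure is a commonly quoted rough estimate for the observable universe, used here as an assumption for comparison.

```python
import math

def up_arrow(a, n, b):
    """Knuth's up-arrow a ↑^n b (n arrows), evaluated exactly.
    Only usable for tiny arguments: results explode almost immediately."""
    if n == 1:
        return a ** b          # one arrow is plain exponentiation
    if b == 0:
        return 1
    return up_arrow(a, n - 1, up_arrow(a, n, b - 1))

three_tetrated_3 = up_arrow(3, 2, 3)   # 3^^3 = 3^(3^3) = 3^27
print(three_tetrated_3)                 # 7625597484987

# 3^^4 = 3^(3^^3) is already too big to build, but we can count its decimal digits.
digits_3_tetrated_4 = math.floor(three_tetrated_3 * math.log10(3)) + 1
print(f"3^^4 has about {digits_3_tetrated_4:.3e} digits")

# Rough, commonly quoted estimate: ~10^80 atoms in the observable universe (81 digits).
ATOMS_ESTIMATE_DIGITS = 81
```

3^^^3 is 3^^(3^^3): a power tower of threes 7,625,597,484,987 levels tall. The sketch gives up at a tower of four, whose digit count alone (trillions) dwarfs the 81 digits of the atom estimate.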


DrankSinatra posted:Now he posts lots of vaguely wrong poo poo about computer science that I can't really be arsed to correct because, holy gently caress, I'm too busy actually being a computer scientist to care.

Oh man, my favorite. Can you post some of it?


So, another thing about our man Big Yud. He has a lot to say about akrasia, which is a word not a lot of people use. It's the state of knowing what you *should* do and not doing it. The best-known description of it that I know of is in Romans in the New Testament: "I do not understand what I do. For what I want to do I do not do, but what I hate I do." It's "being lazy" for people who know a lot of words, basically. Most of these posts are about how he wishes he could write the next chapter of his fanfic, but he just can't because he's suffering from so much akrasia. So that's dumb, of course, but this makes it amazing.

quote:I… hope people don’t get too worked up about this, because it’s just one chapter. But I know that far too many of you have nothing else to hope for.

Anyway. It just pleases me SO MUCH to know that Yud thinks that people have literally nothing to hope for in life except for the next chapter of his Harry Potter fanfic, and he *still* can't bring himself to write it.


Namarrgon posted:Quite possibly the greatest example of using big words to sound smart where none were needed.

Oh, your ignorance must be blissful. They do this so much that they have an entire page for it. http://wiki.lesswrong.com/wiki/Jargon My personal favorite is at the top of the page: their acronym ADBOC, which stands for Agree Denotationally But Object Connotationally. Can you guess what this means? "I guess that's technically correct."

E: oh man, it's better than I thought. There are hundreds of words here explaining the idea that "people spin things in their own favor". http://lesswrong.com/lw/4h/when_truth_isnt_enough/ Man, I'm glad you posted, it's been a while since I went looking for word vomit.

SolTerrasa fucked around with this message at 08:13 on Jun 3, 2014


This is the best thing ever.


Mors Rattus posted:Of course he hates peer review. It helps that he's had his papers rejected from every recognized journal and conference that he's ever submitted to. "Am I so out of touch? No. It's the scientific method which is wrong."


potatocubed posted:Bear in mind that I've seen papers for science and mathematics journals which have obviously been written in Chinese and run through Google translate with no further editing - but they were less than 20 pages long, the point was clear, and they were interesting enough to get published. Yudkowsky can manage none of that, and English is his first language.

That's not his whole problem, though. The thing is, AI reviewers specifically have a finely-tuned quack sense. There are a lot of Big Yuds out there, submitting their brilliant crayon-scribble masterpieces for peer review. There is a lot of sifting to do, because there is a lot of garbage out there. To get an AI paper published, it really helps to have *built* something, and even Big Yud won't lie about having implemented his latest crazy nonsense idea for a system. He's just the ideas guy.


ArchangeI posted:I find it amazing that he openly admits to not having read the books. HPMOR isn't fanfiction, it's just copyright infringement.

They actually do this often enough that there's a word for it. Well, a phrase, but they use it like a single lexical token; they call it "generalizing from fictional evidence", and here's why they think it's legit:

One of the things that really sucks about Bayes' Theorem is that you can (justifiably) make it arbitrarily hard to convince you of something just by being sufficiently sure that you were right the whole time. In order to use Bayes' Theorem for anything, you need a prior, P(X), which is how likely you think X is before you've seen any evidence; it essentially represents how easy it is to convince you otherwise. Most people who have no information about something will choose what's called a "non-informative" prior, which in the simplest case gives every possible outcome the same probability, 1 / (number of possible outcomes). So the non-informative prior for heads on a coin flip is 0.5, the non-informative prior for any one outcome of a d20 roll is 0.05, etc. (it'd be dumb to have priors for those but I just woke up)

I had never heard of a non-informative prior until I left LessWrong and got a real AI education, because they never use them. They prefer to go based on their instincts. Big Yud has a number of posts about refining instincts because they're essential to being a good Bayesian. So according to the God of Math it's fine to use fiction as the source of your gut instinct, but the thing is that they never assign reasonable confidence values to anything. They plug stupid numbers like 1/3^^^3 into Bayes' Theorem instead. As a Bayesian, you can say "this coin flip will be heads with probability 1 - 1/3^^^3", so that you aren't really obligated to be convinced when the flip turns up tails, because the probability that your eyes are deceiving you, while small, is still vastly greater than 1/3^^^3, so, well, it must have been heads, that's just the way the math works out.
Combining these two things, evidence from fiction and absurdly high numbers, the followers of big yud have invented a mathematically sound way to live in a fantasy land.
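Here is a minimal sketch of that coin-flip argument, using exact fractions so nothing underflows. Assumptions to flag loudly: the 1-in-1000 chance of misreading the coin is a made-up number, each sighting is assumed to be an independent error, and 1/10^100 stands in for 1/3^^^3, which is far too large to write down.

```python
from fractions import Fraction

def posterior_heads(prior_heads, eye_error, tails_sightings=1):
    """P(coin is heads | we saw 'tails' on every one of `tails_sightings` looks),
    assuming each look independently misreads the coin with prob. `eye_error`."""
    like_if_heads = eye_error ** tails_sightings        # every look was wrong
    like_if_tails = (1 - eye_error) ** tails_sightings  # every look was right
    num = like_if_heads * prior_heads
    return num / (num + like_if_tails * (1 - prior_heads))

EYE_ERROR = Fraction(1, 1000)       # hypothetical misreading rate
FLAT = Fraction(1, 2)               # non-informative prior for heads
EXTREME = 1 - Fraction(1, 10**100)  # stand-in for 1 - 1/3^^^3

print(float(posterior_heads(FLAT, EYE_ERROR)))     # 0.001
print(float(posterior_heads(EXTREME, EYE_ERROR)))  # 1.0 (rounded; exact value is just under 1)

# How many independent tails sightings before heads drops below one half?
n = 1
while posterior_heads(EXTREME, EYE_ERROR, n) >= Fraction(1, 2):
    n += 1
print(n)  # 34
```

With the flat prior, one look at tails drops P(heads) to 0.001. With the extreme stand-in prior it barely moves, and it takes 34 independent sightings to flip it; with the real 1/3^^^3, no number of sightings anyone could physically make would do it.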


Mr. Horrible posted:Has this been posted yet? One of the top executives at Givewell audited MIRI (back when it was called the Singularity Institute) to determine whether it was a legitimate organization worthy of donations and support. The results are about what you'd expect:

You know what's amazing? This post was written in 2012, two solid years ago. The two best points that it makes are (1) that SI has not convinced any experts of its value, and (2) that SI does not appear to have produced anything. Both responses say "well, yeah, those are true, but we're working on this 'open problems in friendly AI' sequence, and that will answer all the problems".

It is 2014. LessWrong does not contain an "open problems in friendly AI" sequence yet, but I'm sure it's coming any day now. Right after Big Yud finishes Methods of Rationality, surely. The best part of the whole thing is that it wouldn't even be hard to write! Nobody doubts that they are trying to solve a problem which is hard! Everyone doubts some other part of their argument/house-of-cards, like that they have a handle on a solution, or that they are at all the right people to solve it, or that it needs solving right now, or that more money will help them.


Double posting, sorry, but I found the paper where MIRI/SI explains why they think that AI is the problem that needs to be solved first, and the more I read the more I think that "argument/house-of-cards" like I said earlier is accurate. Here is the fundamental basis of their argument in "Intelligence Explosion: Evidence and Import". Note that this is one of their few public papers which has been published. It was published in a non-peer-reviewed one-time, special-topic philosophy "journal".

Anyway. One thing I've been noticing is the incredible frequency with which they say "sorry, we can't explain this right now, it's just too complex, but we refer you to X, Y, and Z". I recommend that anyone with a background in AI actually follow those references. I have been doing so for the past few hours and I've found that they almost always refer (sometimes after a non-obvious or unreasonably-long chain of references; X points to Y to prove a point, but Y only makes that point by citing Z, etc.) to a self-published paper by MIRI or SI or whatever it happens to be called that year, or to a blog post somewhere (amazingly, blog posts are cited as if they're real work; I mean, they literally typed "[Yudkowsky 2011]" to cite LessWrong or whatever). Or, my personal favorite, they refer to a forthcoming paper.

This is what I mean by a house-of-cards argument: it's all built on assumptions which are straight-up nonsense, but so MANY of them that it appears to hold together. This particular paper, "IE:EI", starts off with all of those. They "prove" that strong AI is possible by citing a speculative fiction work by Vernor Vinge (a novelist), a blog post by Big Yud, two self-published papers by MIRI, and a forthcoming no-really any-day-now paper by another MIRI dude. Then, having proved it possible, they set off to prove that it is inevitable, and coming soon. They waste three pages explaining that "predictions are hard", and they throw in a joke about weather forecasters???
Anyway, they explain that there are a lot of things that are going to promote the creation of AI, like (I'm quoting here) "more hardware" and "better algorithms". They conclude this point with (again, quoting) "it seems misguided to be 90% confident that we will have strong AI in the next hundred years. But it also seems misguided to be 90% confident that we will not." Masterstroke, guys. Color me convinced. The interesting part of the article ends here; there are 10 more pages, but they're all exactly what you'd expect. They take the previous section (with its citations of novelists and blog posts and nonexistent papers) as proven, then move on to a new argument which uses the same bullshit base, plus their "argument" from the previous chapter, to stack a new card on top of the obviously-stable-why-are-you-asking structure.


The Erland posted:Do you have a link to the paper?

Let me see if I can remember where I found it. Yeah, here it is: Intelligence.org/files/IE-EI.pdf

Agh, rereading this nonsense really hurts me. One of their arguments that AI explosions will probably happen in the next century is that we've made so much progress since the first Dartmouth conference on AI. For non-AI people, maybe this makes sense, but everything we have done since then has been so much harder than we thought it would be. At the Dartmouth conference, we thought machine vision, the problem of "given some pixels, identify the entities in them", would be solved in a few months. It's been 50 years and we're only okay at it. If there is ANYTHING we should have learned from Dartmouth, it's that everything in AI is always harder than it seems like it should be. Why you'd use that as an example baffles me. gently caress, there's just so much WRONG with this that I have to force myself to stop typing and get back to work.


Cardiovorax posted:We are so not even okay at it. I did experimental work on designing a software that can take a random image and just distinguish the image foreground and background. We failed so loving hard.

Not my subfield, but I was being optimistic based on my computer vision class back in school, and my robotics experiments. I got a robot to successfully determine where an object was from image alignment? It had to move, though, and to use multiple pictures of the same thing combined with SLAM (for position determination) from its other sensors, but it did sort of work. Navigated a maze by vision, and all that good stuff. Actually, I work on Google Maps nowadays, and we use computer vision to great effect. It's one of the reasons we drive those goofy cars everywhere; we can figure out all sorts of stuff from all those pictures.

But yeah, now that I think about it, the difference between those things and what you're talking about is specific vs. general CV. For computer vision to work terribly well, you do need to make a lot of assumptions. Application-specific CV is currently coming up on adequate, but in the general case I'm not aware of any obvious progress. Of course, I wouldn't know for sure, since no one can read every paper, it's not my subfield, and it's only tangentially related to my actual job.
